On Tensors, Tensorflow, And Nvidia's Latest 'Tensor Cores'

Nvidia announced the Tesla V100, a brand new accelerator based on the company’s latest Volta GPU architecture. The chip’s breakout feature is what Nvidia calls a “Tensor Core.” According to Nvidia, Tensor Cores can make the Tesla V100 up to 12x faster for deep learning applications than the company’s previous Tesla P100 accelerator. (See our coverage of the GV100 and Tesla V100 here.)

Tensors And Tensorflow

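A tensor is a mathematical object represented by an array of components that are functions of the coordinates of a space. In machine learning, the term usually refers to a multi-dimensional array of numbers: a scalar, a vector, and a matrix are tensors of rank 0, 1, and 2, respectively. Google created its own machine learning framework that uses tensors because tensors allow for highly scalable neural networks.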
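To make the idea concrete, here is a minimal sketch in plain Python that treats tensors as nested lists, which is roughly how machine learning frameworks expose them (the strict mathematical definition is narrower). The `shape` helper is hypothetical and written only for this illustration; it assumes non-empty, rectangular nesting.

```python
def shape(t):
    """Return the shape of a non-empty, rectangular nested list as a tuple."""
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]  # descend one level; assumes every sub-list has the same length
    return tuple(dims)

scalar = 3.0                        # rank 0: a single number
vector = [1.0, 2.0, 3.0]            # rank 1: e.g. a list of features
matrix = [[1.0, 2.0], [3.0, 4.0]]   # rank 2: e.g. a layer's weights
# rank 3: e.g. a 2x2 image with three color channels per pixel
image = [[[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
         [[0.7, 0.8, 0.9], [1.0, 0.0, 0.5]]]

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("image", image)]:
    s = shape(t)
    print(name, "rank:", len(s), "shape:", s)
```

The rank is simply the number of indices needed to reach a single number, which is why the same machinery can describe anything from a bias term to a batch of images.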

Google surprised industry analysts when it open sourced its Tensorflow machine learning software library, but this may have been a stroke of genius because Tensorflow quickly became one of the most popular machine learning frameworks used by developers. Google was also using Tensorflow internally, and it benefits Google if more developers know how to use Tensorflow because it increases the potential talent pool for the company to recruit from. Meanwhile, chip companies seem to be optimizing their products, either for Tensorflow directly, or for tensor calculations (as Nvidia is doing with the V100). In other words, chip companies are battling each other to improve Google’s open sourced machine learning framework - a situation that can only benefit Google.

Finally, Google also built its own specialized Tensor Processing Unit, and if the company decides to offer cloud services powered by the TPU, there will be a wide market of developers that could stand to benefit from it (and purchase access to it).

Nvidia Tensor Cores

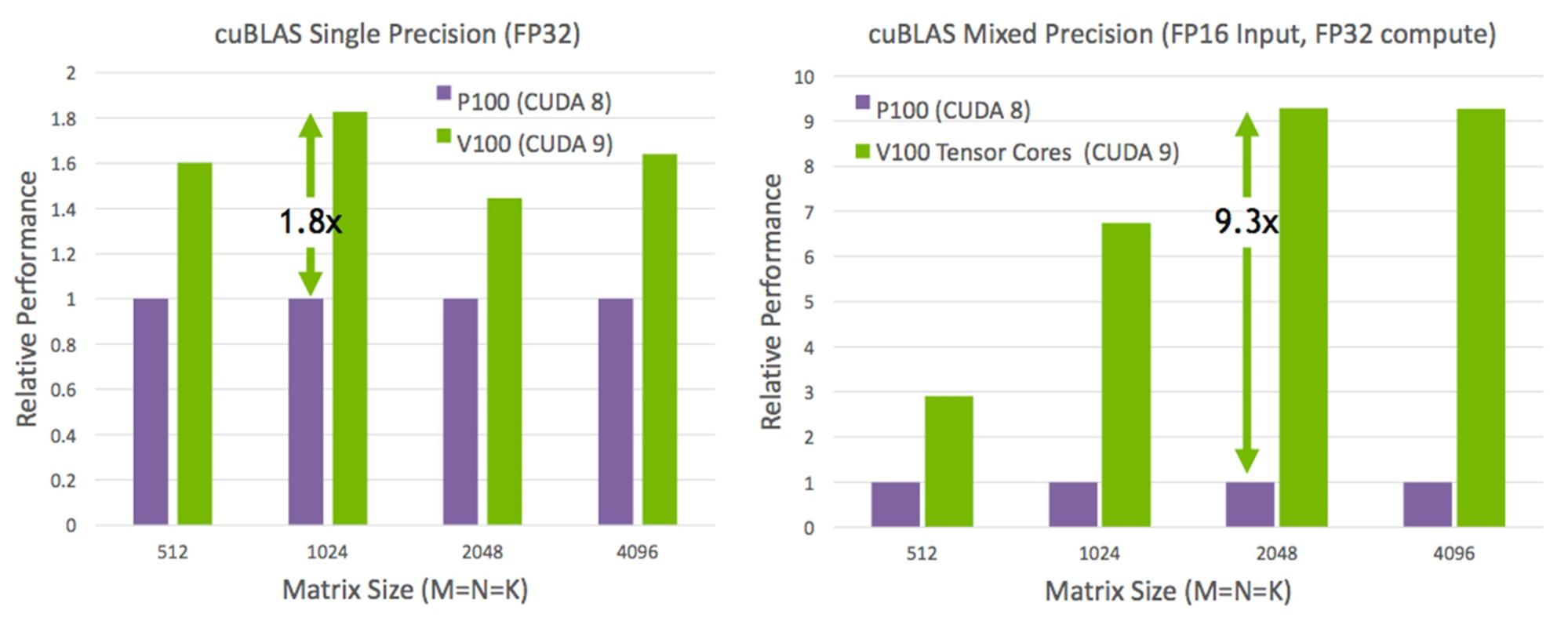

The Tensor Cores in the Volta-based Tesla V100 are essentially mixed-precision FP16/FP32 cores, which Nvidia has optimized for deep learning applications.

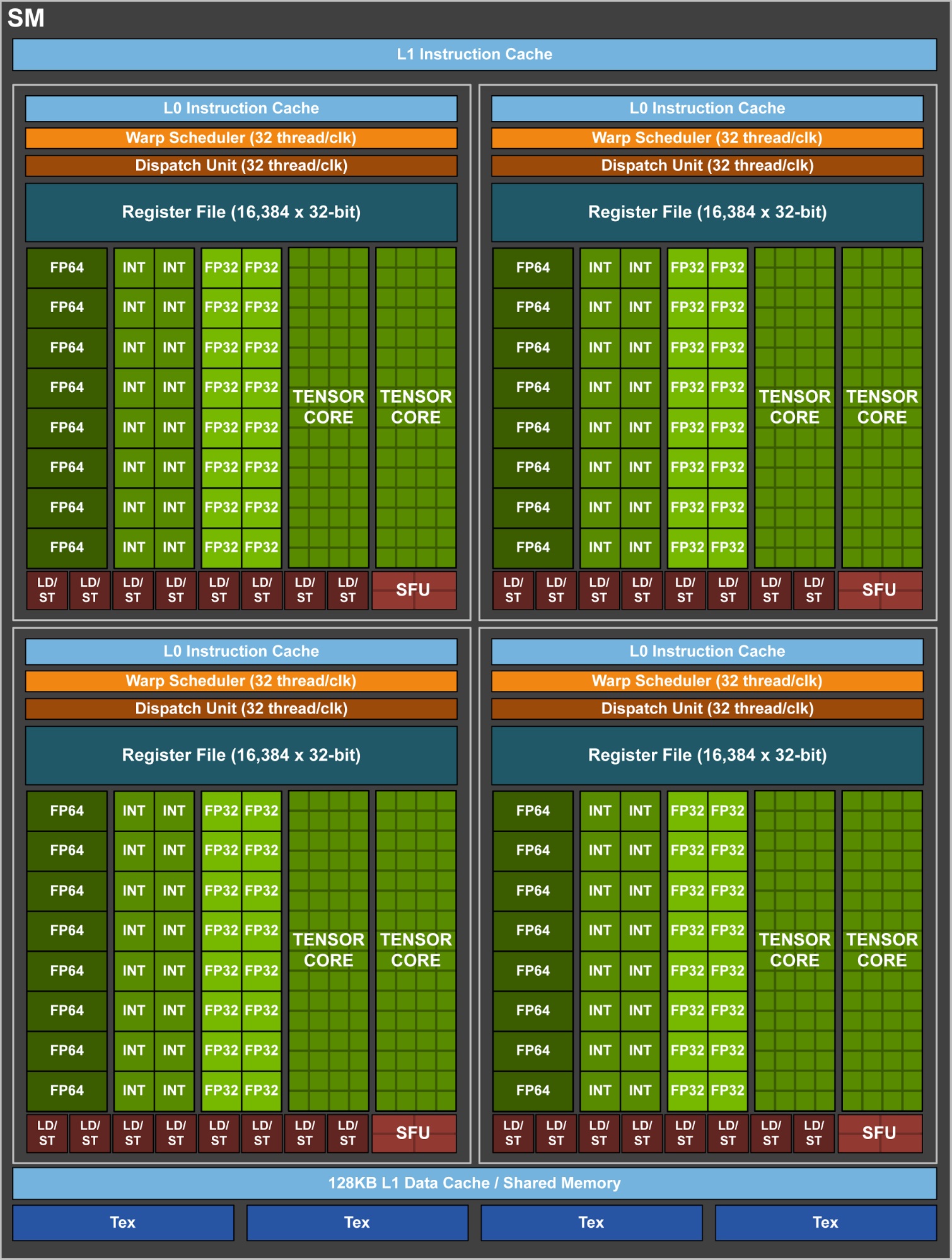

The new mixed-precision cores can deliver up to 120 Tensor TFLOPS for both training and inference applications. According to Nvidia, V100’s Tensor Cores can provide 12x the performance of FP32 operations on the previous P100 accelerator, as well as 6x the performance of P100’s FP16 operations. The Tesla V100 comes with 640 Tensor Cores (eight for each SM).
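As a quick sanity check, the figures quoted above imply a few numbers that Nvidia's statement leaves unstated. The sketch below only divides the article's own figures; the variable names are ours, and because Nvidia's ratios are "up to" claims, the implied P100 values should be read as rough baselines rather than official specifications.

```python
# Figures quoted in the article
v100_tensor_tflops = 120   # peak "Tensor TFLOPS"
tensor_cores = 640
tensor_cores_per_sm = 8
speedup_vs_p100_fp32 = 12
speedup_vs_p100_fp16 = 6

# Values implied by those figures
sm_count = tensor_cores // tensor_cores_per_sm
implied_p100_fp32_tflops = v100_tensor_tflops / speedup_vs_p100_fp32
implied_p100_fp16_tflops = v100_tensor_tflops / speedup_vs_p100_fp16

print("SMs with Tensor Cores:", sm_count)
print("Implied P100 FP32 TFLOPS:", implied_p100_fp32_tflops)
print("Implied P100 FP16 TFLOPS:", implied_p100_fp16_tflops)
```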

In the image below, Nvidia shows that for matrix-matrix multiplication, an operation commonly used in the training of neural networks, the V100 can be more than 9x faster than the Pascal-based P100 GPU.

The company said that this result is due to the custom crafting of the Tensor Cores and their data paths to maximize their floating point performance with a minimal increase in power consumption.

Each Tensor Core performs 64 mixed-precision floating point FMA operations per clock (FP16 multiply and FP32 accumulate). Because each FMA counts as two floating point operations (a multiply and an add), a group of eight Tensor Cores in an SM performs a total of 1024 floating point operations per clock (8 x 64 x 2).
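The "FP16 multiply and FP32 accumulate" pattern can be modeled in software. The following is a rough sketch using only Python's standard library, not a description of the hardware's internals. It assumes 4x4 matrices, a shape chosen here because 4 x 4 x 4 = 64 matches the per-clock FMA count above; the article itself does not state the matrix size. The helper names `to_fp16`, `to_fp32`, and `tensor_core_fma` are hypothetical.

```python
import struct

def to_fp16(x):
    """Round a float to the nearest IEEE half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x):
    """Round a float to the nearest IEEE single-precision (FP32) value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def tensor_core_fma(a, b, c):
    """Model D = A x B + C with FP16 inputs and an FP32 accumulator.

    Returns the result matrix and the number of FMA operations performed.
    """
    n = len(a)
    fma_count = 0
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])              # accumulator held in FP32
            for k in range(n):
                # inputs are rounded to FP16 before they are multiplied
                product = to_fp16(a[i][k]) * to_fp16(b[k][j])
                acc = to_fp32(acc + product)    # running sum rounded to FP32
                fma_count += 1
            d[i][j] = acc
    return d, fma_count

# Assumed 4x4 operands (illustrative values only)
a = [[0.1 * (i + 1) for _ in range(4)] for i in range(4)]
b = [[0.5 * (j + 1) for j in range(4)] for _ in range(4)]
c = [[1.0] * 4 for _ in range(4)]

d, fma_count = tensor_core_fma(a, b, c)
print("FMAs for one 4x4 multiply-accumulate:", fma_count)
print("First row of D:", d[0])
```

Keeping the running sum in FP32 is the point of the mixed-precision design: the inputs stay small and cheap to move, while the accumulated result keeps more precision than an FP16 sum would.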

According to the GPU maker, this is an 8x increase in throughput per SM in Volta, compared to the Pascal architecture. In total, with Volta’s other performance improvements, the V100 GPU can be up to 12x faster for deep learning compared to the P100 GPU.
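The per-clock figures can be tied back to the headline TFLOPS number with some simple arithmetic. Note that the clock speed below is an assumption on our part (a boost clock of about 1.455 GHz); it does not appear in this article, so treat the final figure as an estimate.

```python
# Figures quoted in the article
fma_per_tensor_core_per_clock = 64
flops_per_fma = 2                  # one multiply plus one add
tensor_cores_per_sm = 8
tensor_cores = 640
per_sm_speedup_vs_pascal = 8

# ASSUMPTION: boost clock in Hz, not stated in the article
assumed_boost_clock_hz = 1.455e9

flops_per_sm_per_clock = (fma_per_tensor_core_per_clock
                          * flops_per_fma * tensor_cores_per_sm)
sm_count = tensor_cores // tensor_cores_per_sm
peak_tflops = flops_per_sm_per_clock * sm_count * assumed_boost_clock_hz / 1e12

# Pascal per-SM throughput implied by the quoted 8x ratio
implied_pascal_flops_per_sm_per_clock = (flops_per_sm_per_clock
                                         / per_sm_speedup_vs_pascal)

print("FLOPs per SM per clock:", flops_per_sm_per_clock)
print("Estimated peak Tensor TFLOPS:", round(peak_tflops, 1))
print("Implied Pascal FLOPs per SM per clock:",
      implied_pascal_flops_per_sm_per_clock)
```

Under that assumed clock, the estimate lands just under the "up to 120 Tensor TFLOPS" that Nvidia quotes, which suggests the headline number is a rounded theoretical peak.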

Developers will be able to program the Tensor Cores directly or make use of V100’s support for popular machine learning frameworks such as Tensorflow, Caffe2, MXNet, and others.

Rise Of The Specialized Machine Learning Chip

In a recent paper, Google revealed that its TPU can be up to 30x faster than a GPU for inference (the TPU can’t train neural networks). As the main provider of chips for machine learning applications, Nvidia took issue with that, arguing that some of its existing inference chips were already highly competitive with the TPU.

Nvidia’s case wasn’t that strong, though, considering that it was comparing its chips only for sub-10ms latency (a less important metric for Google in the data center). Nvidia also ignored the power consumption and cost metrics when it made the comparisons.

Nevertheless, Google's TPU was probably not even the biggest threat to Nvidia’s machine learning business, considering that, for now at least, Google doesn’t intend to sell it, although the company may increasingly use TPUs internally.

A bigger threat to Nvidia may be other companies that develop specialized machine learning chips and sell them to other customers, with better performance/watt and cost metrics than a typical GPU can offer. Even more worrisome for Nvidia could be the fact that Intel has been snapping up the more interesting ones.

Therefore, until now, it looked as if Nvidia could start to fall behind in this race due to the company’s focus on GPUs. However, with the announcement of the “Tensor Cores,” Nvidia may have made it more difficult for others to beat it in this market (at least for now).

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

dstarr3

Why do I feel like one day, there's going to be a discrete AI card for gaming, like how there used to be discrete PhysX cards. And then eventually they'll vanish because only three games ever utilize them properly, and the tech will be watered down and slowly absorbed into typical GPUs because it's not altogether useless tech.

bit_user

I think it's not accurate to call these "tensor cores". I think they're merely specialized execution units accessed via corresponding SM instructions.

bit_user

According to https://devblogs.nvidia.com/parallelforall/cuda-9-features-revealed/:

"During program execution, multiple Tensor Cores are used concurrently by a full warp of execution."

This sounds like tensor "cores" aren't really cores, but rather async compute units driven by SM threads. As I thought, more or less.

If these tensor units trickle down into smaller Volta GPUs, I can foresee a wave of GPU-accelerated apps adding support for them. Not sure Nvidia will do it, though. They didn't give us double-rate fp16 in any of the smaller Pascal GPUs, and this is pretty much an evolution of that capability.

bit_user

19678246 said: "Why do I feel like one day, there's going to be a discrete AI card for gaming, like how there used to be discrete PhysX cards."

Why would this functionality even migrate out of GPUs into dedicated (consumer-oriented) cards, in the first place? Remember that PhysX started out as dedicated hardware that got absorbed into GPUs, as GPUs became more general.

If I'm a game developer, why would I even use some dedicated deep learning hardware that nobody has, when I could just do the same thing on the GPU? Maybe it increases the GPU specs required by a game, but gamers would probably rather spend another $100 on their GPU than to buy a $100 AI card that's supported by only 3 games.

dstarr3

19679543 said: "Why would this functionality even migrate out of GPUs into dedicated (consumer-oriented) cards, in the first place? Remember that PhysX started out as dedicated hardware that got absorbed into GPUs, as GPUs became more general.

"If I'm a game developer, why would I even use some dedicated deep learning hardware that nobody has, when I could just do the same thing on the GPU? Maybe it increases the GPU specs required by a game, but gamers would probably rather spend another $100 on their GPU than to buy a $100 AI card that's supported by only 3 games."

*shrug* People bought the stuff before. Not a lot, obviously. But some. Just a stray thought, anyway.

nharon

If precision is such a non-factor, why not just use analog calculation cores? It would take up a LOT less space. Power draw? something to think about...

bit_user

19723300 said: "If precision is such a non-factor, why not just use analog calculation cores? It would take up a LOT less space. Power draw? something to think about..."

Since I don't actually know what's the deal-breaker for analog (though I could speculate), I'll just point out that it's been tried before:

http://www.nytimes.com/1993/02/13/business/from-intel-the-thinking-machine-s-chip.html

I assume that if this approach continued to make sense, people would be pursuing it.