Nvidia Pits Tesla P40 Inference GPU Against Google’s TPU

Google recently published a paper about the performance of its Tensor Processing Unit (TPU) and how it compared to Nvidia’s Kepler-based K80 GPU working in conjunction with Intel’s Haswell CPU. The TPU's deep learning results were impressive compared to the GPUs and CPUs, but Nvidia said it can top Google's TPU with some of its latest inference chips, such as the Tesla P40.

Tesla P40 Vs Google TPU

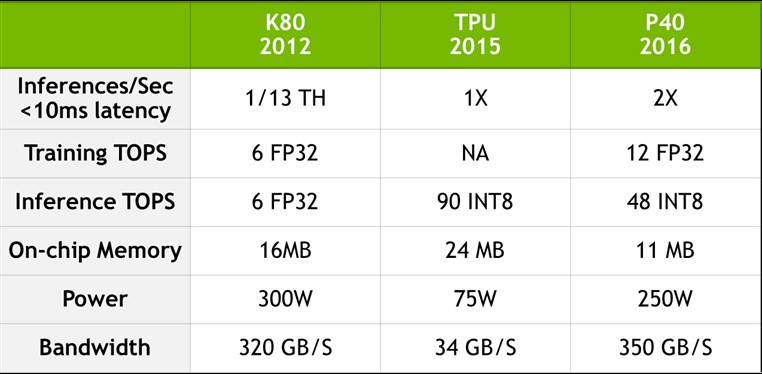

Google’s TPU went online in 2015, which is why the company compared its performance against other chips that it was using at that time in its data centers, such as the Nvidia Tesla K80 GPU and the Intel Haswell CPU.

Google is only now releasing the results, possibly because it doesn’t want other machine learning competitors (think Microsoft, rather than Nvidia or Intel) to learn about the secrets that make its AI so advanced, at least until it’s too late to matter. Releasing the TPU results now could very well mean Google is already testing or even deploying its next-generation TPU.

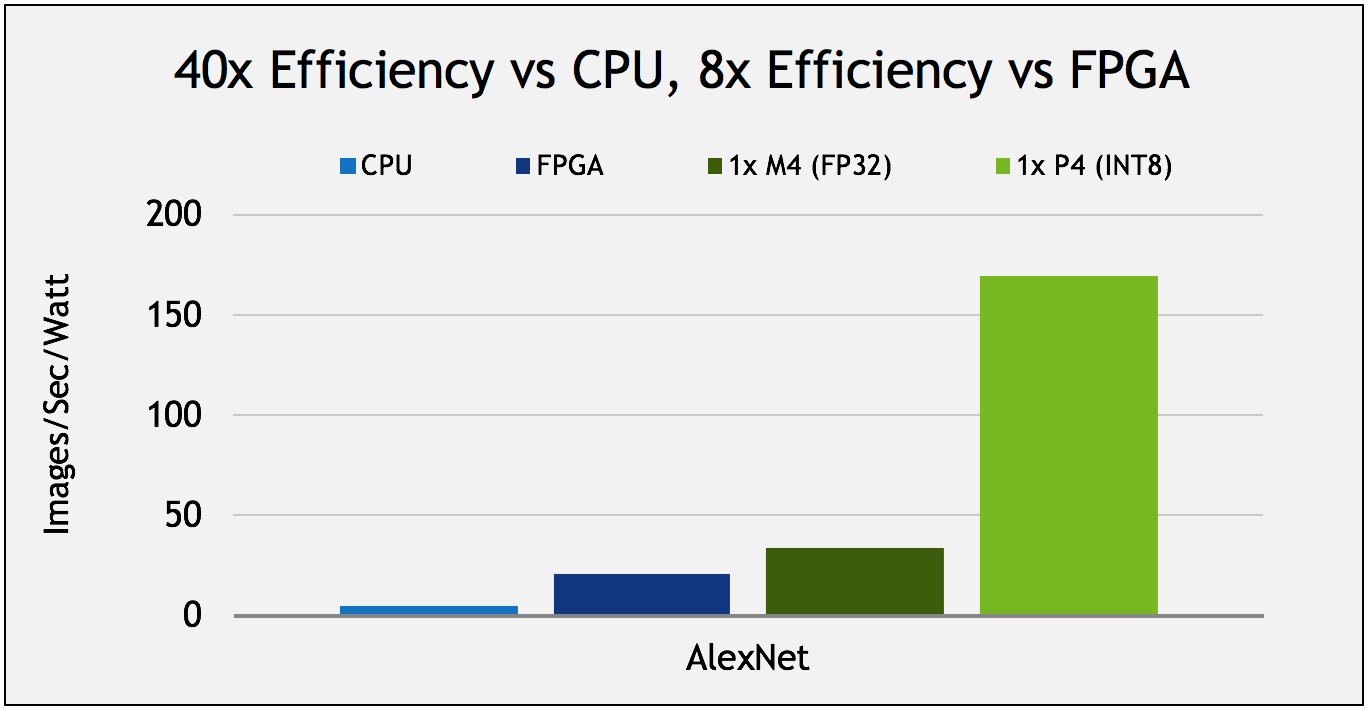

Nevertheless, Nvidia took the opportunity to show that its latest inference GPUs, such as the Tesla P40, have evolved significantly since then, too. Some of the increase in inference performance seen by Nvidia GPUs is due to the company jumping from the previous 28nm process node to the 16nm FinFET node. This jump gave its chips about twice as much performance per watt.

Nvidia also improved its GPU architecture for deep learning in Maxwell, and then again in Pascal. Another reason the new GPU is so much faster at inference is that Nvidia’s deep learning and inference-optimized software has improved significantly as well.

Finally, perhaps the main reason the Tesla P40 can be up to 26x faster than the old Tesla K80, according to Nvidia, is that the Tesla P40 supports INT8 computation, whereas the K80 supports only FP32. Inference doesn’t require high numerical precision, and 8-bit integers seem to be enough for most types of neural networks.
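To illustrate why 8-bit integers can be enough, here is a minimal sketch of symmetric INT8 quantization applied to a single dot product, the basic operation inside a neural network layer. It is not Nvidia's or Google's implementation; the function names and the sample weights and activations are made up for illustration, and real inference stacks use more careful calibration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(a, b):
    """Approximate a floating-point dot product using integer multiply-accumulate."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    accumulator = sum(x * y for x, y in zip(qa, qb))  # pure integer arithmetic
    return accumulator * scale_a * scale_b  # rescale once at the end


# Hypothetical sample data, chosen only for illustration.
weights = [0.12, -0.53, 0.98, -0.07]
activations = [1.5, 0.25, -0.75, 2.0]

exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)
print(f"FP result: {exact:.4f}, INT8 result: {approx:.4f}")
```

The two results differ only slightly, which is the kind of error most trained networks appear to tolerate at inference time.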

According to Nvidia, all of these improvements allow the P40 to be highly competitive with an application-specific integrated circuit (ASIC) such as Google’s TPU. In the chart Nvidia provided, the Tesla P40 even seems to be twice as fast as Google’s TPU for inferencing.


Nvidia said that the P40 also has ten times as much bandwidth, as well as 12 teraflops of 32-bit floating-point performance, which would be more useful for training neural networks. Google's TPU is only an inference chip, so inference performance is all it has to offer.

Is It A Fair Comparison?

Comparing an ASIC and a GPU is a little like comparing apples to oranges. However, what matters most to a company like Google is the cost-performance of a given chip, no matter the architecture.

Google is a big enough company that it doesn’t need its chips to do multiple things at once. It can afford to pick GPUs for training neural networks and TPUs for inferencing. If the TPUs have better cost-performance than GPUs, then the company would likely choose them (as it already has).

We don’t know how much a TPU costs Google, but we do know that a P40 can cost over $5,000. The Tesla P40 may have double the inference performance, but if it costs at least twice as much, then Google may still stick with the TPUs.

Another aspect is how much the chips cost to run. The Tesla P40 has a 250W TDP, more than three times the TPU’s 75W. Even if the upfront cost for both the TPU and the Tesla P40 is similar, Google would probably still choose the TPU because of the significantly lower running costs.
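The power argument can be worked through with the figures quoted above. The sketch below takes Nvidia's "twice as fast" claim at face value and assumes both chips run at their rated TDP, which is a simplification since real power draw varies with workload. The variable and function names are illustrative.

```python
# Figures quoted in the article. Relative throughput is Nvidia's claim, not a measurement.
TPU_TDP_WATTS = 75
P40_TDP_WATTS = 250
TPU_RELATIVE_THROUGHPUT = 1.0  # baseline
P40_RELATIVE_THROUGHPUT = 2.0  # "twice as fast", per Nvidia's chart


def throughput_per_watt(relative_throughput, tdp_watts):
    """Relative inference throughput delivered per watt of rated TDP."""
    return relative_throughput / tdp_watts


tpu_efficiency = throughput_per_watt(TPU_RELATIVE_THROUGHPUT, TPU_TDP_WATTS)
p40_efficiency = throughput_per_watt(P40_RELATIVE_THROUGHPUT, P40_TDP_WATTS)

tpu_advantage = tpu_efficiency / p40_efficiency
print(f"TPU throughput per watt relative to P40: {tpu_advantage:.2f}x")
```

Under these assumptions the TPU would still deliver roughly 1.67 times as much inference work per watt, even while conceding the P40 twice the raw throughput.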

Nvidia’s lower-end inference chip, the Tesla P4, may have been a closer competitor in terms of upfront cost as well as cost to run, because it also has a TDP of 75W. However, according to Nvidia, the P4 has a little less than half of the P40’s performance. That could mean that the Tesla P4 has slightly less performance than the TPU at the same power consumption level.

Nvidia mentioned that the P40 has 10x higher bandwidth. Bandwidth is likely the TPU's main limiting factor and the reason the P40 can be twice as fast at inference. Google also acknowledged in its recent paper that if its TPU had four times as much bandwidth, its inference performance could have been three times higher.

However, that improvement would likely have to wait for a next-generation TPU. Combined with other possible improvements that Google mentioned in its paper, the next-gen TPU could be multiple times faster than both the current one and the Tesla P40. Whether that TPU can be matched by Nvidia’s future inference GPUs for a similar power draw and cost remains to be seen.
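As a rough back-of-the-envelope check, the two vendor figures cited above can be combined. This mixes Nvidia's claim with Google's estimate, which come from different benchmarks, so the result is only indicative. The names below are illustrative.

```python
# Both ratios are relative to the current TPU's inference performance.
P40_VS_CURRENT_TPU = 2.0            # Nvidia's claim
WIDER_TPU_VS_CURRENT_TPU = 3.0      # Google's estimate with 4x the bandwidth

wider_tpu_vs_p40 = WIDER_TPU_VS_CURRENT_TPU / P40_VS_CURRENT_TPU
print(f"Hypothetical higher-bandwidth TPU vs. P40: {wider_tpu_vs_p40:.1f}x")
```

On these numbers, the bandwidth fix alone would put a hypothetical TPU at about 1.5 times the P40's inference performance, before any of the other improvements Google describes.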

Machine Learning Chips Crave Optimization

What we're seeing from both Google's TPU and Nvidia's latest GPUs is that machine learning needs as much performance as you can throw at it. That means we should see chip makers strive to optimize their chips for machine learning as much as possible over the next few years, and to narrow their focus (to either training or inferencing) to squeeze even more performance out of each transistor. The machine learning market is booming, so there should be plenty of interested customers for more specialized chips, too.

The specialization of machine learning chips should also be a boon for the embedded markets, which should soon see plenty of small but increasingly powerful inference chips. This could allow more embedded devices, such as smartphones, drones, robots, surveillance cameras, and so on, to offer "AI-enhanced" services with impressive capabilities at a low cost and without a need for an internet connection to function.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


tsnor Good information. Liked to the pointer to the google paper. Do you have a reference you can share for the nvidia paper that describes the environment for "Tesla P40 even seems to be twice as fast as Google’s TPU". Neat to see a general purpose GPU outperform an ASIC. Seems unlikely. The section noting that bandwidth was the root cause was interesting.

Your "power/performance" and "cost/perforamance" analysis seems right. Consider also the google claim of more predictable (lower variance) response time from inferencing using the TPU. Not sure why this would be true vs. GPU, but google says it is. Consistent, fast performance is valuable.

cordes85 so can i put in a tesla p40 alongside my nvidia titan- pascal -x- pascal. 128gb ddr4 ram,

Petaflox You are say that Goggle design and produce is own TPU?

If so the will cost is far more than Tesla P4. And yes TDP will be mach less because

are design to do exactly what they need.

t1gran "In the Nvidia-provided chart below, the Tesla P40 even seems to be twice as fast as Google’s TPU for inferencing" - isn't it about inferences/sec WITH <10 ms latency only? Because the total inferencing performance of Google's TPU seems to be twice as fast as Nvidia’s Tesla P40 - 90 INT8 vs 48 INT8.

B Salita Looks like Nvidia will have to bow out of the NN market. The world needs a 3rd party TPU clone. Where's Kickstart when you need 'em?

Petaflox Goggle made a custom chip, there is no point to compare versus a commercial chip.

Also, did goggle have is own patents? Do they use other company patents? Is there any patent at all?

This article doesn't reflect the professional standard I'm used to read in tom's website.