Elon Musk’s OpenAI Project First To Receive Nvidia's DGX-1 'AI Supercomputer In A Box'

The Elon Musk-backed OpenAI nonprofit became the first-ever customer for Nvidia’s so-called “AI supercomputer in a box,” officially named the DGX-1.

The “Xerox PARC of AI”

Elon Musk has been one of the more prominent tech figures to warn about centralized artificial intelligence control. Musk believes that no one company or government should have a monopoly on artificial intelligence. The thing about artificial intelligence is that once it passes a certain threshold, it could quickly become orders of magnitude more intelligent than any other AI solution out there.

Even today we see, for instance, that Google’s DeepMind AI could relatively easily beat an 18-time world champion at Go, whereas Facebook’s Go AI solution, which is one of the more advanced AI Go-playing solutions out there, can currently play only against amateur Go players.

Musk thinks that if artificial intelligence solutions are decentralized, then if one AI solution becomes too strong and somehow “goes rogue,” all the other AI solutions out there have a chance at fighting back.

It’s also possible that in the long run, a “crowdsourced” version of an artificial intelligence could beat any solution that is developed by a single company or country. This crowdsourced solution would also be distributed to multiple players in various countries and industries, thus reducing the risks that are involved with artificial intelligence.

Elon Musk, together with a group of partners, created “OpenAI” last year, a billion-dollar-funded project that has since been dubbed the “Xerox PARC of AI.” The idea is to get as many smart people as possible from all over the world to improve this AI solution so it can at least keep up with the other centralized and commercial solutions out there.

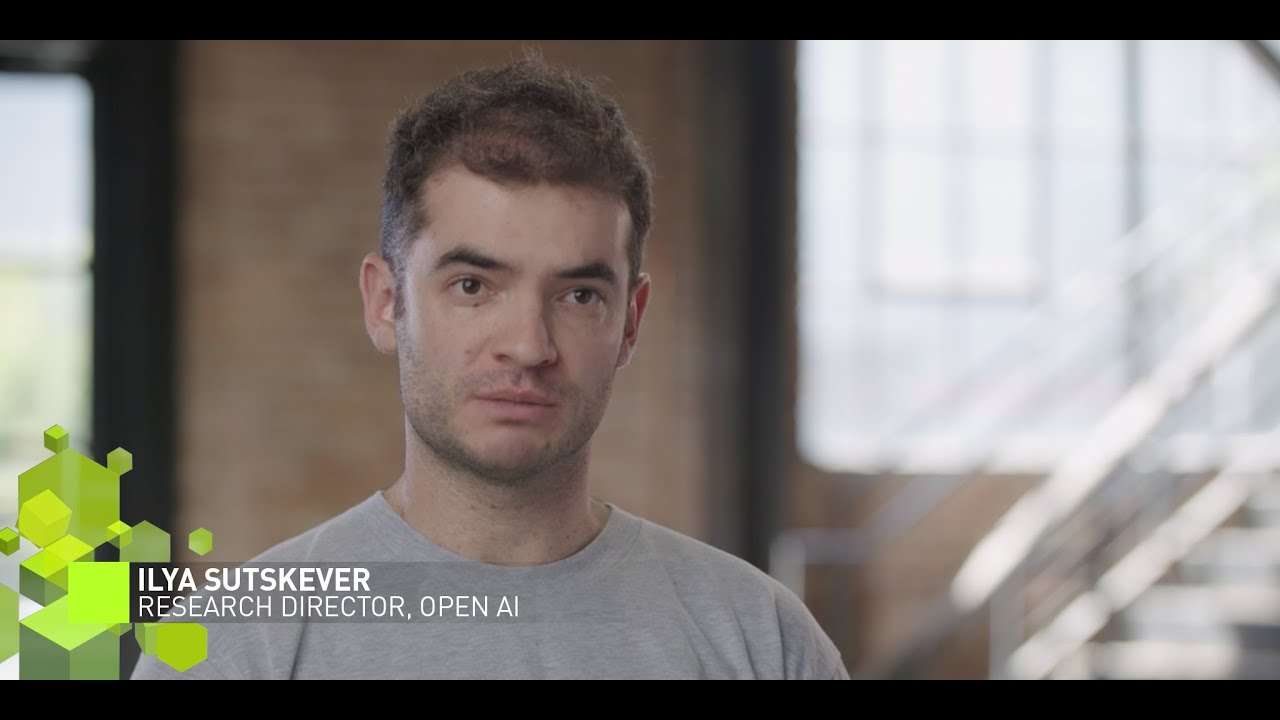

“As a non-profit, our aim is to build value for everyone rather than shareholders,” said Ilya Sutskever, OpenAI’s Research Director, last year.

He added that, “Researchers will be strongly encouraged to publish their work, whether as papers, blog posts, or code, and our patents (if any) will be shared with the world. We'll freely collaborate with others across many institutions and expect to work with companies to research and deploy new technologies.”

The group also fears that without an open source solution such as OpenAI, artificial intelligence research could also become more limited, as companies start seeing their AI research as a “competitive advantage.”


DGX-1 Taking OpenAI Research To The Next Level

To achieve its goal of keeping up with centralized competition, OpenAI needs not just good machine learning software, but also cutting-edge hardware. That’s why the OpenAI non-profit is the first to get Nvidia’s new DGX-1 “AI supercomputer in a box.”

Nvidia calls it that because the DGX-1 is a $129,000 system that integrates eight Nvidia Tesla P100 GPUs. The P100 is Nvidia’s highest-end GPU right now, capable of up to 21.2 teraflops of FP16 performance (the type of low-precision computation that machine learning software would use). The whole system is capable of up to 170 teraflops of FP16 performance.
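The system-level figure follows from the per-GPU figure. As a rough check, the short sketch below multiplies the two numbers quoted above; the function and constant names are illustrative only, and the calculation assumes perfect scaling across all eight GPUs, which is a theoretical peak rather than a measured result.

```python
# Peak FP16 throughput of one Tesla P100, in teraflops (figure quoted in the article).
P100_FP16_TFLOPS = 21.2
# Number of P100 GPUs in one DGX-1 (figure quoted in the article).
GPUS_PER_DGX1 = 8


def aggregate_peak_tflops(per_gpu_tflops: float, gpu_count: int) -> float:
    """Return the theoretical peak, assuming throughput adds up linearly."""
    return per_gpu_tflops * gpu_count


total = aggregate_peak_tflops(P100_FP16_TFLOPS, GPUS_PER_DGX1)
print(f"{total:.1f} teraflops")  # rounds to the ~170 teraflops quoted above
```

Real workloads would typically land below this number, since it ignores communication between GPUs.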

High-performance, well-optimized systems like these are more useful to researchers than a larger number of weaker, widely distributed GPUs, because software doesn’t scale that well in parallel. Such networked solutions are also almost always bottlenecked by bandwidth, further decreasing their efficiency and performance.

Together with the raw compute power of its hardware system, Nvidia is also delivering its machine learning-optimized SDK, so the researchers at OpenAI and elsewhere can more easily program machine learning solutions on the DGX-1 hardware.

One of OpenAI’s goals is to use “generative modeling” to train an AI that can not only recognize speech patterns, but can also learn how to generate appropriate responses to the questions that people ask it. However, the researchers behind OpenAI say that until now they’ve been limited by the computation power of their systems.

“One very easy way of always getting our models to work better is to just scale the amount of compute,” said OpenAI Research Scientist Andrej Karpathy. “So right now, if we’re training on, say, a month of conversations on Reddit, we can, instead, train on entire years of conversations of people talking to each other on all of Reddit. And then we can get much more data in terms of how people interact with each other. And, eventually, we’ll use that to talk to computers, just like we talk to each other,” he added.

The Nvidia DGX-1 should help the OpenAI researchers get the significant boost in performance they need to jump to the next level in AI research. According to Ilya Sutskever, the DGX-1 will shorten the time each experiment takes by weeks. The DGX-1 will allow the researchers to come up with new ideas that were perhaps not practical before because they couldn’t allocate weeks of research time to every single idea they had.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

hdmark: just to be clear... musk basically wants to train a bunch of AI's in case one AI tries to take over the world?

JamesSneed, quoting an earlier comment: "just to be clear... musk basically wants to train a bunch of AI's in case one AI tries to take over the world?"

If I had to guess this is only a very small part of it. However if you think about AI computers once they become savvy enough it could make for some serious competition to current security practices. I could easily see one day an AI being taught on how to probe systems and hack them. That would be where you would want another AI system in place to prevent those attacks, like a very smart firewall so to speak.

bit_user, quoting the article: "Musk thinks that if artificial intelligence solutions are decentralized, then if one AI solution becomes too strong and somehow “goes rogue,” all the other AI solutions out there have a chance at fighting back."

Many scientists behind the original atom bomb once thought this, too. During the late 40's, there was remarkable openness around the science underpinning nuclear weapons, with the thought that if everyone had them, no one would use them.

Of course, assuming everyone has access to custom ASICs, the barriers to entry for AI are much lower.

Quoting the article: "can also learn how to generate appropriate responses to the questions that people ask it. ... we can, instead, train on entire years of conversations of people talking to each other on all of Reddit."

Um, didn't Microsoft already get burned by this? Something about a lewd, racist chat bot comes to mind...

Anyway, I wonder how long before we can rent time on DGX-1's, through Amazon. That's probably how most researchers will actually use them. Most institutions can't afford more than a couple, but what do you do when half a dozen grad students all want to run to use it to get the data they need in time for the submission deadline of the next big AI conference?