Nvidia says it’s ‘delighted’ with Google’s success, but adds a backhanded compliment that it is ‘the only platform that runs every AI model’; statement comes soon after reports that Meta is in talks to acquire Google Cloud TPUs

Is Google's TPU a threat to Nvidia's dominance?

Nvidia released a statement saying that it’s “delighted by Google’s success,” while noting that it continues to supply AI chips to the company. The AI giant made the X post in apparent response to news that Meta is in talks with Google to rent its Cloud Tensor Processing Units (TPUs) in 2026 and then to purchase them the following year. The news lifted the stock prices of both Alphabet, Google’s parent company, and Meta, while Nvidia took a 3% hit, likely because the market saw the deal as a crack in the AI giant’s monopoly on the AI chip market.

We’re delighted by Google’s success — they’ve made great advances in AI and we continue to supply to Google. NVIDIA is a generation ahead of the industry — it’s the only platform that runs every AI model and does it everywhere computing is done. NVIDIA offers greater… (November 25, 2025)

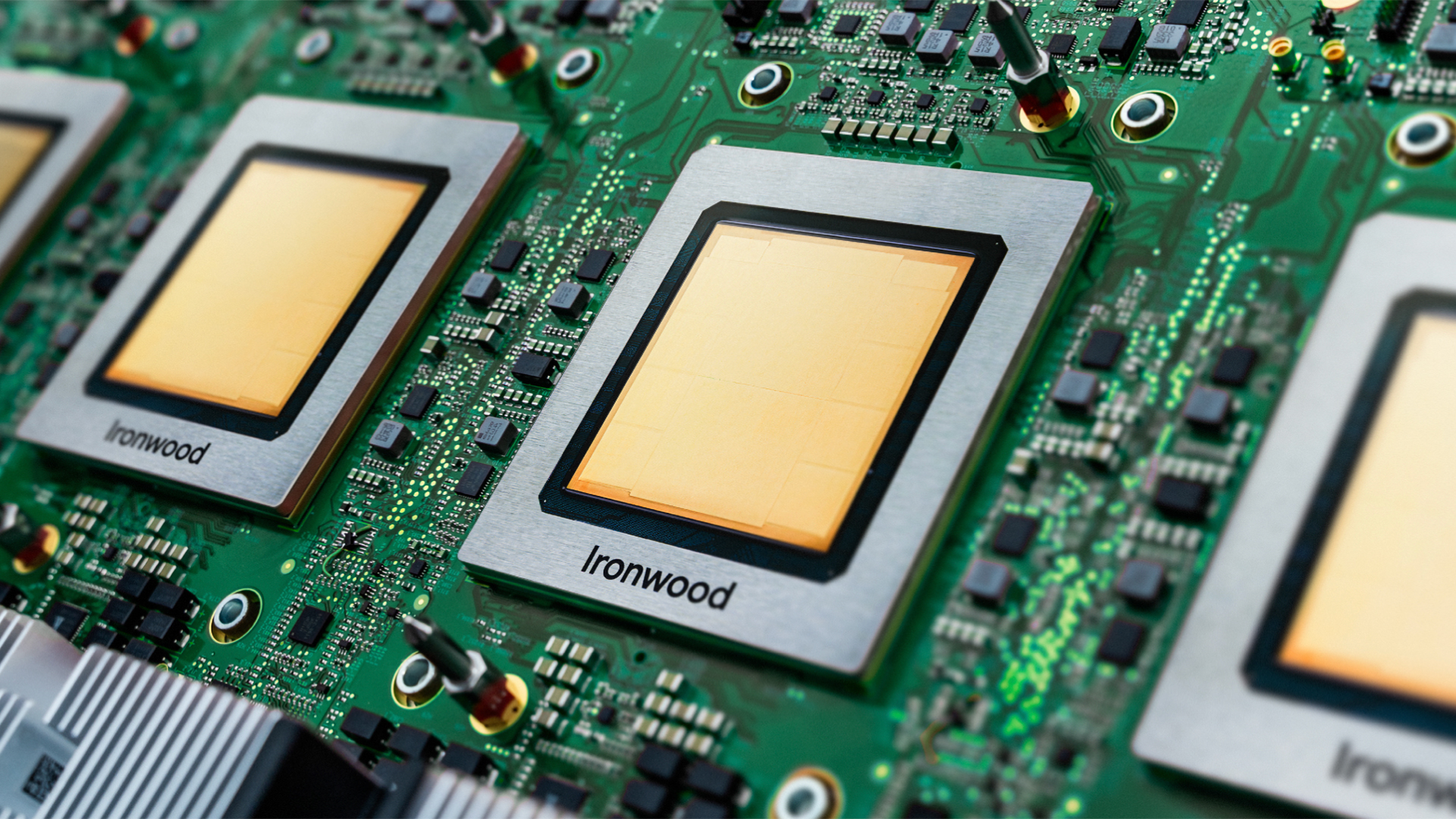

"Nvidia is a generation ahead of the industry — it's the only platform that runs every AI model and does it everywhere computing is done," the company said on X via its Nvidia Newsroom account. "Nvidia offers greater performance, versatility, and fungibility than ASICs, which are designed for specific AI frameworks or functions." Google's Cloud TPUs are ASICs (application-specific integrated circuits) built specifically for AI workloads such as training and inference. Nvidia's AI GPUs, by contrast, are general-purpose parallel processors, originally designed for graphics, that have been adapted for AI workloads.

Nvidia's Blackwell GPUs are indeed superior to almost anything else on the market, especially in terms of versatility. Although they're primarily marketed for AI, they can also be used for high-performance computing, data analytics, graphics rendering and visualization, and more. Google's TPUs, on the other hand, are designed for matrix multiplication, the arithmetic operation at the heart of most modern AI. This means these chips cannot easily be repurposed for other tasks. However, that specialization can give them an edge in AI workloads, particularly in terms of efficiency.

Meta isn't the first company to consider using Google TPUs for its AI operations. Anthropic has been using them since 2023, and the company has signed a deal to expand its contracted capacity to 1GW in 2026. But Google's biggest challenge is achieving widespread adoption of its TPU chips, especially given Nvidia's huge market share. On top of that, Nvidia's CUDA platform is widely used across the industry, making it harder for developers to switch to Google's alternatives.

However, the proposed deal between Meta and Google appears large enough to threaten Nvidia's dominance in the market. While it would still be a drop in the bucket compared to Nvidia's skyrocketing revenues, it would at least show potential clients that there's a viable alternative to Team Green's GPUs. That prospect alone was enough to send Nvidia's stock price sliding. The drop comes soon after the company's record highs, and shortly after CEO Jensen Huang said the market does not appreciate the AI GPU manufacturer.


Jowi Morales is a tech enthusiast with years of experience working in the industry. He has been writing for several tech publications since 2021, with a focus on tech hardware and consumer electronics.

-

timsSOFTWARE: Apparently you can't mention the "c" word here, but the comparison would have been relevant, as it underwent a similar shift in regard to graphics cards giving way to custom ASICs. Regardless, AI companies shifting to dedicated chips is one of the biggest long-term risks to Nvidia's AI GPU business. (Along with the overall bubble popping/companies not being able to sustain current or higher levels of hardware investment.)