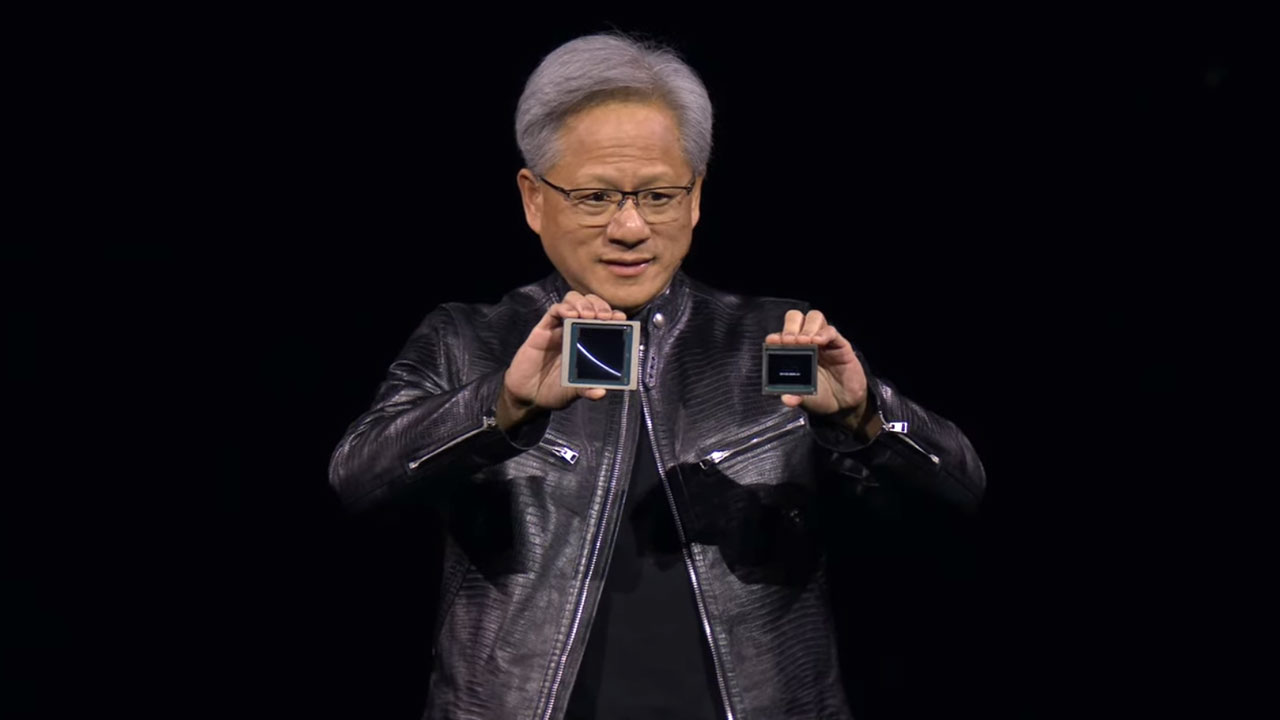

Amazon and Google tip off Jensen Huang before announcing information about their homegrown AI chips — companies tread carefully to avoid surprising Nvidia, says report

The quiet deference to Nvidia’s CEO reveals how much power the company still holds over AI’s most ambitious companies.

A new report claims that before Amazon or Google reveals anything about their latest artificial intelligence chips, they first notify Nvidia CEO Jensen Huang. The practice, described by sources in The Information’s recent report on Nvidia’s internal dealmaking, points to a quiet reality of the AI hardware market: Nvidia is still the dominant supplier of training compute, and its customers are trying not to get cut off.

According to the report, Amazon and Google each provide advance notice to Huang before unveiling updates to their custom silicon. The reason, sources say, is that Nvidia is still deeply embedded in their cloud operations and neither wants to surprise the person who effectively controls their AI infrastructure supply. Nvidia accounts for the overwhelming majority of the accelerators used to train large language models, and its GPUs also handle a growing share of inference tasks in the public cloud.

This deference comes as Nvidia is pouring billions into customers, suppliers, and competitors alike in a bid to tighten its grip on the market. In September alone, Nvidia signed a deal to buy up to $6.3 billion worth of unused GPU capacity from CoreWeave over the next seven years. It also invested $700 million in British data center startup Nscale and spent more than $900 million to acquire the CEO and key engineers of networking startup Enfabrica while licensing its chip technology. That followed news of a $5 billion investment in Intel to support joint chip development and a letter of intent with OpenAI to back a 10-gigawatt GPU data center buildout that could cost up to $100 billion.

The scale and timing of those investments show how aggressively Nvidia is trying to preempt the rise of non-GPU accelerators. Companies like Amazon, Google, and OpenAI are all pursuing in-house silicon efforts designed to reduce dependency on Nvidia hardware and improve performance or cost at scale. But even with years of effort and tens of billions of dollars behind them, their platforms are still heavily reliant on Nvidia’s CUDA ecosystem, software tooling, and manufacturing pipeline.

According to The Information, Nvidia’s dominance has created a dynamic where it acts like a financial backstop to the entire AI supply chain. The company can now fund suppliers, rent out capacity, and underwrite long-term purchases to support continued demand for its hardware. That makes it harder for any individual customer to walk away, even as they build competing products.

For now, the biggest names in cloud computing are still briefing Jensen Huang before revealing their next chips. That sort of dynamic may not last forever, but it says a lot about where the power still sits today.


Luke James is a freelance writer and journalist. Although his background is in legal, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.


RemmRun Reply

Admin said: According to a recent report by The Information, Amazon and Google each provide advance notice to Huang before unveiling updates to their custom silicon.

Insider trading, jail them all and send me the proceeds.


JarredWaltonGPU Reply

Meanwhile, Jensen is constantly talking about how the US export restrictions on China aren't working and Nvidia should be allowed to sell its fastest GPUs there as well. Because if it can't, then China will break free from Nvidia dependence! And we can't have that...

HansSchulze Reply

JarredWaltonGPU said: Meanwhile, Jensen is constantly talking about how the US export restrictions on China aren't working and Nvidia should be allowed to sell its fastest GPUs there as well. Because if it can't, then China will break free from Nvidia dependence! And we can't have that...

China is only about 25% of their business. Which company do you know that silently accepted that reduction without complaining?

HansSchulze Reply

RemmRun said: Insider trading, jail them all and send me the proceeds.

Insider trading is someone buying shares of another company on their news. Why would JH buy lots of shares of companies whose market price rises slower than his own plentiful stock? Please explain the financial logic here.

Also, why would JH care about chip releases that are already announced a year in advance, possibly earlier behind closed doors? This is common with long-lead items, as Intel and others have done for decades.

And since I'm writing here, there is no reason not to use Cerebras and other hardware that is cheaper and lower power than Nvidia GPUs. I don't get the "taking over the market", "tightening their grip" etc. headlines. AMD stuff works fine, so does Google, etc. But it's not the most efficient, as Tesla is slowly figuring out. There will have to be more improvements. Nvidia, being very GPU-centric, may not be the long-term winner in inference due to power cost. 35 years of pushing forward at their pace does give them an edge. We got here, where do we go next?

Dntknwitall Reply

HansSchulze said: China is only about 25% of their business. Which company do you know that silently accepted that reduction without complaining?

China holds more than that. They are predominantly Nvidia when it comes to GPUs? They won't buy anything else. The brand power that Nvidia holds in China is crazy, same with Intel. Nvidia probably sells 40% to China, and if they could break the barrier and sell more powerful GPUs to China, it would probably account for 60% of Nvidia's market share. China is all about major brands.

Dntknwitall Reply

HansSchulze said: Insider trading is someone buying shares of another company on their news. Why would JH buy lots of shares of companies whose market price rises slower than his own plentiful stock? Please explain the financial logic here.

I absolutely agree. Why is Huang the boss leader of AI, and why do other companies have to let him know when they are coming out with a new chip? This whole AI business stinks of corruption and illegal deals being made. It is getting as bad as the world's drug trade, but in this case you have only one man in a leather jacket running it all!

Dntknwitall Reply

JarredWaltonGPU said: Meanwhile, Jensen is constantly talking about how the US export restrictions on China aren't working and Nvidia should be allowed to sell its fastest GPUs there as well. Because if it can't, then China will break free from Nvidia dependence! And we can't have that...

We all know he is making under the table deals through shell companies to China. Nvidia is not losing very much in the China high end AI graphics hardware.

RobtheRobot Reply

Isn't this a monopoly? Ironic that two other monopolies are being controlled by a third.

It really illustrates the problem of allowing companies to grow too big for their boots.

HansSchulze Reply

Dntknwitall said: Nvidia probably sells 40% to China and if they could break the barrier and are able to sell more powerful GPUs to China it would probably account for 60% of Nvidia market share.

The Chinese government just recently (a week or so?) forbade companies from buying H200. Whether or not that stops anyone is anyone's guess. There are rumors of GPU chips being bought on the black market, possibly after removal from consumer boards. Other rumors link UAE purchases to Chinese use. China will find a way to use GPUs. Even G has tons of rogue users who never pay. Nvidia might never see a dime, but that doesn't mean China isn't learning.

AJenko Reply

HansSchulze said: Insider trading is someone buying shares of another company on their news. Why would JH buy lots of shares of companies whose market price rises slower than his own plentiful stock? Please explain the financial logic here.

Insider trading is 'any' news given to somebody that could influence a stock price before the public. This is insider trading.

For example, if this news results in a slight share drop (it will, because traders may feel that more major competitors entering the market could affect the future share price), then you, a friend, or your entire family could place a sell bet that the shares will drop. When they do, you will make a lot of money.

Also, major investors do trade off news and not results. This week one of my shares went up 12% because they hired a CEO with a track record of success in all of his previous businesses. They are banking on future successes. It's not totally foolproof, but you would always like to play a roulette table where most of the segments were black.