Top China silicon figure calls on country to stop using Nvidia GPUs for AI — says current AI development model could become 'lethal' if not addressed

Develop AI-specific ASICs instead.

Wei Shaojun, vice president of the China Semiconductor Industry Association and a senior Chinese academic and government adviser, has called on China and other Asian countries to stop using Nvidia GPUs for AI training and inference. At a forum in Singapore, he warned that reliance on U.S.-origin hardware poses long-term risks for China and its regional peers, Bloomberg reports.

Wei criticized the current AI development model across Asia, which closely mirrors the American path of using Nvidia or AMD compute GPUs to train large language models such as those behind ChatGPT and DeepSeek. He argued that this imitation limits regional autonomy and could become 'lethal' if not addressed. According to Wei, Asia's strategy must diverge from the U.S. template, particularly in foundational areas like algorithm design and computing infrastructure.

The restrictions the U.S. government imposed in 2023 on the performance of AI and HPC processors that could be shipped to China created significant hardware bottlenecks in the People's Republic and slowed the training of leading-edge AI models. Despite these challenges, Wei pointed to examples such as the rise of DeepSeek as evidence that Chinese companies can make significant algorithmic advances even without cutting-edge hardware.

He also cited Beijing's stance against using Nvidia's H20 chip as a sign of the country's push for true independence in AI infrastructure. At the same time, he acknowledged that while China's semiconductor industry has made progress, it is still years behind America and Taiwan, so the chances that China-based companies can build AI accelerators with performance comparable to Nvidia's high-end offerings are slim.

Wei proposed that China develop a new class of processors tailored specifically for large language model training rather than continue to rely on GPU architectures, which were originally designed for graphics processing. While he did not outline a concrete design, his remarks amount to a call for domestic innovation at the silicon level to support China's AI ambitions. However, he did not explain how China plans to catch up with Taiwan and the U.S. in the semiconductor production race.

He concluded on a confident note, stating that China remains well-funded and determined to continue building its semiconductor ecosystem despite years of export controls and political pressure from the U.S. The overall message was clear: China must stop following and start leading by developing unique solutions suited to its own technological and strategic needs.

Nvidia GPUs became dominant in AI because their massively parallel architecture was ideal for accelerating the matrix-heavy operations at the core of deep learning, offering far greater efficiency than CPUs. In addition, the CUDA software stack, introduced in 2006, enabled developers to write general-purpose code for GPUs, paving the way for deep learning frameworks like TensorFlow and PyTorch to standardize on Nvidia hardware.
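To make the point about matrix-heavy workloads concrete, here is a minimal Python sketch (our own illustration, not Nvidia or CUDA code) of why matrix multiplication suits massively parallel hardware: every element of the output matrix is an independent dot product of one row and one column, so all of them can be computed at the same time. The function name `matmul_parallel` is hypothetical, and Python threads stand in for GPU cores only to show that the work splits into independent pieces; because of CPython's global interpreter lock, this will not actually run faster than a plain loop.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dot(row, col):
    """One output element: the dot product of a row of A and a column of B."""
    return sum(x * y for x, y in zip(row, col))


def matmul_parallel(a, b, workers=4):
    """Hypothetical illustration: compute A @ B by dispatching every output
    element as an independent task. On a GPU, each of these dot products
    could be handled by separate hardware threads; here Python threads only
    demonstrate the independence (the GIL prevents a real speedup)."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions of A and B must match")
    # Pre-extract the columns of B so each task receives a plain list.
    cols = [[b[r][j] for r in range(k)] for j in range(m)]
    pairs = list(product(range(n), range(m)))  # all (row, col) output positions
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, so values is row-major.
        values = list(pool.map(lambda ij: dot(a[ij[0]], cols[ij[1]]), pairs))
    return [values[i * m:(i + 1) * m] for i in range(n)]


if __name__ == "__main__":
    print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

None of the dot products depends on another's result, which is exactly the property that lets thousands of GPU cores work on a large matrix multiplication at once.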

Over time, Nvidia reinforced its lead with specialized hardware (Tensor Cores, mixed-precision formats), tight software integration, and widespread cloud and OEM support, making its GPUs the default compute backbone for AI training and inference. Nvidia's modern data center architectures, such as Blackwell, are packed with optimizations for AI training and inference and have almost nothing to do with graphics. By contrast, special-purpose ASICs, the approach Wei Shaojun advocates, have yet to gain traction for either training or inference.

Anton Shilov is a contributing writer at Tom's Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.

-

S58_is_the_goat Is this one of those "please stop buying Nvidia GPUs so we can buy them" kind of deals? What's the alternative?

thisisaname
S58_is_the_goat said: Is this one of those "please stop buying Nvidia GPUs so we can buy them" kind of deals? What's the alternative?
I think it is more like "please buy China-made chips, they are just as good." If you discount power usage and performance.

acadia11 No, it's part of the party's 40+ year march toward technical superiority and self-reliance away from the West, and if you think it's a joke, you haven't been watching what's been happening in the world. They've already done it with CPUs; what makes you think GPUs aren't next? China is taking its place back in the world as a technology leader. It's already happened in green energy, EVs, cell phones...

acadia11
thisisaname said: I think it is more like please buy china made chips, they are just as good. If you discount power usage and performance.
You guys don't get it.

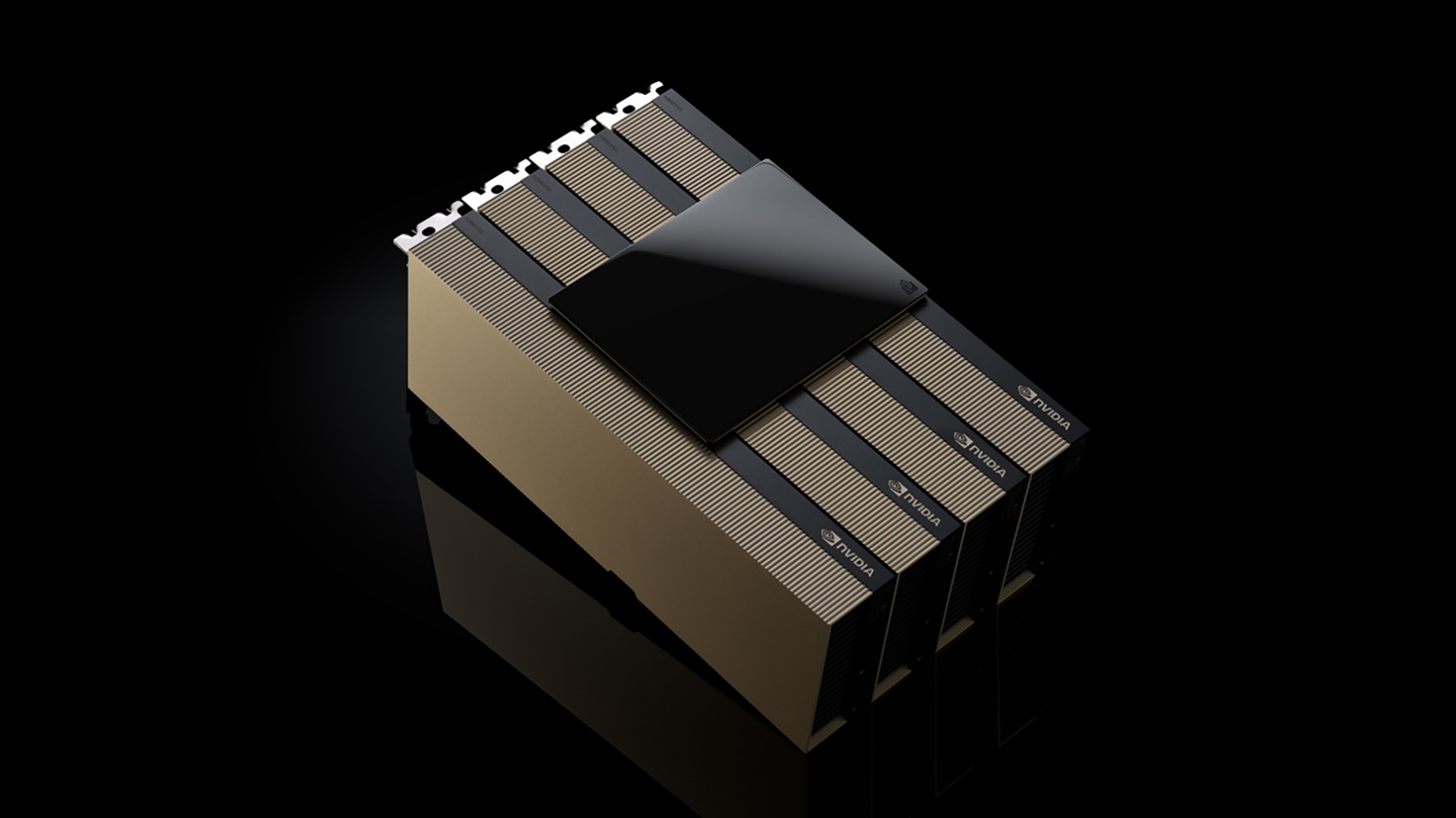

Stomx Noticed at the top of the page how nice this motherboard looks, with four Nvidia GPU accelerator cards and NVLink on top making them all work like one giant chip? They pack their stuff in quantities of 4, 8, and, for large businesses, 72, 144, 288, etc., and will soon explode from the river of money they earn. Meanwhile, lying face down in the mud, Intel and AMD and their motherboard manufacturers barely manage to put 2 processors in a parallel setup...

dynamicreflect Sincerely, plz buy my GPU, only 4,000 dollars, so cheap! (The Chinese COVID vaccine initially cost nearly twice as much as the most expensive one in the West; after some time, the price was lowered to match the most expensive Western one. The best part is the propaganda about how cheap they were compared to the Western ones.)

ChipMonster
S58_is_the_goat said: Is this one of those "please stop buying Nvidia gpus so we can buy them" kind of deals? What's the alternative?
There's no alternative. Building a custom ASIC also involves building a custom software stack, which Google, Meta, OpenAI, Microsoft, NVIDIA, and AMD have built at a cost of tens of billions of dollars. If it were feasible to move to homegrown systems, Chinese companies would have done so already, given the incentives the Chinese government provides. Inference is still fine on Chinese homegrown solutions (some Chinese AI companies have been using Huawei and others for inference), but training is a different story.

What this boss is saying isn't really feasible either. Many parts of the models used today are in constant flux, with significant changes every couple of months. What can be accelerated is already being accelerated by AI GPUs (FMA, sparsity, MatMul, etc.). Ultimately, this isn't going to change any time soon.

FoxtrotMichael-1 If you don't take China seriously, then you aren't paying attention. They can and will develop homegrown technology. China always plays the long game and can push an agenda from the top down for decades until it works.

I think this is further proof that AI is pushing semiconductors into the military space more than ever before. China doesn't want to rely on US technology because we can cut them off at any time or limit their ability to grow clusters. It's become a national security issue for both the US and China.

derekullo US: You are hereby forbidden from buying Nvidia cards able to play Crysis at more than 90 fps!

China: I hold myself in contempt!

Loophero
acadia11 said: No it's part of thr party's 40+ year march towards technical superiority and self-reliance away from Western and if you think it's a joke you haven't all been watching what's been happening in the world. They've already done it with CPUs what makes you think gPUs aren't next … china is taking its place back in the world as a technology leader. It's already happened it Green Energy, EVs, cell phones …
But GPUs aren't EVs or solar panels. CPUs were tough, but GPUs are a whole different ballgame; it's not just the chip, it's the decades of software, developer adoption, and ecosystem NVIDIA built. Beijing can subsidize factories, but it can't shortcut global trust or the tools it's still blocked from. "Inevitable dominance" just isn't how semiconductors work.