Dev gambles on 'obviously fake' $8K Grace Hopper system, scores $80,000 worth of hardware on Reddit for one-tenth of the cost: buyer's haul includes 960GB of LPDDR5X RAM worth more than what he paid for the entire rig

The included 960GB of LPDDR5X memory, alone, is now worth more than what was paid for the full system.

Would you like to run 235B-parameter LLMs at home, but your lowly $10,000 budget restricts you to “consumer GPUs that can barely handle 70B parameter models”? That was the situation developer David Noel Ng found himself in, until he stumbled across an “obviously fake” Nvidia Grace Hopper platform being sold on Reddit, of all places. Ng took the gamble and, according to his blog post, it paid off royally. With a bit of tinkering and repair work, he ended up with an enterprise system that would usually cost about $80,000 for a tenth of that sum. The included 960GB of LPDDR5X memory, alone, is now worth more than he paid for the full system. Hilariously, he even lowballed the seller: as he notes, the original Reddit listing asked 10,000 EUR, and he offered just 7,000 EUR.

Why Ng made the offer on an ‘obviously fake’ listing

As we mentioned in the intro, the deal Ng found seemed a little too good to be true. However, he researched the seller, who appeared to be a legitimate server equipment reseller within a two-hour drive, so he quickly made an offer to be first in line.

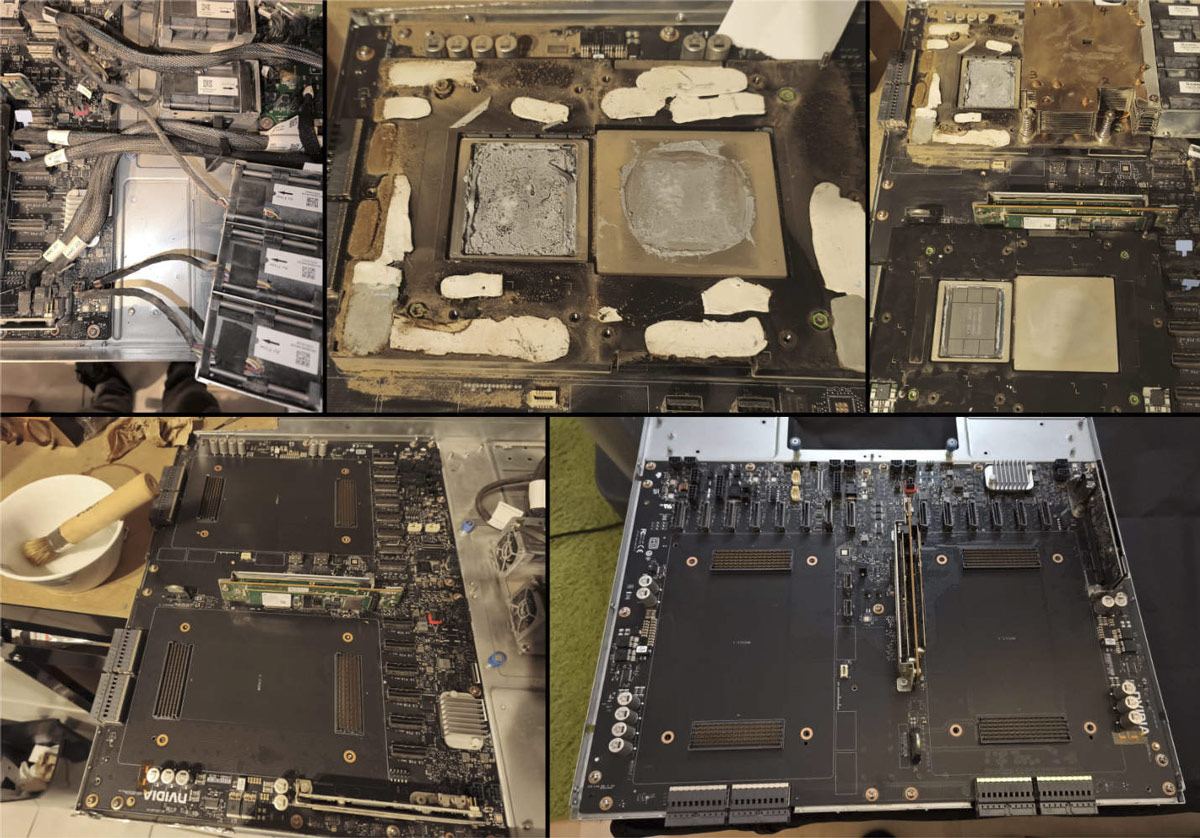

There were some underlying, but not insurmountable, issues with the Grace Hopper system as sold, which meant it wouldn’t be widely popular on a consumer marketplace. Specifically, it was “a Frankensystem converted from liquid-cooled to air-cooled.” It also looked like a bit of a mess, wasn’t rackable, and ran from a 48V power supply.

On the other hand, even if this were just a collection of components, the offer seemed irresistible. The specs of the system, as sold, were as follows:

- 2x Nvidia Grace-Hopper Superchips
- 2x 72-core Nvidia Grace CPUs
- 2x Nvidia Hopper H100 Tensor Core GPUs
- 2x 480GB of LPDDR5X memory with error-correcting code (ECC)
- 2x 96GB of HBM3 memory
- 1,152GB of total fast-access memory
- NVLink-C2C: 900 GB/s of bandwidth
- Programmable from 1000W to 2000W TDP (CPU + GPU + memory)
- 1x high-efficiency 3000W PSU, 230V to 48V
- 2x PCIe Gen4 M.2 22110/2280 slots on board
- 4x FHFL PCIe Gen5 x16 slots
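The 1,152GB “fast-access” figure is simply the sum of both memory pools across the two superchips. A minimal Python check, using only the per-chip numbers from the list above (the constant and function names are illustrative, not from Ng’s post):

```python
# Memory figures from the spec list (per Grace Hopper Superchip).
SUPERCHIPS = 2
LPDDR5X_GB_PER_CHIP = 480  # CPU-attached LPDDR5X with ECC
HBM3_GB_PER_CHIP = 96      # GPU-attached HBM3


def memory_totals(n_chips: int, lpddr_gb: int, hbm_gb: int) -> dict:
    """Return aggregate LPDDR5X, HBM3 and combined capacity in GB."""
    lpddr_total = n_chips * lpddr_gb
    hbm_total = n_chips * hbm_gb
    return {
        "lpddr5x_gb": lpddr_total,
        "hbm3_gb": hbm_total,
        "total_gb": lpddr_total + hbm_total,
    }


totals = memory_totals(SUPERCHIPS, LPDDR5X_GB_PER_CHIP, HBM3_GB_PER_CHIP)
print(totals)  # {'lpddr5x_gb': 960, 'hbm3_gb': 192, 'total_gb': 1152}
```

The 960GB LPDDR5X subtotal is the figure that the memory-price angle of this story hinges on.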

In fact, the $80,000 valuation of the rig is probably on the conservative side: Ng notes that the two H100 GPUs alone are "about 30-40,000 euro each."
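Ng’s per-GPU estimate makes the “conservative” claim easy to check. This rough sketch takes his 30,000 to 40,000 EUR range at face value, doubles it for two GPUs, and compares the result with the 7,000 EUR he paid. It deliberately ignores the CPUs, memory, chassis, and everything else, and the variable names are illustrative:

```python
# Ng's quoted estimate per H100, and the price he actually paid (EUR).
H100_EUR_LOW, H100_EUR_HIGH = 30_000, 40_000
GPU_COUNT = 2
PRICE_PAID_EUR = 7_000


def gpu_value_range(count: int, low: int, high: int) -> tuple:
    """Return the (low, high) combined value of the GPUs in EUR."""
    return count * low, count * high


low, high = gpu_value_range(GPU_COUNT, H100_EUR_LOW, H100_EUR_HIGH)
print(f"H100s alone: {low:,}-{high:,} EUR")
print(f"That is {low / PRICE_PAID_EUR:.1f}x to {high / PRICE_PAID_EUR:.1f}x the price paid")
```

On those numbers, the two GPUs alone would be worth roughly 8.6 to 11.4 times what Ng paid for the whole system.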

Getting the Frankensystem working

A significant section of Ng’s blog post is devoted to receiving, cleaning, and building a new working cooling system for the Frankensystem, and it makes for a fascinating read. Suffice it to say that the Nvidia Grace Hopper system, for all its potential, arrived as a dusty, extremely noisy, very hot-running machine, and it was demonstrated running in that state before Ng took it home.

With care and patience, five liters of isopropyl alcohol, four cheap, repurposed Arctic AiO liquid coolers, a pair of custom CNC-milled copper parts, a kilo (about 2.2 pounds) of 3D-printed parts, microscope-assisted soldering, an LED lighting strip, and some know-how, Ng eventually triumphed. The finished, reassembled Grace Hopper system is pictured at the top.


Memory gold mine

Ng seems extremely happy with the finished system and its AI performance. He says he can now “run 235B parameter models at home for less than the cost of a single H100.” The cherry on the cake, though, is that since he bought the system, memory prices “have become insane,” meaning that the 960GB of LPDDR5X in this system would now cost more than Ng paid for the whole kit and caboodle.
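Why does a 235B-parameter model need this much memory? A back-of-the-envelope sizing sketch, assuming the weights dominate (KV cache, activations, and runtime overhead are ignored) and using BF16, INT8, and INT4 purely as hypothetical examples, since the article does not say which precision Ng runs:

```python
# Rough weight footprint for a 235B-parameter model.
# Assumption: weights only; 1 GB = 1e9 bytes.
PARAMS = 235e9
HBM_GB = 2 * 96               # GPU-attached HBM3 across both superchips
TOTAL_GB = 2 * 96 + 2 * 480   # HBM3 plus CPU-attached LPDDR5X

# Hypothetical precisions, in bytes per parameter.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params: float, bytes_per_param: float) -> float:
    """Size of the raw weights in GB."""
    return params * bytes_per_param / 1e9


for fmt, bpp in BYTES_PER_PARAM.items():
    size = weights_gb(PARAMS, bpp)
    print(f"{fmt}: {size:.1f} GB, fits in HBM alone: {size <= HBM_GB}, "
          f"fits in combined memory: {size <= TOTAL_GB}")
```

Only the aggressive 4-bit case fits in the 192GB of HBM3 alone; at 8-bit or 16-bit, the model only fits because the GPUs can also reach the 960GB of LPDDR5X.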


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

Comments

Stomx

He had two GPUs with 480GB each on them. How did this huge 480GB of LPDDR5X memory get connected to an Nvidia GPU, when so far there has never been a single GPU with that much memory? GPU memory is always soldered on the board near the GPU chip, and there is no room around the chip for that amount of memory. Even the upcoming Vera Rubin modules have almost half as much memory (they use HBM4, not GDDR, but still).

edzieba (replying to Stomx: "He had two GPUs with 480GB each on them.")

Two CPU+GPU modules, each with 96GB of HBM on the GPU package, and 480GB off the module (on the motherboard).

This isn't the same architecture as consumer devices use.

Stomx (replying to edzieba)

I deleted my first post by mistake above.

Of course this is not a consumer device, but something is strange here.

The 480GB of LPDDR5 was probably on the system board, run by regular processors feeding either 2x Nvidia H100 or 2x Grace-Hopper Superchips GB200. But there were only two HBM3 modules. Does that mean either the H100 or the GB200 was without HBM3?

2x 96GB is not enough to run even the full DeepSeek R1.

edzieba (replying to Stomx)

The HBM (96GB) is directly connected to the GPU, the LPDDR is directly connected to the CPU, and the GPU can access that memory over the NVLink bus between the two (and vice versa). Since the NVLink bandwidth (900GB/s) is greater than the LPDDR bandwidth (512GB/s), using the CPU-attached LPDDR adds minimal extra bottleneck compared with connecting it directly to the GPU.
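The argument above is a "slowest link wins" rule: when the GPU reads CPU-attached LPDDR5X, data passes through both the memory and the NVLink-C2C link, so the lower of the two bandwidths sets the ceiling. A simplified sketch using the commenter's figures; it ignores latency, contention, and protocol overhead, so real throughput will be lower, and the names are illustrative:

```python
# Peak bandwidths quoted in the comment above, in GB/s.
NVLINK_C2C_GBPS = 900
LPDDR5X_GBPS = 512


def effective_bandwidth(*stages_gbps: float) -> float:
    """A chain of links can be no faster than its slowest stage."""
    return min(stages_gbps)


bw = effective_bandwidth(NVLINK_C2C_GBPS, LPDDR5X_GBPS)
# Illustrative only: time for the GPU to stream one superchip's
# full 480 GB of LPDDR5X once at that theoretical ceiling.
seconds = 480 / bw
print(f"ceiling: {bw} GB/s, one full pass over 480 GB: {seconds:.2f} s")
```

Because NVLink-C2C is the faster stage, the LPDDR5X itself is the limit, which is the commenter's point: moving that memory directly onto the GPU would not raise this ceiling.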

teeejay94

"Technically," but in reality a terabyte of RAM isn't worth the cost of a house. It doesn't cost that much to produce, so it can't cost that much; whatever RAM is sitting at now is a fake, inflated number. Things cost what they cost relative to the cost of production. So no, it doesn't cost $20K, or whatever ludicrous amount it adds up to, to produce the RAM.

DingusDog (replying to teeejay94)

What are you on about? Nobody said it costs that much to produce. It's called supply and demand: that "fake, inflated number" is what people are (begrudgingly) willing to pay.

TheOtherOne (replying to teeejay94)

A house "produced" (built) 50 years ago would probably sell for 10x what it cost. Even taking inflation into account for the "production" cost, that house is currently worth exactly what the market is willing to pay.

purposelycryptic (replying to teeejay94)

No one said that it does, but 960GB of ECC LPDDR5X, even used, is worth a rather ridiculous amount in the current market.

For comparison, a quick price check shows a single refurbished 96GB stick of significantly slower DDR5-4800 ECC server memory going for $1,320.00 on serversupply.com right now, with similar prices across most of the web. If you want that as two 48GB sticks instead, it'll be around $1,400. Even on eBay, used but not refurbished, prices are the same or higher.

A plain old pair of second-hand regular, non-ECC 48GB DDR5-4800 sticks is going for anywhere from $550 to, well, much higher.

So, if you are currently in the market for 960GB of even the slowest second-hand DDR5 ECC RAM (not LPDDR5X), you will have to pay well over $10,000 for the privilege. Even r/homelabsales can't help you there.
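The "well over $10,000" figure follows from simple arithmetic on the prices quoted above. A quick sketch, taking those prices as given at the time of posting (they will have moved since) and ignoring that DDR5 DIMMs are not a drop-in substitute for on-package LPDDR5X; the names are illustrative:

```python
# Commenter's price points for refurbished DDR5-4800 ECC (USD).
TARGET_GB = 960
STICK_96GB_USD = 1_320    # one 96GB stick
PAIR_2X48GB_USD = 1_400   # two 48GB sticks, i.e. 96GB per pair


def cost_for(target_gb: int, unit_gb: int, unit_price: int) -> int:
    """Cost of buying enough whole units to reach target_gb."""
    units = -(-target_gb // unit_gb)  # ceiling division
    return units * unit_price


print(cost_for(TARGET_GB, 96, STICK_96GB_USD))   # 13200
print(cost_for(TARGET_GB, 96, PAIR_2X48GB_USD))  # 14000
```

Ten 96GB sticks come to about $13,200, or about $14,000 as ten 2x48GB pairs, both comfortably above the roughly 7,000 EUR Ng paid for the entire system.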

...of course, the GH200's RAM is soldered on-package, so our guy won't be reselling it separately anytime soon.

But the point stands that, regardless of what it costs to manufacture, none of us are in any position to manufacture it, making that a moot point. If you want to acquire that amount of that general class of RAM, you have to pay what the market demands - and it is currently demanding A LOT.

Which is why my good old home server is still running 512GB of DDR3.

Stomx

So the confusing part was that the Grace Hopper Superchip is not an additional separate chip but the name of the architecture. The separate chips were 2x processors and 2x H100s, combined by NVLink into this unified "superchip," which addresses LPDDR5X memory that is several times slower than HBM3 but a lot larger. OK, so what's the result? How many tokens per second does it deliver on DeepSeek R1?

Was this system dug out of California city dumps and landfills? This is what will happen when AI flops.