Huawei-powered mini-PC debuts with Huawei AI chip and 192GB of memory — Orange Pi AI Studio Pro wields Ascend 310 chip with 352 TOPS of AI performance, but relies on a single USB-C port

Powered by the ARM-based Huawei Ascend 310 SoC

Orange Pi has introduced a powerful mini-PC designed for those interested in experimenting with artificial intelligence development and applications. At its core is a 64-bit ARM-based Huawei Ascend 310 AI octa-core processor, which is claimed to deliver up to 176 TOPS of AI performance. That’s more than double what the recently announced Qualcomm Snapdragon X2 Elite can achieve, though the latter is aimed specifically at laptops.

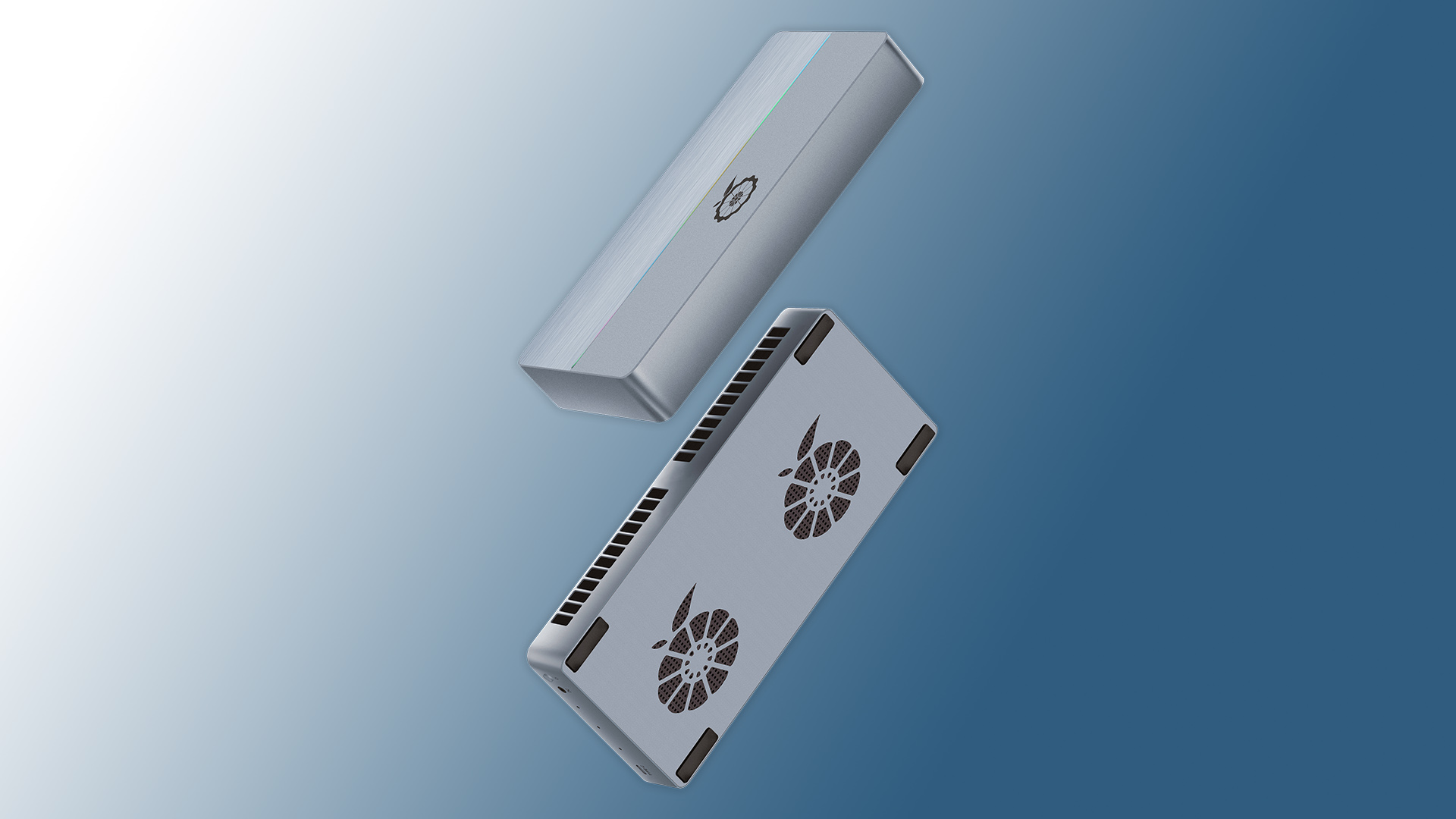

There are two versions of the Orange Pi AI Studio. The base model features the above-mentioned SoC with either 48GB or 96GB of LPDDR4X-4266 memory and 32MB of SPI flash. The Pro model takes it up a notch by combining two AI Studio mini PCs into one, giving you a 16-core CPU and exactly double the AI performance, at 352 TOPS. It also offers higher memory options of 96GB or 192GB, and it uses dual cooling fans rather than the single fan on the non-Pro model.

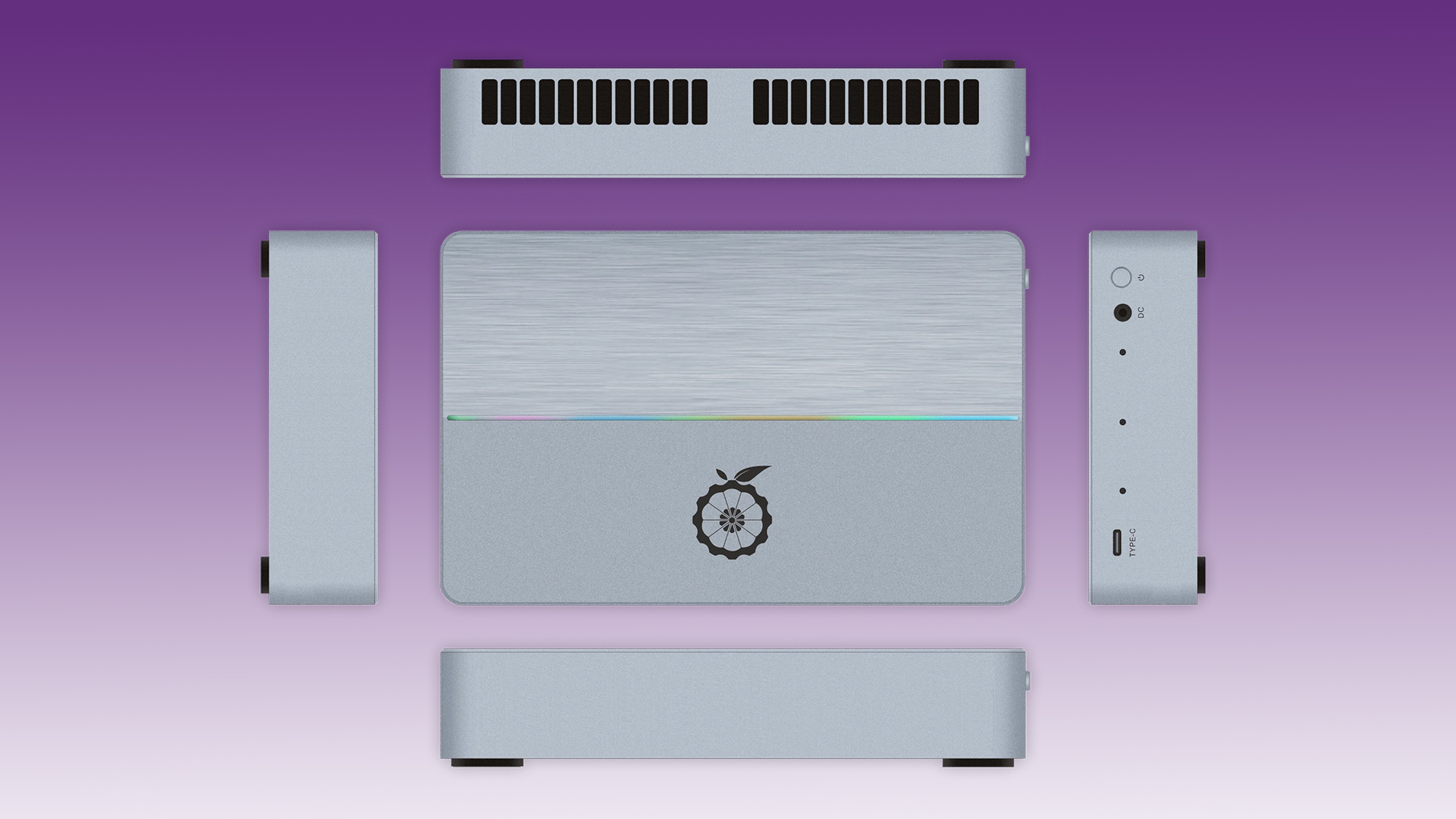

The biggest drawback, however, is the limited I/O: the mini-PC offers only a power switch, an LED indicator, a DC power port, and a single USB4 Type-C port. With just one USB port, all connectivity, including display output, storage, and peripherals, has to share a single link, most likely through a dock or hub. Networking could also be a problem, as the product page (Chinese) does not mention built-in wireless options such as Wi-Fi or Bluetooth.

The product page does, however, state that it currently supports Ubuntu 22.04.5 with Linux kernel 5.15.0.126, with Windows support expected in the future. It is also described as suitable for local deployment of distilled DeepSeek-R1 models, as well as basic AI tasks in offices, IoT applications, smart transportation systems, and more.

The AI mini PCs are currently listed on JD.com, with the AI Studio priced at 6,808 RMB ($955) for the 48GB model and 7,854 RMB ($1,100) for the 96GB variant. The AI Studio Pro is available at 13,606 RMB ($1,909) with 96GB RAM or 15,698 RMB ($2,200) with 192GB RAM. While these are primarily sold in China, the Orange Pi AI Studio Pro is also available on AliExpress, priced at over $2,350 plus shipping.


Kunal Khullar is a contributing writer at Tom’s Hardware. He is a long time technology journalist and reviewer specializing in PC components and peripherals, and welcomes any and every question around building a PC.

-

GeorgeLY I have an Orange Pi 5 16GB: great performance, crappy Linux support. The best distribution for it is Armbian, and even with that you need to do some juggling magic to get USB Wi-Fi working. And it was released several years ago!

So I do not expect this to be well-supported.

w_barath No bandwidth numbers. Training needs tons of bandwidth. Even inference needs a reasonable proportion of bandwidth relative to the number of TOPS. For example, Strix Halo has 256GB/s of bandwidth with 128GB of LPDDR5X at 8000MT/s. This LPDDR4X platform will have about 1/4 the bandwidth for the lower-tier models, and half the bandwidth for the higher-tier models, so it plain and simply can't compete, regardless of how many more TOPS it brings to the unpaved deer track it plans to feed it with.

richardnpaul

> w_barath said: No bandwidth numbers. Training needs tons of bandwidth. Even inference needs a reasonable proportion of bandwidth relative to the number of TOPS. For example, Strix Halo has 256GB/s of bandwidth with 128GB of LPDDR5X at 8000MT/s. This LPDDR4X platform will have about 1/4 the bandwidth for the lower-tier models, and half the bandwidth for the higher-tier models, so it plain and simply can't compete, regardless of how many more TOPS it brings to the unpaved deer track it plans to feed it with.

Looking at the memory amounts, it seems that they might be using a 192-bit interface, like the X2 Elite, for the single chip, and so a dual 192-bit (384-bit) interface for the dual chip. So it will likely have around 192GB/s or 384GB/s of bandwidth, depending on whether they're using 8000MT/s or faster chips (I'm assuming that your original maths is correct).
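The bandwidth figures traded in these comments all come from one formula: theoretical peak bandwidth equals the bus width in bytes multiplied by the transfer rate. The minimal Python sketch below, which is illustrative rather than a spec sheet, assumes a 256-bit bus for Strix Halo and treats the 192-bit and 384-bit widths as richardnpaul's guesses, not confirmed Orange Pi specs. The function name `peak_bandwidth_gbs` is hypothetical. The last line also plugs the article's LPDDR4X-4266 speed into the same hypothetical 192-bit bus, since the actual bus width is not published.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s.

    Ignores real-world efficiency losses (refresh, bank conflicts, etc.).
    """
    bytes_per_transfer = bus_width_bits / 8              # bits -> bytes per transfer
    return bytes_per_transfer * transfer_rate_mts / 1000  # MB/s -> GB/s


# Strix Halo figure quoted in the comments (assumed 256-bit LPDDR5X at 8000 MT/s)
print(peak_bandwidth_gbs(256, 8000))  # 256.0

# richardnpaul's guesses: bus widths are assumptions, not confirmed specs
print(peak_bandwidth_gbs(192, 8000))  # 192.0 (single chip)
print(peak_bandwidth_gbs(384, 8000))  # 384.0 (dual chip)

# Same hypothetical 192-bit bus at the article's LPDDR4X-4266 speed
print(round(peak_bandwidth_gbs(192, 4266), 1))  # 102.4
```

Because the result scales linearly with both inputs, halving the transfer rate (roughly LPDDR4X-4266 versus LPDDR5X-8000) halves the bandwidth for any given bus width, which is the crux of the disagreement above.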