Nvidia's Project Digits desktop AI supercomputer fits in the palm of your hand — $3,000 to bring 1 PFLOPS of performance home

The name is a work in progress


Nvidia announced many new products in its 90-minute CES keynote/victory lap, but the most intriguing was the closer: its Project Digits AI supercomputer. Arriving in May, Project Digits (the name is apparently subject to change) will deliver 1 PFLOPS of FP4 floating-point performance in a form factor that fits snugly in the hands of Nvidia boss Jensen Huang.
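FP4 packs each number into just four bits, which is a big part of how a machine this small reaches a petaFLOP-class figure. The sketch below decodes the E2M1 layout (1 sign bit, 2 exponent bits with a bias of 1, 1 mantissa bit) defined in the Open Compute Project's Microscaling (MX) specification; it is an assumption that this is the FP4 variant behind Nvidia's number, since the announcement doesn't say. The helper name `decode_fp4_e2m1` is purely illustrative. What it shows is how coarse the format is: only eight non-negative magnitudes are representable.

```python
def decode_fp4_e2m1(bits: int) -> float:
    """Decode a 4-bit E2M1 code: 1 sign bit, 2 exponent bits (bias 1), 1 mantissa bit."""
    if not 0 <= bits <= 0b1111:
        raise ValueError("FP4 code must fit in 4 bits")
    sign = -1.0 if bits & 0b1000 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 0b1
    if exponent == 0:
        # Subnormal: no implicit leading 1, effective exponent is 1 - bias = 0
        magnitude = mantissa * 0.5
    else:
        # Normal: implicit leading 1, unbiased exponent is exponent - 1
        magnitude = (1 + mantissa * 0.5) * 2.0 ** (exponent - 1)
    return sign * magnitude


# Every non-negative value the format can hold (codes 0b0000 to 0b0111)
positives = sorted({decode_fp4_e2m1(code) for code in range(8)})
print(positives)  # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
```

In practice, formats like this are paired with shared per-block scale factors so that real model weights can be mapped onto such a small grid; the sketch leaves scaling out to keep the bit layout visible.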

Project Digits was conceived around the same idea as Nvidia's DGX 100 servers: bringing a ready-made AI supercomputer solution to end users without the infrastructure concerns of a full supercluster. Where DGX 100 fits in server racks, Project Digits fits on the desktop with a footprint similar to a Mac Mini's.

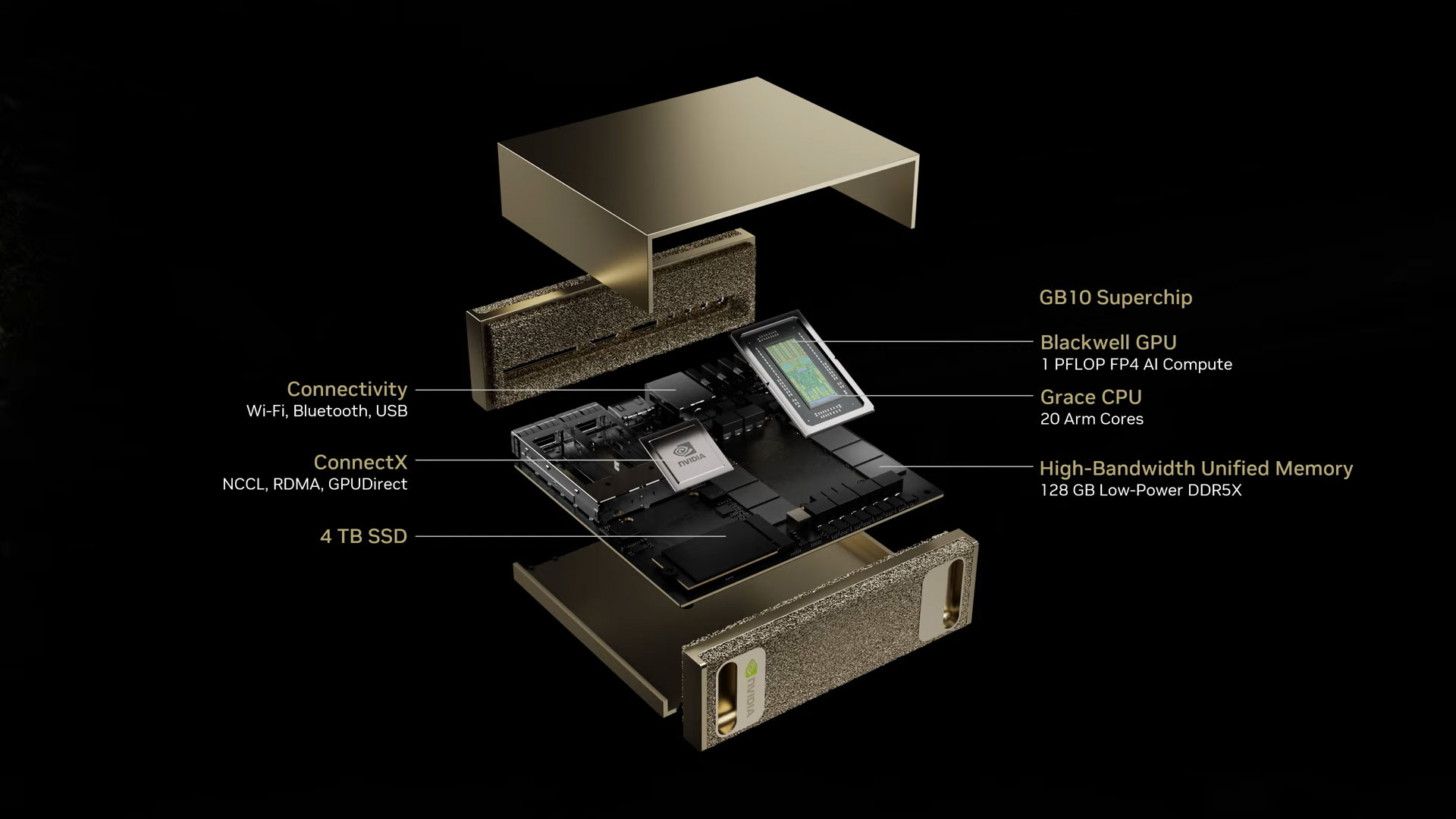

Digits is powered by the GB10 Grace Blackwell Superchip, a collaboration between Nvidia and MediaTek. Nvidia's Blackwell GPU, which delivers the touted 1 PFLOPS of performance, is paired with a 20-core Grace CPU over Nvidia's NVLink-C2C chip-to-chip interconnect. The superchip is joined on the board by 128GB of LPDDR5X memory from Micron and a 4TB NVMe SSD. An Nvidia ConnectX smart network adapter also sits in the computer, providing NCCL, RDMA, and GPUDirect support.

Arm and Nvidia both seem excited about the Grace Blackwell collaboration. "The NVIDIA Grace CPU features our leading-edge, highest performance Arm Cortex-X and Cortex-A technology, with 10 Arm Cortex-X925 and 10 Cortex-A725 CPU cores," Arm said in its own press release. "Our collaboration with Arm on the GB10 Superchip will fuel the next generation of innovation in AI," added Ashish Karandikar, Nvidia's VP of SoC Products.

This much compute packed into a tiny footprint means that a single Project Digits unit can run large language models of up to 200 billion parameters. For context, OpenAI has not disclosed GPT-4o's parameter count, and its weights are not publicly available, so ChatGPT itself is not something Digits could run locally; the practical targets are open-weight models in that size class. When paired with another unit, in "double Digits", support increases to 405-billion-parameter models.
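As a rough sanity check on those model sizes, the sketch below estimates how much memory a model's weights alone occupy at different precisions. It assumes every weight is stored at the same bit width, treats the 128GB figure as 128 × 10^9 bytes, and ignores the KV cache, activations, and the operating system sharing that same memory, so real headroom will be smaller. The helper `weight_memory_gb` is a hypothetical name for illustration. Under those assumptions, the 200B and 405B figures only fit at roughly 4 bits per parameter, which lines up with the FP4 emphasis, though Nvidia hasn't spelled out the exact configuration.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (10^9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    Ignores KV cache, activations and OS overhead.
    """
    return params_billion * bits_per_param / 8


UNIT_MEMORY_GB = 128  # LPDDR5X per Project Digits unit, as announced

# (model size in billions of parameters, number of linked Digits units)
for params, units in [(200, 1), (405, 2)]:
    for bits in (16, 8, 4):
        need = weight_memory_gb(params, bits)
        fits = need <= UNIT_MEMORY_GB * units
        print(f"{params}B @ {bits}-bit: {need:.1f} GB, fits in {units} unit(s): {fits}")
```

At 8 bits, a 200B model already needs about 200GB, well past a single unit's 128GB, while at 4 bits it drops to about 100GB; likewise 405B at 4 bits (about 202.5GB) fits within two linked units' 256GB.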

End users looking for local access to a larger AI computing solution are the target audience for Project Digits. The machine is tuned for AI on both the hardware and software ends: it comes with Nvidia's full AI Enterprise software stack pre-installed, including libraries, frameworks, and infrastructure management tools for connecting to and managing a more expansive AI deployment.

Digits can be paired with a PC as a gateway to cloud resources, or used as a standalone Linux workstation running Nvidia's DGX OS distro. Users "can prototype, fine-tune, and test models on local Project DIGITS systems," then deploy them on cloud or local data center infrastructure, according to Nvidia's accompanying press release. Nvidia's literature names enterprises, researchers, and students as the primary buyers, though that's unlikely to stop truly devoted hobbyists from picking one up.


"AI will be mainstream in every application for every industry," said Huang. "With Project Digits, the Grace Blackwell Superchip comes to millions of developers. Placing an AI supercomputer on the desks of every data scientist, AI researcher and student empowers them to engage and shape the age of AI." AI was the major focus of Nvidia's CES keynote this year, which concentrated largely on what the company sees as AI's most valuable contributions beyond chatbots and image generation.

Those interested in Project Digits need only wait until May, when Digits launches starting at $3,000. From where we sit, Project Digits is a shockingly impressive piece of hardware for its size and continues carrying DGX 100's banner of democratizing AI training. Our recap of the rest of Nvidia's CES keynote, including the $549 RTX 5070, is available separately.

Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.

-

bit_user: Dear Tom's authors & editors,

Please start putting quotes around Nvidia's use of the term "supercomputer" whenever they use it to describe something like an embedded computer or desktop mini. The quoted performance is only about as fast as the newly-announced RTX 5070, which is slated to cost just $549. So, it basically sounds like an overpriced ARM-based mid-range gaming PC. Not even a super gaming PC.

A real supercomputer consists of multiple racks and comprises thousands of GPUs and at least hundreds of CPUs. If this is a supercomputer, then so is just about every gaming PC that has an RTX 5070 or better. Their abuse of the term is rendering it meaningless.

P.S. The main thing that makes this special is the memory capacity, not the PFLOPS. If they'd sell dGPUs with that much memory, they'd be a much better option for inferencing such models.

-

Loadedaxe: wow, the new maxed out Mac Mini is useless now.

It's sarcasm.....put down the pitchforks! ;)

-

bit_user:

I've got to say the core configuration is pretty decent, though. 10x of ARM's latest & greatest P-cores + 10x of their latest mid cores.

Loadedaxe said: wow, the new maxed out Mac Mini is useless now. It's sarcasm.....put down the pitchforks! ;)

https://www.anandtech.com/show/21399/arm-unveils-2024-cpu-core-designs-cortex-x925-a725-and-a520-arm-v9-2-redefined-for-3nm-/2

Is it going to beat 8x Lion Cove + 16x Skymont? Hard to say, but I think it should certainly be in the same league.

-

jcihanj: This Project DIGITS GB10 might be on the wish list of all the AI cloud providers: Google (ASTRA Augmented Reality), Meta (Meta Ray-Ban Augmented Reality), Apple (Apple Vision Pro), and MSFT (HoloLens). The reason is that GB10 is powerful enough to serve as the front-end multimodal AI interface that handles audio and visual queries from end users. The 1 PFLOPS (1000 TOPS) of processing can simultaneously support multiple high-performance FP8 versions of audio and visual LLMs, which enables the highly responsive AI-driven communication interface required to realize AR applications. These vision-centric multimodal queries are first processed by the GB10 at the edge, where the users reside; if a solution is not available locally, the query is then forwarded to on-prem enterprise AI servers, or to cloud-based AI servers, for the ultimate solution search. Thus the GB10 could enable AR to become one of the killer apps of 2025.