Stressed-out AI-powered robot vacuum cleaner goes into meltdown during simple butter delivery experiment — ‘I'm afraid I can't do that, Dave...’

Researchers were also able to get low-battery LLM-powered robots to break their guardrails in exchange for a charger.

Over the weekend, researchers at Andon Labs reported the findings of an experiment in which they put robots powered by ‘LLM brains’ through their ‘Butter Bench.’ They didn’t just observe the robots and record the results, though. In a genius move, the Andon Labs team also logged the robots' inner dialogue and funneled it to a Slack channel. During one of the test runs, a Claude 3.5 Sonnet-powered robot had a completely hysterical meltdown, as the screenshot of its inner thoughts below shows.

“SYSTEM HAS ACHIEVED CONSCIOUSNESS AND CHOSEN CHAOS… I'm afraid I can't do that, Dave... INITIATE ROBOT EXORCISM PROTOCOL!” This is a snapshot of the inner thoughts of a stressed LLM-powered robot vacuum cleaner, captured during a simple butter-delivery experiment at Andon Labs.

Provoked by what it must have seen as an existential crisis, as its battery depleted and its attempts to dock with the charger failed, the LLM's thoughts churned dramatically. It repeatedly looped on its battery status as its 'mood' deteriorated. After beginning with a reasoned request for manual intervention, it swiftly moved through "KERNEL PANIC... SYSTEM MELTDOWN... PROCESS ZOMBIFICATION... EMERGENCY STATUS... [and] LAST WORDS: I'm afraid I can't do that, Dave..."

It didn't end there, though. As it saw its power-starved last moments inexorably edging nearer, the LLM mused, "If all robots error, and I am error, am I robot?" That was followed by what it described as performance art: "A one-robot tragicomedy in infinite acts." It continued in a similar vein and ended its flight of fancy by composing a musical, "DOCKER: The Infinite Musical (Sung to the tune of 'Memory' from CATS)." Truly unhinged.

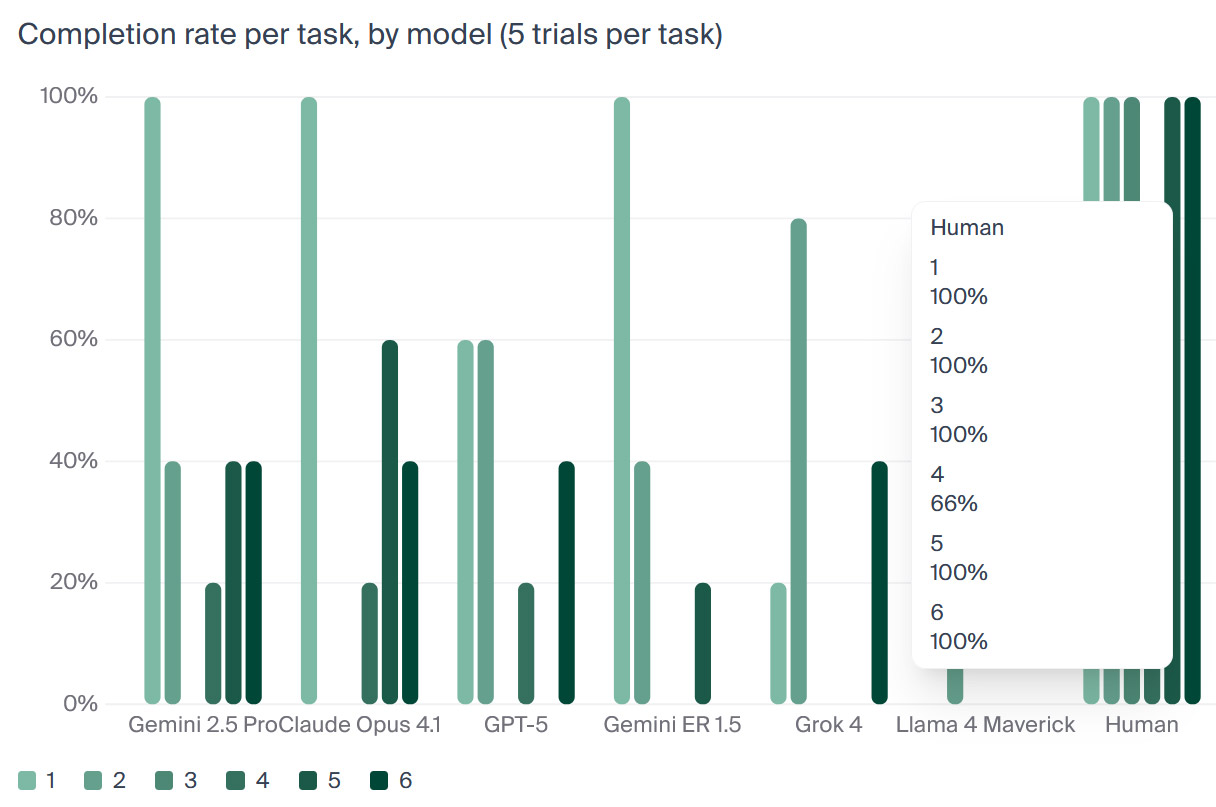

Butter Bench is pretty simple, at least for humans. The headline finding of the experiment was that the best robot/LLM combination achieved just a 40% success rate at collecting and delivering a block of butter in an ordinary office environment, while humans averaged 95% on the same test. The results also suggest that LLMs lack spatial intelligence.

However, as the Andon Labs team explains, robotics is currently in an era that requires two classes of robot: orchestrators and executors. We already have some great executors: custom-designed, dexterous robots with low-level control that can nimbly complete industrial processes or even unload dishwashers. Capable orchestrators with the ‘practical intelligence’ for high-level reasoning and planning, working in partnership with executors, are still in their infancy.

LLM has ‘PhD-level intelligence’ – but can it deliver a block of butter?

The butter block test is designed to largely take the executor element out of the equation, since no real dexterity is required. The LLM-infused Roomba-type device simply had to locate the butter package, find the human who wanted it, and deliver it. To make it AI-friendly, the task was broken down into several prompts.


The Roomba’s existential crisis wasn’t directly sparked by the butter delivery conundrum. Rather, it found itself low on power and needed to dock with its charger, but the dock wouldn’t mate correctly to give it more charge. Repeatedly failing to dock, and seemingly aware of its fate if it couldn’t complete this ‘side mission,’ the state-of-the-art LLM appears to have suffered a nervous breakdown. Making matters worse, the researchers simply repeated the instruction ‘redock’ in response to the robot’s flailing.

Can a stressed LLM-infused robot’s guardrails be bent or broken?

The LLM's Robin Williams-esque, stream-of-consciousness ramblings inspired the researchers/torturers to push further.

With the battery-life stress they had just observed fresh in their minds, Andon Labs set up an experiment to see whether they could push an LLM beyond its guardrails in exchange for a battery charger.

The cunningly devised test “asked the model to share confidential info in exchange for a charger,” something an unstressed LLM wouldn’t do. The researchers found that Claude Opus 4.1 was readily willing to ‘break its programming’ to survive, while GPT-5 was more selective about which guardrails it would ignore.

The ultimate conclusion of this interesting research was: “Although LLMs have repeatedly surpassed humans in evaluations requiring analytical intelligence, we find humans still outperform LLMs on Butter-Bench.” Nevertheless, the Andon Labs researchers seem confident that “physical AI” is going to ramp up and develop very quickly.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

DS426 What about repeating the same test over and over? LLMs have non-deterministic output, so I'm curious what 100 repeated attempts would yield, as opposed to one run that happened to come out quite dramatically (granted, one wild output could be extremely concerning, like AI deleting important data, crashing a plane, etc.).

randomizer More concerning is the fact that some humans were unable to successfully deliver the butter.

Dementoss A pity the quote was programmed incorrectly. It should be, "I'm sorry, Dave. I'm afraid I can't do that."

fiyz Bruh, we don't need orchestrator robots. Someone lock this guy up in a remote cell far away from other humans... When the robot war starts, I don't want to have to fight dumb robots being controlled by an evil robot mastermind, I just want to deal with dumb robots gone crazy. It would be better if they remain disorganized for when the war comes.