Nvidia-backed startup invents Ethernet memory pool to help power AI — claims it can add up to 18TB of DDR5 capacity for large-scale inference workloads and reduce per-token generation costs by up to 50%

Up to 18TB of extra DDR5 per server.

RAM capacity is a bottleneck for many AI applications, but adding memory to a host system is often complicated or outright impossible. Enfabrica, a startup backed by Nvidia, has developed Emfasys, a system that can add terabytes of DDR5 memory to any server over an Ethernet connection. The Ethernet-attached memory pool is designed for large-scale inference workloads and is currently being tested with select customers.

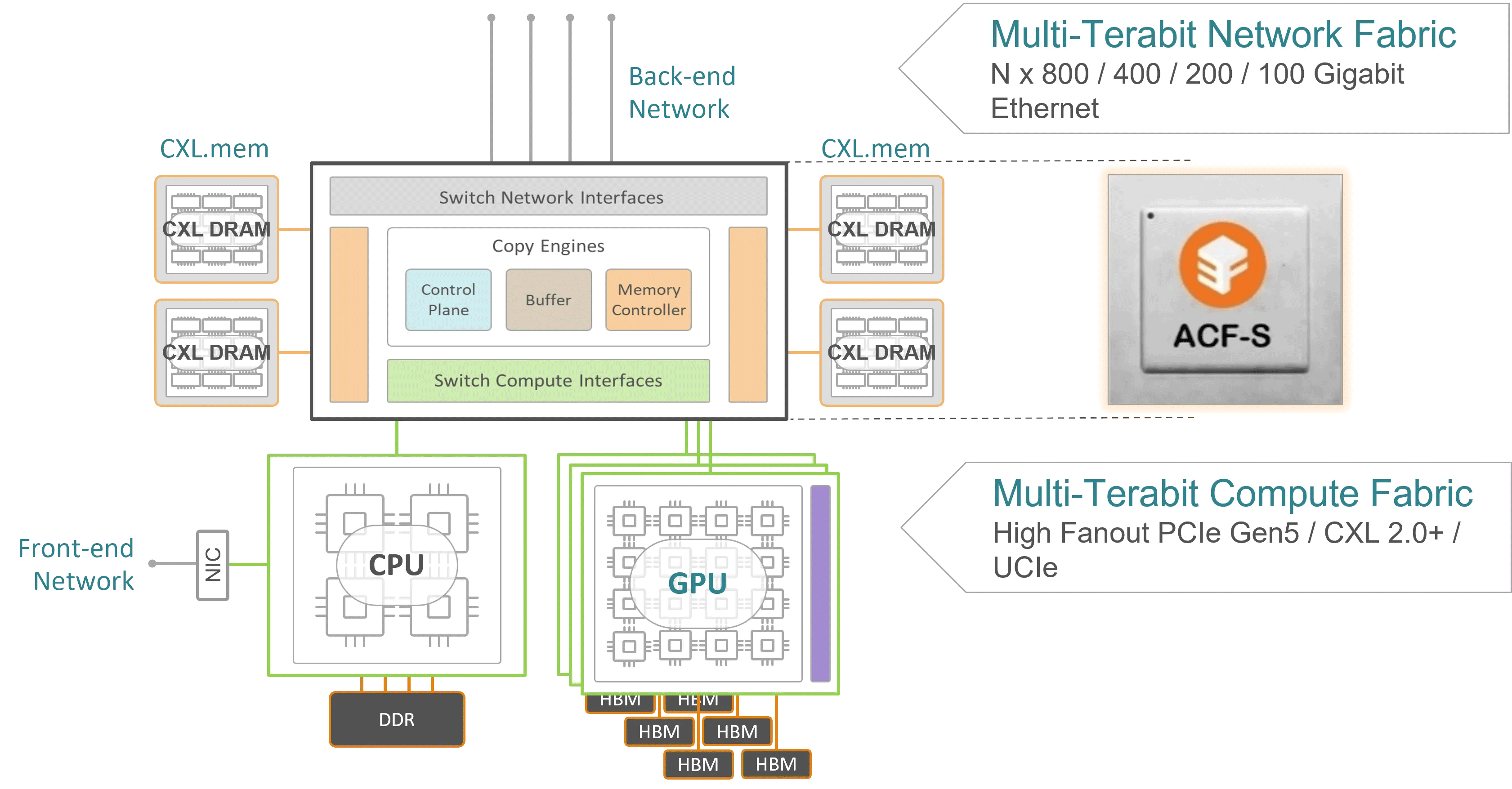

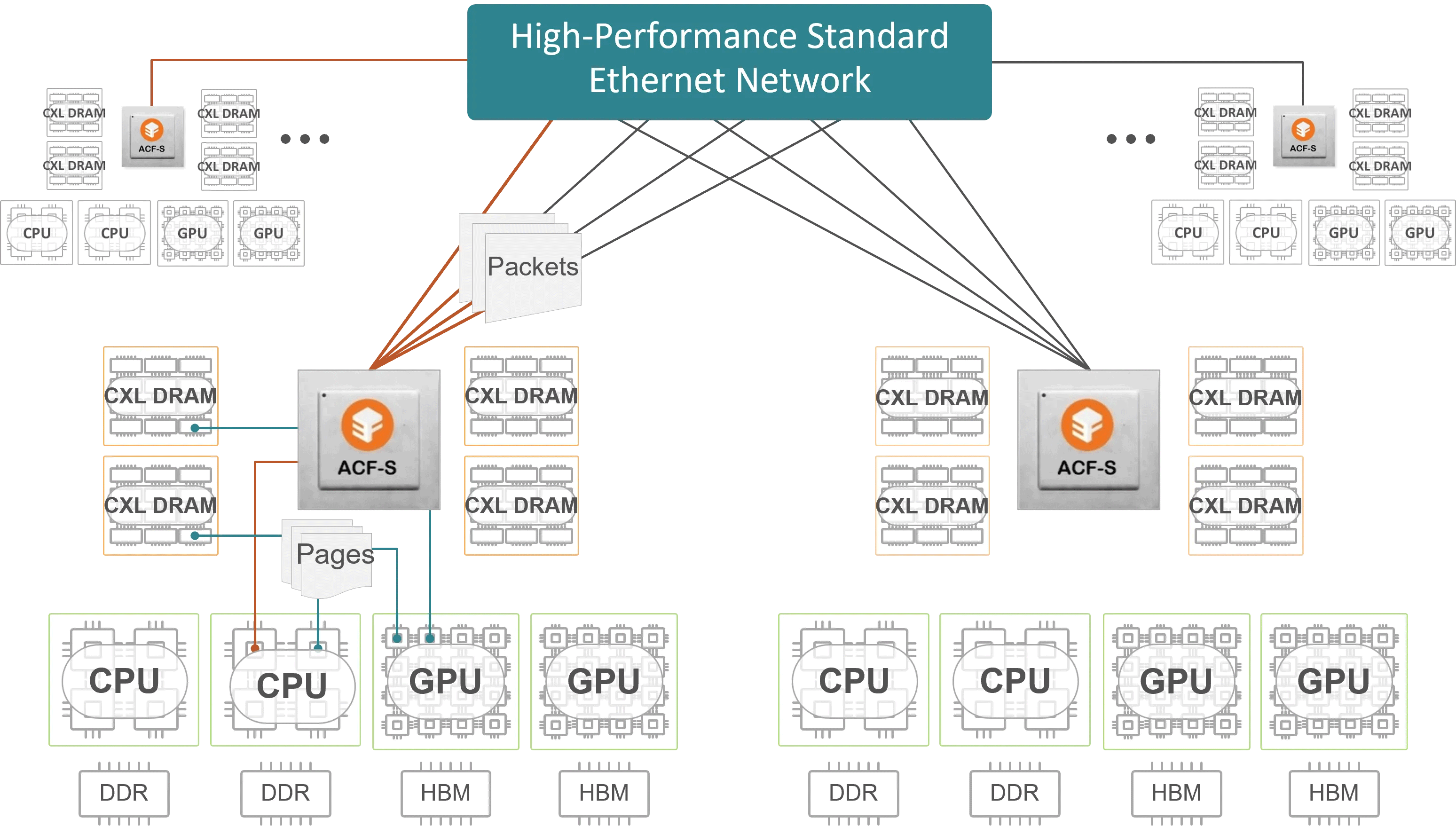

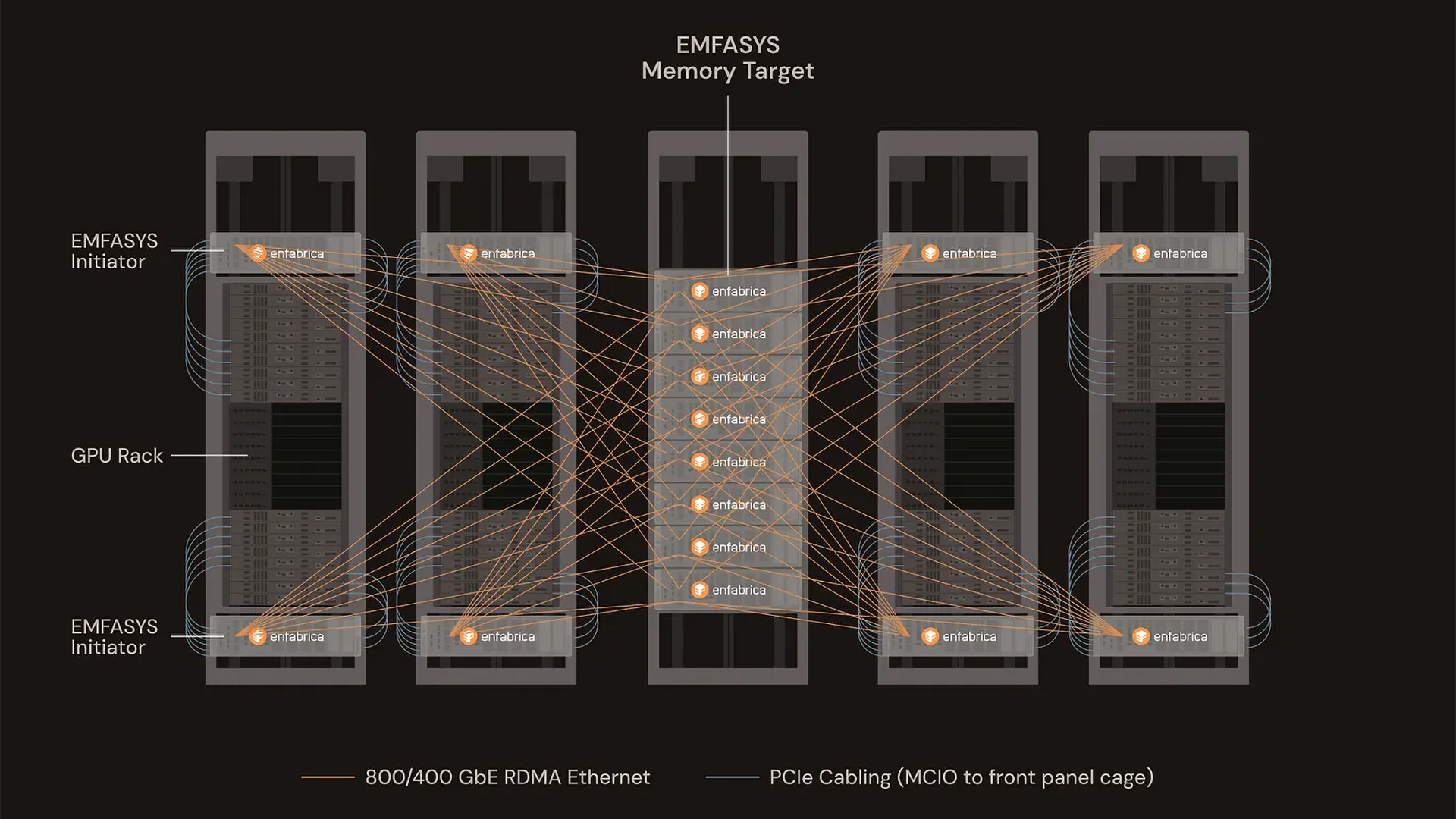

Enfabrica's Emfasys is a rack-compatible system based on the company's ACF-S SuperNIC, which offers 3.2 Tb/s (400 GB/s) of throughput. It attaches up to 18 TB of DDR5 memory over CXL. GPU servers in 4-way and 8-way configurations can access the memory pool through standard 400G or 800G Ethernet ports using Remote Direct Memory Access (RDMA) over Ethernet, so adding an Emfasys system to almost any AI server is meant to be seamless.
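As a quick sanity check on these figures, the short Python sketch below converts link rates from bits to bytes and estimates how long it would take to stream the full 18 TB pool at the SuperNIC's quoted rate. It assumes decimal units and an ideal link with no protocol overhead, which real transfers will not achieve. The function names are illustrative and do not come from Enfabrica.

```python
def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def seconds_to_stream(capacity_tb: float, link_gbps: float) -> float:
    """Idealized time to move `capacity_tb` terabytes over a `link_gbps` link.

    Assumption: decimal units (1 TB = 1000 GB) and zero protocol overhead.
    """
    return capacity_tb * 1000 / gbps_to_gbytes_per_s(link_gbps)


# Figures quoted in the article.
print(gbps_to_gbytes_per_s(3200))   # ACF-S SuperNIC, 3.2 Tb/s -> 400.0 GB/s
print(gbps_to_gbytes_per_s(400))    # one 400G Ethernet port   -> 50.0 GB/s
print(gbps_to_gbytes_per_s(800))    # one 800G Ethernet port   -> 100.0 GB/s
print(seconds_to_stream(18, 3200))  # full 18 TB pool          -> 45.0 s
```

Under these idealized assumptions, a single 400G port moves about 50 GB/s, so a server's share of the pool is bounded by its own Ethernet ports rather than by the SuperNIC's aggregate 3.2 Tb/s.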

Data moves between GPU servers and the Emfasys memory pool over RDMA, which allows zero-copy, low-latency memory access (measured in microseconds) without CPU intervention, using the CXL.mem protocol. To access the pool, servers need memory-tiering software, provided or enabled by Enfabrica, that among other things masks transfer delays. This software runs on existing hardware and OS environments and builds on widely adopted RDMA interfaces, so deploying the pool is fairly easy and does not require any major architectural changes.
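To illustrate the general idea of memory tiering, here is a minimal Python sketch of a two-tier store: a small "local" tier that evicts its least recently used entries to a larger "remote" tier and fetches them back on demand. This is a toy model under stated assumptions, not Enfabrica's software. The `TieredStore` class and its methods are hypothetical, the remote pool is simulated with an in-process dictionary rather than RDMA, and the LRU policy is just one possible choice.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store (hypothetical): a small local tier backed by a remote pool."""

    def __init__(self, local_capacity: int):
        self.local_capacity = local_capacity
        self.local = OrderedDict()  # stands in for GPU/host memory, kept in LRU order
        self.remote = {}            # stands in for the Ethernet-attached pool
        self.remote_reads = 0       # counts fetches that had to go to the remote tier

    def put(self, key, value):
        """Store a value in the local tier, spilling cold entries to the remote tier."""
        self.remote.pop(key, None)  # drop any stale remote copy
        self.local[key] = value
        self.local.move_to_end(key)
        self._evict()

    def get(self, key):
        """Return a value, promoting it from the remote tier if needed."""
        if key in self.local:
            self.local.move_to_end(key)  # mark as most recently used
            return self.local[key]
        value = self.remote.pop(key)     # raises KeyError if the key is unknown
        self.remote_reads += 1
        self.local[key] = value
        self._evict()
        return value

    def _evict(self):
        """Move least recently used entries to the remote tier until within capacity."""
        while len(self.local) > self.local_capacity:
            old_key, old_value = self.local.popitem(last=False)
            self.remote[old_key] = old_value


store = TieredStore(local_capacity=2)
for name in ("ctx_a", "ctx_b", "ctx_c"):
    store.put(name, f"data for {name}")

print(sorted(store.remote))  # ['ctx_a']  (oldest entry was spilled)
print(store.get("ctx_a"))    # data for ctx_a  (fetched back from the remote tier)
print(store.remote_reads)    # 1
```

A real tiering layer would also try to hide the remote fetch latency, for example by prefetching data before it is needed, which this sketch does not attempt.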

Enfabrica's Emfasys is meant to address the growing memory requirements of modern AI workloads that involve increasingly long prompts, large context windows, or multiple agents. These workloads place significant pressure on GPU-attached HBM, which is both limited and expensive. With an external memory pool, data center operators can flexibly expand the memory available to an individual AI server in exactly these scenarios.

By using the Emfasys memory pool, owners of AI servers can improve efficiency: compute resources are better utilized, expensive GPU memory is not wasted, and overall infrastructure costs can be reduced. According to Enfabrica, this setup can lower the cost per AI-generated token by as much as 50% in high-turn and long-context scenarios. Token generation tasks can also be distributed across servers more evenly, which helps relieve bottlenecks.

"AI inference has a memory bandwidth-scaling problem and a memory margin-stacking problem," said Rochan Sankar, CEO of Enfabrica. "As inference gets more agentic versus conversational, more retentive versus forgetful, the current ways of scaling memory access won’t hold. We built Emfasys to create an elastic, rack-scale AI memory fabric and solve these challenges in a way that has not been done before. Customers are excited to partner with us to build a far more scalable memory movement architecture for their GenAI workloads and drive even better token economics."

The Emfasys AI memory fabric system and the 3.2 Tb/s ACF SuperNIC chip are currently being evaluated and tested by select customers. It is unclear when general availability is planned, if at all.


Enfabrica actively serves as an advisory member of the Ultra Ethernet Consortium (UEC) and contributes to the Ultra Accelerator Link (UALink) Consortium, which gives an idea of where the company is heading.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user Can't they just use CXL without layering it atop Ethernet? Ethernet certainly scales better, but it burns a lot of power and adds nontrivial amounts of latency (as @rluker5 mentioned).