Nvidia's upcoming ARM-based N1X SoC leaks again, this time on FurMark — modest benchmark score indicates early engineering sample but confirms Windows evaluation

N1X is coming along nicely.

Nvidia’s upcoming N1X SoC has made a fresh appearance—this time in FurMark’s benchmark database—offering a first look at the chip’s performance running natively on Windows 11. While the numbers will certainly not grab headlines, they provide valuable insight into where the chip currently stands in its development cycle.

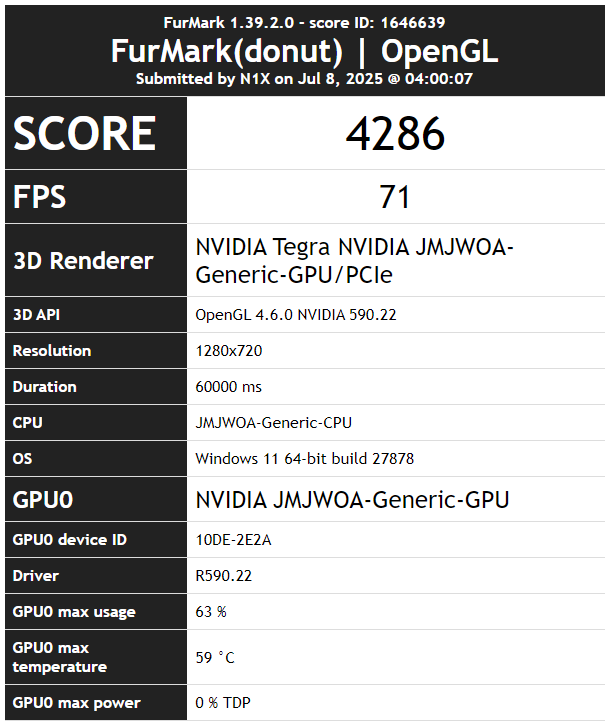

Identified as “JMJWOA” by FurMark, the N1X reportedly scored 4,286 points in the 720p stress test, averaging 71 FPS. That's not even as good as some RTX 2060 scores, despite the N1X reportedly featuring 6,144 CUDA cores, the same count as the RTX 5070. On the surface, that may seem like underperformance, especially considering the sizable core count, but there’s more nuance to the situation.
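The reported score and average frame rate can be cross-checked with some quick arithmetic. The sketch below assumes the score is simply the number of frames rendered over a 60-second run; that run length is an assumption, not something the listing states, and the names `ASSUMED_RUN_SECONDS` and `implied_fps` are made up for illustration.

```python
# Rough consistency check between the reported score and average FPS.
# Assumption (not stated in the article): the score counts frames
# rendered during a 60-second run.
ASSUMED_RUN_SECONDS = 60  # hypothetical run length


def implied_fps(score: int, run_seconds: float = ASSUMED_RUN_SECONDS) -> float:
    """Average FPS implied by a frame-count score over the run."""
    return score / run_seconds


reported_score = 4286   # points, from the leaked listing
reported_avg_fps = 71   # FPS, from the leaked listing

fps = implied_fps(reported_score)
print(f"implied average: {fps:.1f} FPS (reported: {reported_avg_fps} FPS)")
```

Under that assumption the two figures line up: 4,286 frames over 60 seconds works out to roughly 71.4 FPS, in line with the reported 71 FPS average.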

This is clearly not a finished product. Nvidia’s N1X is expected to debut in 2026, and the version tested here is almost certainly an early engineering sample. The chip ran at a modest 120W power budget on pre-release 590.22 drivers (a branch still in transition away from legacy GPU architectures like Kepler and Maxwell), so it’s unrealistic to expect full-fledged performance at this point.

Moreover, FurMark itself is more of a stress test than a benchmark, often throttled or deprioritized by power and thermal management systems, especially in pre-release silicon. In this run, the N1X reportedly only hit 63% utilization and 59°C, possibly indicating built-in protections that prevent full ramp-up under synthetic loads. Whether it’s firmware, BIOS restrictions, or driver immaturity, this result doesn’t reflect the chip’s actual, real-world potential.

That being said, the bigger takeaway here is the fact that Nvidia has the N1X running on Windows 11 at all. That signals a critical step in software enablement, and the ongoing validation process across operating systems is a prerequisite for broader deployment. Nvidia has been aggressive in positioning the N1X as a versatile compute platform, targeting AI and workstation workloads rather than raw gaming performance. After all, every leak points toward it being a cut-down GB10.

With a year to go before release, the N1X’s FurMark outing should be seen less as a definitive performance indicator and more as a milestone in its evolving development journey. If anything, it shows Nvidia is steadily laying the groundwork for its most ambitious ARM-based SoC to date, something that will only stir up the competition in this market space.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


Kindaian

Interesting. I will look forward to this, when there is CUDA support for it and laptops running it with Linux. Not interested at all in Windows.

bit_user

The article said:
> That's not even as good as some RTX 2060 scores, despite the N1X reportedly featuring 6,144 CUDA cores, more than the RTX 5070.
>
> ...
>
> Running at a modest 120W power budget ...

There's your answer - or, at least a big part of it. Another big part might be the difference in memory bandwidth.

I found a claim that DGX Spark runs at 170 W, so that's probably the upper end of what the N1X would use:

https://www.reddit.com/r/hardware/comments/1jeghvg/comment/mij5pn6/

freebyrd26

Color me less than impressed... this was originally supposed to be a 2025 product. Now what, mid to late 2026?

Although, Strix Halo successor will probably be a year later than this, so it will probably sell.

bit_user

baboma said:
> >Strix Halo successor will probably be a year later than this, so it will probably sell.
>
> Medusa Halo is rumored to be cancelled. Judging from Strix Halo's lack of design wins, not a surprise.
>
> https://www.techpowerup.com/339056/intel-skips-npu-upgrade-for-arrow-lake-refresh-amd-cancels-medusa-halo-in-latest-rumors

Another way to read that is they decided to go with a newer & more powerful iGPU. Medusa Halo was rumored still to use RDNA 3.5.

Kindaian

baboma said:
> >...cuda support for it and laptops running it with linux.
>
> N1X will run on Linux and WoA.
>
> https://www.laptopmag.com/laptops/windows-laptops/nvidia-n1x-apu-benchmarks
>
> "The Nvidia N1x APU was tested on an HP unit based on the motherboard specification for an HP 8EA3. The test unit was running Linux AArch64 and featured 128GB of system memory, confirming the N1x as a 20-thread, 2.81 GHz ARM processor."
>
> CUDA is supported in Linux
>
> https://docs.nvidia.com/cuda/cuda-installation-guide-linux/
>
> >Strix Halo successor will probably be a year later than this, so it will probably sell.
>
> Medusa Halo is rumored to be cancelled. Judging from Strix Halo's lack of design wins, not a surprise.
>
> https://www.techpowerup.com/339056/intel-skips-npu-upgrade-for-arrow-lake-refresh-amd-cancels-medusa-halo-in-latest-rumors

CUDA requires drivers for each of the specific cores. Just because it has CUDA cores, doesn't mean it will have support for the CUDA software out of the box. None of the news I've skimmed on the internet had any info about that. So we will wait and see "soon" :)

bit_user

Kindaian said:
> CUDA requires drivers for each of the specific cores. Just because it has CUDA cores, doesn't mean it will have support for the CUDA software out of the box. None of the news I've skimmed on the internet had any info about that. So we will wait and see "soon" :)

Everything Nvidia ever shipped in the last 15+ years supported CUDA, even the handful of graphics cards that didn't officially support it (GT 1030 being one example, I think). That includes all of their Jetson boards.

So, the chance that they won't support CUDA on these is basically nil. However, you're perhaps aware that a lot of the deep learning horsepower is probably contained in NVDLA blocks, and those aren't regular CUDA devices, as far as I know. They should still be supported via TensorRT, as well as probably some other deep learning frameworks, but they might not be open for you to program, directly.

artk2219

ezst036 said:
> Seems slower than the Apple M1/2/3/4 ARM chips.

Probably not, but even if it is, it doesn't really matter, as the only way to get an Apple CPU is to buy an Apple device and get stuck with a whole different set of limitations.