Pliops expands AI's context windows with 3D NAND-based accelerator – can accelerate certain inference workflows by up to eight times

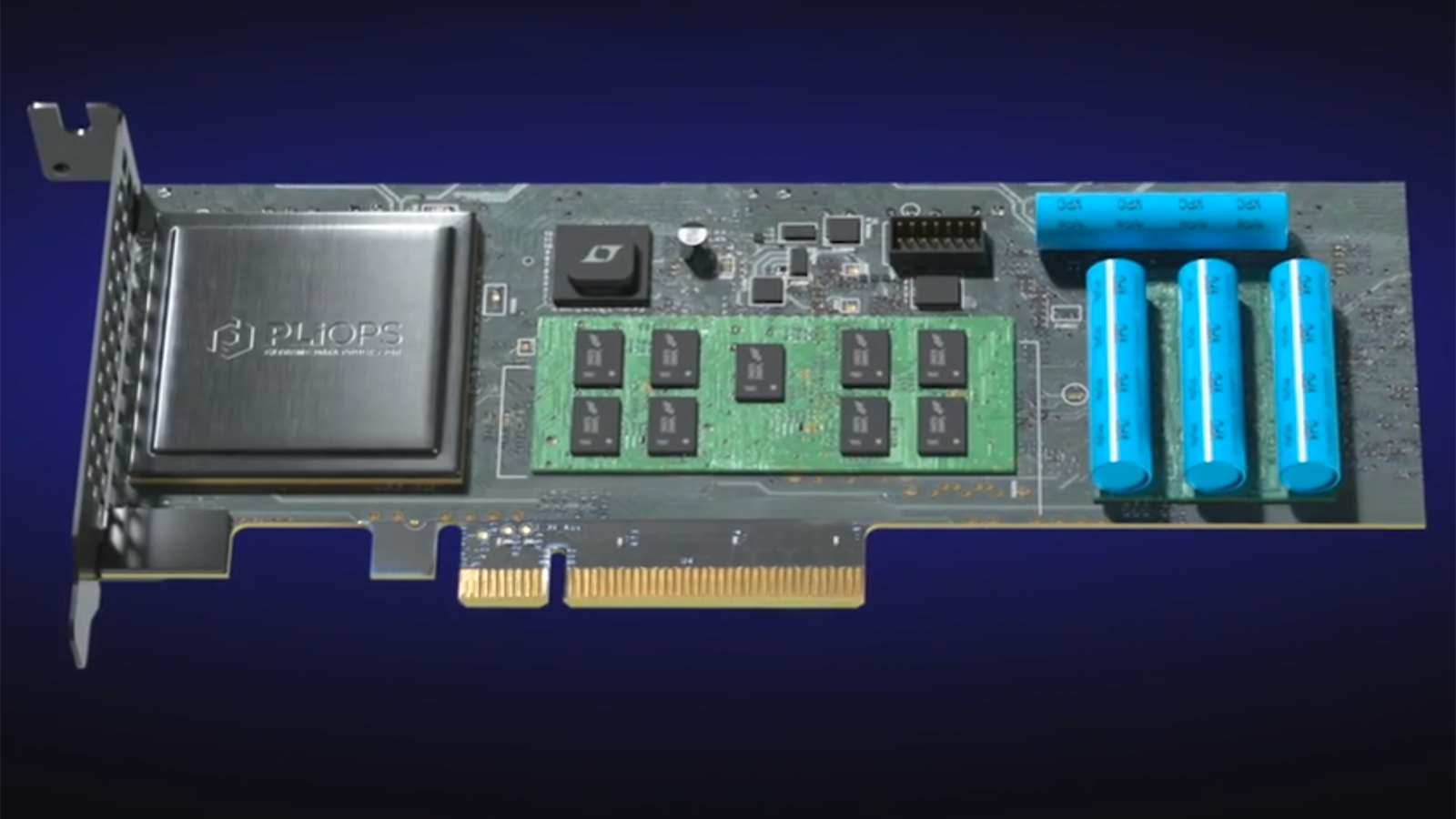

The PCIe add-in card functions as a memory tier for GPU servers

As language models grow in complexity and their context windows expand, GPU-attached high-bandwidth memory (HBM) becomes a bottleneck, forcing systems to repeatedly recalculate data that no longer fits in it. Pliops addresses this challenge with its XDP LightningAI device and FusIOnX software, which store precomputed context on fast SSDs and retrieve it when needed instead of recomputing it, reports Blocks and Files. The company says that its solution enables 'nearly' HBM speeds and can accelerate certain inference workflows by up to eight times.
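The context data in question is the model's key-value (KV) cache, and its size can be estimated from the model's shape: every layer stores one key and one value vector per KV head for every token. The sketch below applies that standard formula. The layer count, KV-head count, head dimension and FP16 precision are assumptions loosely modeled on a Llama-3-70B-class model, not figures from Pliops.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size of a decoder-only transformer.

    The factor 2 accounts for storing both a key and a value vector
    per layer, per KV head, per token, per sequence in the batch.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Assumed model shape: 80 layers, 8 KV heads (grouped-query attention),
# head_dim 128, FP16 (2 bytes per element).
per_token = kv_cache_bytes(80, 8, 128, seq_len=1)
full_context = kv_cache_bytes(80, 8, 128, seq_len=128 * 1024)

print(per_token)             # 327680 bytes (320 KiB) per token
print(full_context / 2**30)  # 40.0 GiB for one 128K-token sequence
```

Under these assumptions a single 128K-token conversation needs about 40 GiB of KV cache, so a handful of concurrent long-context users can quickly exhaust HBM that must also hold the model weights.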

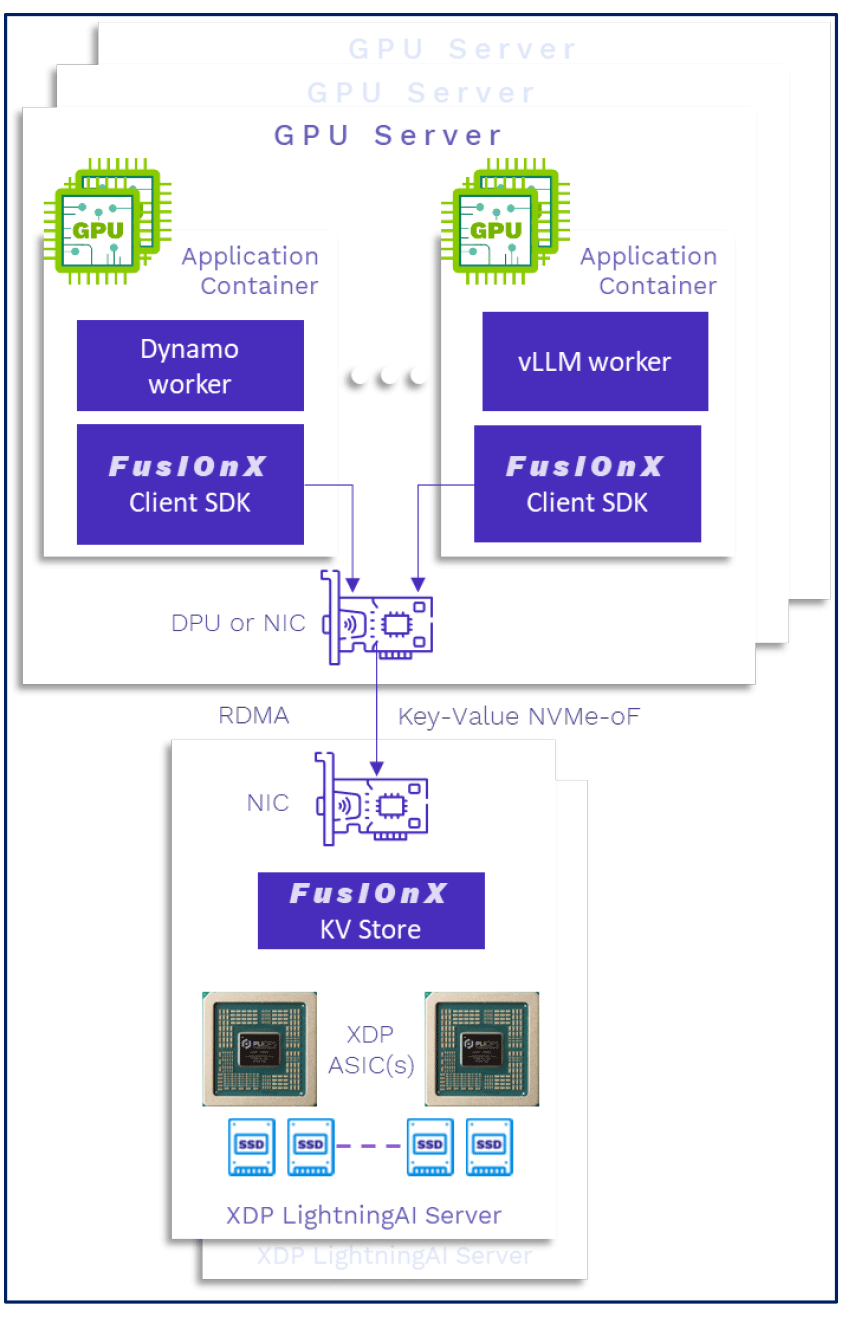

During inference, language models generate and reference key-value (KV) data to manage context and maintain coherence across long sequences. Normally, this data is stored in the GPU's onboard memory. When the active context becomes too large, however, older entries are discarded, and if they are needed again the system has to recompute them, which increases latency and GPU load. To eliminate these redundant operations, Pliops has introduced a new memory tier enabled by its XDP LightningAI, a PCIe device that manages the movement of key-value data between GPUs and tens of high-performance SSDs.
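The difference between discarding and offloading evicted entries can be illustrated with a toy two-tier cache. This is a hypothetical sketch, not Pliops' FusIOnX logic: integer block IDs stand in for KV tensors, the "HBM" tier is a small LRU cache, and the optional second tier is an unbounded dictionary playing the role of the SSD pool. `TieredKVCache` and `run` are illustrative names only.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy KV cache: a small LRU "HBM" tier plus an optional offload tier.

    Hypothetical sketch: integer block IDs stand in for real KV tensors, and a
    "recompute" is counted whenever a block must be regenerated from scratch.
    """

    def __init__(self, hbm_capacity: int, use_offload_tier: bool) -> None:
        self.hbm_capacity = hbm_capacity
        self.use_offload_tier = use_offload_tier
        self.hbm = OrderedDict()  # block_id -> KV data, least recently used first
        self.ssd = {}             # unbounded stand-in for the SSD-backed tier
        self.hbm_hits = 0
        self.ssd_hits = 0
        self.recomputes = 0

    def _insert_hbm(self, block_id: int, kv: str) -> None:
        self.hbm[block_id] = kv
        self.hbm.move_to_end(block_id)
        if len(self.hbm) > self.hbm_capacity:
            old_id, old_kv = self.hbm.popitem(last=False)  # evict the LRU block
            if self.use_offload_tier:
                self.ssd[old_id] = old_kv  # spill it instead of discarding it

    def get(self, block_id: int) -> str:
        if block_id in self.hbm:
            self.hbm_hits += 1
            self.hbm.move_to_end(block_id)  # mark as most recently used
            return self.hbm[block_id]
        if block_id in self.ssd:
            self.ssd_hits += 1
            kv = self.ssd.pop(block_id)  # read back from the slower tier
        else:
            self.recomputes += 1
            kv = f"kv-{block_id}"  # stand-in for rerunning prefill
        self._insert_hbm(block_id, kv)
        return kv


def run(use_offload_tier: bool) -> TieredKVCache:
    cache = TieredKVCache(hbm_capacity=2, use_offload_tier=use_offload_tier)
    # Access pattern that revisits earlier context blocks 0..3.
    for block_id in [0, 1, 2, 3, 0, 1, 2, 3]:
        cache.get(block_id)
    return cache


print(run(False).recomputes)  # 8
print(run(True).recomputes)   # 4
```

With room for only two blocks in "HBM", revisiting four blocks forces eight recomputations when evicted entries are thrown away, but only the four initial ones when evicted entries are spilled to the second tier and read back from there.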

The card uses a custom-designed XDP ASIC and the FusIOnX software stack to handle read/write operations efficiently, and it integrates with AI serving frameworks such as vLLM and Nvidia Dynamo. The card is GPU-agnostic and supports both standalone and multi-GPU server setups. In multi-node deployments, it also handles routing and sharing of cached data across different inference jobs or users, enabling persistent context reuse at scale.
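Sharing cached context across jobs or users requires a way to recognize that two requests begin with the same tokens. One common approach, similar in spirit to vLLM's automatic prefix caching, is to hash fixed-size token blocks in a chain so that each hash identifies the entire prefix up to that block. The sketch below assumes that scheme purely for illustration; Pliops has not said this is how its routing works, and `SharedPrefixStore`, `block_hashes` and the block size of 4 tokens are hypothetical.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per KV block (hypothetical; kept tiny for the example)


def block_hashes(token_ids: list[int], block_size: int = BLOCK_SIZE) -> list[str]:
    """Chain-hash each full block so that a hash identifies the whole prefix."""
    hashes: list[str] = []
    parent = ""
    full = len(token_ids) - len(token_ids) % block_size  # ignore a partial tail block
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        payload = parent + ":" + ",".join(map(str, block))
        h = hashlib.sha256(payload.encode()).hexdigest()
        hashes.append(h)
        parent = h  # the next block's hash depends on everything before it
    return hashes


class SharedPrefixStore:
    """Hypothetical cluster-wide KV store keyed by chained prefix hash."""

    def __init__(self) -> None:
        self.blocks: dict[str, str] = {}

    def publish(self, token_ids: list[int]) -> None:
        """Record KV data (a placeholder string here) for every full block."""
        for h in block_hashes(token_ids):
            self.blocks.setdefault(h, f"kv-for-{h[:8]}")

    def cached_prefix_tokens(self, token_ids: list[int]) -> int:
        """Return how many leading tokens already have KV data in the store."""
        hit = 0
        for h in block_hashes(token_ids):
            if h not in self.blocks:
                break
            hit += BLOCK_SIZE
        return hit


system_prompt = list(range(100, 108))  # 8 tokens shared by every user
store = SharedPrefixStore()
store.publish(system_prompt + [1, 2, 3, 4])                      # user A's request
print(store.cached_prefix_tokens(system_prompt + [9, 9, 9, 9]))  # 8
```

User B's request reuses the eight cached system-prompt tokens and only needs fresh computation for its own final block. Chaining the hashes means a block is reused only when everything before it also matches, which keeps reuse correct for causal attention.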

This architecture allows AI inference systems to support longer contexts, higher concurrency, and more efficient resource utilization without adding GPU hardware. Instead of expanding HBM capacity through additional GPUs (keep in mind that the maximum scale-up world size, or the number of GPUs directly connected to each other, is limited), Pliops enables systems to retain more context history at a lower cost with nearly the same performance, according to the company. As a result, it becomes possible to serve large models with stable latency, even under demanding conditions, while reducing the total cost of ownership of AI infrastructure.

On paper, even 24 high-performance PCIe 5.0 SSDs provide only 336 GB/s of aggregate bandwidth, roughly a tenth of the 3.35 TB/s of memory bandwidth offered by an H100. Nevertheless, no longer having to repeatedly recalculate data delivers significant performance gains compared to systems without an XDP LightningAI device and FusIOnX software.
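The bandwidth comparison is simple arithmetic. The per-drive figure of about 14 GB/s is implied by 336 GB/s spread across 24 drives, and the 10 GB cached-context size used for the read-time estimate is an arbitrary, hypothetical example rather than a Pliops figure.

```python
SSD_COUNT = 24
SSD_READ_GBPS = 14.0     # per-drive read bandwidth implied by 336 GB/s / 24 drives
H100_HBM_GBPS = 3350.0   # 3.35 TB/s HBM bandwidth quoted for the H100
KV_CONTEXT_GB = 10.0     # hypothetical size of one cached context

aggregate_gbps = SSD_COUNT * SSD_READ_GBPS
ratio = H100_HBM_GBPS / aggregate_gbps
read_ms = KV_CONTEXT_GB / aggregate_gbps * 1000  # time to stream it back from SSDs

print(f"{aggregate_gbps:.0f} GB/s aggregate")  # 336 GB/s aggregate
print(f"HBM is ~{ratio:.1f}x faster")          # HBM is ~10.0x faster
print(f"{read_ms:.1f} ms to read back")        # 29.8 ms to read back
```

Even at roughly a tenth of HBM bandwidth, a cached context of that assumed size comes back in tens of milliseconds, which is the trade-off described above: slower reads in exchange for skipping recomputation.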

According to Pliops, its solution boosts the throughput of a typical vLLM deployment by 2.5 to eight times, allowing the system to handle more user queries per second without increasing GPU hardware requirements.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.