AMD announces MI325X AI accelerator, reveals MI350 and MI400 plans at Computex

More accelerators, more AI.

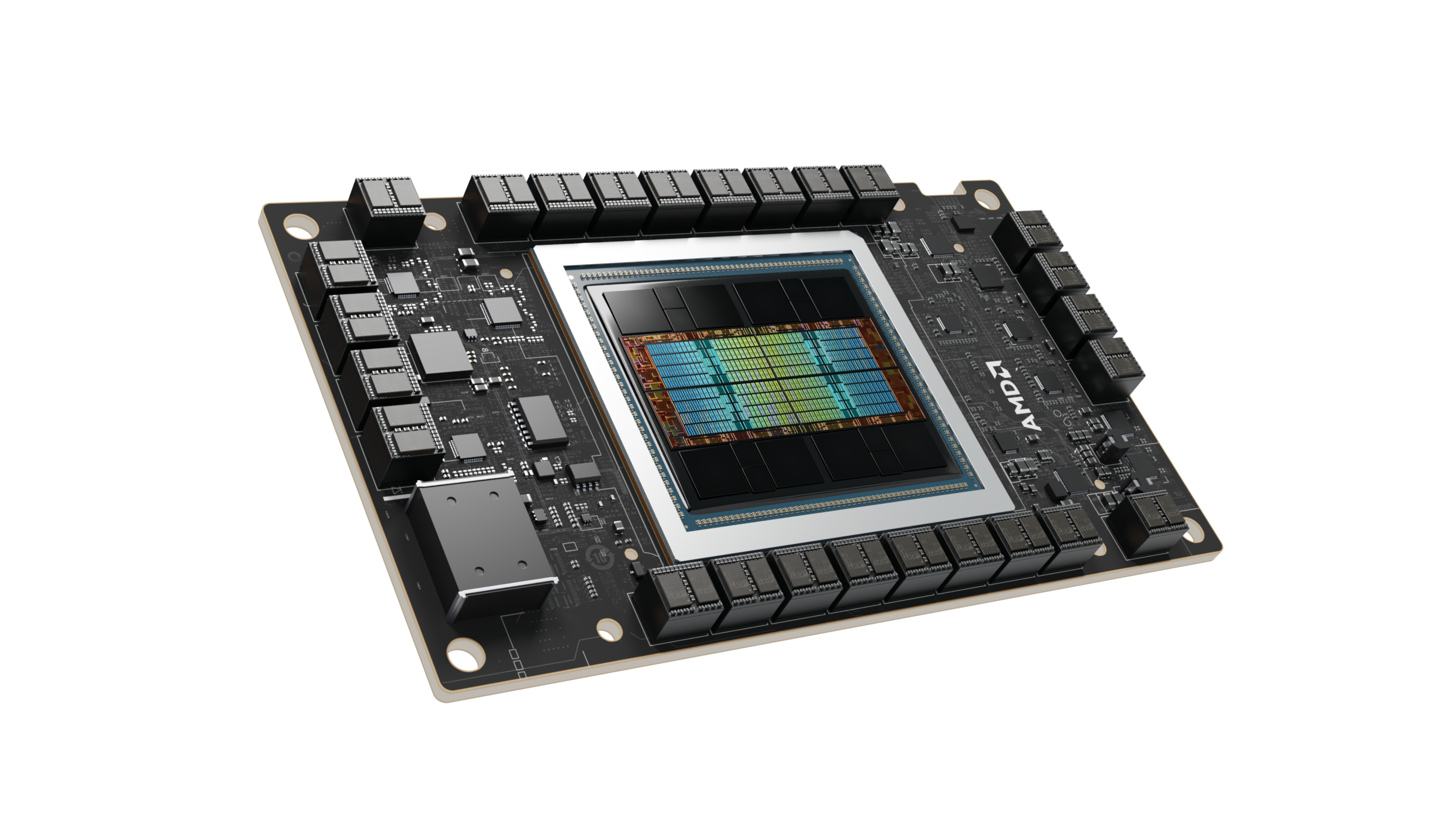

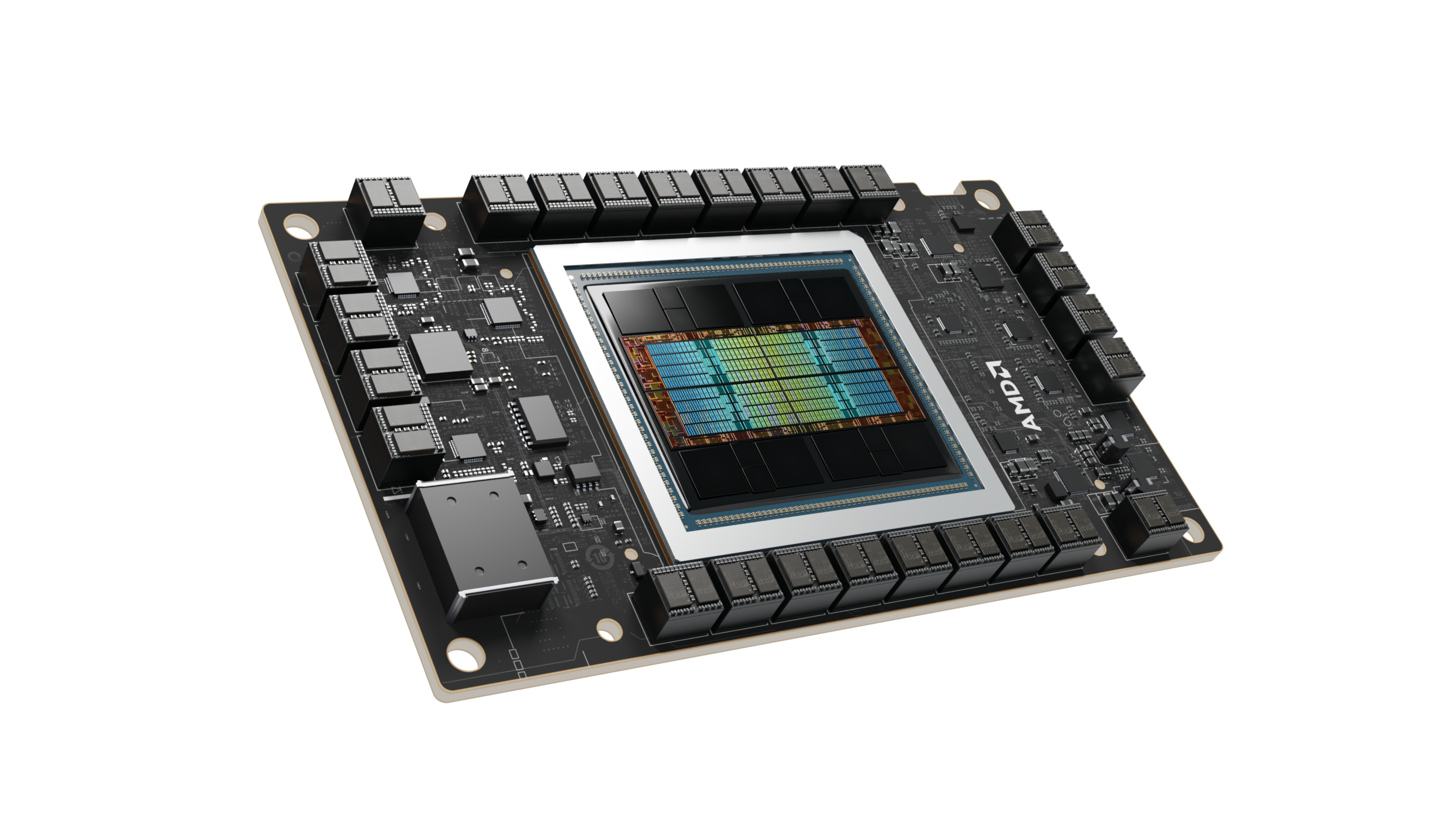

AMD kicked off Computex with several significant announcements: its new Instinct MI325X accelerators (pictured), which will be available in the fourth quarter of 2024; the upcoming Instinct MI350 series, powered by the CDNA 4 architecture, which will launch next year; and the all-new CDNA 'Next'-based Instinct MI400-series products set to come out in 2026.

Perhaps the most significant announcement is that AMD's updated product roadmap commits to an annual release schedule, promising continuous increases in AI and HPC performance through enhanced instruction sets and higher memory capacity and bandwidth.

The AMD Instinct MI325X, set for release in Q4 2024, will feature up to 288GB of HBM3E memory with 6 TB/s of memory bandwidth. According to AMD, the MI325X will deliver 1.3x higher inference performance and token generation than Nvidia's H100. Keep in mind, however, that the Instinct MI325X will be competing against Nvidia's H200 or even B100/B200 accelerators.

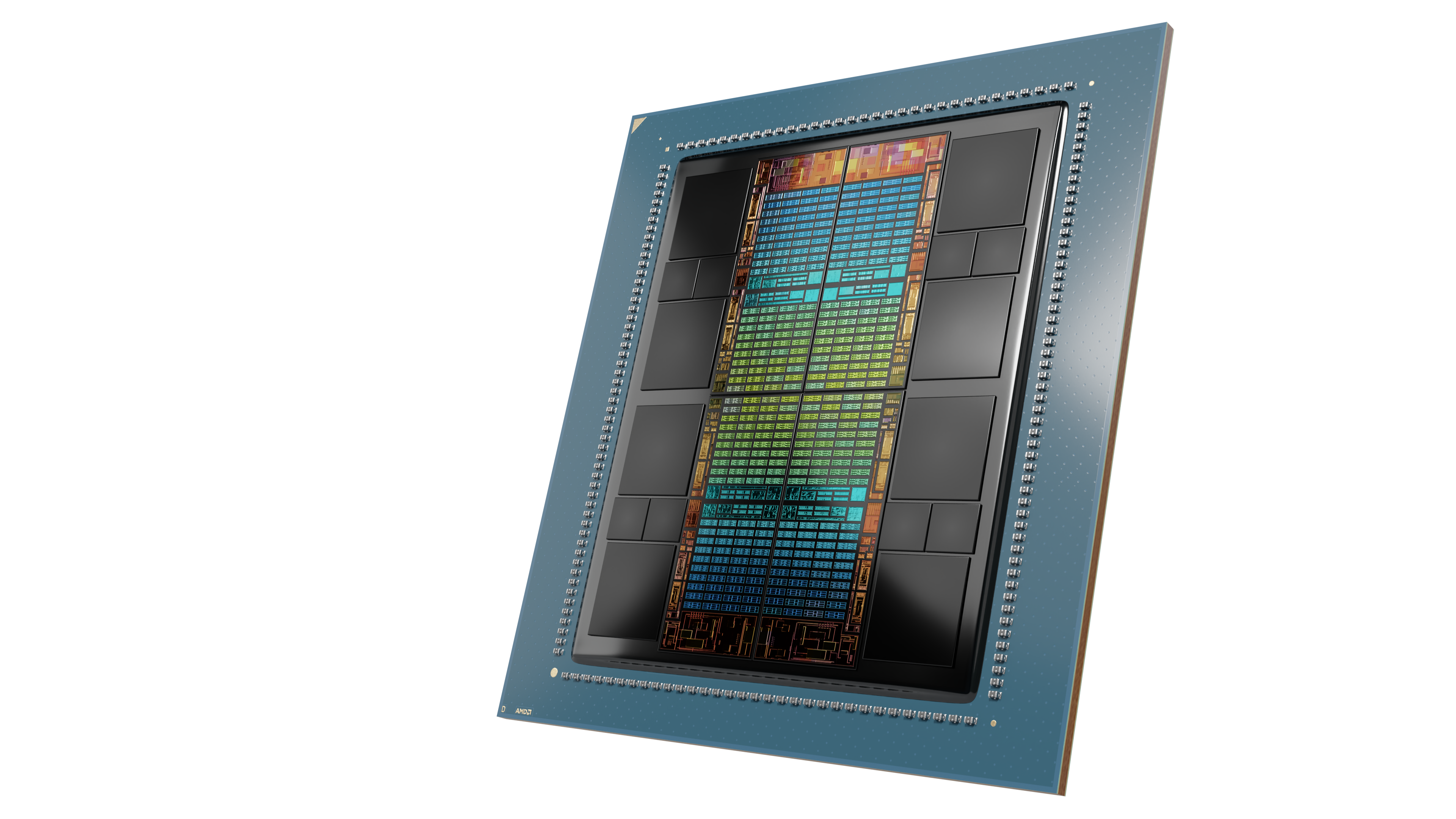

Following this, the MI350 series, built on the AMD CDNA 4 architecture, is expected in 2025. This series promises a 35-fold increase in AI inference performance over the current MI300 series. The Instinct MI350 series will use a 3nm-class process technology and support the new FP4 and FP6 data formats, along with new instructions, to boost AI performance and efficiency.
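Lower-precision formats such as FP4 and FP6 matter largely because they shrink the memory each model parameter occupies. The Python sketch below is illustrative only: it assumes a hypothetical 70-billion-parameter model, counts weights alone (no KV cache, activations, or any per-block scaling metadata a given FP4/FP6 scheme may carry), and compares the result against the 288GB AMD quotes for the MI325X, using decimal gigabytes.

```python
# Bytes needed to store one parameter in each format (weights only;
# ignores KV cache, activations, and any per-block scale metadata).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP6": 0.75, "FP4": 0.5}


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model (illustrative assumption)
    hbm_gb = 288   # MI325X HBM3E capacity quoted by AMD
    for fmt in BYTES_PER_PARAM:
        gb = weight_footprint_gb(params, fmt)
        print(f"{fmt}: {gb:.1f} GB of weights, {gb / hbm_gb:.0%} of {hbm_gb} GB")
```

Each halving of bits per parameter halves the weight footprint, which frees capacity for larger models or bigger batches on the same accelerator.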

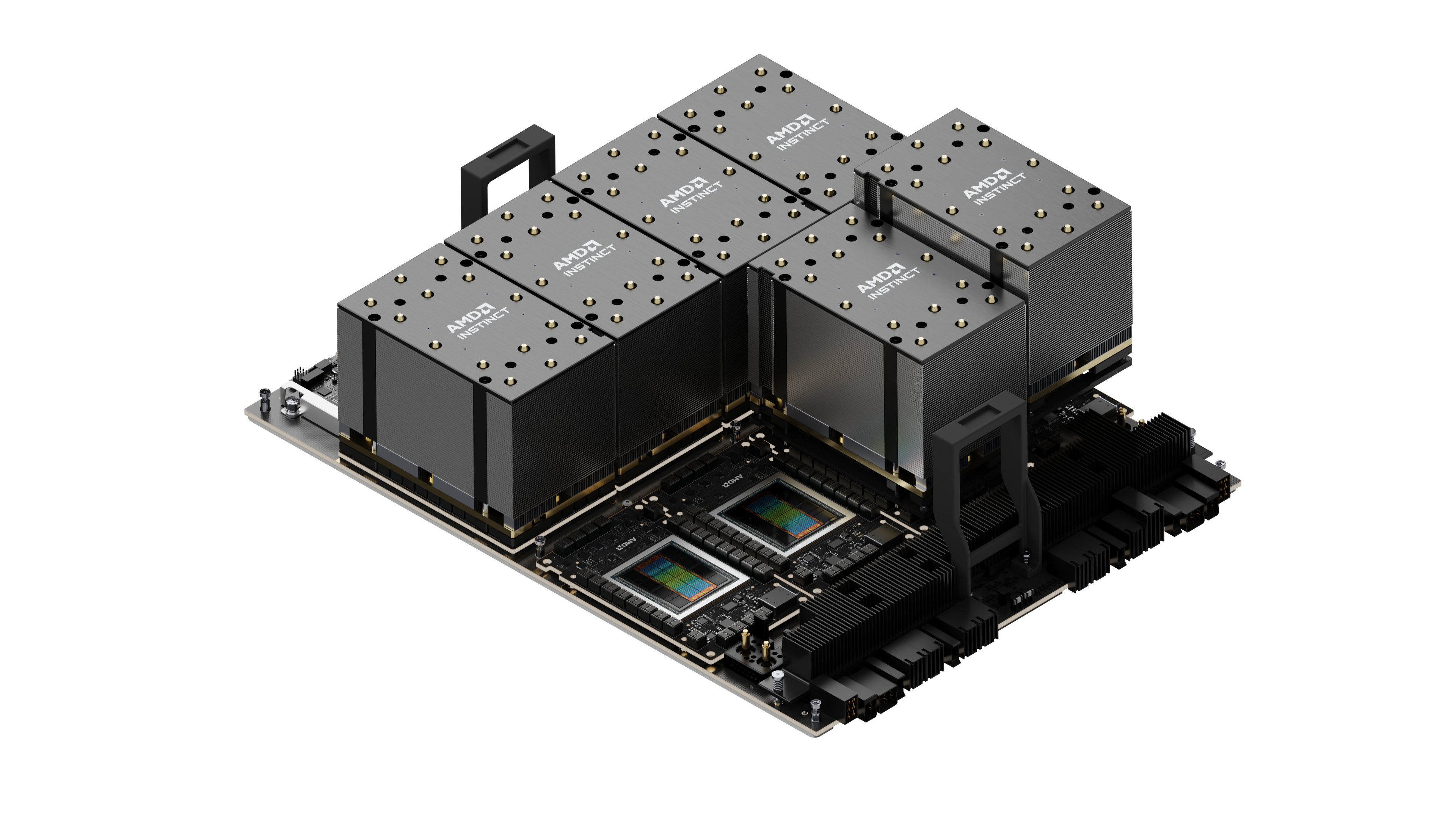

The AMD ROCm 6 software stack plays a crucial role in maximizing the performance of the MI300X accelerators, AMD said. The company's benchmarks showed that systems using eight MI300X accelerators outperformed Nvidia's H100 by 1.3x in Meta Llama-3 70B model inference and token generation. A single MI300X accelerator also beat its competitor by 1.2x in Mistral-7B model tasks, based on AMD's tests.

The adoption of AMD's Instinct MI200 and MI300-series products by cloud service providers and system integrators is also accelerating. Microsoft Azure uses these accelerators for OpenAI services, Dell Technologies integrates them into its PowerEdge enterprise AI machines, and Lenovo and HPE use them in their servers.

"The AMD Instinct MI300X accelerators continue their strong adoption from numerous partners and customers, including Microsoft Azure, Meta, Dell Technologies, HPE, Lenovo, and others, a direct result of the AMD Instinct MI300X accelerator exceptional performance and value proposition," said Brad McCredie, corporate vice president, Data Center Accelerated Compute, AMD. "With our updated annual cadence of products, we are relentless in our pace of innovation, providing the leadership capabilities and performance the AI industry and our customers expect to drive the next evolution of data center AI training and inference."


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

It's good to see AMD offering either a refresh or a completely new AI accelerator every year.

The MI325X AI accelerator looks more like an interim solution, since it is a beefed-up refresh of the current MI300X, composed of eight compute, four I/O, and eight memory chiplets stitched together.

So AMD is now confident that its MI325X system can support a 1-trillion-parameter model? But it is still focusing on FP16, which requires twice as much memory per parameter as FP8.

MI300X does have hardware support for FP8, but AMD has generally focused on half-precision performance in its benchmarks. At least for inference with vLLM, the MI300X was stuck with FP16, because vLLM lacks proper support for FP8 data types.

This isn't an apples-to-apples comparison, though. While it's great to have 288GB of capacity, I'm worried that the extra memory gets overshadowed when a model runs at FP8 on Nvidia's H200.

After all, running the same model at FP16 would require twice as much memory on the MI325X. Still, it appears AMD might have overcome this limitation with this new accelerator.

I hope so!
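The FP16-versus-FP8 argument above can be checked with rough arithmetic. This Python sketch assumes an eight-accelerator MI325X system with 288GB per accelerator (the node size is an assumption, not a figure from the article), counts only the weights of a 1-trillion-parameter model, and reports the HBM left over for everything else, such as KV cache, activations, and runtime buffers.

```python
# Rough weights-only fit check for a 1-trillion-parameter model.
# Assumption: an eight-accelerator MI325X node; real deployments also
# need room for KV cache, activations, and runtime buffers.
ACCELERATORS = 8        # assumed node size (not stated in the article)
HBM_PER_ACCEL_GB = 288  # MI325X HBM3E capacity quoted by AMD
BYTES_PER_PARAM = {"FP16": 2, "FP8": 1}


def headroom_gb(num_params: float, fmt: str) -> float:
    """HBM (decimal GB) left after storing the weights; negative means they do not fit."""
    total_gb = ACCELERATORS * HBM_PER_ACCEL_GB
    weights_gb = num_params * BYTES_PER_PARAM[fmt] / 1e9
    return total_gb - weights_gb


if __name__ == "__main__":
    for fmt in BYTES_PER_PARAM:
        left = headroom_gb(1e12, fmt)
        status = "fits" if left >= 0 else "does not fit"
        print(f"{fmt}: weights {status}, {left:.0f} GB left over")
```

Under these assumptions, FP16 weights take 2,000GB of the 2,304GB total and leave about 304GB, while FP8 weights take 1,000GB and leave about 1,304GB, which is why the precision used in AMD's claims matters.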

https://cdn.wccftech.com/wp-content/uploads/2024/06/2024-06-03_10-36-59-scaled.jpg

TechyIT223 Instead of dubbing the future architecture "CDNA Next," AMD should refer to it as CDNA 4++, or just CDNA 5, for the sake of clarity.