Limited scalability of AMD's Instinct MI325X limits scale of its sales, says analyst firm

AI customers are reportedly not interested in the MI325X.

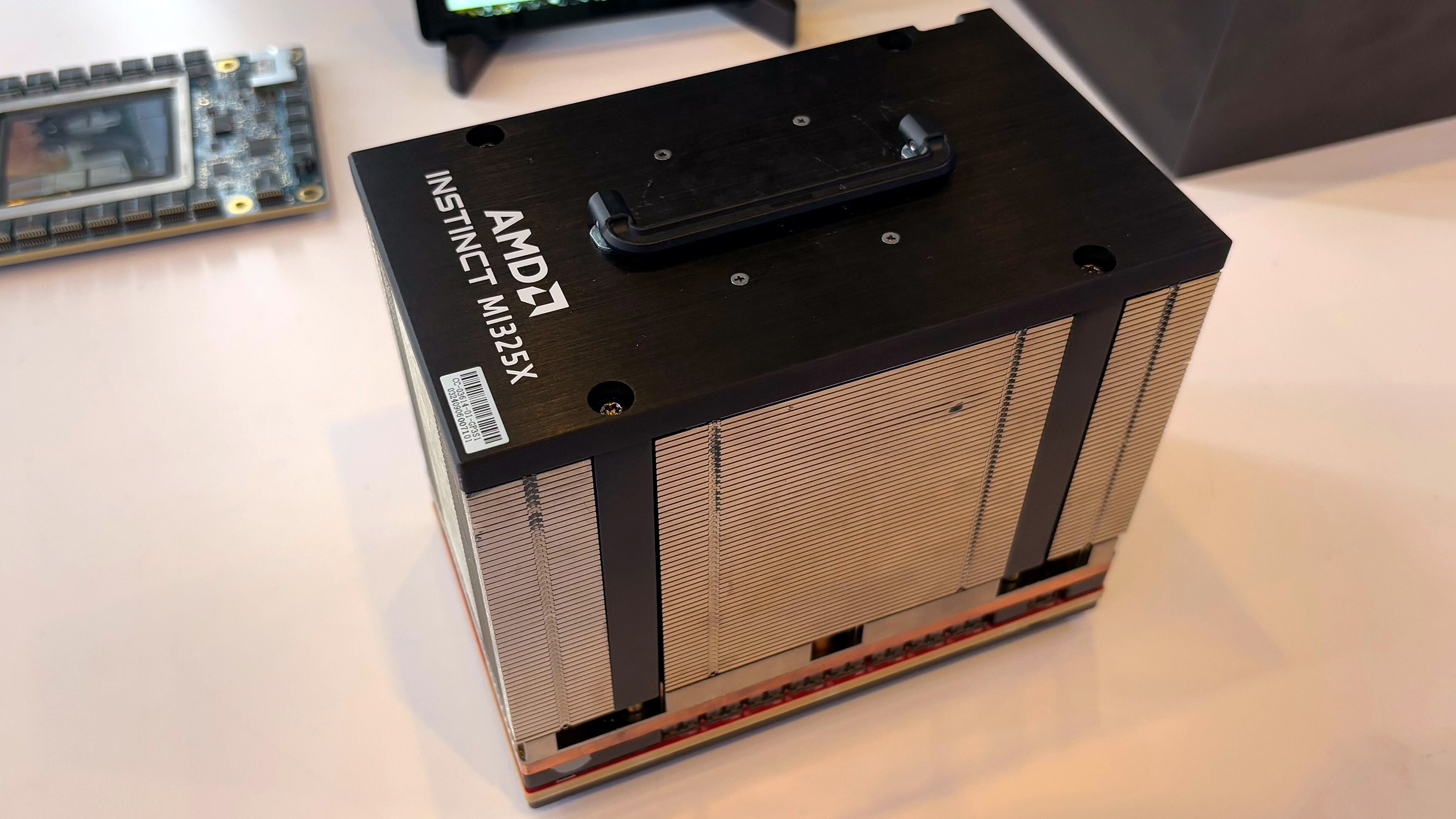

AMD's Instinct MI325X accelerator was supposed to compete with Nvidia's H200, but its limited scalability and delays in volume shipments greatly limit its commercial success, according to analysts at SemiAnalysis. One key reason for the low interest in the Instinct MI325X is that it does not support rack-scale configurations; another is the approaching launch of the more competitive Instinct MI355X.

AMD began shipping its Instinct MI325X accelerator for AI workloads in Q2 2025, three quarters after Nvidia began shipping its H200 processors and at the same time as Nvidia's B200 'Blackwell' GPUs. So far, customers have largely favored Blackwell due to its significantly better performance per dollar, and according to SemiAnalysis, the situation is unlikely to improve considerably for the MI325X.

> EXCLUSIVE: There has been a ❗️lack of interest 🤯 from customers in purchasing AMD MI325X as we’ve been saying for a year. Below we explain why! 🧵👇 1/7 pic.twitter.com/ep4y2fuV0V (May 12, 2025)

First, the maximum scale-up world size of AMD's Instinct MI325X and Instinct MI355X is eight GPUs. The scale-up world size is the maximum number of GPUs that can be interconnected within a single system or tightly coupled cluster, enabling efficient communication and parallel processing for large-scale AI and HPC workloads. By contrast, Nvidia's GB200 NVL72 connects 72 Blackwell GPUs in a single NVLink domain.

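To build AMD-based clusters with more than eight GPUs, one has to move from a scale-up to a scale-out architecture and link the eight-GPU nodes using a networking technology such as Ethernet or InfiniBand. These networks offer less bandwidth and higher latency per GPU than a scale-up fabric, which limits how efficiently large workloads can scale.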
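To make the scale-up versus scale-out distinction more concrete, here is a minimal Python sketch that estimates what share of GPU-to-GPU pairs in a 72-GPU job would have to talk across the scale-out network. It assumes an idealized all-to-all pattern in which every GPU exchanges data with every other GPU, with GPUs packed into full scale-up domains. The function name `cross_domain_fraction` is hypothetical, and real AI workloads (tensor, pipeline, or expert parallelism) have very different traffic patterns, so treat this as an illustration rather than a performance model.

```python
import math


def cross_domain_fraction(total_gpus: int, domain_size: int) -> float:
    """Fraction of GPU pairs that sit in different scale-up domains.

    Hypothetical helper: assumes all-to-all traffic (every GPU talks to
    every other GPU) and GPUs packed into full domains, with at most one
    partially filled domain at the end.
    """
    if total_gpus < 2 or domain_size < 1:
        raise ValueError("need at least two GPUs and a positive domain size")
    full, rest = divmod(total_gpus, domain_size)
    sizes = [domain_size] * full + ([rest] if rest else [])
    total_pairs = total_gpus * (total_gpus - 1) // 2
    # Pairs that can communicate over the fast scale-up fabric.
    intra_pairs = sum(s * (s - 1) // 2 for s in sizes)
    return (total_pairs - intra_pairs) / total_pairs


# Compare eight-GPU domains with a single 72-GPU NVLink domain.
for label, domain in [("8-GPU scale-up domains", 8), ("72-GPU NVLink domain", 72)]:
    domains = math.ceil(72 / domain)
    share = cross_domain_fraction(72, domain)
    print(f"{label}: {domains} domain(s), {share:.0%} of GPU pairs cross the scale-out network")
```

With eight-GPU domains, a 72-GPU job needs nine domains, and 64 of every 71 peers (about 90%) sit on the other side of the network. A single 72-GPU domain keeps all of them on the scale-up fabric.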

Second, the Instinct MI325X's slow adoption was reportedly due to its high power consumption (1,000W), the lack of a significant performance uplift over the MI300X, and its need for a new chassis, which leaves no easy upgrade path from existing platforms.

Microsoft reportedly expressed disappointment with AMD's GPUs as early as 2024 and placed no follow-on orders, though Oracle and a few others have shown some renewed interest due to AMD's price cuts. Looking forward, AMD's Instinct MI355X may gain some traction if priced aggressively and supported by strong software, but it remains non-competitive against Nvidia's rack-scale GB200 NVL72, which supports up to 72 GPUs.

The MI355X may still find niche success in non-rack-scale deployments, provided it offers a competitive total cost of ownership (TCO) and mature software (which, fortunately, is reportedly improving), according to SemiAnalysis.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

JRStern

>AMD began to ship its Instinct MI355X accelerator for AI workloads in Q2 2020

2024?

>To build AMD-based clusters with more than eight GPUs, one needs to move from scale-up to scale-out architecture and interconnect clusters using a networking technology, such as Ethernet or Infiniband, which reduces scalability.

That's not exactly a clear scale-up/scale-out difference, but I guess it's something.

-

thestryker

JRStern said:
>>AMD began to ship its Instinct MI355X accelerator for AI workloads in Q2 2020
>
>2024?

I think the two are reversed in that paragraph because it mentions the MI325X not looking to be any better at the end, as well as the year being wrong.

JRStern said:
>>To build AMD-based clusters with more than eight GPUs, one needs to move from scale-up to scale-out architecture and interconnect clusters using a networking technology, such as Ethernet or Infiniband, which reduces scalability.
>
>That's not exactly a clear scale-up/scale-out difference, but I guess it's something.

I think what they're getting at here is that NVLink doesn't require additional interconnect hardware. Of course, it's only the GB200 which has this interface, so I don't think it would be a competitor in the first place. I'm not familiar enough with AI to give any insight on why this actually matters.

-

DS426

Yeah... I found the best thing to do is just read the original article:

https://semianalysis.com/2025/04/23/amd-2-0-new-sense-of-urgency-mi450x-chance-to-beat-nvidia-nvidias-new-moat/#mi325x-and-mi355x-customer-interest

I'm not an AI guru by any means, but they lay out their many points so that any tech person can understand them. Moreover, they illustrate how much things have changed at AMD in the past ~5 months -- pretty much a total *oh sh\*t!* moment back in Dec'24/Jan'25, when they realized nVidia was only pulling away with AI tech and, most importantly, customers, at an ever-increasing pace.

"Developers, developers, developers!" Did AMD really think nVidia was only winning due to faster hardware? I'm really surprised that something like this would come as a revelation. Fortunately, it's also been acknowledged in gaming, so AMD will hopefully make some nice gains in the things that we Tom's readers can really appreciate! FSR4 already closed a big gap on the technical side, but obviously game studios need to be convinced to adopt it, and AMD needs to make that adoption as easy as possible (which is already coming to fruition, e.g. just being able to drop new .dll files in to provide an FSR 3.1 -> 4 upgrade).