JEDEC finalizes HBM4 memory standard with major bandwidth and efficiency upgrades

The latest JEDEC standard for HBM4 supports next-gen compute demands.

JEDEC has published the official HBM4 (High Bandwidth Memory 4) specification under JESD270-4, a new memory standard aimed at keeping up with the rapidly growing requirements of AI workloads, high-performance computing, and advanced data center environments. The new standard introduces architectural changes and interface upgrades that seek to improve memory bandwidth, capacity, and efficiency as data-intensive applications continue to evolve.

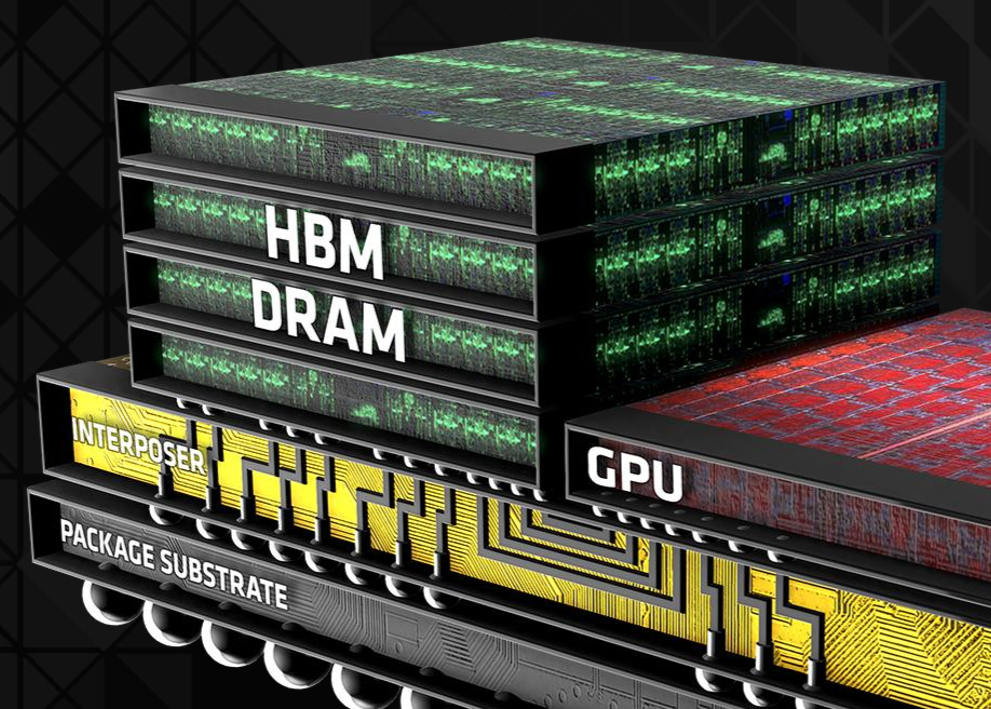

HBM4 continues the use of vertically stacked DRAM dies, a hallmark of the HBM family, but brings a host of improvements over its predecessor, HBM3, with significant advancements in bandwidth, efficiency, and design flexibility. It supports transfer speeds of up to 8 Gb/s across a 2048-bit interface, delivering a total bandwidth of up to 2 TB/s. One of the key upgrades is the doubling of independent channels per stack—from 16 in HBM3 to 32 in HBM4—each now featuring two pseudo-channels. This expansion allows for greater access flexibility and parallelism in memory operations.
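The 2 TB/s figure follows directly from the per-pin rate and the interface width. The short Python sketch below works through that arithmetic; the function and constant names are illustrative and not taken from the JEDEC document, and the per-channel width assumes the 2048 bits are divided evenly across the 32 channels.

```python
# Illustrative arithmetic only; names are hypothetical, figures come from the article.

def peak_bandwidth_gbytes_per_s(pin_rate_gbps: float, interface_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) times pin count, over 8 bits per byte."""
    return pin_rate_gbps * interface_bits / 8

HBM4_PIN_RATE_GBPS = 8            # up to 8 Gb/s per pin
HBM4_INTERFACE_BITS = 2048        # interface width per stack
HBM4_CHANNELS = 32                # independent channels per stack
PSEUDO_CHANNELS_PER_CHANNEL = 2   # two pseudo-channels per channel

stack_bandwidth = peak_bandwidth_gbytes_per_s(HBM4_PIN_RATE_GBPS, HBM4_INTERFACE_BITS)

# Assumption: the interface is split evenly across channels and pseudo-channels.
bits_per_channel = HBM4_INTERFACE_BITS // HBM4_CHANNELS
bits_per_pseudo_channel = bits_per_channel // PSEUDO_CHANNELS_PER_CHANNEL
total_pseudo_channels = HBM4_CHANNELS * PSEUDO_CHANNELS_PER_CHANNEL

print(f"Peak bandwidth per stack: {stack_bandwidth:.0f} GB/s")
print(f"Bits per channel: {bits_per_channel}")
print(f"Bits per pseudo-channel: {bits_per_pseudo_channel}")
print(f"Pseudo-channels per stack: {total_pseudo_channels}")
```

The result is 2048 GB/s, which the article rounds to 2 TB/s.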

In terms of power efficiency, the JESD270-4 specification introduces support for a range of vendor-specific voltage levels, including VDDQ options of 0.7V, 0.75V, 0.8V, or 0.9V, and VDDC options of 1.0V or 1.05V. These adjustments are said to contribute to lower power consumption and improved energy efficiency across different system requirements. HBM4 also maintains compatibility with existing HBM3 controllers, enabling a single controller to operate with either memory standard. This backwards compatibility eases adoption and allows for more flexible system designs.

Additionally, HBM4 incorporates Directed Refresh Management (DRFM), which enhances row-hammer mitigation and supports stronger Reliability, Availability, and Serviceability (RAS) features. On the capacity front, HBM4 supports stack configurations ranging from 4-high to 16-high, with DRAM die densities of 24Gb or 32Gb. This allows for cube capacities as high as 64GB using 32Gb 16-high stacks, enabling higher memory density for demanding workloads.
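The capacity ceiling can be checked the same way: die density in gigabits times the number of stacked dies, divided by 8 to convert to gigabytes. The sketch below is a rough illustration rather than anything from the specification; the names are hypothetical, and the intermediate 8-high and 12-high stack heights are an assumption, since the article only gives the 4-high to 16-high range.

```python
# Illustrative arithmetic only; names are hypothetical.

DIE_DENSITIES_GBIT = (24, 32)     # die densities named in the article
STACK_HEIGHTS = (4, 8, 12, 16)    # 4 and 16 are from the article; 8 and 12 are assumed

def stack_capacity_gbytes(die_density_gbit: int, stack_height: int) -> float:
    """Capacity of one stack in GB: gigabits per die times die count, over 8 bits per byte."""
    return die_density_gbit * stack_height / 8

# Capacity for every (density, height) combination.
capacities = {
    (density, height): stack_capacity_gbytes(density, height)
    for density in DIE_DENSITIES_GBIT
    for height in STACK_HEIGHTS
}

for (density, height), gbytes in sorted(capacities.items()):
    print(f"{density} Gb dies, {height}-high: {gbytes:.0f} GB")
```

The largest entry, 32Gb dies in a 16-high stack, gives the 64GB figure quoted above.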

A notable architectural change in HBM4 is the separation of command and data buses, designed to enhance concurrency and reduce latency. This modification aims to improve performance in multi-channel operations, which are prevalent in AI and HPC workloads. Furthermore, HBM4 incorporates a new physical interface and signal integrity improvements to support faster data rates and greater channel efficiency.

The development of HBM4 involved collaboration among major industry players, including Samsung, Micron, and SK hynix, who contributed to the standard's formulation. These companies are expected to begin showcasing HBM4-compatible products in the near future, with Samsung indicating plans to begin production by 2025 to meet the growing demand from AI chipmakers and hyperscalers.

As AI models and HPC applications demand greater computational resources, there is a growing technical need for memory with higher bandwidth and larger capacity. The introduction of the HBM4 standard should address these requirements by outlining specifications for next-generation memory technologies designed to handle the data throughput and processing challenges associated with these workloads.


Kunal Khullar is a contributing writer at Tom’s Hardware. He is a longtime technology journalist and reviewer specializing in PC components and peripherals, and welcomes any and every question about building a PC.


razor512: Managing multiple pools of VRAM has been around for a long time, e.g., the GTX 970 could manage 3 pools (3.5GB, 512MB, shared system memory). With that in mind, would it be possible for a video card maker to do a hybrid approach, e.g., 16GB of HBM4 + 16GB of GDDR7?

thestryker:

razor512 said: "Since managing multiple pools of VRAM has been around for a long time, e.g., the GTX 970 could manage 3 pools (3.5GB, 512MB, shared system memory). With that in mind, would it be possible for a video card maker to do a hybrid approach, e.g., 16GB of HBM4 + 16GB of GDDR7?"

It would certainly be possible, but I'm fairly confident it would not make any sense monetarily. The memory controller would need to be optimized for both, and HBM packaging makes things more expensive.

I'm not certain it would even make sense from a performance standpoint. The 50 series has significantly more memory bandwidth than the 40 series, but the performance improvement seems to largely mirror the core count and clock speed. This leads me to believe that the additional memory bandwidth is likely more related to the AI capabilities than raster/RT rendering.

usertests:

thestryker said: "I'm not certain it would even make sense from a performance standpoint. The 50 series has significantly more memory bandwidth than the 40 series, but the performance improvement seems to largely mirror the core count and clock speed. This leads me to believe that the additional memory bandwidth is likely more related to the AI capabilities than raster/rt rendering."

I think the main benefits for HBM on consumer GPUs would be power efficiency and less board space taken up, which is good for SFF. I'd be more interested to see HBM added as an L4 cache in consumer CPUs, or as a stack of VRAM for APUs.

But we won't get any of that until the cost and demand plummet, following at least a big AI bubble burst. Even outside of AI it's being used in Xeons, Epycs, supercomputer chips, etc.

thestryker:

usertests said: "I think the main benefits for HBM on consumer GPUs would be power efficiency and less board space taken up, which is good for SFF."

I'm not really sure how much of a benefit it would be in practice though. You're absolutely right about it being more efficient and taking up less board space. The problem is most of the power consumption is the GPU and in turn the coolers required tend to be quite a bit larger than the PCB already.

usertests said: "I'd be more interested to see HBM added as an L4 cache in consumer CPUs, or as a stack of VRAM for APUs."

I'm not sure how much sense it'd make as a CPU cache due to latency, but it would make for a very interesting tiered memory system like Intel did with the Xeon Max. It would be fantastic for APU graphics though in something like an 8GB stack. Some future iteration of Strix Halo using two stacks instead of LPDDR would probably blow the doors off.

Mr Majestyk: More L2 cache would be desirable, rather than some super expensive memory not designed for desktop usage.

DougMcC:

usertests said: "But we won't get any of that until the cost and demand plummets, following at least a big AI bubble burst. Even outside of AI it's being used in Xeons, Epycs, supercomputer chips, etc."

AI bust is years away at best. People in industry outside of the core AI circles are building like crazy; the demand for AI capacity is going to double or more every year for probably the next 5 years at least. My company has product plans out that far, and even existing models will clearly enable success. By the time we're 3 years out and next-gen models offer even mild improvements, the list of projects will have grown rapidly.

There's no bust in sight.

Thunder64:

DougMcC said: "AI bust is years away at best. People in industry outside of the core AI circles are building like crazy; the demand for AI capacity is going to double or more every year for probably the next 5 years at least. My company has product plans out that far, and even existing models will clearly enable success. By the time we're 3 years out and next-gen models offer even mild improvements, the list of projects will have grown rapidly. There's no bust in sight."

Just because you don't see it doesn't mean it doesn't exist. It's not imminent but many seem to agree there will be an AI bust.

DougMcC:

Thunder64 said: "Just because you don't see it doesn't mean it doesn't exist. It's not imminent but many seem to agree there will be an AI bust."

That's kind of the argument I'm making though. AI bust theorists think that there can be a sudden, large drop in AI investment. To think that requires blindness to the existence of the kinds of work and projects going on outside of the core AI providers. Just because they don't personally see that work doesn't mean it doesn't exist. And I'm just saying: this exists. And its existence precludes the possibility of a short-term (<5 years) major AI bust.