Tom's Hardware Verdict

The RTX 4080 Super mostly improves Nvidia's product stack by slashing the MSRP by $200. It's barely faster than the vanilla 4080 and otherwise doesn't offer anything truly new, but cheaper and faster are at least a step in the right direction.

Pros

- Second-fastest overall GPU
- $200 cheaper than the vanilla 4080
- All the usual Nvidia extras

Cons

- Barely faster than the 4080
- Still uses AD103 and 16GB
- $999 base price is still expensive


The Nvidia RTX 4080 Super should be the final entry in Nvidia's RTX 40-series Ada Lovelace GPUs, though a 4090 update remains possible, if unlikely. Among the Super models, it's the least exciting from a performance standpoint. It makes up for that by slashing the base MSRP from $1,199 on the RTX 4080 down to $999 for the 4080 Super, putting it more or less into direct competition with AMD's RX 7900 XTX. Considering the vastly inflated retail prices on the RTX 4090 these days, the 4080 Super now has to hold down the fort as the fastest Nvidia option that's still within reach of gamers, competing with the best graphics cards currently available.

We opined back when the 40-series Super rumors first started circulating that we really hoped the 4080 Super 20GB would become a reality. That hope was in vain, for a variety of reasons — chiefly demand from the AI sector. With AD102-based graphics cards frequently selling at $2,000 and up these days, Nvidia has no reason to push out lower-priced consumer cards that use those same GPUs. The result is that RTX 4080 Super uses a fully enabled AD103 chip, with slightly higher memory clocks, and it gets a price cut to try to soften the blow.

Here's the full rundown of the specifications for all the current generation Nvidia and AMD GPUs that have launched. Short of a surprise 4090 Super or similar reveal at GTC in a couple of months, this should mark the end of the RTX 40-series and RX 7000-series lineups.

| Graphics Card | RTX 4080 Super | RTX 4080 | RTX 4090 | RTX 4070 Ti Super | RTX 4070 Ti | RTX 4070 Super | RTX 4070 | RTX 4060 Ti 16GB | RTX 4060 Ti | RTX 4060 | RX 7900 XTX | RX 7900 XT | RX 7800 XT | RX 7700 XT | RX 7600 XT | RX 7600 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Architecture | AD103 | AD103 | AD102 | AD103 | AD104 | AD104 | AD104 | AD106 | AD106 | AD107 | Navi 31 | Navi 31 | Navi 32 | Navi 32 | Navi 33 | Navi 33 |
| Process Technology | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC N5 + N6 | TSMC N5 + N6 | TSMC N5 + N6 | TSMC N5 + N6 | TSMC N6 | TSMC N6 |
| Transistors (Billion) | 45.9 | 45.9 | 76.3 | 45.9 | 35.8 | 32 | 32 | 22.9 | 22.9 | 18.9 | 45.6 + 6x 2.05 | 45.6 + 5x 2.05 | 28.1 + 4x 2.05 | 28.1 + 3x 2.05 | 13.3 | 13.3 |
| Die size (mm^2) | 378.6 | 378.6 | 608.4 | 378.6 | 294.5 | 294.5 | 294.5 | 187.8 | 187.8 | 158.7 | 300 + 225 | 300 + 225 | 200 + 150 | 200 + 113 | 204 | 204 |
| SMs / CUs / Xe-Cores | 80 | 76 | 128 | 66 | 60 | 56 | 46 | 34 | 34 | 24 | 96 | 84 | 60 | 54 | 32 | 32 |
| GPU Cores (Shaders) | 10240 | 9728 | 16384 | 8448 | 7680 | 7168 | 5888 | 4352 | 4352 | 3072 | 6144 | 5376 | 3840 | 3456 | 2048 | 2048 |
| Tensor / AI Cores | 320 | 304 | 512 | 264 | 240 | 224 | 184 | 136 | 136 | 96 | 192 | 168 | 120 | 108 | 64 | 64 |
| Ray Tracing Cores | 80 | 76 | 128 | 66 | 60 | 56 | 46 | 34 | 34 | 24 | 96 | 84 | 60 | 54 | 32 | 32 |
| Boost Clock (MHz) | 2550 | 2505 | 2520 | 2610 | 2610 | 2475 | 2475 | 2535 | 2535 | 2460 | 2500 | 2400 | 2430 | 2544 | 2755 | 2655 |
| VRAM Speed (Gbps) | 23 | 22.4 | 21 | 21 | 21 | 21 | 21 | 18 | 18 | 17 | 20 | 20 | 19.5 | 18 | 18 | 18 |
| VRAM (GB) | 16 | 16 | 24 | 16 | 12 | 12 | 12 | 16 | 8 | 8 | 24 | 20 | 16 | 12 | 16 | 8 |
| VRAM Bus Width (bits) | 256 | 256 | 384 | 256 | 192 | 192 | 192 | 128 | 128 | 128 | 384 | 320 | 256 | 192 | 128 | 128 |
| L2 / Infinity Cache (MB) | 64 | 64 | 72 | 64 | 48 | 48 | 36 | 32 | 32 | 24 | 96 | 80 | 64 | 48 | 32 | 32 |
| Render Output Units | 112 | 112 | 176 | 96 | 80 | 80 | 64 | 48 | 48 | 48 | 192 | 192 | 96 | 96 | 64 | 64 |
| Texture Mapping Units | 320 | 304 | 512 | 264 | 240 | 224 | 184 | 136 | 136 | 96 | 384 | 336 | 240 | 216 | 128 | 128 |
| TFLOPS FP32 (Boost) | 52.2 | 48.7 | 82.6 | 44.1 | 40.1 | 35.5 | 29.1 | 22.1 | 22.1 | 15.1 | 61.4 | 51.6 | 37.3 | 35.2 | 22.6 | 21.7 |
| TFLOPS FP16 (FP8) | 418 (836) | 390 (780) | 661 (1321) | 353 (706) | 321 (641) | 284 (568) | 233 (466) | 177 (353) | 177 (353) | 121 (242) | 122.8 | 103.2 | 74.6 | 70.4 | 45.2 | 43.4 |
| Bandwidth (GBps) | 736 | 717 | 1008 | 672 | 504 | 504 | 504 | 288 | 288 | 272 | 960 | 800 | 624 | 432 | 288 | 288 |
| TDP (watts) | 320 | 320 | 450 | 285 | 285 | 220 | 200 | 160 | 160 | 115 | 355 | 315 | 263 | 245 | 190 | 165 |
| Launch Date | Jan 2024 | Nov 2022 | Oct 2022 | Jan 2024 | Jan 2023 | Jan 2024 | Apr 2023 | Jul 2023 | May 2023 | Jul 2023 | Dec 2022 | Dec 2022 | Sep 2023 | Sep 2023 | Jan 2024 | May 2023 |
| Launch Price | $999 | $1,199 | $1,599 | $799 | $799 | $599 | $599 | $499 | $399 | $299 | $999 | $899 | $499 | $449 | $329 | $269 |
| Online Price | $1,000 | $1,160 | $1,800 | $800 | $742 | $600 | $530 | $440 | $385 | $295 | $940 | $720 | $490 | $430 | $330 | $270 |

By the numbers, the RTX 4080 Super has nearly the same nominal boost clock as the RTX 4080 (2,550 MHz versus 2,505 MHz). We say "nominal" because, in practice, all the Nvidia 40-series GPUs boost well above the stated boost clocks. That means the only real change in compute comes from the extra four SMs (Streaming Multiprocessors), which is a 5.3% increase over the vanilla 4080. The VRAM also gets a small bump, from 22.4 Gbps on the 4080 to 23 Gbps on the 4080 Super, a 2.7% increase.
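As a quick sanity check on those figures, here is a minimal Python sketch that recomputes the uplift percentages from the spec table and derives the FP32 and bandwidth rows for the two cards. The formulas (two FP32 operations per shader per clock, and bus width times per-pin data rate divided by eight) are the usual conventions behind spec-sheet numbers rather than anything stated in this review, and the function names are hypothetical.

```python
# Values copied from the spec table above.
SPECS = {
    "RTX 4080 Super": {"sms": 80, "shaders": 10240, "boost_mhz": 2550,
                       "vram_gbps": 23.0, "bus_bits": 256},
    "RTX 4080": {"sms": 76, "shaders": 9728, "boost_mhz": 2505,
                 "vram_gbps": 22.4, "bus_bits": 256},
}


def pct_increase(new, old):
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


def fp32_tflops(shaders, boost_mhz):
    """Theoretical FP32 rate, assuming 2 operations (one FMA) per shader per clock."""
    return 2 * shaders * boost_mhz * 1e6 / 1e12


def bandwidth_gbytes(bus_bits, vram_gbps):
    """Memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_bits * vram_gbps / 8


new, old = SPECS["RTX 4080 Super"], SPECS["RTX 4080"]
print(f"SM count:   +{pct_increase(new['sms'], old['sms']):.1f}%")              # +5.3%
print(f"VRAM speed: +{pct_increase(new['vram_gbps'], old['vram_gbps']):.1f}%")  # +2.7%
for name, s in SPECS.items():
    print(f"{name}: {fp32_tflops(s['shaders'], s['boost_mhz']):.1f} TFLOPS, "
          f"{bandwidth_gbytes(s['bus_bits'], s['vram_gbps']):.0f} GB/s")
```

The derived values line up with the table's TFLOPS and bandwidth rows for both cards. These are paper numbers only, since real-world clocks run above the rated boost.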

The TGP (Total Graphics Power) remains the same at 320W, and that means we can expect the reference clocked 4080 Super Founders Edition that we're looking at today to offer less than a 5% improvement in performance compared to the 4080 Founders Edition.

The catch is that there are factory-overclocked RTX 4080 cards, as well as factory-overclocked RTX 4080 Super cards. Depending on how far the AIB (add-in board) partners push things, some of the existing 4080 OC models will already beat the reference 4080 Super in performance, while 4080 Super OC models might tack on another 5~10 percent in some cases — with higher power use, naturally.

That's it as far as paper specs go: a small boost in performance, and a $200 price cut. It's unexciting overall, unless you were in the market for an RTX 4080 and decided to hold off a bit to get the 4080 Super. Because saving $200 while getting slightly more performance is better.

The RTX 4080 Super officially launches today, on January 31, 2024. Nvidia normally allows reviews of base-MSRP models to go live the day before retail availability, but shipping delays caused a rescheduling of the review embargoes. A cynic might say that it was also a way to have reviews of what will ultimately be a straightforward launch go live at the same time as retail availability, but hopefully, weather and shipping delays truly were the root cause.

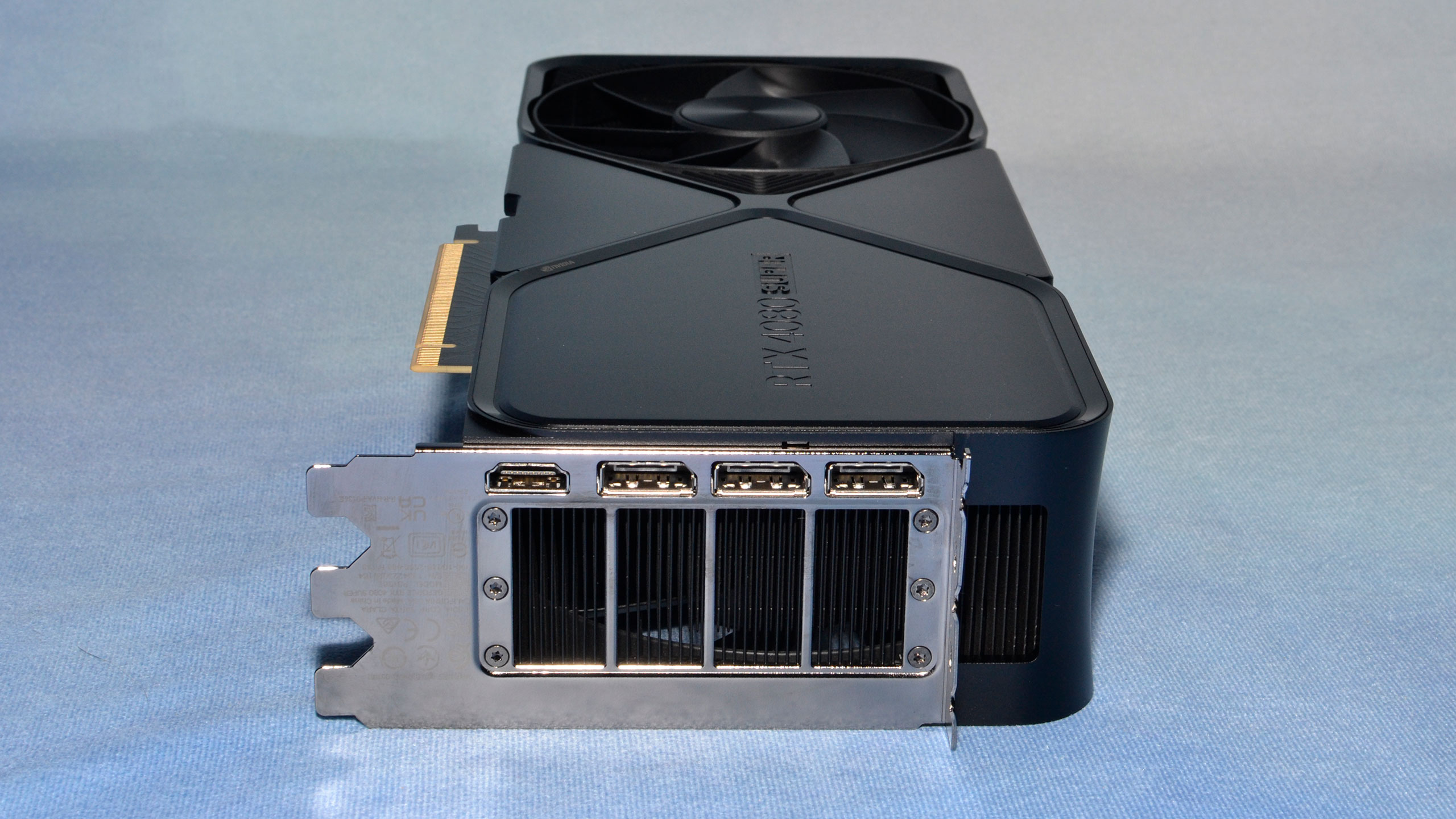

Nvidia GeForce RTX 4080 Super Founders Edition

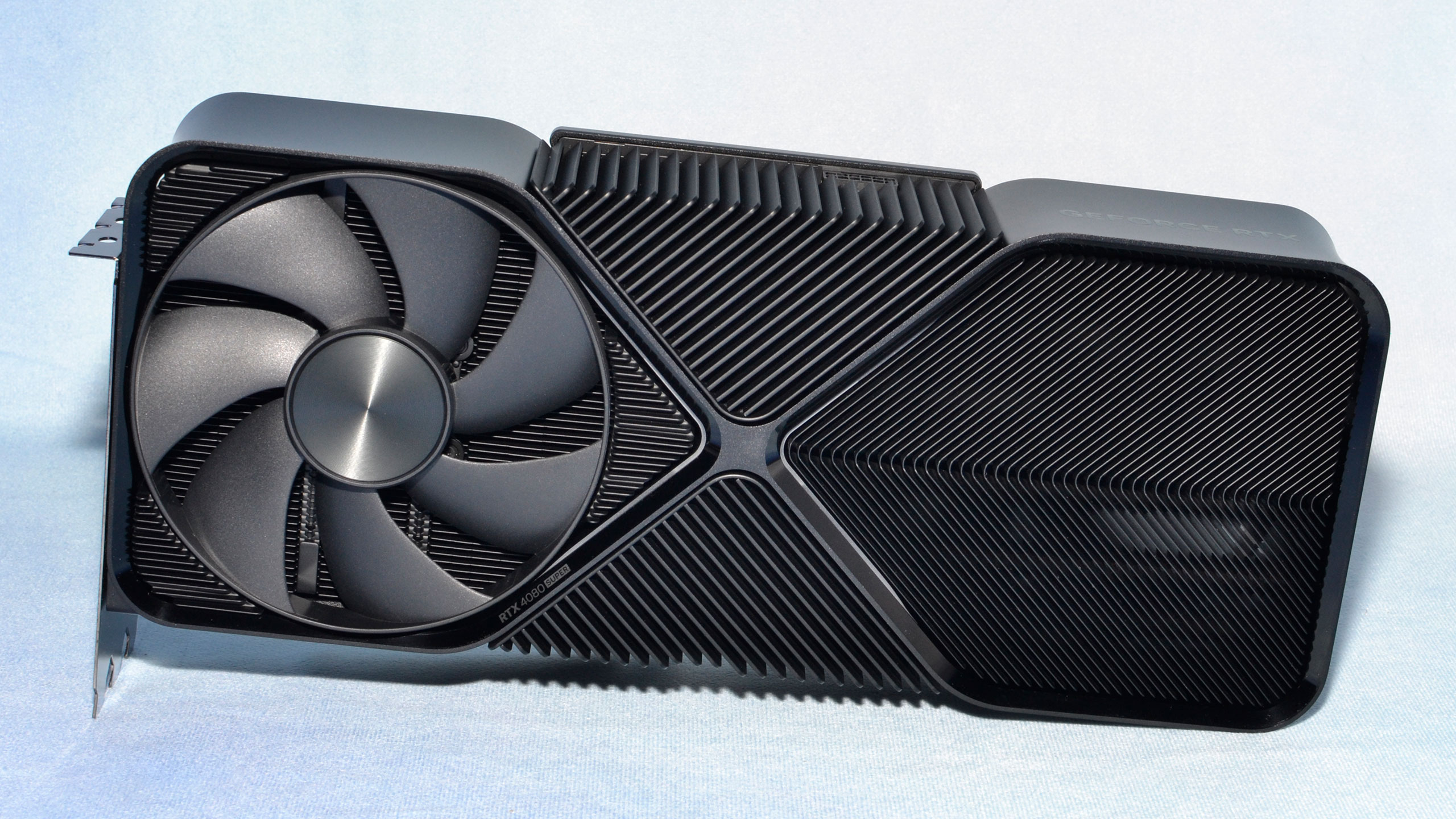

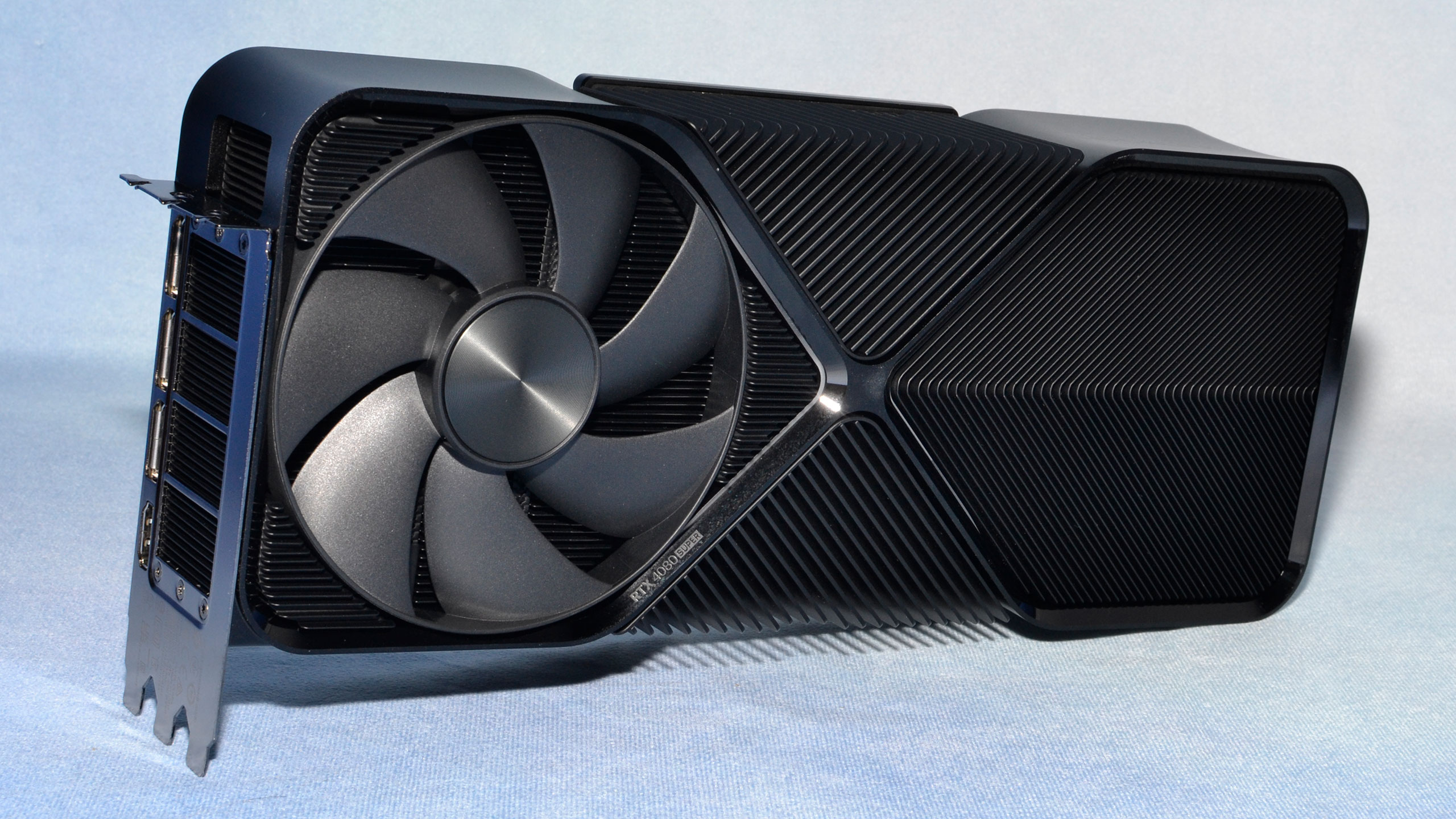

Nvidia does have an RTX 4080 Super Founders Edition, which looks nearly identical to the RTX 4080 Founders Edition other than a change in color scheme. The 4080 Super has a black wraparound casing, where the 4080 had a gunmetal gray casing. The 4080 Super also has black-on-black text that says "RTX 4080 Super" on the front of the card, though the top still has more visible text with RGB lighting. It also has the same style of packaging.

There may be some under-the-hood changes, of course. One thing we do know is that Nvidia switched to a revised 16-pin 12V-2x6 power connector in early 2023, after the RTX 4090 melting adapter fiasco. We're not aware of any 4080 cards that were affected by 'meltgate,' likely because pulling 320W is quite a bit easier than 450W (or more). Love it or hate it, the card uses the 16-pin connector, so it ships with a triple 8-pin to 16-pin adapter for anyone who doesn't have an ATX 3.0 power supply.

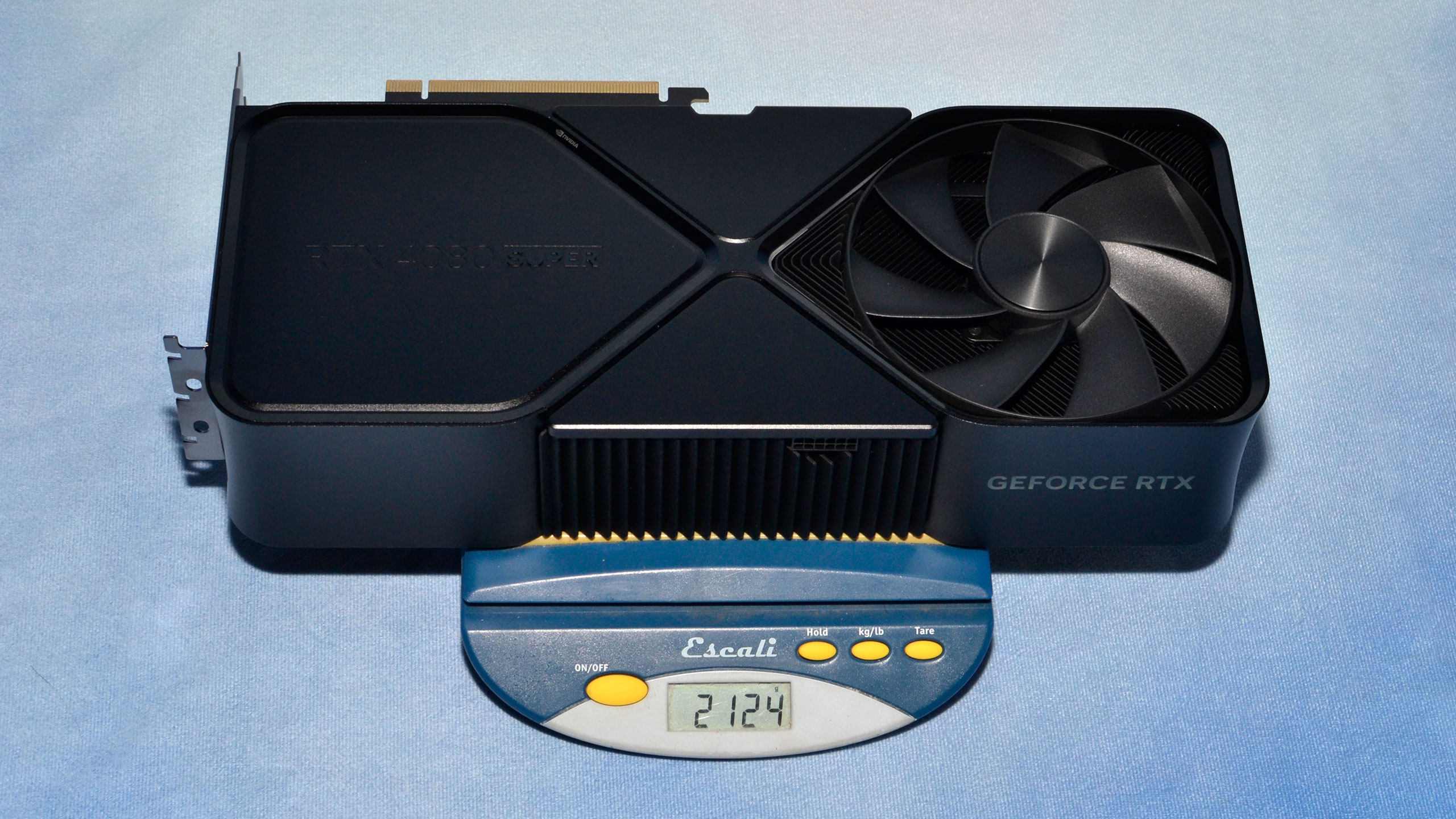

The Nvidia Founders Edition card has the same 304x137x61 mm dimensions as the 4080 card, and weighs 2124g. That's 8g lighter than the 4080 card we tested, which is probably just margin of error for our scale and the amount of glue and solder on the cards. The triple-pronged adapter adds another 47g to the scale if you're wondering.

The RTX 4080 Super Founders Edition comes with dual 115mm diameter fans, one on each side of the card. Nvidia says this arrangement helps to reduce fan noise, which is probably true if only because one of the fans will face away from any microphone looking to measure noise levels. The card is pretty quiet, though, and we suspect the triple-slot cooler will outperform many of the other base-MSRP cards that are coming to market.

Naturally, Nvidia sticks to its standard video output configuration of three DisplayPort 1.4a and a single HDMI 2.1 port. All are 4K 240 Hz capable when using Display Stream Compression — and in our experience, you really won't notice the difference between uncompressed and DSC signals. It really is "visually lossless," and depending on the content it can actually be truly lossless.
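To illustrate why Display Stream Compression is needed for 4K at 240 Hz on these ports, here is a rough Python sketch comparing the uncompressed pixel data rate against the DisplayPort 1.4a link. The 25.92 Gbps payload figure (HBR3, four lanes, after 8b/10b encoding) is an assumption brought in from the DisplayPort specification rather than from this review, the calculation ignores blanking overhead, and the function names are hypothetical.

```python
# Assumed usable payload of a 4-lane DisplayPort 1.4a HBR3 link
# (32.4 Gbps raw, 8b/10b encoded). Not a figure from the review.
DP14A_PAYLOAD_GBPS = 25.92


def active_pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel):
    """Uncompressed data rate for the visible pixels only (no blanking)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def min_compression_ratio(required_gbps, link_gbps):
    """Smallest compression ratio that fits the stream on the link (1.0 = none)."""
    return max(1.0, required_gbps / link_gbps)


for label, bpp in (("8-bit RGB", 24), ("10-bit RGB", 30)):
    rate = active_pixel_rate_gbps(3840, 2160, 240, bpp)
    ratio = min_compression_ratio(rate, DP14A_PAYLOAD_GBPS)
    print(f"4K 240 Hz, {label}: {rate:.1f} Gbps uncompressed, "
          f"needs at least {ratio:.2f}:1 compression")
```

Under these assumptions, even 8-bit color at 4K 240 Hz needs roughly twice what the link can carry uncompressed, which is why DSC is required to reach that refresh rate.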

Nvidia doesn't include any extras with its cards, so you don't get a support stand to help hold up the back of the graphics card. Given the problems we've heard with cracking PCBs — granted, none of those were Nvidia Founders Edition cards — you may want to have your own stand. The triple IO bracket does also provide more support than the dual-slot brackets found on many cards.

Nvidia RTX 4080 Super Test Setup

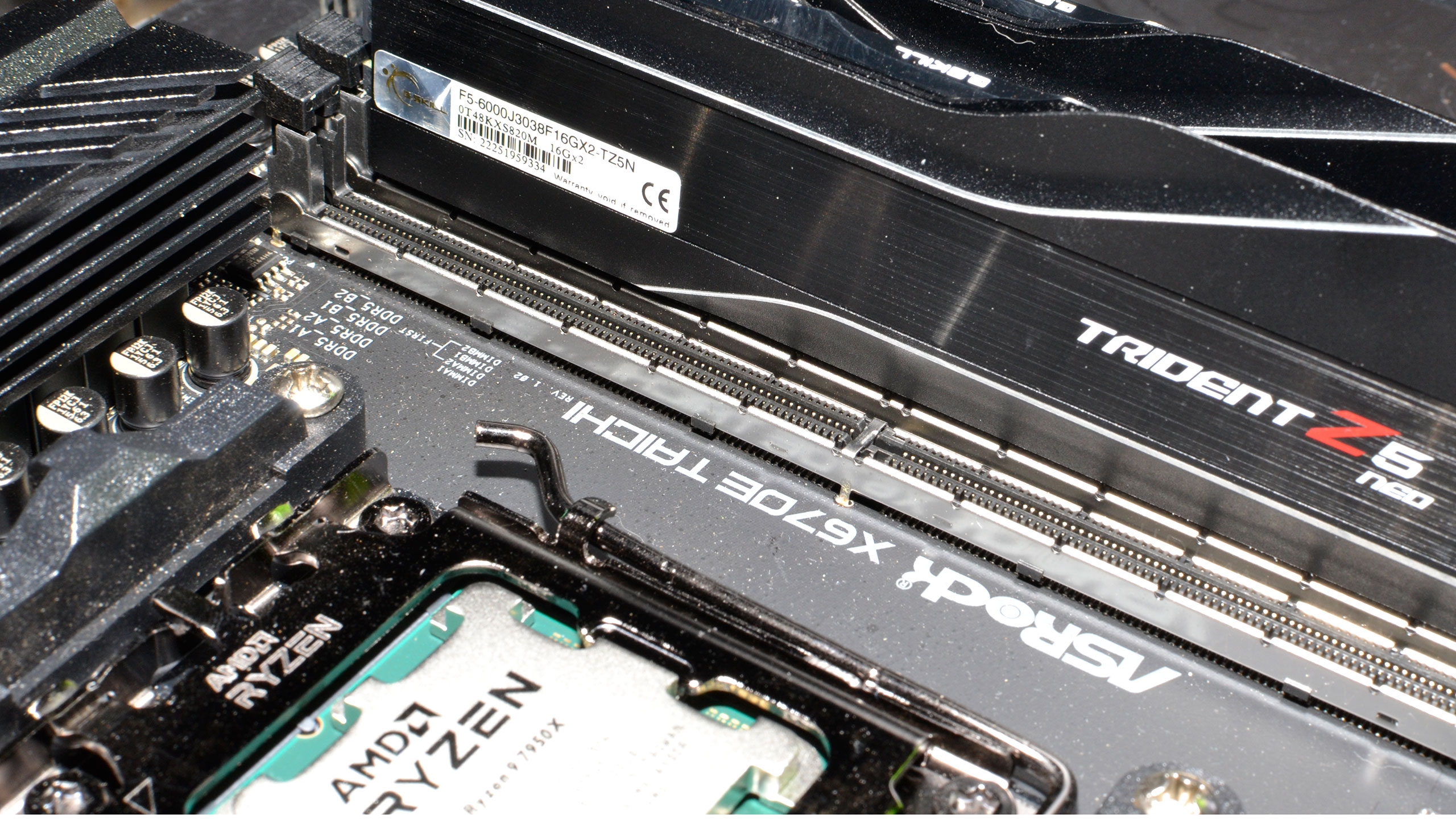

Our current graphics card testbed has been in use for over a year now, and so far we haven't seen any pressing need to upgrade. The Core i9-13900K is still holding its own, and while the i9-14900K or Ryzen 9 7950X3D can improve performance slightly, at higher resolutions and settings we're still almost entirely GPU limited — though perhaps not when the future RTX 50-series and RX 8000-series arrive. We also conduct professional and AI benchmarks on our Core i9-12900K PC, which is also used for our GPU benchmarks hierarchy.

TOM'S HARDWARE Gaming PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

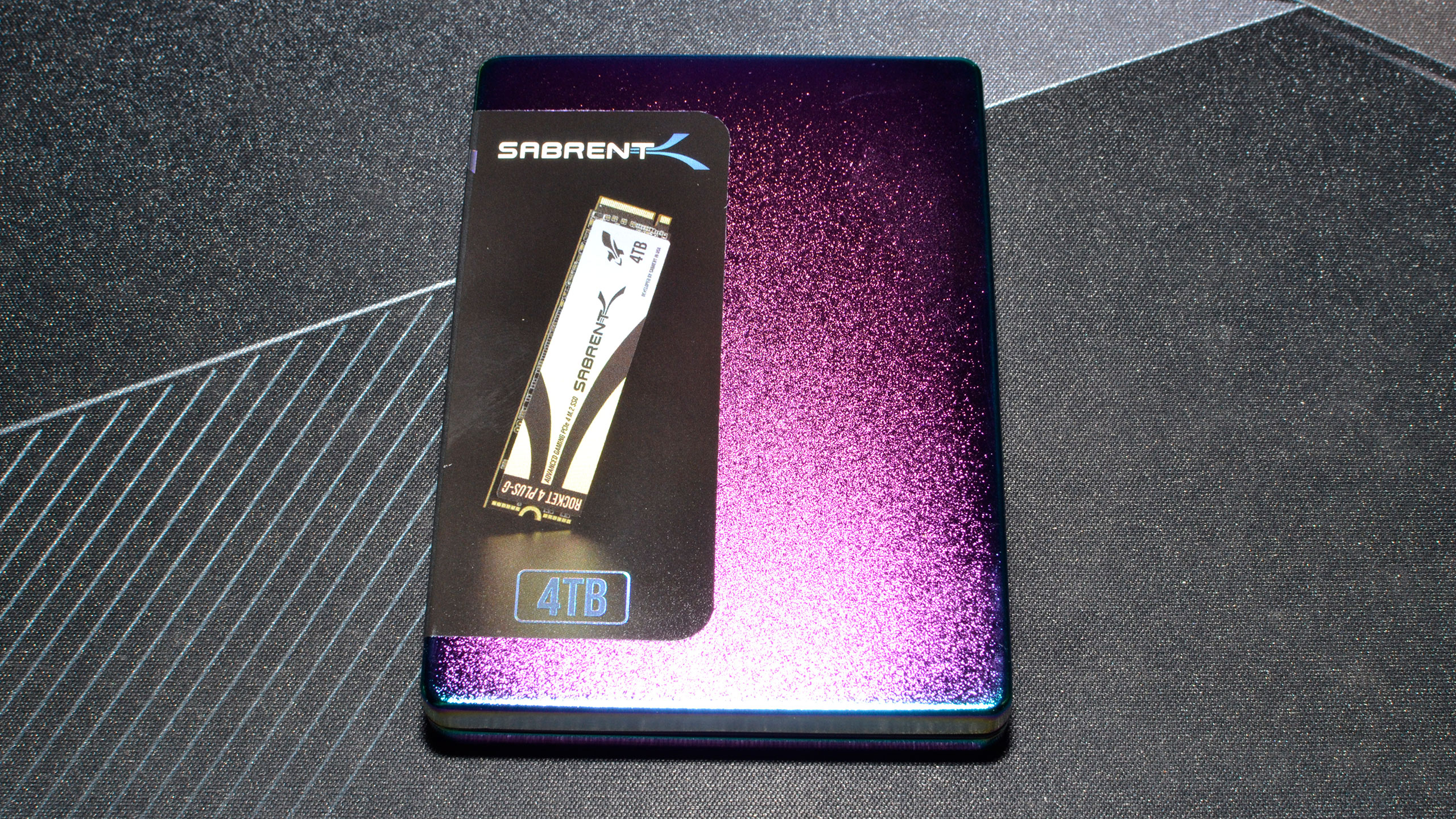

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE 2022 AI/ProViz PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

GRAPHICS CARDS

Nvidia RTX 4090

Nvidia RTX 4080 Super

Nvidia RTX 4080

Nvidia RTX 4070 Ti Super

Nvidia RTX 4070 Ti

Nvidia RTX 4070 Super

Nvidia RTX 4070

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7800 XT

AMD RX 7700 XT

We're using version 551.22 preview drivers from Nvidia for this review. In our 4070 Super testing, we noticed some changes in several of the benchmarks and retested the other Nvidia cards in the affected games. We also retested AMD's GPUs using the latest 23.12.1 drivers. The most impacted games are Borderlands 3, Far Cry 6, Forza Horizon 5, Microsoft Flight Simulator, and Spider-Man: Miles Morales. We've also fully retested the RTX 4080 and RTX 4090 for this review.

As the 4080 Super is now Nvidia's penultimate gaming GPU, and priced accordingly, we're limiting our test charts to the 4070 and above, and the 7700 XT and above — cards that all cost $450 or more, in other words. There's truly not going to be much to see in the benchmarks compared to the 4080, and the gap between the 4080 Super and 4090 remains quite wide.

Our test suite consists of 15 games that have been in use for over a year, plus three newer 'bonus' games. Out of the 18 games, 11 support DirectX Raytracing (DXR), but we only enable the DXR features in eight games. The remaining 10 games are tested in pure rasterization mode. Also note that 15 of the games support DLSS 2/3, and nine support FSR 2/3, though our focus is primarily on native resolution performance. (We did use DLSS/FSR2 Quality upscaling on two of the bonus games.)

We test at 1080p (medium and ultra), 1440p ultra, and 4K ultra for our reviews — ultra being the highest supported preset if there is one, and in some cases maxing out all the other settings for good measure (except for MSAA or super sampling). In theory, you would think a $999 graphics card would target 4K gaming, but in practice that only applies if you turn on upscaling or play slightly less demanding games. Certainly, our ray tracing test suite doesn't like native 4K gaming much.

Our PC is hooked up to a Samsung Odyssey Neo G8 32, one of the best gaming monitors around, allowing us to fully experience the higher frame rates that might be available. G-Sync and FreeSync were enabled, as appropriate. As we're not testing with esports games, most of our performance results are nowhere near the 240 Hz limit, or even the 144 Hz limit of our secondary test PC.

We've installed Windows 11 22H2 and used InControl to lock our test PC to that major release for the foreseeable future (though critical security updates still get installed monthly — and one of those probably caused the drop in performance that necessitated retesting many of the games in our suite).

Our test PC includes Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We'll cover those results on our page on power use.

Finally, because GPUs aren't purely for gaming these days, we've run some professional content creation application tests, and we also ran some Stable Diffusion benchmarks to see how AI workloads scale on the various GPUs.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Lamarr the Strelok

$1000 for 16 GB VRAM. What a ripoff. Personally the 7600 XT with 16 GB VRAM is the only GPU I'd consider. Nvidia has better performance but their greed is incredible.

I'll be using my 8 GB RX 570 til it's wheels fall off. Then I may simply be done with PC gaming. It's becoming ridiculous now.

usertests

Lamarr the Strelok said: $1000 for 16 GB VRAM. What a ripoff. Personally the 7600 XT with 16 GB VRAM is the only GPU I'd consider. Nvidia has better performance but their greed is incredible. I'll be using my 8 GB RX 570 til it's wheels fall off. Then I may simply be done with PC gaming. It's becoming ridiculous now.

I'm not going to tell you to continue PC gaming but there are plenty of options that are good enough for whatever you're doing, like an RX 6600. If you want more VRAM, grab a 6700 XT instead of 7600 XT, or an RTX 3060, while supplies last. Then if we later see the RX 7600 8GB migrate down to $200, and 7700 XT 12GB down to $350, those will be perfectly fine cards.

By the time you're done hodling your RX 570, the 7600 XT should be under $300 and at least RDNA4 and Blackwell GPUs will be out.

RandomWan

Lamarr the Strelok said: $1000 for 16 GB VRAM. What a ripoff. Personally the 7600 XT with 16 GB VRAM is the only GPU I'd consider. Nvidia has better performance but their greed is incredible. I'll be using my 8 GB RX 570 til it's wheels fall off. Then I may simply be done with PC gaming. It's becoming ridiculous now.

You're complaining about the VRAM (which doesn't matter as much as you think) and the price when you're sporting a bottom budget card. There's any number of cards you could upgrade to with a $300 budget that will blow that 570 out of the water.

These should be over 2x the performance of your card with 16GB for $330:

https://pcpartpicker.com/product/vT9wrH/xfx-speedster-swft-210-radeon-rx-7600-xt-16-gb-video-card-rx-76tswftfp

https://pcpartpicker.com/product/sqyH99/gigabyte-gaming-oc-radeon-rx-7600-xt-16-gb-video-card-gv-r76xtgaming-oc-16gd

Gururu

I thought healthy competition between companies meant the customer wins. This proves not the case. They do just enough to edge the competition when they could do soooo much more for the customer.

TerryLaze

Admin said: Nvidia GeForce RTX 4080 Super review: Slightly faster than the 4080, but $200 cheaper

Being cheaper is not a bad thing, it's not contradictory to the first thing being good ( the slightly faster) it's not but cheaper, it's but also or and(also) cheaper.

InvalidError

RandomWan said: You're complaining about the VRAM (which doesn't matter as much as you think) and the price when you're sporting a bottom budget card.

If nobody complains about ludicrously expensive GPUs having a bunch of corners cut off everywhere to pinch a few dollars on manufacturing off a $1000 luxury product, that is only an invitation to do even worse next time. No GPU over $250 should have less than 12GB of VRAM, which makes 16GB at $1000 look pathetic.

Also, having 12+GB does matter as higher resolution textures are usually the most obvious image quality improvement with little to no impact on frame rate as long as you have sufficient VRAM to spare and 8GB is starting to cause lots of visible LoD asset pops in modern titles.

Gururu said: I thought healthy competition between companies meant the customer wins. This proves not the case. They do just enough to edge the competition when they could do soooo much more for the customer.

Corporations' highest priority customers are the shareholders and shareholders want infinite 40% YoY growth with the least benefits possible to the retail end-users as giving end-users too much value for their money would mean hitting the end of the road for what can be cost-effectively delivered that much sooner and be able to milk customers for that many fewer product cycles.

TerryLaze

Gururu said: I thought healthy competition between companies meant the customer wins. This proves not the case. They do just enough to edge the competition when they could do soooo much more for the customer.

The last time we had healthy competition in anything computer related was in the 90ies.

AMD buying ATI in 2006 was the last of any "healthy" competition, every other GPU company at that point was already defeated, also every other CPU company other than Intel and AMD with ARM, as a company, barely hanging on even though ARM as CPUs are almost everywhere.

magbarn

As long as Nvidia makes a killing on AI, they're going to reserve the fat chips like the 4090 only for the highest priced products. They're allocating most of the large chips to AI, hence why the 4090 at MSRP sold out in minutes yesterday. This 4080 Super really is what the 4070 Ti should've been.

RandomWan

InvalidError said: If nobody complains about ludicrously expensive GPUs having a bunch of corners cut off everywhere to pinch a few dollars on manufacturing off a $1000 luxury product, that is only an invitation to do even worse next time. No GPU over $250 should have less than 12GB of VRAM, which makes 16GB at $1000 look pathetic.

It carries a bit less weight complaining about it when you're rocking what was a sub $200 video card. There's things other than a reasonable price keeping you from the card. By all means complain where appropriate, but unless people stop buying it, your complaints will achieve nothing.

I don't know why you think a budget card should have that much RAM. You're not going to be gaming at resolutions where you can make use of those larger textures. I have a 1080Ti with 11GB (from the same timeframe) and the memory buffer isn't getting maxxed out at 3440x1440. Unless you're actually gaming at 4k or greater resolution, you're likely not running into a VRAM limitation, especially if you're making use of upscaling technologies.

Lamarr the Strelok

Well shadow of tomb raider at 1080p gets close to using 8 GB of VRAM. Far Cry 6 at 1440 uses close to 8 also.

I admit I'm a budget gamer. (I have guitars and guitar amps to feed). But yes, UE 5 is a bit of a pig. Many UE5 games have an rx570, 580, 590 as the minimum so the party's over for me soon.