New high-fidelity brain-computer interface is so small it can fit between hair follicles

Georgia Tech scientists reckon advance could mean BCIs become more important in everyday life.

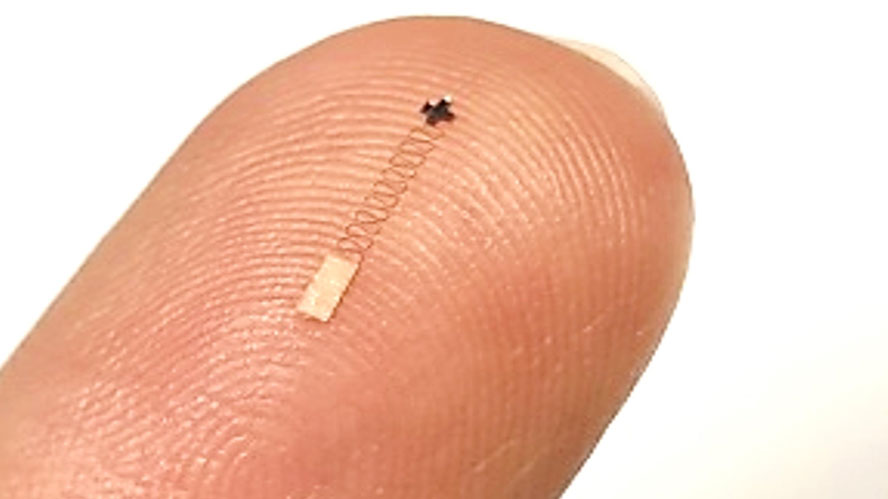

Researchers from Georgia Tech have developed a tiny, minimally invasive brain-computer interface (BCI). The device is small enough to fit between hair follicles and needs to be inserted only very slightly under the skin. The researchers think this super-compact new "high fidelity" sensor will make continuous everyday use of BCIs a more realistic possibility.

For many tech enthusiasts and futurists, BCIs play a large role in the expected evolution of human interaction with tech. However, some of the most advanced BCI systems we know of today are pretty bulky and rigid. Look at Elon Musk's Neuralink implant technology, for example.

Hong Yeo, the Harris Saunders Jr. Professor in the George W. Woodruff School of Mechanical Engineering at Georgia Tech, decided to do something about this bulk and invasiveness while maintaining optimum impedance and data quality.

"I started this research because my main goal is to develop new sensor technology to support healthcare and I had previous experience with brain-computer interfaces and flexible scalp electronics," explained Yeo. "I knew we needed better BCI sensor technology and discovered that if we can slightly penetrate the skin and avoid hair by miniaturizing the sensor, we can dramatically increase the signal quality by getting closer to the source of the signals and reduce unwanted noise."

According to the Georgia Tech blog, the tiny new sensor uses conductive polymer microneedles to capture electrical signals and conveys those signals along flexible polyimide/copper wires. In addition to this naturally flexible construction, the implant is less than a square millimeter in size.

Half a day of usage

The tiny new hi-fi BCI might have one major drawback for certain applications: it only has a useful life of approximately 12 hours. So, perhaps we should think of it as a disposable, occasional-use device.

In Georgia Tech field tests, six subjects used the new device to control an augmented reality (AR) video call. They used the BCI to "look up phone contacts and initiate and accept AR video calls hands-free." The system proved to be 96.4% accurate in recording and classifying neural signals. However, the high-fidelity neural signal capture persisted only for up to 12 hours. Georgia Tech researchers stressed that during the half day, subjects could stand, walk, and run, enjoying complete freedom of movement with the implant in place.


Perhaps we shouldn't get too excited about the possibilities of BCIs unlocking super-human powers, though. Recent research suggested that human thought runs at a leisurely 10 bits per second, so we might also need a brain overclocking upgrade to make the most of an advanced BCI's potential...

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Mindstab Thrull

"Only 12 hours" for now. I bet by the end of the decade, someone will have found a way to make it last at least 24 hours. I certainly hope so! By 2040 maybe a permanent fixture for people with mobility issues?

edzieba

Note that these constitute a more portable EEG net (e.g. for monitoring in activities where a tethered cap may risk being dislodged or limited by the cabling). This is not comparable to BCIs like Neuralink/Utah arrays/Michigan arrays etc. that interface directly with individual neurons. There is also no possibility of feeding back signal to the brain, unlike with invasive BCIs.

A Stoner

I really detest the idea that the human brain only functions at some ultra slow rate.

If in fact it does operate at a very slow rate, that slow rate is based upon the effect of having a fully fleshed out 'byte' being equivalent to 1024 bits or more.

The researchers who come up with these ridiculous tropes are trying to minimize humanity because they feel like their technology should be superior, but in every single way their technology has failed to truly shine.

Dementoss

Admin said: Recent research suggested that human thought runs at a leisurely 10 bits per second, so we might also need a brain overclocking upgrade to make the most of an advanced BCI's potential...

I think the claim that the human brain runs so slowly is ridiculous. How do they think an F1 driver could control an extremely fast F1 car, at the limit, whilst racing against others, if the brain was so slow?

A Stoner said: I really detest the idea that the human brain only functions at some ultra slow rate.

If in fact it does operate at a very slow rate, that slow rate is based upon the effect of having a fully fleshed out 'byte' being equivalent to 1024 bits or more.

The researchers who come up with these ridiculous tropes are trying to minimize humanity because they feel like their technology should be superior, but in every single way their technology has failed to truly shine.

PurpleSquid

I haven't done much searching, but from what I've been able to find, while the human brain runs quite slowly at the aforementioned 10 bits per second, sensory input runs quite a lot higher at billions of bits per second, which would explain how F1 drivers can control their cars.

Dementoss said: I think the claim that the human brain runs so slowly is ridiculous. How do they think an F1 driver could control an extremely fast F1 car, at the limit, whilst racing against others, if the brain was so slow?

A Stoner

It takes lots of decision making to put that sensory input to use. Your argument, like the researchers', makes no sense. I could believe that maybe we can only make 10 decisions per second, but that is a far cry from 10 bits.

PurpleSquid said: I haven't done much searching, but from what I've been able to find, while the human brain runs quite slowly at the aforementioned 10 bits per second, sensory input runs quite a lot higher at billions of bits per second, which would explain how F1 drivers can control their cars.

I mean, just typing out a single character of a word on a keyboard requires a minimum of 7 bits of information...

PurpleSquid

As I said, I did not do much research, nor was I arguing. I was pointing out the other facts, as people were focusing only on how the brain is 10 bits per second.

A Stoner said: It takes lots of decision making to put that sensory input to use. Your argument, like the researchers', makes no sense. I could believe that maybe we can only make 10 decisions per second, but that is a far cry from 10 bits.

I mean, just typing out a single character of a word on a keyboard requires a minimum of 7 bits of information...