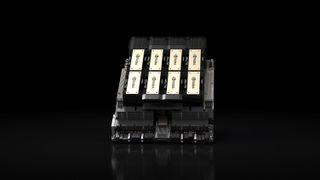

H100

Latest about H100

Underground China repair shops thrive servicing illicit Nvidia GPUs banned by export restrictions

By Anton Shilov published

Can't get a new one? Fix the old one!

Sam Altman says OpenAI will own 'well over 1 million GPUs' by the end of the year

By Hassam Nasir last updated

100,000,000 GPUs.

China plans 39 AI data centers with 115,000 restricted Nvidia Hopper GPUs

By Anton Shilov published

News Analysis: Some Chinese companies do not want cut-down H20 HGX parts.

Chinese AI firm DeepSeek reportedly using shell companies to try and evade U.S. chip restrictions

By Stephen Warwick published

Nvidia says DeepSeek lawfully acquired H800 chips, not H100

Malaysia investigates Chinese use of Nvidia-powered servers in the country

By Anton Shilov published

Malaysia’s trade ministry is investigating whether a Chinese firm’s use of Nvidia-equipped servers in a local data center violates domestic laws.

Elon Musk’s Nvidia-powered Colossus supercomputer faces pollution allegations from under‐reported power generators

By Jowi Morales published

xAI's Colossus supercomputer in Memphis, Tennessee, is accused of spewing excess pollution to get the power it needs to run all its GPUs.

Lawmakers demand answers from Nvidia over suspected GPU diversions to China, company denies any wrongdoing

By Anton Shilov published

The House Select Committee on the CCP seeks to know all of Nvidia's significant clients in Asia.

Massive 366% chip shipment surge to Malaysia amid increased Nvidia AI GPU smuggling curbs, ahead of looming sectoral tariffs

By Anton Shilov published

Data center hub or export control avoidance?
