Memory

Latest on memory

Micron to invest $2.17 billion to expand U.S.-based memory production

By Anton Shilov

Micron to make more memory for industrial, automotive, aerospace, and defense applications in the U.S.

China-made DDR5 memory chips use less advanced chipmaking tech

By Anton Shilov

CXMT's DDR5 memory is here, but the company uses outdated process technology and trails market leaders in both performance and cost.

Chinese memory maker could grab 15% of market in the coming years

By Anton Shilov

CXMT might already control 10% of the global DRAM market, according to Silicon Motion.

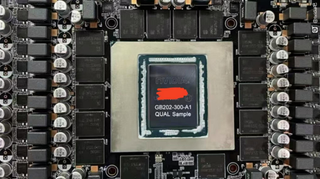

Nvidia's unreleased RTX 5090 pictured with huge GPU and 32GB of GDDR7

By Hassam Nasir

Assuming this leak is genuine, here's our first look at the RTX 5090's massive GB202 die.

Nvidia's RTX 5070 Ti and RTX 5070 specs leak, up to 16GB of GDDR7

By Hassam Nasir

Kopite has spilled the beans on Nvidia's RTX 5070 family, detailing CUDA core counts, VRAM capacities, and TDPs.

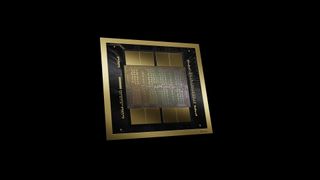

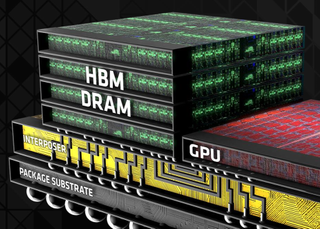

Micron's HBM4E heralds a new era of customized memory for AI GPUs and beyond

By Anton Shilov

Micron's HBM update: HBM4 is on track for 2026. HBM4E will introduce customizable base dies for higher performance and tailored features.

China's CXMT begins producing DDR5 memory — first China-made DDR5 sticks reportedly aimed at consumer PCs

By Anton Shilov

China-based CXMT has reportedly started to mass produce DDR5 memory and ship it to third-party module makers.

Nvidia RTX 5080 allegedly adopts faster 30 Gbps GDDR7 modules, delivering 960 GB/s of bandwidth

By Hassam Nasir

While the remaining RTX 50 lineup adopts 28 Gbps VRAM, Nvidia is rumored to equip the RTX 5080 with faster 30 Gbps modules.
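The 960 GB/s figure follows from standard peak-bandwidth arithmetic: per-pin data rate times memory bus width, divided by 8 bits per byte. The summary does not state the bus width. The 256-bit value below is inferred by working that equation backwards from the two reported numbers, and the 28 Gbps comparison simply assumes the same bus width for illustration. The function names are hypothetical, and the results are theoretical peaks, not measured throughput.

```python
def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s.

    Each bus pin moves data_rate_gbps gigabits per second; multiply by the
    number of pins (bus width) and divide by 8 to convert bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


def implied_bus_width_bits(bandwidth_gbs: float, data_rate_gbps: float) -> float:
    """Invert the formula above to recover the bus width from reported figures."""
    return bandwidth_gbs * 8 / data_rate_gbps


# Assumption: bus width is inferred from the reported 960 GB/s and 30 Gbps,
# not stated in the report itself.
width = implied_bus_width_bits(960, 30)
print(width)                            # 256.0

print(peak_bandwidth_gbs(30, 256))      # 960.0 (reported RTX 5080 figure)

# Hypothetical comparison: the same 256-bit bus with 28 Gbps modules.
print(peak_bandwidth_gbs(28, 256))      # 896.0
```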
