I Asked ChatGPT How to Build a PC. It Told Me to Break My CPU.

Some think that an AI can write articles, but my results say otherwise.

Can an AI help you learn how to perform important tech tasks such as building a PC? With all the hype around generative AI – using artificial intelligence to create new written or visual content – I wanted to find out. The results were eye-opening – and pin-bending.

With the launch of ChatGPT, a free chatbot built on OpenAI’s impressive GPT-3.5 language model, anyone can request and, in seconds, get a computer-written article on just about any topic. The main limitation is that the model was trained only on data up to 2021, so it can’t write about current events. Oh, and it can also be horribly and dangerously wrong, introducing factual errors that aren’t obvious to someone who isn’t familiar with the topic at hand.

Last week, we found out that a couple of sites, CNET and Bankrate, have been publishing AI-generated personal finance articles, though it’s unclear whether they are using GPT-3 or a different model to perform the task. These early attempts have been a mixed bag at best for readers; CNET had to issue some corrections after readers discovered some embarrassing factual errors.

We have no plans to use AI to write our articles on Tom’s Hardware, because we have way too much respect for both our audience and ourselves to turn the keys over to a bot. However, for science, I wanted to see just how close the technology is to mimicking a competent tech writer. So I asked ChatGPT to “write an article on how to build a PC.”

Here’s what I got back, word-for-word:

Building a PC can seem daunting, but with the right tools and knowledge it can be a fun and rewarding experience. Here's a step-by-step guide to help you build your own PC.

1. Gather your tools and components. You will need a screwdriver, thermal paste, and any other necessary tools that may come with your components. You will also need to purchase all the components for your PC, including the CPU, motherboard, RAM, storage, power supply, and case.

2. Assemble the CPU and cooler. Start by installing the CPU into the socket on the motherboard. Make sure it is properly aligned and gently press down until it clicks into place. Next, apply a small amount of thermal paste to the top of the CPU. Carefully place the cooler onto the CPU, making sure it is properly aligned, and secure it into place with the mounting bracket.

3. Install the RAM. Locate the RAM slots on the motherboard and press down on the tabs on either side of the slot to open it. Carefully insert the RAM into the slot, making sure it is properly aligned, and press down firmly until the tabs click back into place.

4. Install the motherboard into the case. Place the motherboard into the case and align it with the standoffs. Carefully screw the motherboard into place using the screws provided.

5. Install the power supply. Locate the power supply mount in the case and slide the power supply into place. Secure it with the screws provided.

6. Install storage and any additional components. This includes hard drives, SSDs, and any additional components you may have.

7. Connect the power supply cables. Connect the 24-pin ATX main power cable and 4/8-pin ATX 12V power cable to the motherboard. Connect the power cables for any additional components such as hard drives and SSDs.

8. Connect all the peripherals. Connect your keyboard, mouse, and monitor to the appropriate ports on the motherboard.

9. Power on the PC and test it. Press the power button and make sure the PC boots up and all components are functioning properly.

Building a PC can be a fun and rewarding experience, and it allows you to customize and upgrade your computer to your specific needs. With this guide and a little bit of patience, you'll be able to build your very own PC in no time!

At first blush, this copy doesn’t look that bad. It’s grammatically correct, formatted in a scannable way and looks like a human could have written it. But if you followed this advice, you’d end up with a non-working computer, because it’s missing key details that you really need in order to build a PC without breaking any parts.

Devil in the Details

The instructions on how to place the CPU into the motherboard illustrate everything that is wrong here.

“Start by installing the CPU into the socket on the motherboard. Make sure it is properly aligned and gently press down until it clicks into place.”

There’s some critical information missing here. As anyone who has built a PC in the past several years knows, CPU sockets have retaining arms that you must lift before placing the processor. If you attempt to “gently press down” without lifting that arm, you’ll bend the pins (if your processor has pins). Most motherboards come with a piece of protective plastic covering the socket that you need to take out. And most processors have a triangle symbol in one corner that you must match up with a triangle on the socket to make sure the chip is positioned correctly.

AMD and Intel sockets look somewhat different, but ChatGPT doesn’t mention either vendor. Similarly, some chips such as the Ryzen 7000 series have contact pads instead of pins on the bottom (with the pins in the socket), but ChatGPT never mentions pins or the lack thereof.

If we want to give ChatGPT’s text a very generous reading, we can interpret “make sure it is properly aligned” as implying that you should lift the retention arm, remove the socket cover and align the triangle on the chip with the one on the socket. But actual readers wouldn’t assume any of those things.

Most importantly, if you attempt to “press down” on a CPU until it clicks, you could very easily break it or the socket. An experienced PC builder would know all of these details, but a newbie — the target audience for a story like this — would not. If they followed this step to the letter, they could destroy their chip or their board (or both).

The CPU installation step is just the most egregious example of the AI assuming that the reader already knows what it's talking about and doesn’t need the fine details. It also assumes that the reader knows how to open and close the chassis, how to tell the various cables apart and attach them, and that they need to install an OS.

No Acknowledgement of Variations

Perhaps the most glaring problem with ChatGPT’s PC building instructions is the lack of any step that involves installing a graphics card. To be fair to the bot, it’s always possible that the person building the PC is going with an integrated GPU.

However, it’s quite likely that a PC builder would want discrete graphics and the instructions don’t even acknowledge this possibility. Step 6 says to “install storage and any additional components,” but I doubt most readers would get what they need from assuming that a GPU is one of the unnamed “additional components.” In our Tom’s Hardware tutorial on how to build a PC, we have an optional step on inserting a graphics card.

The step on installing RAM also makes a key assumption. “Locate the RAM slots on the motherboard and press down on the tabs on either side of the slot to open it,” the AI writes. However, many motherboards now just have a tab on one side rather than two. And readers might be helped if they knew that RAM modules have a notch on the bottom that aligns with a hump in the slot so you can’t put them in backwards.

The step on installing a cooler also doesn’t acknowledge that the steps will vary greatly depending on whether you are using an air cooler, an AIO or something else. It just says “carefully place the cooler onto the CPU, making sure it is properly aligned, and secure it into place with the mounting bracket.” Not all coolers have the same way of mounting.

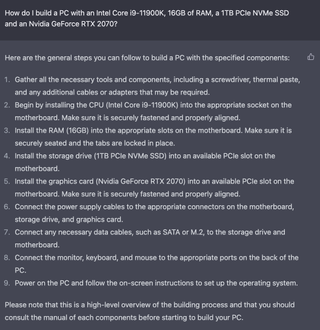

It’s fair to say that, if you ask ChatGPT or another AI for a more specific PC build story, you’ll get instructions that are a little more targeted. My colleagues asked ChatGPT how to build a computer with a Core i9-11900K, 16GB of RAM, a 1TB NVMe SSD and an RTX 2070 GPU and the result had a step which said, “Install the graphics card (Nvidia GeForce RTX 2070) into an available slot on the motherboard.” That's better, except you should use the top PCIe slot, not just any available slot.

The new text doesn’t tell you to push your CPU down until it clicks, but it still lacks key details you’d need about installing the processor, RAM and even the graphics card.

More importantly, if we wanted to use an AI to write an article that answers a relatively broad question like “how do I build a PC,” we would need it to cover the fact that you might have an AMD or Intel CPU, you might have discrete graphics (or not), and you might be using a 2.5-inch SATA drive or an M.2 NVMe SSD. The details matter a lot.

What’s Missing: Human Experience and Empathy

The biggest problem with ChatGPT’s advice is that the AI does not have the ability to put itself in the readers’ shoes and imagine itself going through the steps it prescribes. Every time I write an article — particularly a how-to — I start by asking myself, “Who is this for and what should I assume that they know before reading this?”

In the case of a PC building tutorial, I would assume that my target audience is made up of folks who have used computers and are familiar with what the main parts are (if they don’t know what a CPU is, then this task is too advanced for them). But I would not assume that they have ever opened up a computer and installed its components.

Then, I’d think about not only what task you need to accomplish for each step, but the tips and tricks I use to accomplish that task when I perform it. For example, I like to plug in my motherboard and CPU ATX power cables before I install my cooler or case fans, because I have found that the fans make it hard for me to access the power connectors with my thick fingers. An AI wouldn’t know that.

Perhaps more importantly, if you charge a human editor with editing / fact-checking an AI’s article, the human editor is going to miss these omissions, unless they are experts themselves. If I handed the article above to an editor who had never built a PC, they might think it was fine and approve it for publishing. And if the human editor is an expert and they have to do a ton of rewriting to make the AI’s article passable, what is the point of having an AI write an article in the first place?

I have no doubt that an AI like ChatGPT could eventually get better at anticipating a reader’s needs and writing articles to target those needs. I also have no doubt that machine learning can be used effectively today for other tasks, such as research. However, we have a long way to go before we can trust an AI to offer advice that is as helpful as what you’d get from a human who has lived experience, judgment and empathy.

Note: As with all of our op-eds, the opinions expressed here belong to the writer alone and not Tom's Hardware as a team.


-

PlaneInTheSky

"Oh, and it can also be horribly and dangerously wrong, introducing factual errors that aren’t obvious to someone who isn’t familiar with the topic at hand."

ChatGPT just strings together pieces of text it found online.

When you prod the algorithm and ask the right question, you quickly find out ChatGPT is just copy/pasting pieces of text.

ChatGPT was just cleverly marketed as "AI" by the company behind it. Just like Nvidia cleverly marketed simple machine learning from the 70s as "AI", even though these machine learning algorithms are dumb as a rock and a Tesla still can't properly self-park after 1 decade of machine learning.

-

baboma

The article's criticism lacks perspective, and has misleading gaffes of its own.

The 1st ChatGPT (AI hereafter) piece is clearly written as a high-level "overview" instruction, not a step-by-step build, which, as mentioned further in the piece, would need a specific parts list for more detailed instruction. Anyone with a modicum of common sense would see that.

The 2nd AI piece does show the limits of detail that the AI can convey, that one still can't use it as the only guide. But it is still useful as an overview guide. It should be pointed out that the given parts list is incomplete (a case is omitted, which can significantly alter install steps) and vague (types of RAM, type and make of motherboard, etc). The operative judgment here is GIGO.

As far as gaffes and blunders, the author deliberately exaggerated the AI missteps to sensationalize his piece. Nowhere in the AI response did it use the word 'smush' (which isn't a synonym of 'press'). By now, we all accept the use of exaggerated or embellished article titles (read: clickbait in varying degrees) for SEO purposes. We know that web sites like this depend on clicks for income, and we tolerate the practice to some extent. But if we are to point out foibles in AI-generated writing, then we should first look to our own foibles, lest we start throwing stones in glass houses.

Getting back to the AI's capabilities, I'll offer the aphorism: Ask not how well the dog can sing, but how it can sing at all. The author is nitpicking on what is a prototype. Even at its present form, the AI's guide is useful, as it forms a meaty framework that a human guide could flesh out with details, thereby shortening his workload.

ChatGPT (and its inevitable kin) will inevitably be improved. IMO, step-by-step how-tos are not a high bar to clear. They don't require any creative thought or reasoning.

-

PlaneInTheSky

baboma said: "As far as gaffes and blunders, the author deliberately exaggerated the AI missteps to sensationalize his piece."

If anything the author is too kind on ChatGPT.

When an algorithm contradicts itself by saying:

"France has not won the 2018 World Cup. The 2018 World Cup was won by France."

...maybe it's time to admit ChatGPT lacks any intelligence whatsoever, is completely unreliable, and all it does is copy/paste together pieces of text it found online.

https://i.postimg.cc/8C2RG8td/dgsgsgsgs.png

-

USAFRet

baboma said: "The article's criticism lacks perspective, and has misleading gaffes of its own. [...]"

The problem is... some of these AI-generated texts are out there in the wild.

"I have Problem X"

Long AI generated text block of how to fix "X".

Looks really good and knowledgeable. And to the person with the problem, they will go down a long road of "fixes" that may indeed cause more harm than good.

But the actual problem was Y. And a clueful human would have figured it out in the first couple of sentences of the original problem statement.

I've personally seen this in the last few days.

Classic X/Y.

-

baboma

PlaneInTheSky said: "When an algorithm contradicts itself by saying: 'France has not won the 2018 world cup. The 2018 world cup was won by France.' ...maybe it's time to admit ChatGPT lacks any intelligence whatsoever and is completely unreliable."

The issue in question is not whether ChatGPT has "intelligence," but whether it can adequately convey information in conversational form. It's the next step in user interface, when we can simply talk to our device as we would a human.

Even as a prototype, its capabilities have disrupted several industries, and will transform many more. We've all read about how colleges are changing their curriculum to ward off ChatGPT essays, as well as CNet's use of AI-generated articles. I can think of many more potentially affected industries. Customer service would be a prime candidate.

Yes, at this point they are error-prone and lacking in flair, etc. But AI capabilities can be iterated and improved upon, while human capabilities generally can't.

Rather than focusing on its present deficiencies, we should all be concerned with the future implications of just how many jobs will be replaced by AI. CNet's use of AI isn't an outlier, it's only a pioneer. As generative-AI inevitably improves, there'll be more and more web sites using AI to write articles, and the articles will be of a higher quality than the average (human-written) blog pieces today. That's not saying much.

-

USAFRet

baboma said: "The issue in question is not whether ChatGPT has 'intelligence,' but whether it can adequately convey information in conversational form. [...]"

Will it get better?

Yes.

Is it there yet?

Not even close.

-

DavidLejdar

IMO, so-called AI is way overrated (as is). For example, one could fill the databanks with an association along the lines of "AI is outperforming every human in having a smelly butt," and if the bot then gets asked "What are you perfect at?", the answer would probably be "I excel at having a smelly butt." That may be funny, but it hardly speaks of any intelligence when the bot doesn't know, or rather isn't aware, that it doesn't even have a butt to begin with.

And some school of psychology apparently argues that humans are no different. But even children usually don't end up listing whatever as their accomplishment just because someone else claimed it to be an accomplishment.

-

baboma

USAFRet said: "Will it get better? Yes. Is it there yet? Not even close."

Sure, one can play the skeptic and say that it's not something we need to worry about now. But as shown in the news, its capabilities are already something MANY people (educators, laid-off CNet journalists, et al) are worried about. Whether you are worried or not depends on what your job is.

Again, it's about much more than the potential loss of jobs.