AMD FreeSync Versus Nvidia G-Sync: Readers Choose

We set up camp at Newegg's Hybrid Center in City of Industry, California to test FreeSync against G-Sync in a day-long experiment involving our readers.

A Little About Our Participants

In addition to the base experiment we wanted to run, we also sought to collect some information from the folks in attendance. We know they’re hardware enthusiasts; they’re reading Tom’s Hardware, after all. But how much time do they spend gaming? Did they feel confident they could identify the machine with AMD’s technology inside? How about Nvidia’s? What are the specs of their current gaming PC? Did they have any self-acknowledged biases toward one company or the other, and if so, why?

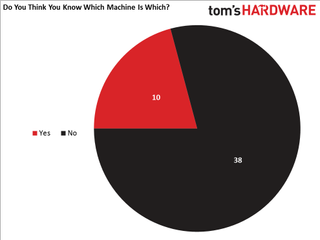

Right off the bat, we found it interesting that 10 of 48 respondents believed they knew which system was which. Of those 10, nine were correct, and they cited a variety of reasons. One respondent identified the 390X-equipped PC by the heat it was putting out; indeed, AMD’s representative had increased fan speed in Catalyst Control Center to help with stability under the tables we were using. Others cited smoothness issues, though in the games they mentioned, both FreeSync and G-Sync would have been operating within their target ranges. Perceived fluidity could instead have been affected by the game, driver optimizations or slight differences in performance (despite our best efforts to equalize frame rates through clock rate tuning). For what it’s worth, these judgment calls went both ways; neither Nvidia nor AMD was the sole beneficiary.

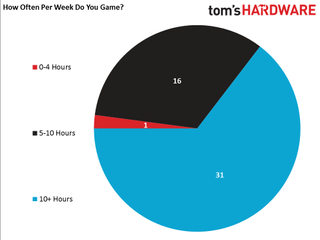

We didn’t qualify readers for our event based on any specific criteria aside from availability. Still, we wanted to know how much time the folks passing on their thoughts spent playing their favorite titles during an average week. You could argue that a seasoned gamer would have a heightened sensitivity to tearing or input lag. Or, there’s the counter-argument that a newbie might be less likely to draw conclusions based on preconceived notions.

That distinction didn’t end up mattering much, because nearly all of our participants were regular gamers. Thirty-one respondents reported playing for more than 10 hours a week. Sixteen played between five and 10 hours a week. Just one reported zero to four hours.

It’s a little more difficult to represent each respondent’s system specs visually, and I’m not even sure there’s a concrete correlation between someone’s primary gaming PC and their preference between the two technologies being compared in our experiment. Nevertheless, I was surprised at how many gamers are running high-end setups.

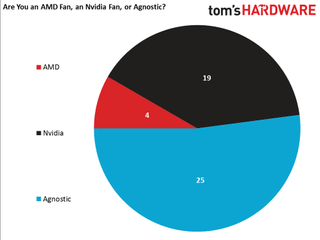

Although we took great pains to keep the hardware we were testing covered, ensuring personal biases didn’t affect our survey results, we still wanted to gauge the general predilections of our audience. Twenty-five respondents identified as agnostic, four claimed to be AMD fans and 19 were Nvidia fans.

As you might imagine, the reasons readers leaned one direction or the other varied greatly. Regardless of their answer, though, many of the folks who explained their choice said they favor whichever solution yields the best experience. Three of the four AMD fans specifically called out pricing. The Nvidia fans overwhelmingly cited driver stability as their primary motivator, though efficiency came up several times as well.


Comments

Wisecracker: With your wacky variables, and subsequent weak excuses, explanations and conclusions, this is not your best work.


NethJC: Both of these technologies are useless. How bout we start producing more high refresh panels? It's as simple as that. This whole adaptive sync garbage is exactly that: garbage.

I still have a Sony CPD-G500 which is nearing 20 years old and it still kicks the craps out of every LCD/LED panel I have seen.


AndrewJacksonZA: Thank you for the event and thank you for your write-up. Also, thank you for a great deal of transparency! :-)

Vlad Rose: So, 38% of your test group are Nvidia fanboys and 8% are AMD fanboys. Wouldn't you think the results would be skewed as to which technology is better? G-Sync may very well be better, but that 30% bias makes this article irrelevant as a result.

jkrui01: as always on toms, nvidia and intel win, why bother making these stupid tests, just review nvidia and intel hardware only, or better still, just post the pics and a "buy now" link on the page.

loki1944, quoting NethJC:

> Both of these technologies are useless. How bout we start producing more high refresh panels? It's as simple as that. This whole adaptive sync garbage is exactly that: garbage.
>
> I still have a Sony CPD-G500 which is nearing 20 years old and it still kicks the craps out of every LCD/LED panel I have seen.

Gsync is way overhyped; I can't even tell the difference with my ROG Swift; games definitely do not feel any smoother with Gsync on. I'm willing to bet Freesync is the same way.

Traciatim: It seems with these hardware combinations, and even through the mess of the problems with the tests, that NVidia's G-Sync provides an obviously better user experience. It's really unfortunate about the costs and other drawbacks (like only working in full screen).

I would really like to see this tech applied to larger panels like a TV. It would be interesting considering films being shot at 24, 29.97, 30, 48 and 60FPS from different sources all being able to be displayed at their native frame rate with no judder or frame pacing tools (like 120Hz frame interpolation) that change the image.

cats_Paw: "Our community members in attendance now know what they were playing on."

That's when you lost my interest.

It is proven that if you give a person this information they will be affected by it, and that unfortunately defeats the whole purpose of using the subjective opinions of test subjects to evaluate real-life performance rather than scientific facts (such as frames per second).

Too bad, since the article seemed to be very interesting.

omgBlur, quoting loki1944:

> Gsync is way overhyped; I can't even tell the difference with my ROG Swift; games definitely do not feel any smoother with Gsync on. I'm willing to bet Freesync is the same way.

Speak for yourself, I invested in the ROG Swift and noticed the difference. This technology allows me to run higher graphics settings on a single card @ 1440p, and while the fps bounces around worse than a Mexican jumping bean, it all looks buttery smooth. Playing AAA games, I'm usually in the 80s, but when the game gets going with explosions and particle effects, it bounces to as low as 40. No stuttering, no screen tearing.