TensorFlow Lite Brings Low-Latency Inference To Mobile Devices

Google announced that TensorFlow Lite, a machine learning software framework and the successor to TensorFlow Mobile, is now available as a preview to developers. The purpose of the TensorFlow Lite framework is to bring lower-latency inference to mobile and embedded devices and to take advantage of the increasingly common machine learning chips now appearing in small devices.

On-Device Machine Learning

More and more devices are starting to do machine learning inference locally rather than in the cloud. This has multiple advantages compared to cloud inference, including:

- Latency: You don’t need to send a request over a network connection and wait for a response. This can be critical for video applications that process successive frames coming from a camera.
- Availability: The application runs even when outside of network coverage.
- Speed: New hardware designed specifically for neural network processing provides significantly faster computation than a general-purpose CPU alone.
- Privacy: The data does not leave the device.
- Cost: No server farm is needed when all the computations are performed on the device.
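To make the latency point concrete, here is a minimal back-of-the-envelope sketch in Python. All of the timing values are assumptions chosen for illustration, not measurements from Google or from this article, and the model assumes each frame is fully processed before the next one is handled (no pipelining).

```python
# Rough frame-budget arithmetic for camera-based inference.
# Every timing constant below is an ASSUMED, illustrative value.

def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / fps


def keeps_up(per_frame_latency_ms: float, fps: float) -> bool:
    """True if one frame can be handled within the frame budget."""
    return per_frame_latency_ms <= frame_budget_ms(fps)


CAMERA_FPS = 30.0
ON_DEVICE_INFERENCE_MS = 20.0   # assumed local inference time
NETWORK_ROUND_TRIP_MS = 100.0   # assumed mobile network round trip
CLOUD_INFERENCE_MS = 5.0        # assumed server-side inference time

budget = frame_budget_ms(CAMERA_FPS)
local_ok = keeps_up(ON_DEVICE_INFERENCE_MS, CAMERA_FPS)
cloud_ok = keeps_up(NETWORK_ROUND_TRIP_MS + CLOUD_INFERENCE_MS, CAMERA_FPS)

print(f"frame budget: {budget:.1f} ms")
print(f"on-device keeps up: {local_ok}")
print(f"cloud keeps up: {cloud_ok}")
```

Under these assumed numbers, a 30 fps camera leaves about 33 ms per frame, so even a very fast server cannot keep up once the network round trip is added, while a slower on-device model can.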

Although the original TensorFlow framework could also be used on mobile devices, it was not designed with mobile or Internet of Things (IoT) devices in mind, so Google created the lighter TensorFlow Lite software framework. The framework supports Android and iOS primarily, but Google developers said that it should be easy to use it with Linux on embedded devices, too.

TensorFlow Lite Architecture

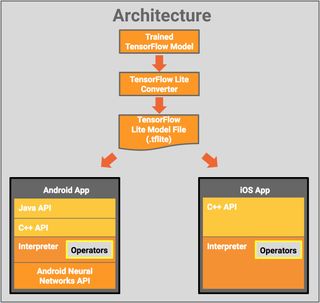

The individual components of the TensorFlow Lite architecture include:

- TensorFlow Model: A trained TensorFlow model saved on disk.
- TensorFlow Lite Converter: A program that converts the model to the TensorFlow Lite file format.
- TensorFlow Lite Model File: A model file format based on FlatBuffers, that has been optimized for maximum speed and minimum size.

The TensorFlow Lite model file is then deployed within a mobile app, where it is handled by three components: a Java API, which is a wrapper around the C++ API; a C++ API, which loads the model file and invokes the interpreter; and the interpreter, which executes the model and supports selective operator loading. Without any operators, the interpreter is only 70KB; with all the operators loaded, it is 300KB. The fully loaded size is a 5x reduction compared to TensorFlow Mobile.
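The idea behind selective operator loading can be illustrated with a toy sketch in Python. This is not the TensorFlow Lite API: `ToyInterpreter`, `ALL_KERNELS`, the operator names, and the list-of-steps "model" are all hypothetical stand-ins, and real kernels operate on tensors rather than single numbers. The point is only that an interpreter can register the operators a given model needs and leave the rest out.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

# Hypothetical kernel library: everything an interpreter COULD load.
ALL_KERNELS: Dict[str, Callable[..., float]] = {
    "ADD": lambda x, y: x + y,
    "MUL": lambda x, y: x * y,
    "RELU": lambda x: max(0.0, x),
    "NEG": lambda x: -x,
}

# A toy "model": a sequence of (operator name, optional constant operand).
Step = Tuple[str, Optional[float]]


class ToyInterpreter:
    """Runs a toy model using only the operators that were registered."""

    def __init__(self) -> None:
        self._kernels: Dict[str, Callable[..., float]] = {}

    def register(self, name: str) -> None:
        # Pull a single kernel out of the full library.
        self._kernels[name] = ALL_KERNELS[name]

    def loaded_ops(self) -> List[str]:
        return sorted(self._kernels)

    def run(self, model: List[Step], value: float) -> float:
        for op, operand in model:
            if op not in self._kernels:
                raise KeyError(f"operator {op} was not loaded")
            kernel = self._kernels[op]
            value = kernel(value) if operand is None else kernel(value, operand)
        return value


def ops_used(model: List[Step]) -> Set[str]:
    """Collect the operator names a model actually references."""
    return {op for op, _ in model}


model: List[Step] = [("MUL", 2.0), ("ADD", -5.0), ("RELU", None)]

interp = ToyInterpreter()
for name in sorted(ops_used(model)):
    interp.register(name)  # NEG is never registered for this model

result = interp.run(model, 4.0)
print(interp.loaded_ops(), result)
```

In this sketch the unused `NEG` kernel is never registered, which mirrors, in a very simplified way, how an interpreter that ships only the operators it needs can stay closer to its 70KB base size than to the 300KB full build.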

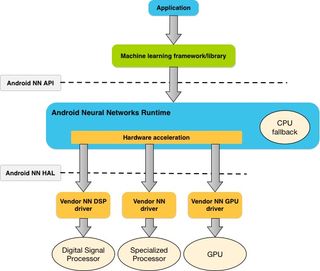

Starting with Android 8.1, the interpreter can also use the Android Neural Networks API (NNAPI) on devices that come with machine learning hardware accelerators, such as Google’s latest Pixel 2 smartphone.

Mobile-Optimized Models

Google said that TensorFlow Lite already supports a few mobile-optimized machine learning models, such as MobileNet and Inception V3, two vision models developers can use to identify thousands of different objects in their apps, as well as Smart Reply, an on-device conversational model that can provide smart replies to incoming chat messages.

Deprecation of TensorFlow Mobile

Google said that developers should continue using TensorFlow Mobile in production for now, because TensorFlow Lite is still being tested. Eventually, however, TensorFlow Lite will completely replace TensorFlow Mobile, so developers should plan on migrating.
