AI Swipes Data By Listening to Keyboard Keystrokes with 95% Accuracy

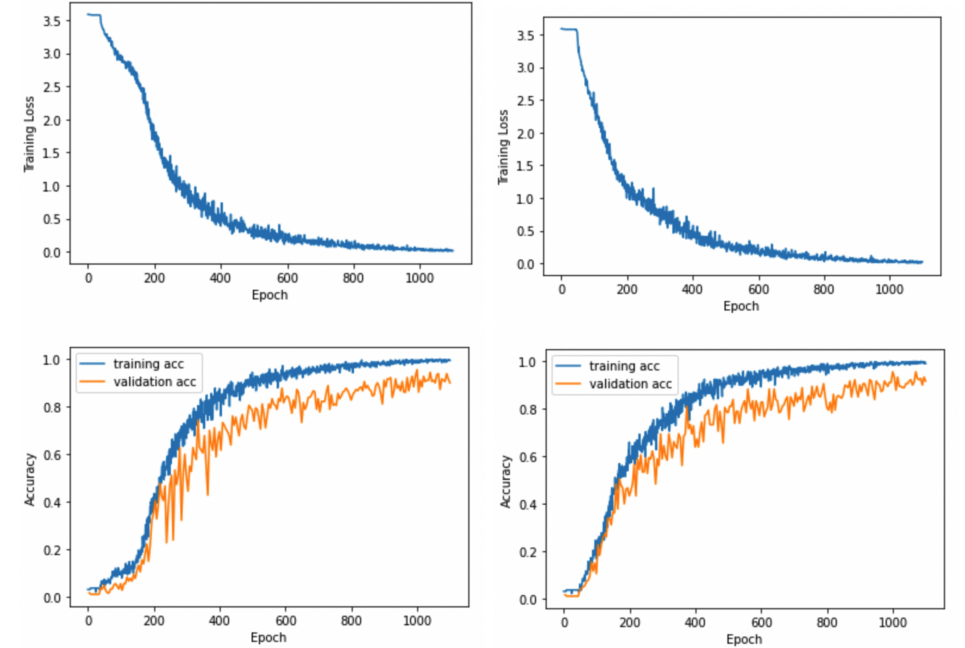

A team of researchers, Joshua Harrison, Ehsan Toreini and Maryam Mehrnezhad, has published a paper on Cornell University's arXiv preprint server detailing their work training AI to interpret keyboard input from audio alone. By recording keystrokes to train the model, they were able to predict what was typed on the keyboard with up to 95% accuracy. Accuracy dropped only to 93% when the training keystrokes were recorded over Zoom.

The system doesn’t work with just any random keyboard; it must be trained on a specific keyboard, using recordings labeled with the character each keystroke corresponds to. The keystroke audio can be captured locally with a microphone or remotely through an application like Zoom.
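Why the model needs labeled recordings from the specific keyboard can be illustrated with a deliberately simple classifier. The researchers used a deep learning model; this sketch swaps in a much simpler nearest-centroid classifier, and the two-number "feature vectors" stand in for whatever acoustic features a real system would extract. The names `train_centroids` and `classify`, and all values, are hypothetical:

```python
import math
from collections import defaultdict


def train_centroids(samples):
    """Average the (hypothetical) feature vectors recorded for each labeled key."""
    sums = {}
    counts = defaultdict(int)
    for features, key in samples:
        if key not in sums:
            sums[key] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[key][i] += value
        counts[key] += 1
    return {key: [v / counts[key] for v in total] for key, total in sums.items()}


def classify(centroids, features):
    """Return the key whose centroid is closest (Euclidean distance) to the features."""
    return min(centroids, key=lambda k: math.dist(centroids[k], features))


# Illustrative training data: each tuple is (feature vector, key that was pressed).
training = [
    ([1.0, 0.1], "a"),
    ([0.9, 0.2], "a"),
    ([0.1, 1.0], "s"),
    ([0.2, 0.8], "s"),
]
centroids = train_centroids(training)
print(classify(centroids, [0.95, 0.15]))  # a
```

Each key's "fingerprint" here is learned only from sounds labeled with that key on that keyboard, which mirrors the article's point that the system has to be trained per keyboard.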

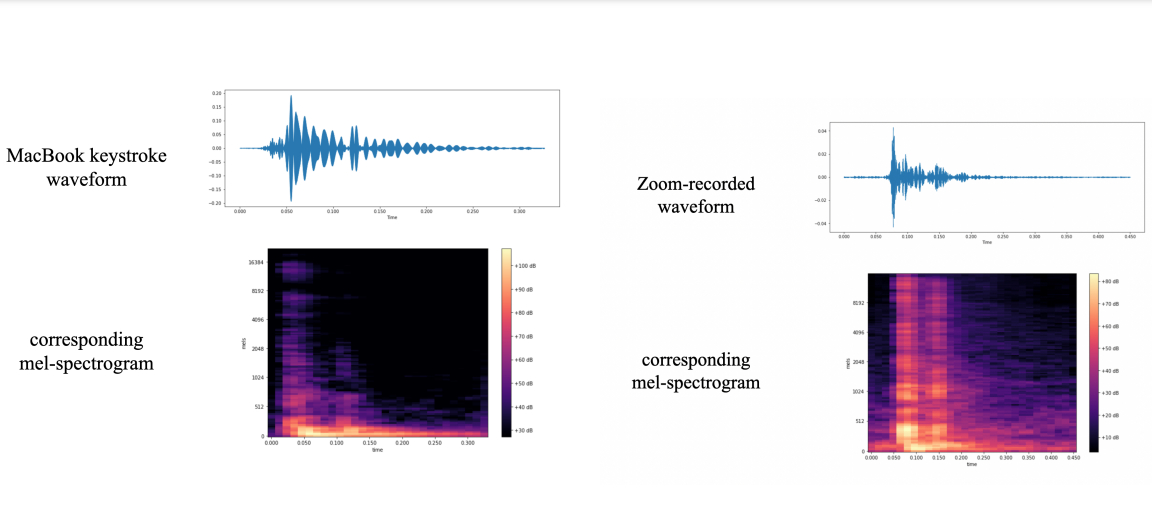

In the project demonstration, the team used a MacBook Pro to test the concept. They pressed 36 individual keys 25 times apiece, and these recordings formed the basis for the AI model to learn which keystroke sound belongs to which character. The recorded waveforms contained enough subtle differences for the model to recognize each key with a startling degree of accuracy.
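Before any classification, a recording has to be cut into individual keystrokes. A common, simple approach (assumed here; not necessarily the team's exact pipeline) is to flag short frames whose energy jumps above a threshold. With 36 keys pressed 25 times each, a step like this would yield 36 × 25 = 900 labeled clips. The function `detect_keystrokes` and its default parameters are hypothetical:

```python
def detect_keystrokes(samples, frame_len=256, threshold=0.01, min_gap_frames=4):
    """Return sample offsets where a keystroke appears to start.

    A frame counts as "loud" when its mean squared amplitude exceeds
    `threshold`. The first loud frame after at least `min_gap_frames`
    quiet frames is treated as the onset of a new keystroke.
    """
    onsets = []
    quiet_run = min_gap_frames  # allow an onset in the very first frame
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energy = sum(x * x for x in frame) / frame_len
        if energy > threshold:
            if quiet_run >= min_gap_frames:
                onsets.append(start)
            quiet_run = 0
        else:
            quiet_run += 1
    return onsets


# Synthetic signal: silence, a loud 512-sample burst, silence, a shorter burst, silence.
signal = [0.0] * 1024 + [0.5] * 512 + [0.0] * 2048 + [-0.4] * 256 + [0.0] * 1024
print(detect_keystrokes(signal))  # [1024, 3584]
```

The `min_gap_frames` check keeps one long, loud press from being counted as several keystrokes.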

This type of potential attack isn’t without weaknesses. The team said there are ways to reduce the system's accuracy, including simply changing the way you type: touch typing reduced keystroke recognition accuracy from 64% to 40%. Software could also be used to muddy the input with white noise or fake keystrokes.
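The white-noise countermeasure can be sketched as mixing noise into the audio at a chosen signal-to-noise ratio (SNR), for example into the outgoing microphone stream before a call app sends it. This is an illustrative assumption about how such software might work, not a tool the researchers describe; `add_white_noise` is hypothetical. The noise power follows from the SNR definition, noise power = signal power / 10^(SNR/10), so a lower SNR means heavier masking:

```python
import math
import random


def add_white_noise(samples, snr_db, seed=None):
    """Mix Gaussian white noise into `samples` at roughly `snr_db` dB SNR."""
    if not samples:
        return []
    rng = random.Random(seed)
    signal_power = sum(x * x for x in samples) / len(samples)
    # SNR in dB = 10 * log10(signal_power / noise_power), solved for noise_power.
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise_std = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, noise_std) for x in samples]


# One second of a 440 Hz tone at 48 kHz, masked with noise as loud as the tone (0 dB).
tone = [math.sin(2 * math.pi * 440 * n / 48_000) for n in range(48_000)]
masked = add_white_noise(tone, snr_db=0, seed=1)
```

Whether noise alone defeats a trained model would depend on the model and the noise level; injecting fake keystroke sounds is the other option the researchers mention.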

This type of cyberattack works best against mechanical keyboards with a loud, audible click, but it isn’t limited to mechanical switches. A membrane keyboard still produces enough sound to train the AI model, so your best bet for avoiding this type of attack is a software-side countermeasure rather than swapping your clicky mechanical keyboard for a quiet one.

If you want to read more about the team’s findings in depth, check out the official research paper (PDF), which details the study's protocols and everything the team found along the way.


Ash Hill is a contributing writer for Tom's Hardware with a wealth of experience in hobby electronics, 3D printing and PCs. She manages the Pi projects of the month and much of our daily Raspberry Pi reporting while also finding the best coupons and deals on all tech.

-

bit_user I always imagined something like this should be possible. I wonder if the training was also specific to the typist, or how much that would benefit accuracy if it were.

My Kinesis keyboard has a "key click" feature, which emits an electronic clicking sound when each key is pressed. I'd imagine that would mask part of the key sound, assuming its latency is low enough. I always turn it off, but I guess this would be an argument for leaving it on.

Sippincider

> The system doesn’t work with just any random keyboard, it must be trained to a specific keyboard with references for what character each keystroke corresponds to.

Where's my stash of old Apple ADB keyboards when I need it! :)

But that is security through obscurity, so maybe put a piece of tape over the microphone when you don't need it.

bit_user

Sippincider said:
> But that is security through obscurity, so maybe a piece of tape over the microphone when not needing it.

Imagine someone eavesdrops on your Zoom or MS Teams meeting while you're sharing your desktop and typing something. Or maybe they gain access to a recording of one that was made. Then they can both:

- See what you're typing, and therefore infer the keystrokes you're making.
- Hear the sound of you typing.

By using such a recording, they can train their model to learn what your typing sounds like. Then, if you happen to log in to a website or something while you're on a call, they can use it to work out (or at least narrow the search space for) your password.
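How even an imperfect per-keystroke prediction "narrows the search space" can be sketched like this. Assume (hypothetically) the model outputs a few likely characters per keystroke, each with a probability; an attacker then only needs to try combinations of those candidates, most likely first. For a 4-character string over the 36 keys used in the study there are 36^4 = 1,679,616 possibilities, while two candidates per keystroke leave only 2^4 = 16. The function `rank_guesses` and the probabilities are illustrative:

```python
import heapq
import itertools
import math


def rank_guesses(candidates, top_n=5):
    """Rank whole-string guesses from per-keystroke predictions.

    `candidates` holds one dict per keystroke, mapping each plausible
    character to the model's (hypothetical) probability for it. Keystrokes
    are treated as independent, so a guess scores the sum of its log-probabilities.
    """
    scored = []
    for combo in itertools.product(*(c.items() for c in candidates)):
        guess = "".join(ch for ch, _ in combo)
        score = sum(math.log(p) for _, p in combo)
        scored.append((score, guess))
    return [guess for _, guess in heapq.nlargest(top_n, scored)]


predictions = [
    {"h": 0.7, "j": 0.3},
    {"u": 0.6, "i": 0.4},
    {"n": 0.9, "m": 0.1},
    {"t": 0.8, "r": 0.2},
]
print(rank_guesses(predictions, top_n=3))  # ['hunt', 'hint', 'junt']
```

Enumerating every combination grows exponentially with password length, so a real attacker would more likely use something like a beam search, but the principle is the same.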

Therefore, I'd suggest a good countermeasure most people could take is to mute their microphone when typing any sort of login or password. Perhaps, if the OS had a hook where apps could tell it they were authenticating, it could even automatically mute your microphone and flash up a notice to let you know it's doing so.
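The proposed "authenticating" hook does not exist as described, but its intended behavior can be sketched as a context manager: mute on entry, show a notice, and restore the previous microphone state on exit even if something fails. `Microphone` and `authenticating` are hypothetical stand-ins; a real implementation would go through the operating system's audio APIs:

```python
from contextlib import contextmanager


class Microphone:
    """Hypothetical stand-in for an OS-level microphone control."""

    def __init__(self):
        self.muted = False


@contextmanager
def authenticating(mic, notify=print):
    """Mute `mic` while credentials are typed, then restore its prior state."""
    was_muted = mic.muted
    mic.muted = True
    notify("Microphone muted while credentials are entered")
    try:
        yield mic
    finally:
        # Restore whatever state the user had before, even on errors.
        mic.muted = was_muted


mic = Microphone()
with authenticating(mic):
    pass  # the password would be typed here, with the microphone muted
print(mic.muted)  # False
```

Restoring the previous state, rather than always unmuting, avoids turning on a microphone the user had deliberately muted.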

rluker5 My desktops only have mics when I plug a camera in or use a headset with one. But my phone certainly has one, and if I used Chrome on my desktop, Google would likely be able to train a model to match what I'm typing to the corresponding sound if my phone were near my keyboard, which it sometimes is for two-step verification.

Not that Google would use this for anything more nefarious than their data harvesting, but now that this article is out, they know.

Guess I'll keep using Edge and Bing and keep my phone unlinked from Windows. It takes zero effort, since I do that already.

AI is going to turn a lot of people into conspiracy theory kooks.

Timmy!

bit_user said:
> Perhaps, if the OS had a hook where apps could tell it they were authenticating, it could even automatically mute your microphone and flash up a notice to let you know it's doing so.

I'm not a techie, but wouldn't it be useful to hackers for the OS or app to tell snoopers that login and password credentials were being entered right now? You could probably imagine scenarios where those credentials could be snooped on via this method.

PEnns Sorry to deflate the tons of hours of this superfluous research (I am sure the military is drooling over it though, as usual):

But this vapid "breakthrough" could be mitigated when the target of the security breach is using a silent or on-screen keyboard!! Listen to this, AI!!

USAFRet

PEnns said:
> But this vapid "breakthrough" could be mitigated when the target of the security breach is using a silent or on-screen keyboard!! Listen to this, AI!!

Yes, that might mitigate this particular exploit.

An on-screen keyboard could be compromised in other ways.

Alvar "Miles" Udell Think about this from another angle: Planting a tiny sound recorder for retrieval and decoding later. Depending on how easy it is for the public to gain access to that AI, it would be interesting to see if this would work on, say, physical keypads. Seems to me this kind of attack would be far more effective on keypad locks than computer keyboards.

bit_user

rluker5 said:
> Not that Google would use this for anything more nefarious than their data harvesting, but now that this article is out they know.

Once the infrastructure for mass surveillance exists, it can be hijacked by a wide range of actors who don't share the same morals or policies, or who aren't subject to the same legal exposure, as the ones who built it. Not to sound paranoid, or anything.

I'm reminded of how, years ago, someone obtained Alexa recordings of themselves from extended periods when Alexa wasn't supposed to be listening. The user might have obtained the recordings through a formal request of some sort, but the problem is that the recordings existed at all. Now, what if a hacker gained access to them?

bit_user

Timmy! said:
> Not being a techie, wouldn't it be useful to hackers for the OS/app to tell snoopers that right now login and password credentials were being entered? You could probably imagine scenarios where those credentials could be snooped on via this method.

Yeah, I thought about the potential for malware to hijack the hook, but Windows already has some kind of Single Sign-On infrastructure, which enables applications (and I think even websites) to use Microsoft for user authentication. For instance, when I visit my corporate internal web portal from my work PC, it knows who I am and authenticates me automatically. GitHub Enterprise does something similar, never requiring me to enter my credentials manually. The same goes for certain other Microsoft apps and services I use (e.g. Outlook/Exchange, Visual Studio/TFS).

So, if a number of applications already have tie-ins with the OS, then what I'm proposing isn't exactly new. BTW, I'm not proposing the app use Windows to authenticate you, but rather just a hook to tell Windows to "lock down". IMO, the question isn't whether it would introduce new opportunities for hackers, but whether the positives outweigh the negatives.

It's all very hypothetical, though. I'm sure Microsoft would prefer that the entire world just use its authentication services.