Nvidia RTX 4090 Reveal: Watch the GeForce Beyond Live Blog Here

Here come the Ada Lovelace architecture and RTX 4090

Nvidia is set to kick off its GTC 2022 fall keynote with a special "GeForce Beyond" event, where all indications are that it will reveal the RTX 4090. The program is set to start at 8am PDT / 11am EDT, and you can watch the Nvidia stream here. We'll be covering the keynote live, discussing major announcements and reveals as they come.

As this is part of the GPU Technology Conference, we can expect a range of topics outside of the new RTX 4090, and those will probably take up the majority of the keynote. Omniverse and Metaverse will certainly make an appearance, along with self-driving cars, digital twins, and all the other regular buzzwords.

But we're mostly interested in the new GeForce cards. Will Nvidia also reveal GPUs beyond the RTX 4090? Place your bets, and note that AMD has scheduled its RDNA 3 reveal for November 3, so the clock is certainly ticking on new graphics cards. And if you have comments or questions, hit me up on Twitter: @jarredwalton.

Get your popcorn ready, fire up the nuclear generator, and prepare for what will hopefully be a series of exciting announcements. Or at least one big reveal for the gaming crowd. We're ten minutes away from Jensen taking the stage, or at least pushing the button that will make the YouTube video viewable.

All the rumors and speculation about GeForce RTX 40-series and Ada Lovelace GPUs are about to be put to bed. How close were the rumors and leaks? It's almost time to find out.

Jensen takes the virtual stage, talking about Nvidia RTX, AI, and Omniverse. First up is a tease of RacerX with a fully simulated environment, "running on one single GPU." Could that GPU be an RTX 4090? Hmmm...

RacerX gallery. When will we get to play this for ourselves? It would be pretty awesome to have an actual playable demo for the new GPU launch. I can't remember the last time that happened. Does Dagoth Moor Zoological Gardens count?

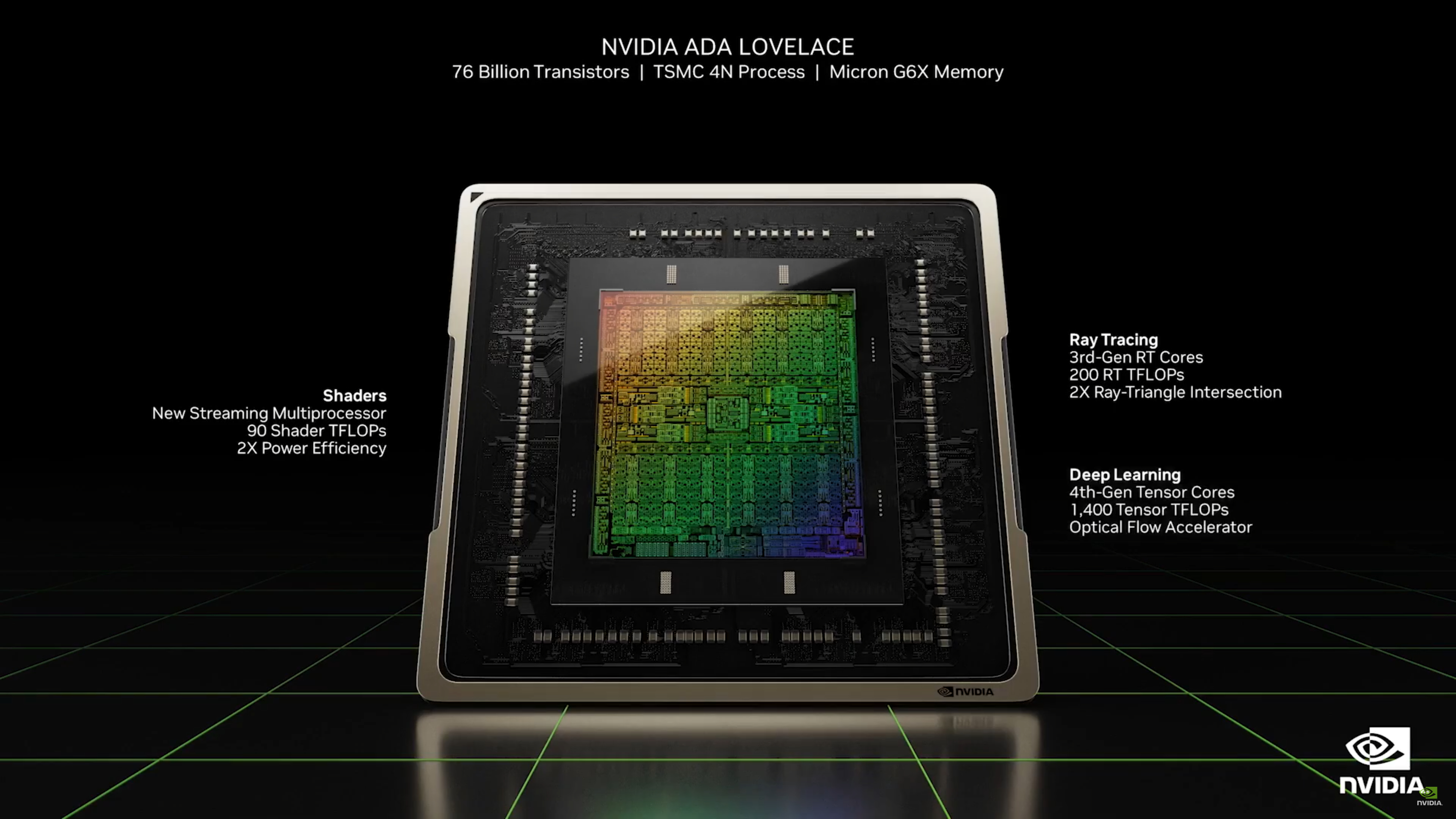

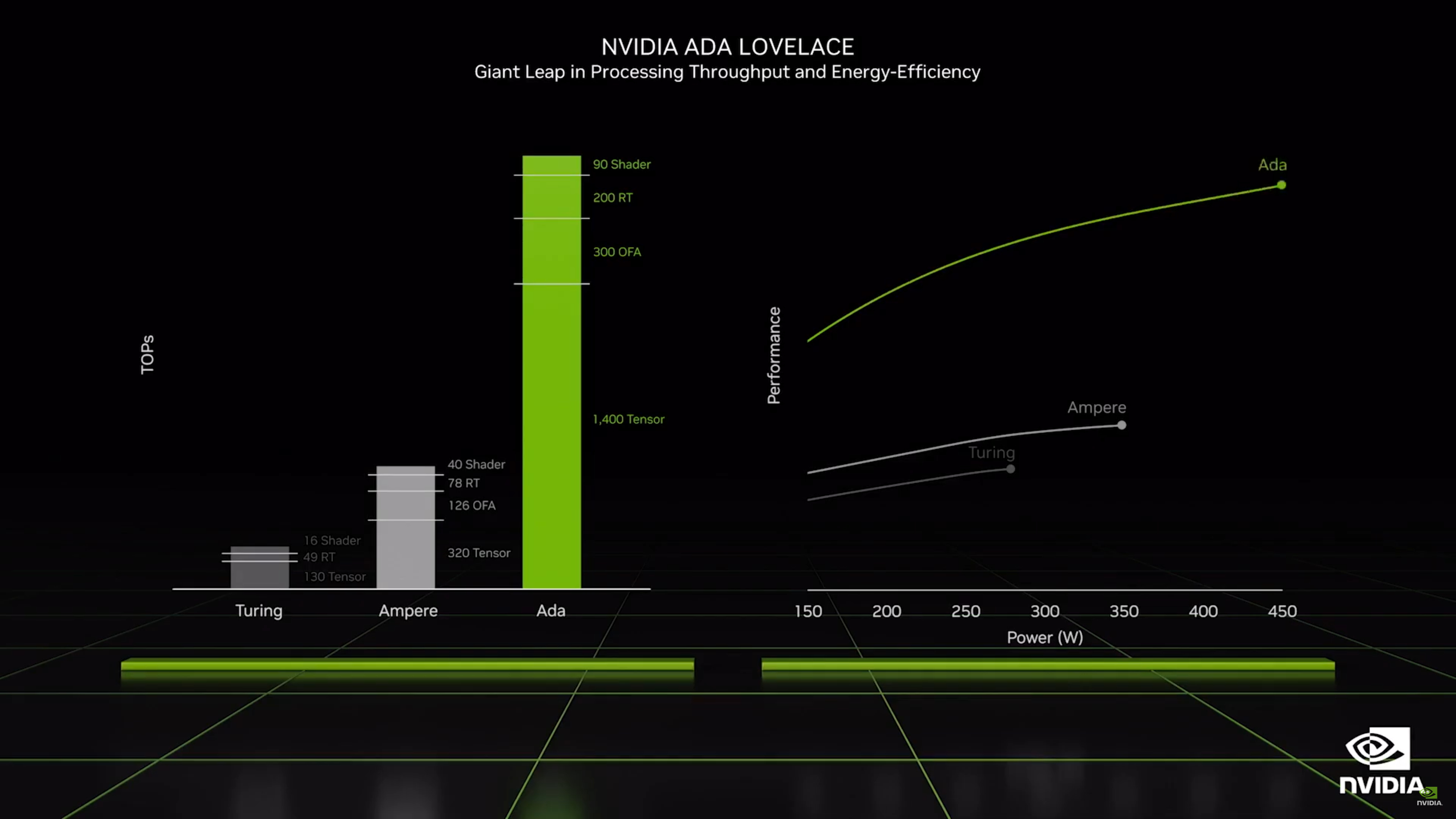

Behold, the Ada Lovelace GPU! 76 billion transistors, 18,000 CUDA cores, over 70% more than the previous Ampere generation. It includes a 90-teraflops streaming multiprocessor and is built on TSMC 4N. It also includes Shader Execution Reordering (SER), which apparently can boost ray tracing performance by 2-3 fold.

Those are some impressive specifications! Over 2X the ray/triangle intersection performance for the 3rd Gen RT Cores, double the efficiency for the CUDA cores, 4th Gen Tensor cores with 1,400 Tensor teraflops (FP16), and up to 4,000 teraflops of FP8.
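As a rough sanity check on those figures, peak FP32 throughput is conventionally quoted as CUDA cores × 2 operations per clock (one fused multiply-add) × clock speed. Here's a minimal sketch that runs that formula backwards, assuming the keynote's rounded numbers of 18,000 CUDA cores and 90 teraflops. The function name is ours for illustration, not anything from Nvidia.

```python
def implied_clock_ghz(shader_tflops: float, cuda_cores: int) -> float:
    """Clock speed in GHz implied by a quoted peak FP32 figure.

    Assumes each CUDA core retires one fused multiply-add (2 FLOPs)
    per clock, the usual convention behind peak teraflops numbers.
    """
    flops_per_clock = 2 * cuda_cores
    return shader_tflops * 1e12 / flops_per_clock / 1e9


# Rounded keynote figures for the full Ada GPU (assumption: both apply
# to the same chip configuration).
ada_clock = implied_clock_ghz(shader_tflops=90, cuda_cores=18_000)
print(f"Implied Ada clock: {ada_clock:.2f} GHz")
```

With those inputs the implied clock lands at about 2.5 GHz. Treat that as a ballpark, since both keynote numbers are rounded.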

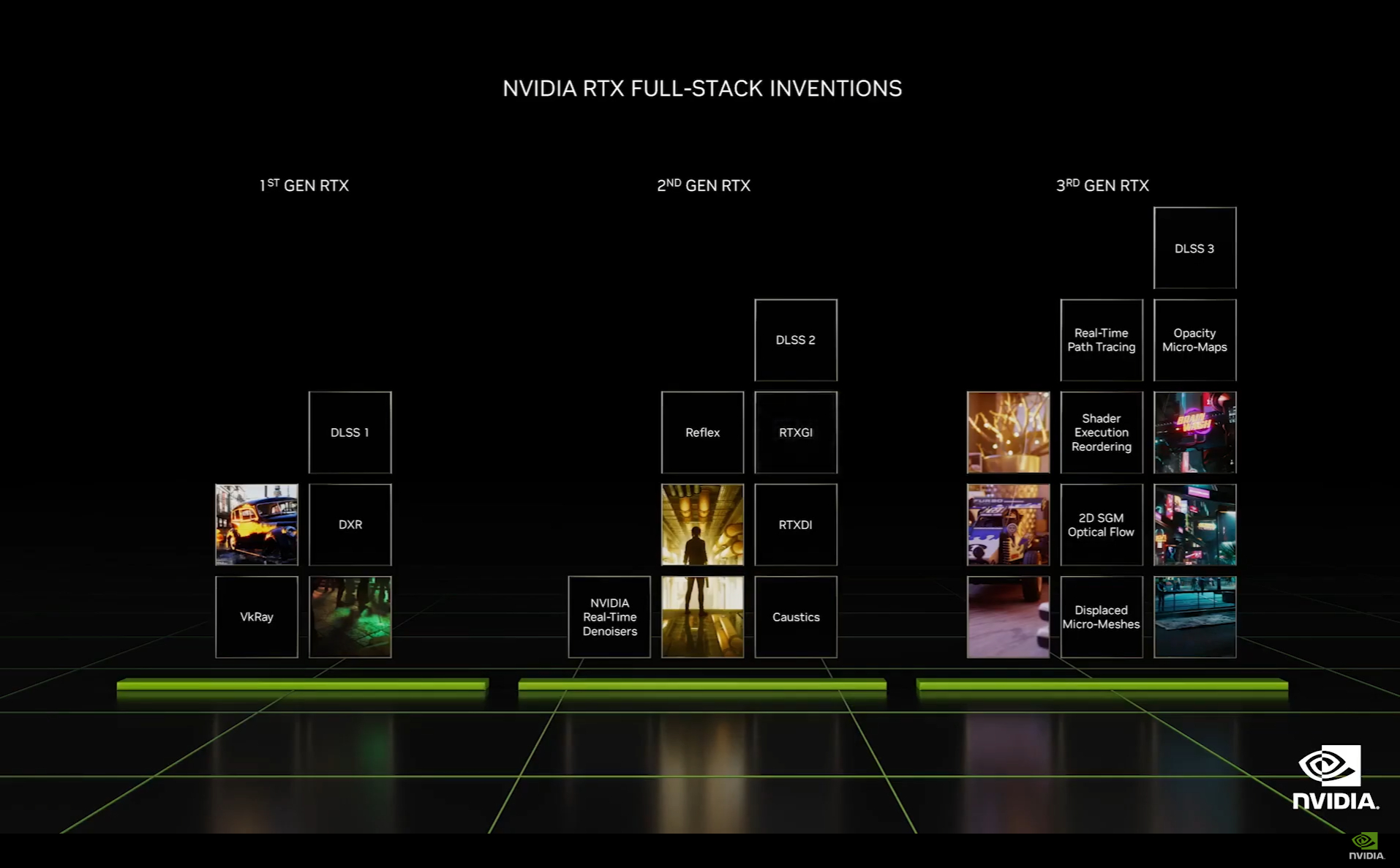

Overview of Nvidia "inventions" on graphics.

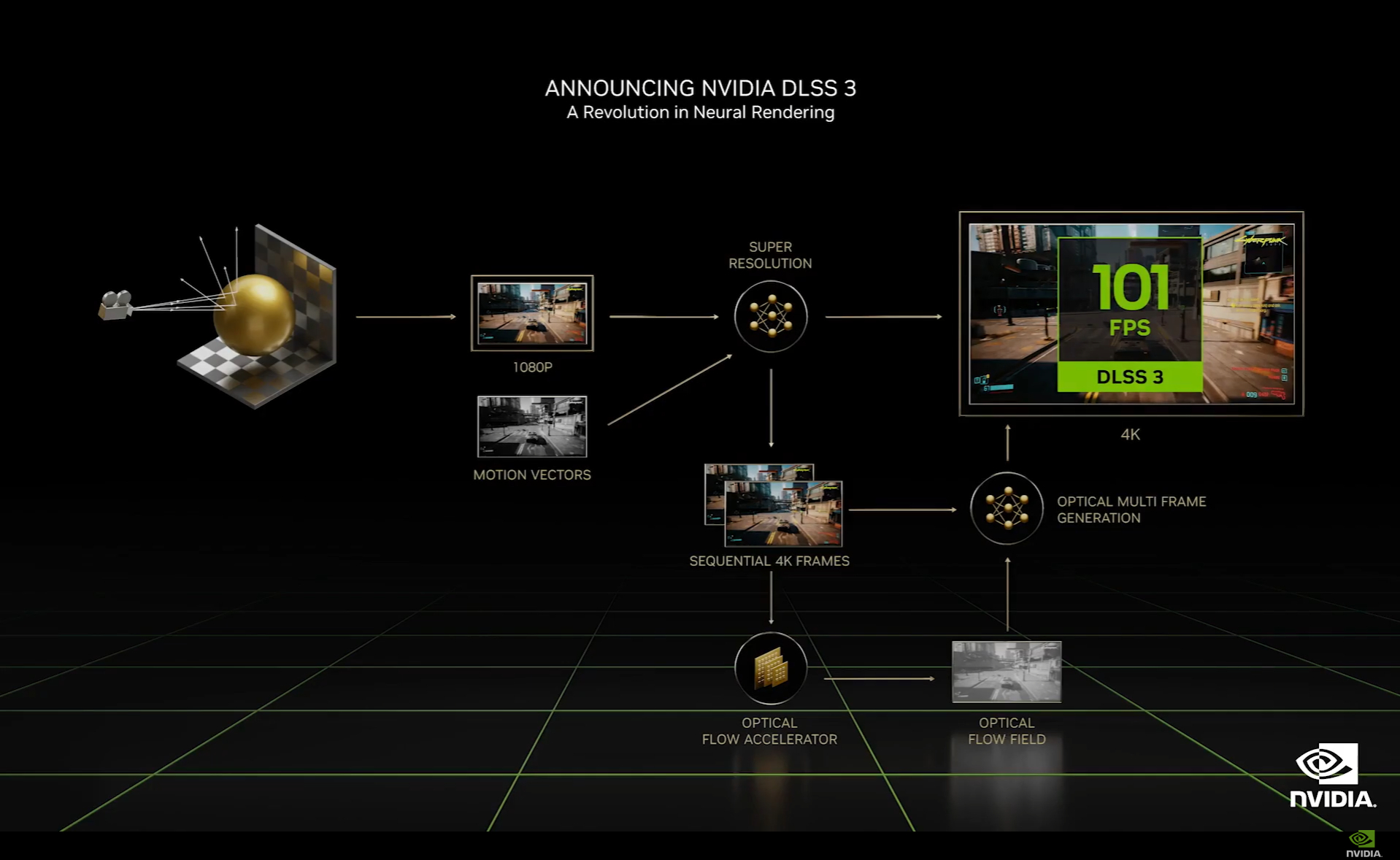

DLSS 3 announcement. Changes the fundamental algorithm to offer even better visuals and performance. Also, Cyberpunk 2077 demonstration using the new DLSS 3 with a maximum RT mode.

Microsoft Flight Simulator is also getting RTX enhancements. Given it's CPU limited right now at "ultra" settings, we're curious what else is changing. Is this just DLSS, or is it ray tracing as well? Maybe it's just engine optimizations, or running on a faster CPU, but our results normally top out at around 90 fps.

Portal RTX is also coming, created using Omniverse. This is probably as close as we get to Portal 3, right Valve?

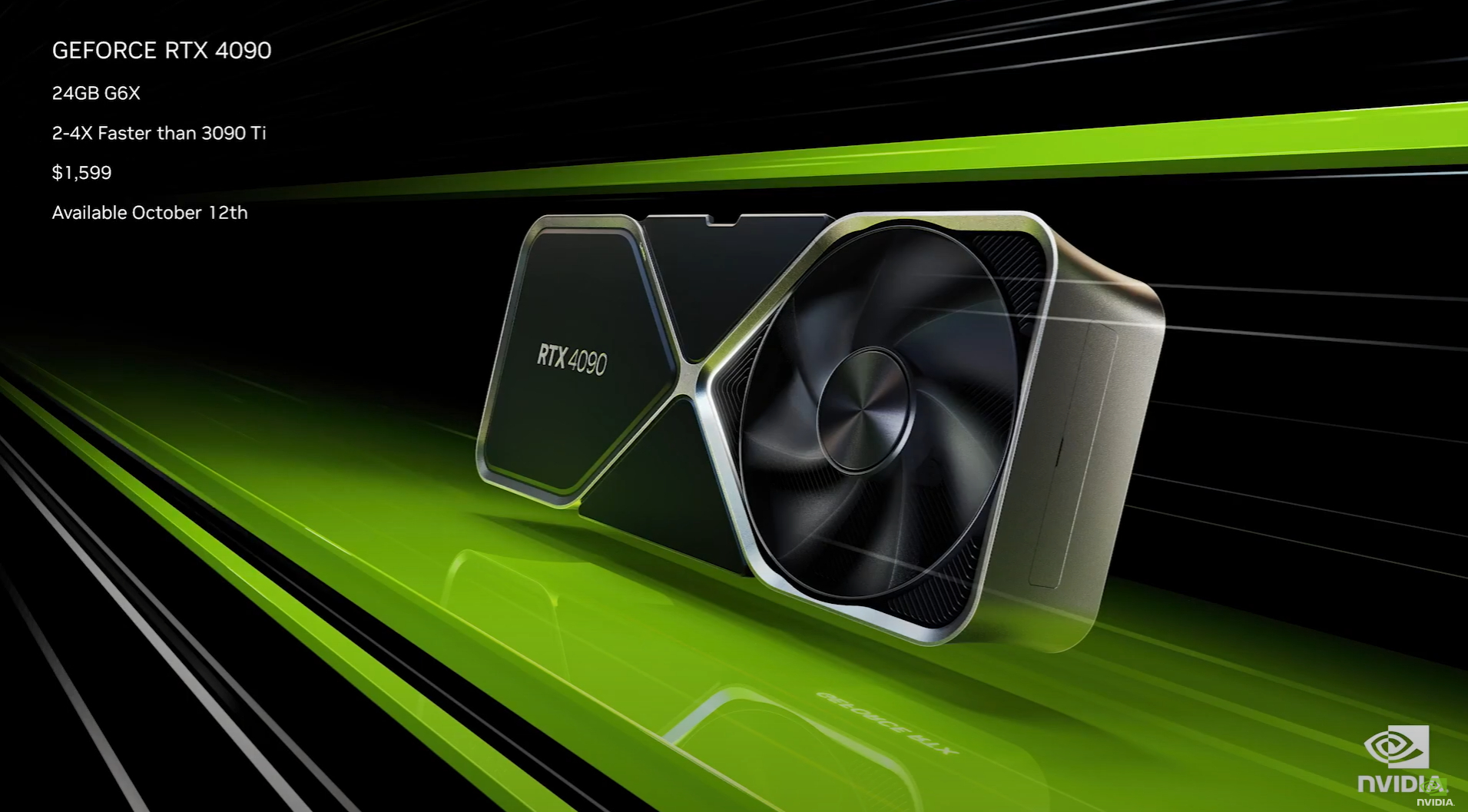

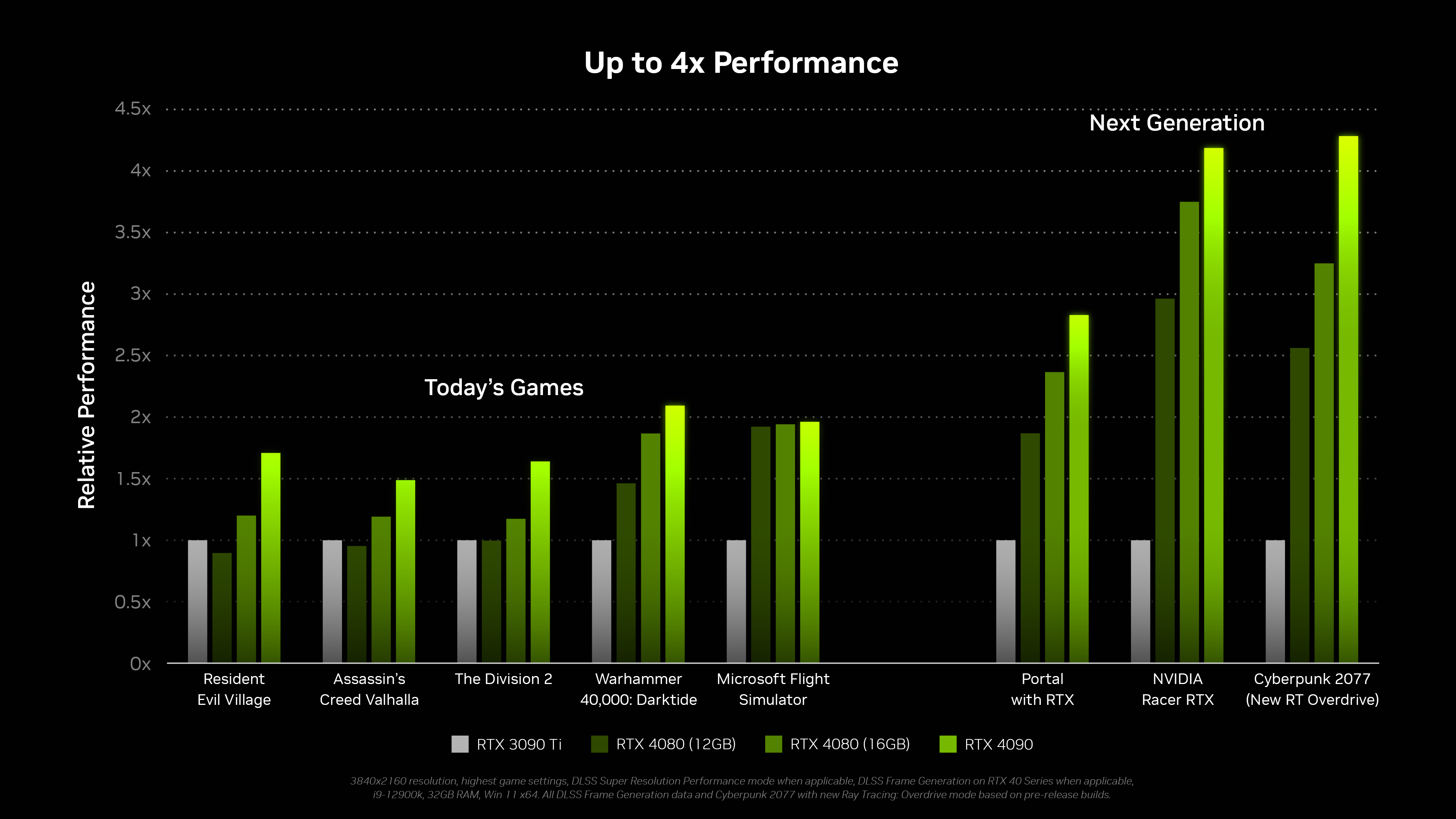

GeForce RTX 4090 price and specs revealed: $1,599, available on October 12. That's lower than some were expecting at least. Nvidia claims 2x-4x performance increase over the RTX 3090 Ti, which will also kill off sales of high-end Ampere.

Nvidia also revealed other RTX 40-series GPUs. RTX 4080 starting at $899, no word on exact specs yet. Those 30-series GPUs are going to look pretty pale now, though we don't expect any "budget" replacements any time soon.

It looks like that's it for the Ada and RTX 40-series announcements. There's a lot to digest still, but the performance gains are super impressive sounding. Architectural enhancements that could more than double the performance of the RTX 3090 Ti will usher in a new level of gaming visuals. RTX 4080 is up to three times faster than RTX 3080 in RacerX, though obviously that's using DLSS and extreme RT effects.

Another big announcement is that Nvidia has "overclocked Ada to over 3GHz in our labs." We may not see a bunch of consumer models hitting those clocks, but certainly we expect to see high 2GHz range cards in the near future. That alone should boost performance by 50% over the Ampere line.

That takes care of GeForce, and now the keynote will shift to the other GTC staples, starting with Omniverse.

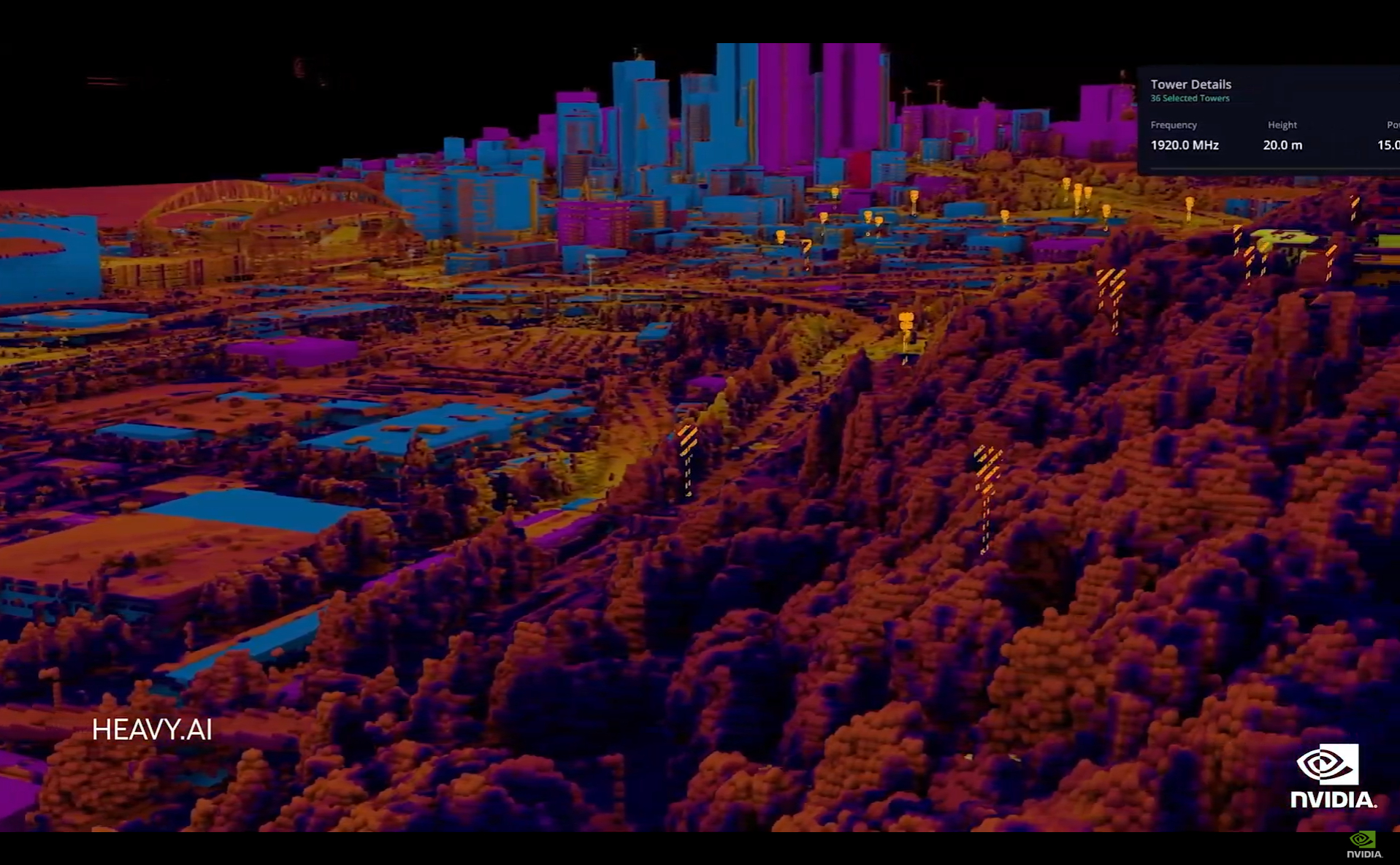

Heavy.AI doing some impressive stuff with Nvidia's technology.

Lowe's is getting in on AR! Okay, it's not Palmer Luckey wearing an Oculus, but Lowe's will leverage digital twins of warehouses to improve efficiency and other aspects of its operations.

Nvidia has posted a bunch of details on the RTX 40-series at its site. Among other tidbits, here are nine different AIB partner cards for the RTX 4090. You'll note that EVGA is not among them.

Going back to the GeForce stuff, because there's a bunch of additional information, the rumors of two completely different RTX 4080 cards are now confirmed.

The faster GeForce RTX 4080 16GB will have 9,728 CUDA Cores, 780 Tensor-TFLOPs, 113 RT-TFLOPs, 49 Shader-TFLOPs of power, and GDDR6X memory. That makes it twice as fast as the GeForce RTX 3080 Ti, and Nvidia says it will use nearly 10% less power. Prices on the 4080 16GB start at $1,199.

The GeForce RTX 4080 12GB in contrast will have 7,680 CUDA Cores, 639 Tensor-TFLOPs, 92 RT-TFLOPs, 40 Shader-TFLOPs, and GDDR6X memory. Nvidia says it will still be faster than the GeForce RTX 3090 Ti. This is the cheaper RTX 4080 with prices starting at $899 — though Nvidia hasn't specifically mentioned the release date yet. Probably later in October or early November.
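To put the gap between the two RTX 4080 models in perspective, here's a quick sketch that compares them using only the CUDA core counts, Shader-TFLOPs, and starting prices quoted above. The `Card` class and helper names are ours for illustration, and paper specs are no substitute for real benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    cuda_cores: int
    shader_tflops: float
    price_usd: int


# Figures as quoted by Nvidia at the announcement.
rtx_4080_16gb = Card("RTX 4080 16GB", 9_728, 49, 1_199)
rtx_4080_12gb = Card("RTX 4080 12GB", 7_680, 40, 899)


def dollars_per_tflop(card: Card) -> float:
    """Starting price divided by quoted shader teraflops."""
    return card.price_usd / card.shader_tflops


def advantage_pct(a: float, b: float) -> float:
    """Percentage by which a exceeds b."""
    return (a / b - 1) * 100


for card in (rtx_4080_16gb, rtx_4080_12gb):
    print(f"{card.name}: ${dollars_per_tflop(card):.2f} per shader TFLOP")

big, small = rtx_4080_16gb, rtx_4080_12gb
print(f"CUDA cores:    +{advantage_pct(big.cuda_cores, small.cuda_cores):.1f}%")
print(f"Shader TFLOPs: +{advantage_pct(big.shader_tflops, small.shader_tflops):.1f}%")
print(f"Price:         +{advantage_pct(big.price_usd, small.price_usd):.1f}%")
```

On paper, the 16GB card offers roughly 27% more CUDA cores and 22.5% more shader teraflops for about 33% more money, so the 12GB model looks like the slightly better value per teraflop, at least before memory capacity and bandwidth enter the picture.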

It's worth noting that this is as far down as Nvidia is going right now, which makes sense as there are reportedly tons of high-end RTX 3080 and 3090 series GPUs still in the channel, waiting to be sold. Obviously, if RTX 4080 12GB still trumps the RTX 3090 Ti in performance, that puts a ceiling of around $799 on what people will likely be willing to pay for an RTX 3090 Ti now.

Got frames? Nvidia is now pushing beyond 300 fps at 1440p with the RTX 4090 in competitive shooters. Does anyone make 360Hz 1440p displays yet? If not, you can bet they're now in the works, since we'll have hardware that might actually be able to drive them at that rate.

GeForce RTX 4080 and RTX 4090 performance, courtesy of Nvidia. Obviously, RacerX that uses all the latest tech will run better on the new cards, and that's where the "4X" speedup comes from. Still, there are some significant performance gains on traditional games as well.

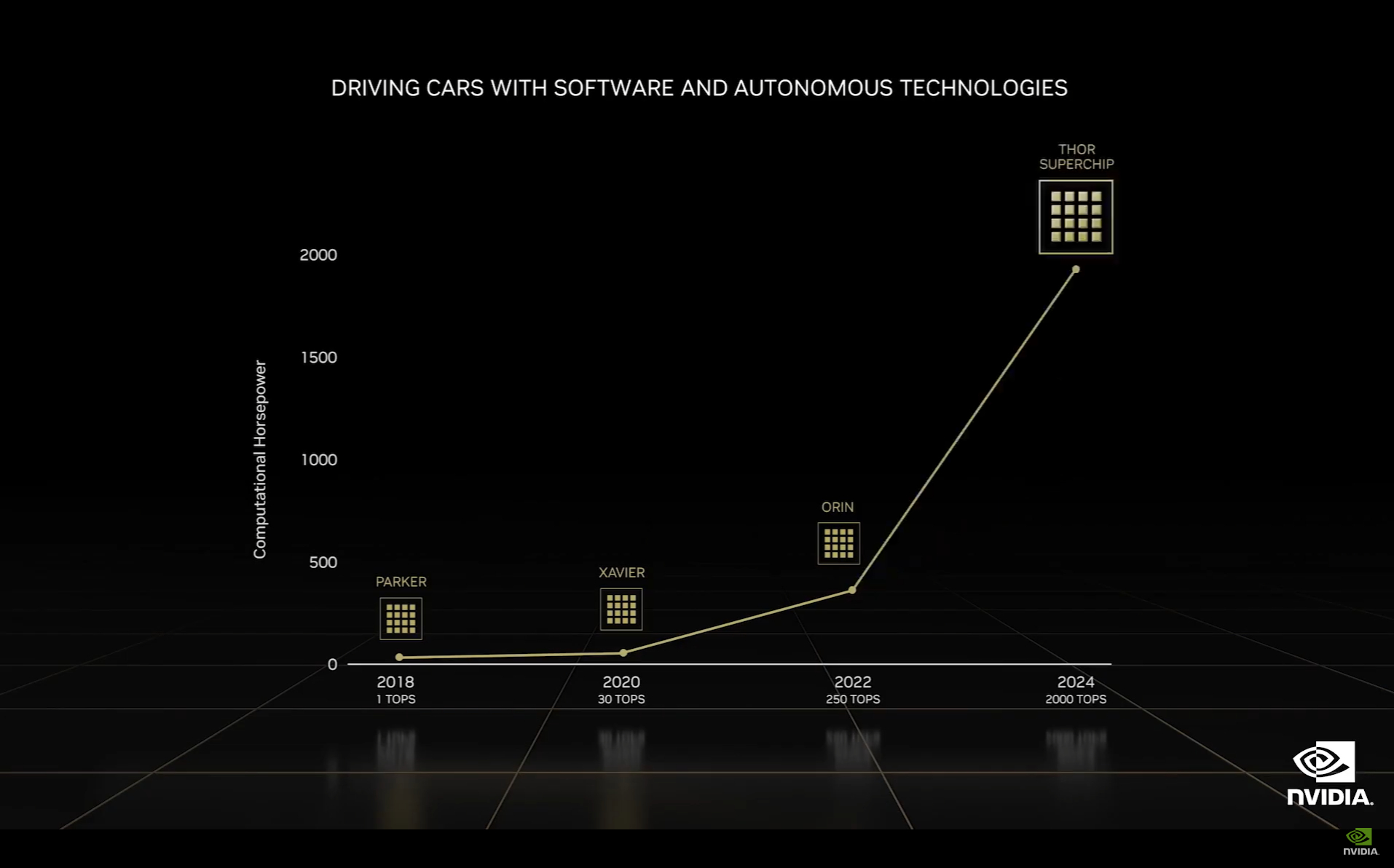

Back to the keynote now, though if you're like me you're probably a lot more interested in the GeForce stuff that was already discussed. Jensen is talking about autonomous cars and the enhancements coming to them. Current Drive hardware offers up to 250 teraops (FP8 or INT8) on Drive Orin. Drive Atlan bumped that to 1000 teraops, and Drive Thor will come in 2024 with 2000 FP8 teraops.

Drive Thor will leverage Grace Hopper superchips, so Nvidia CPUs and GPUs. Higher compute should give it better capabilities and let it deal with unexpected stuff better.

For all Nvidia's talk about autonomous vehicles, it's interesting to put that division into perspective. From the latest earnings report: "Nvidia's automotive segment had its best quarter yet with revenue of $220 million, up 59% from Q1. In fact, auto was the company's only segment that grew during the quarter (with the exception of data center, up 1%)."

That's a far cry from the data center and gaming markets, but Nvidia obviously hopes this will continue to grow in the coming years. It says it expects the automotive growth to continue thanks to its $11 billion auto design win pipeline.

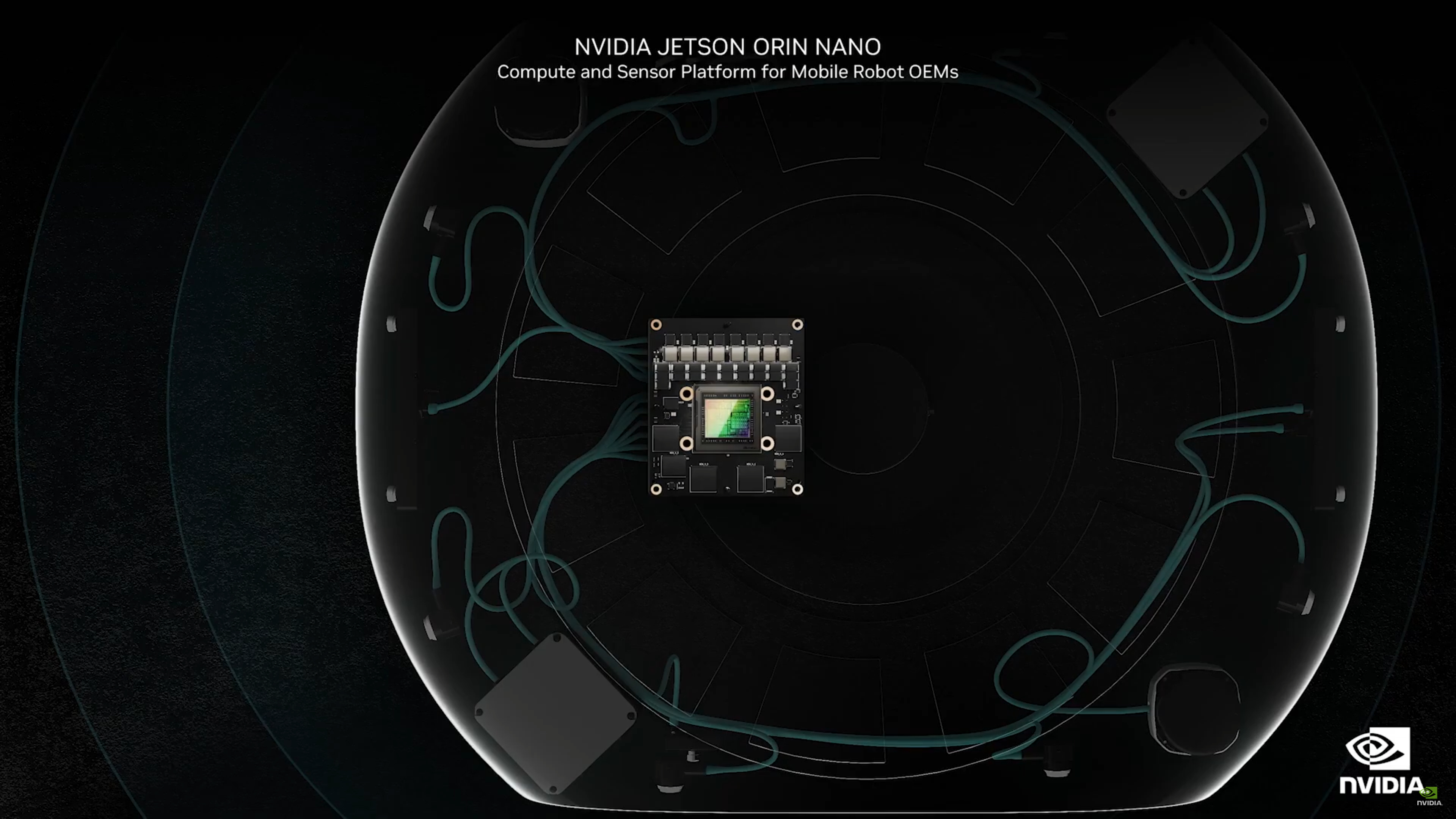

Jetson Orin Nano for edge computing applications and robotics has now been announced.

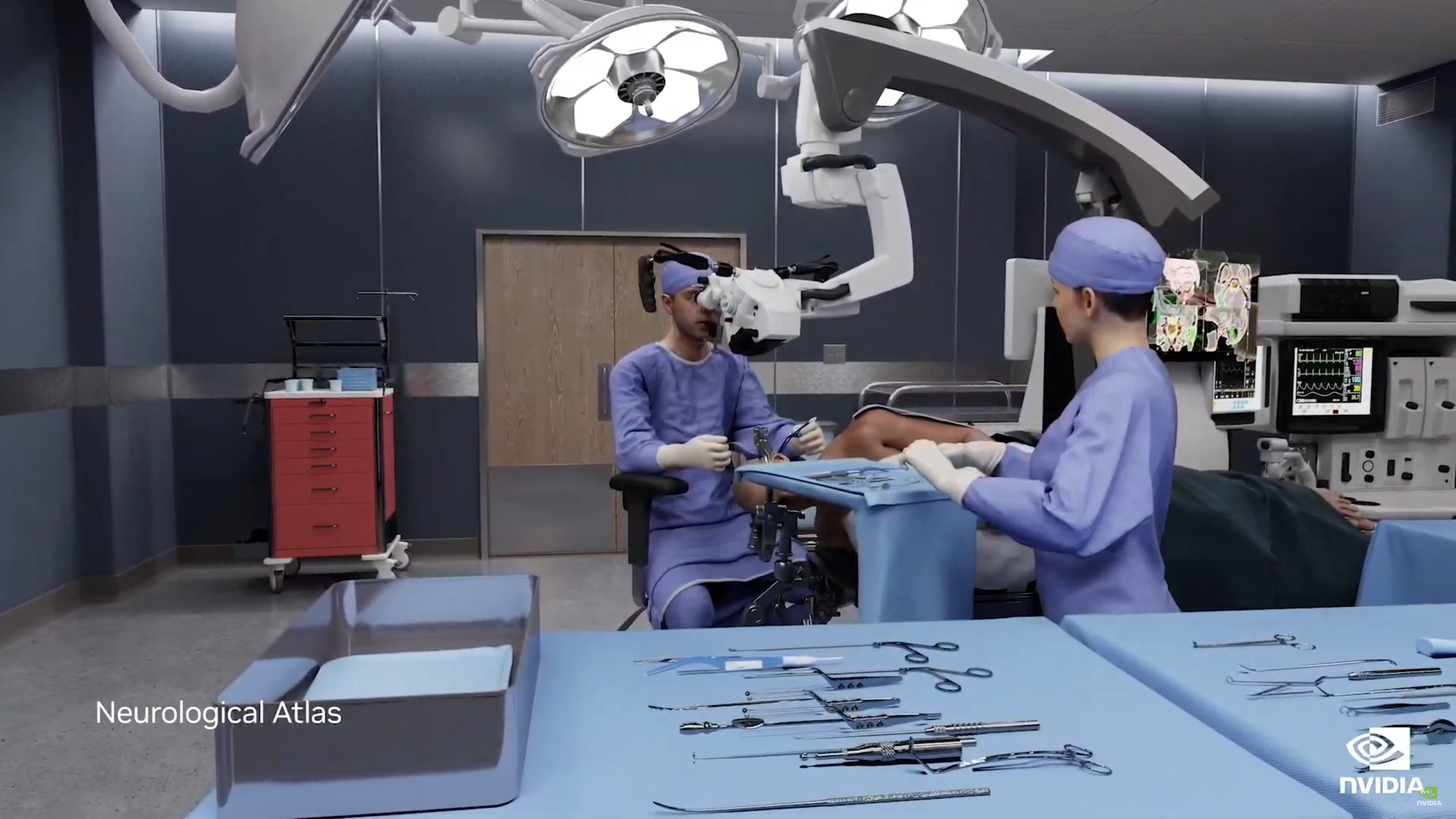

Clara Holoscan getting into the medical business, also using the Orin platform.

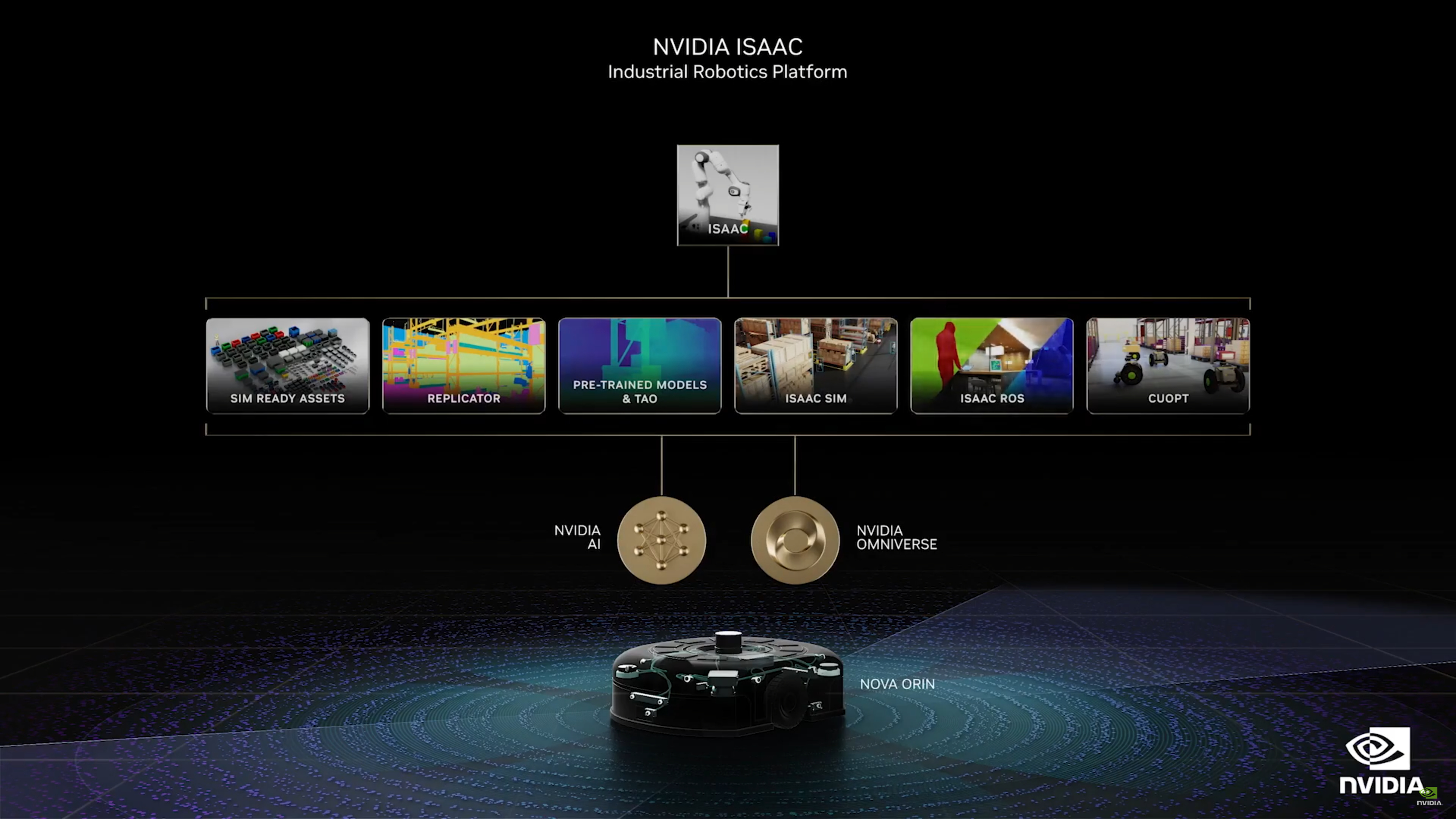

More robotics, now discussing Isaac Sim, which runs in the cloud to help with virtual training of actual robots.

Jensen talks about accelerated computing across the product stack, allowing for massive speedups relative to Moore's Law.

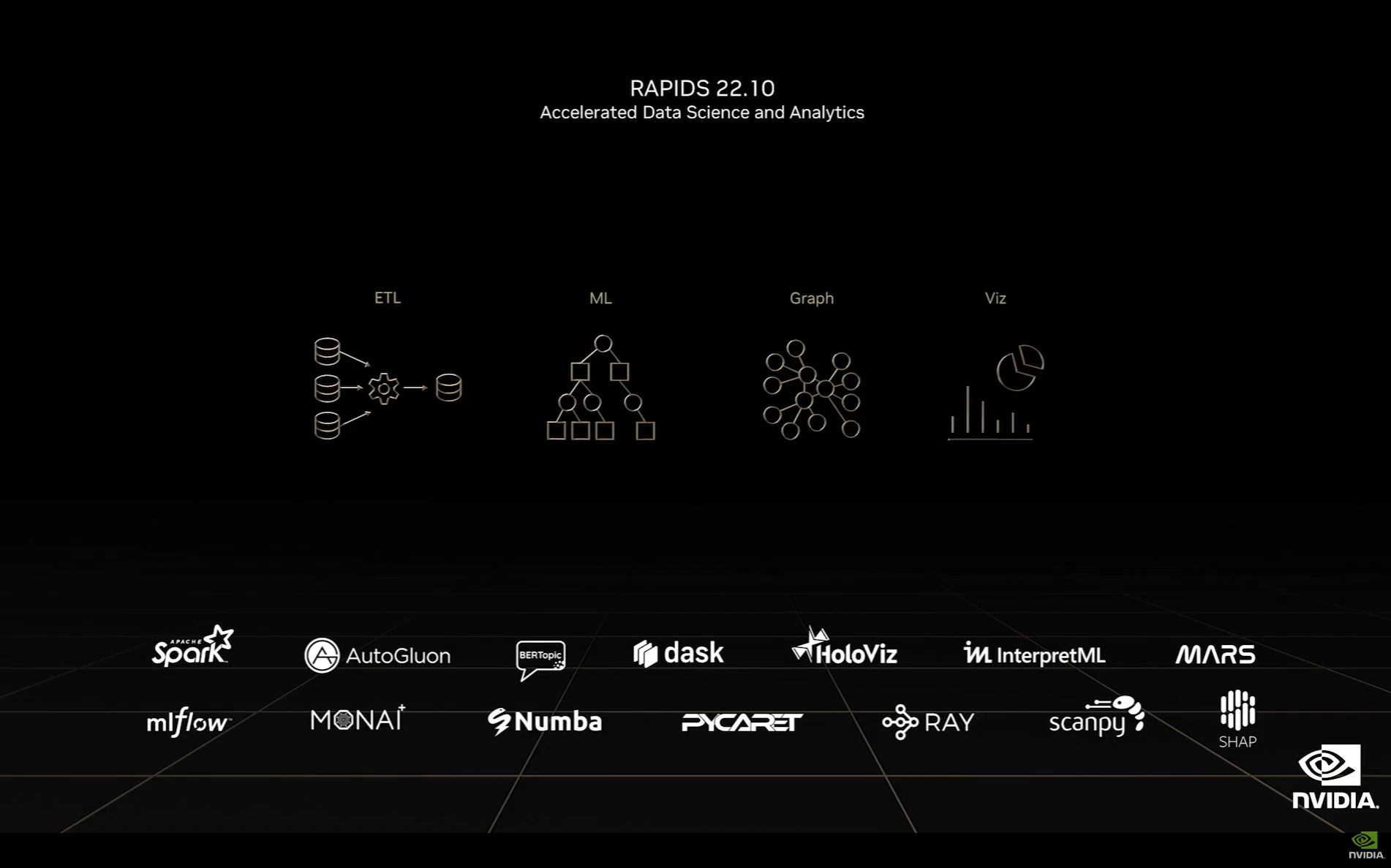

Jensen is now talking about RAPIDS, Nvidia's open framework for data analytics. Lots of companies using this, plug-ins for various software packages.

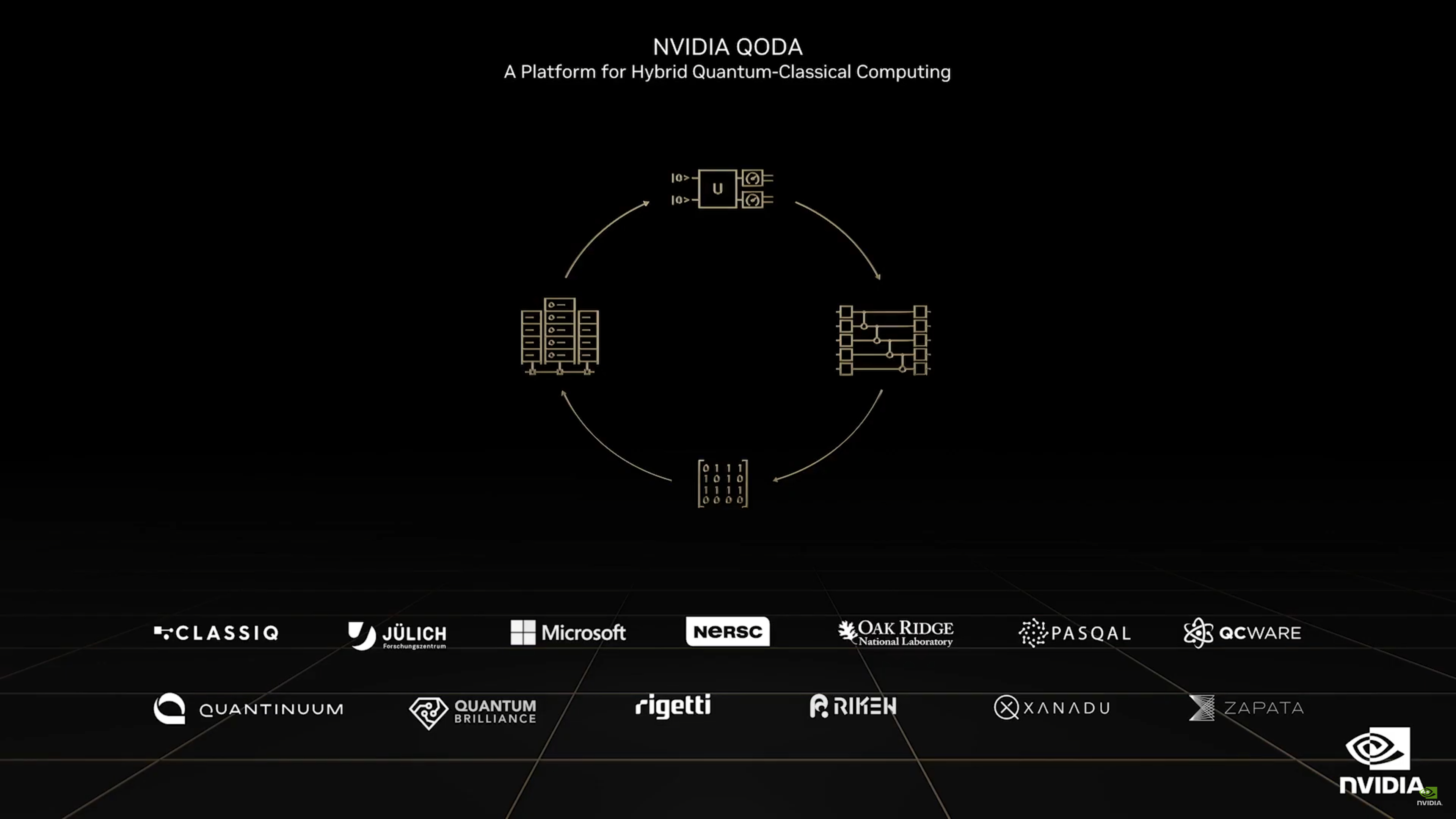

One area of intense research is quantum computing. Nvidia is taking a hybrid approach that it calls CuQuantum, for CUDA Quantum computing. It believes actual quantum computing will exist in parallel with classical computing methods.

Nvidia JAX running on Nvidia AI. There are a lot of announcements and discussions going on that we're not really digging into. Also, we're having some problems with the live blog updating. Sorry about that!

[Insert your own gratuitous Transformers reference here.]

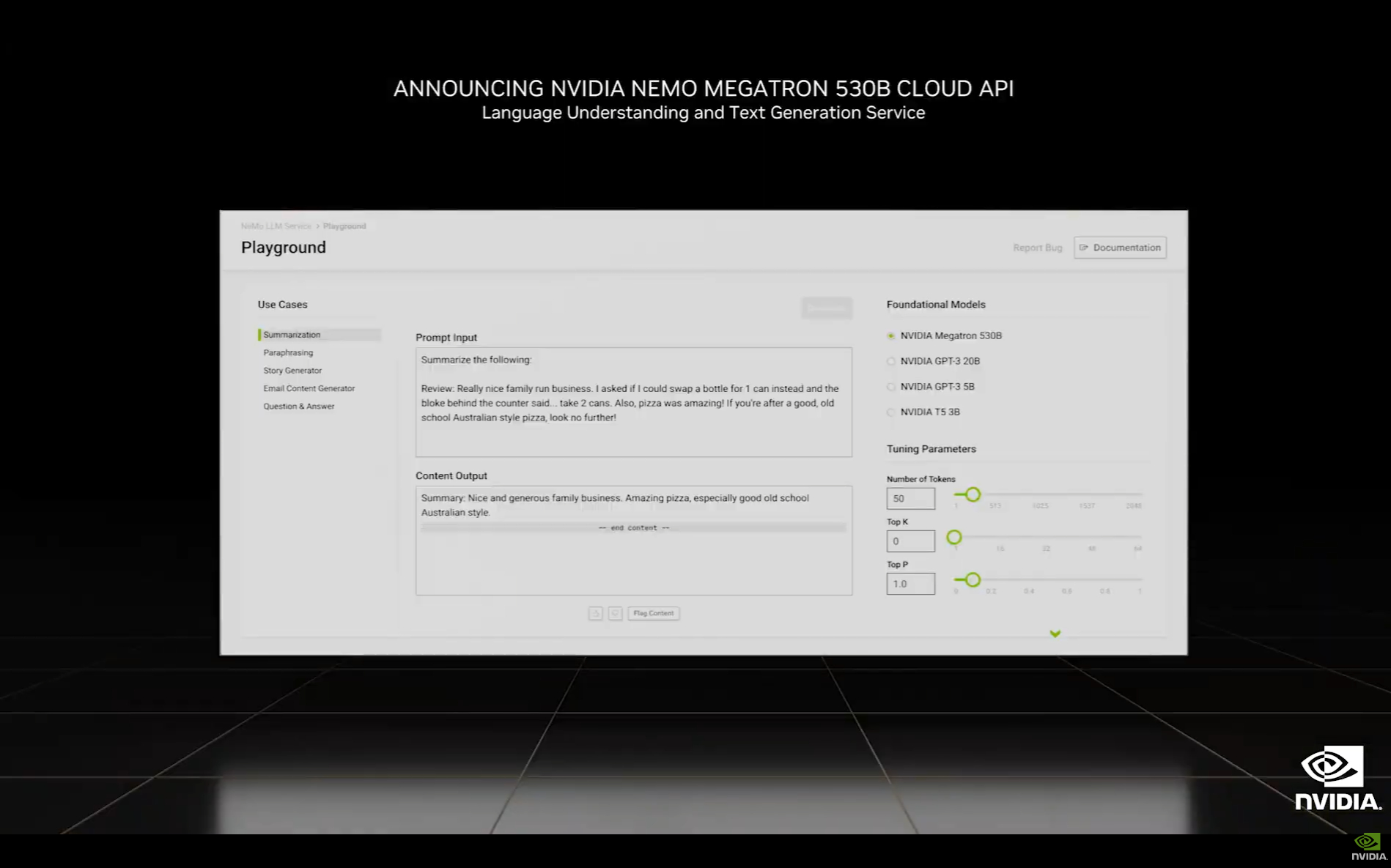

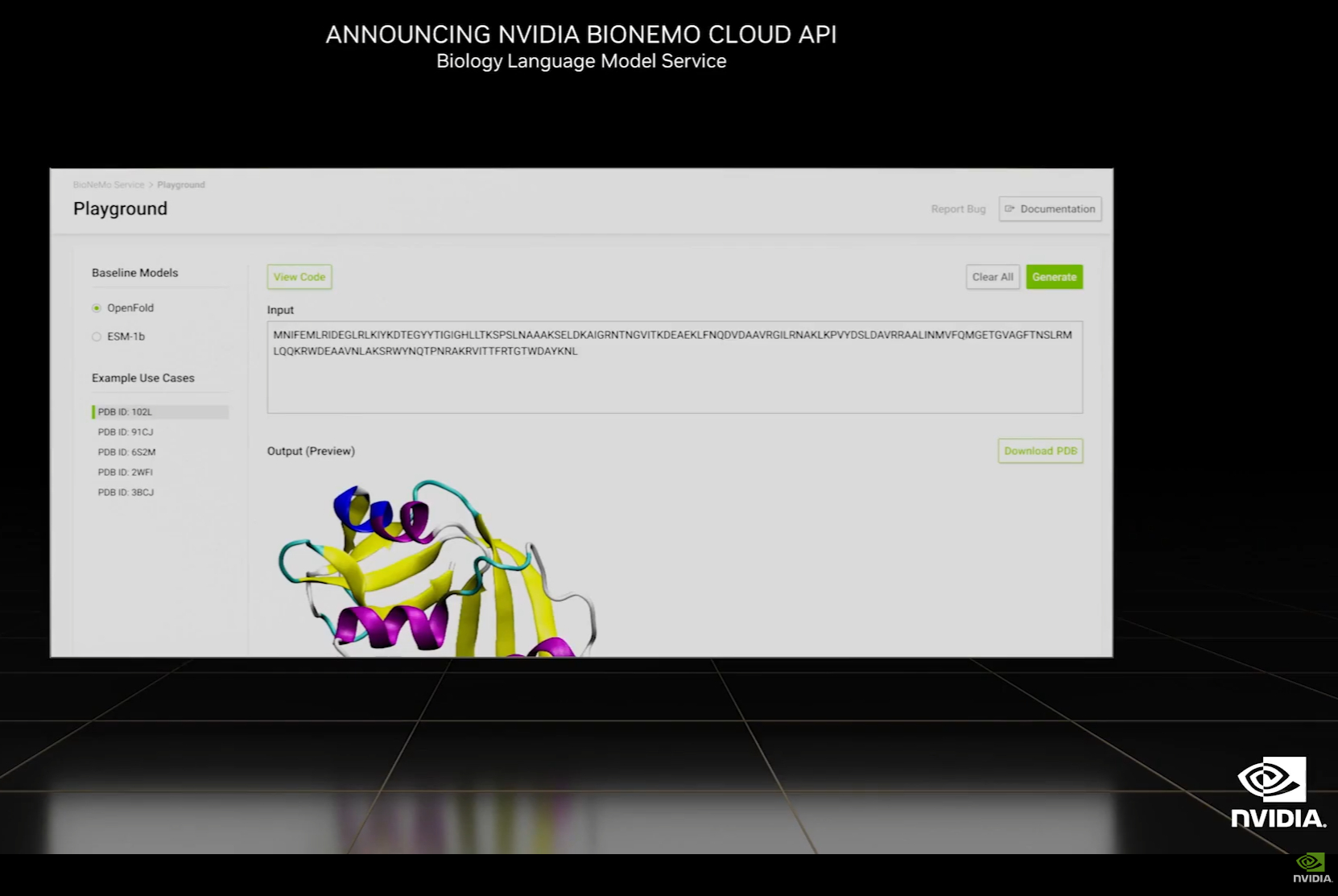

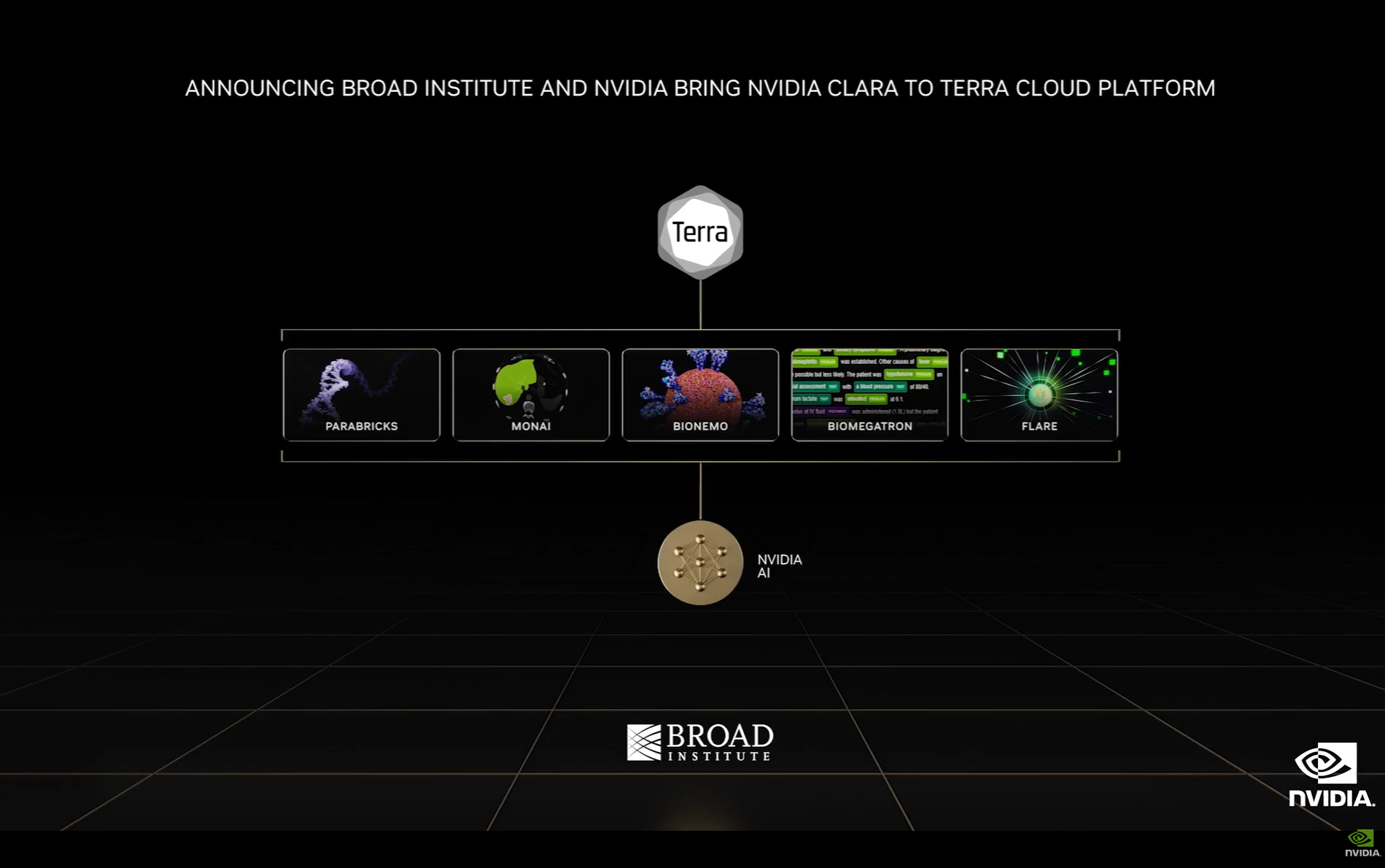

Nvidia is applying Large Language Models (LLMs) to drug discovery, working with chemicals, proteins, and DNA/RNA. These will be accessible via the BioNemo Cloud API.

GATK, Genome Analysis Toolkit, can speed up genomic analysis from 24 hours to less than an hour.

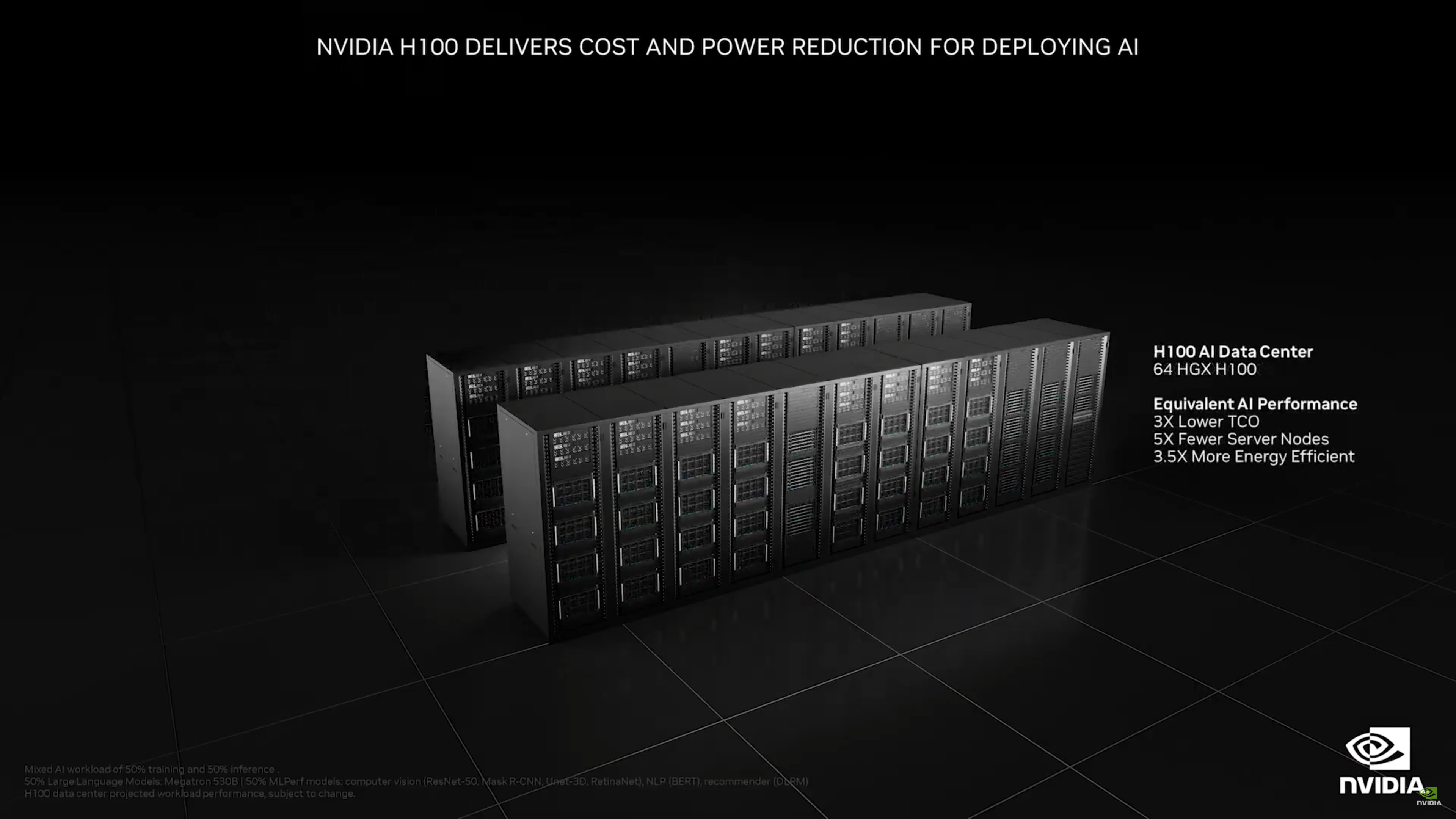

Talking about Hopper H100 with its new transformer engine (to dynamically choose FP8 and FP16 as needed). Larger models can go from a month of training to just one week. In some cases, H100 is five times faster than A100. "Hours instead of days, minutes instead of hours."

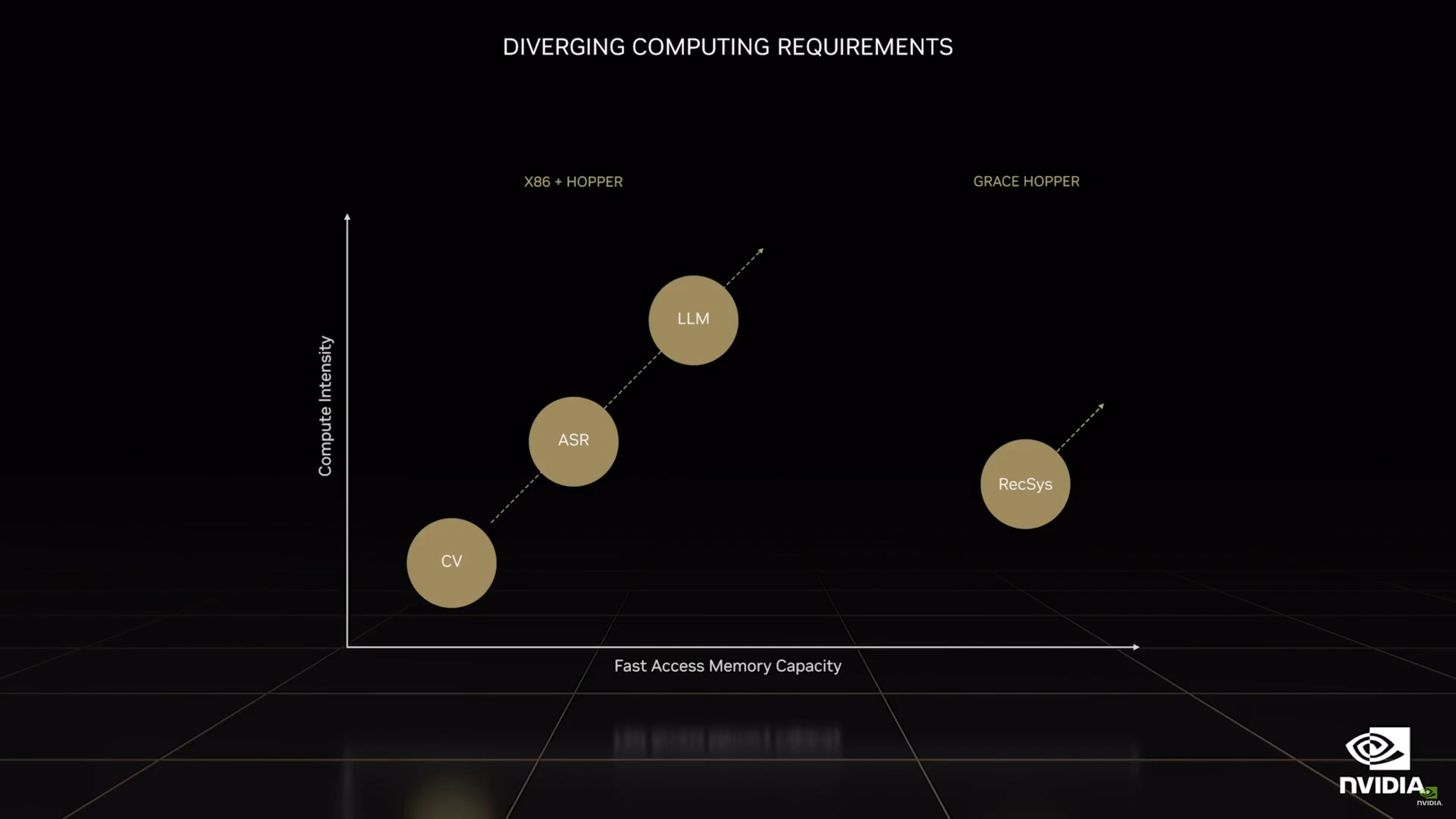

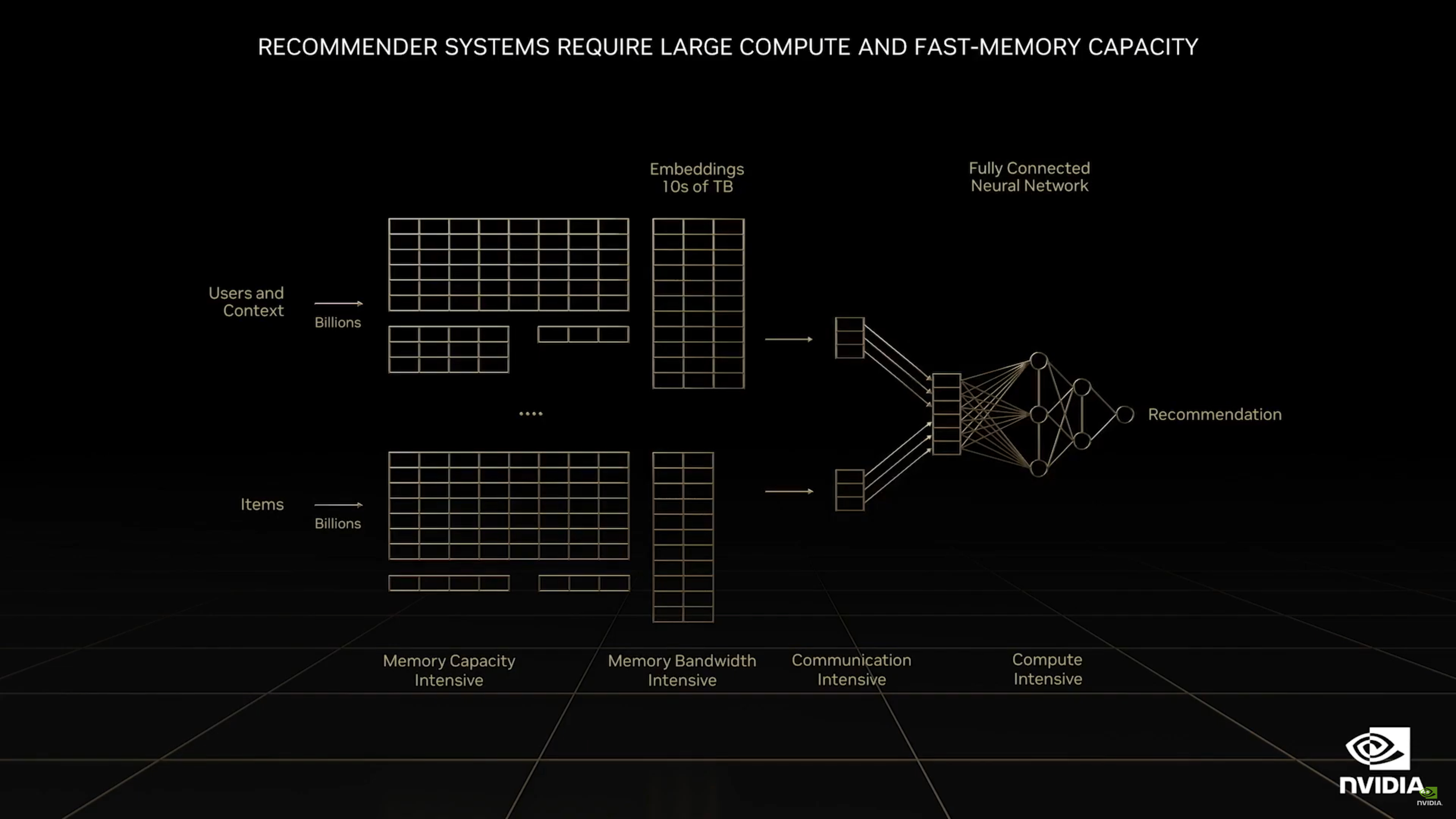

Discussing the differences between large language models and recommender systems.

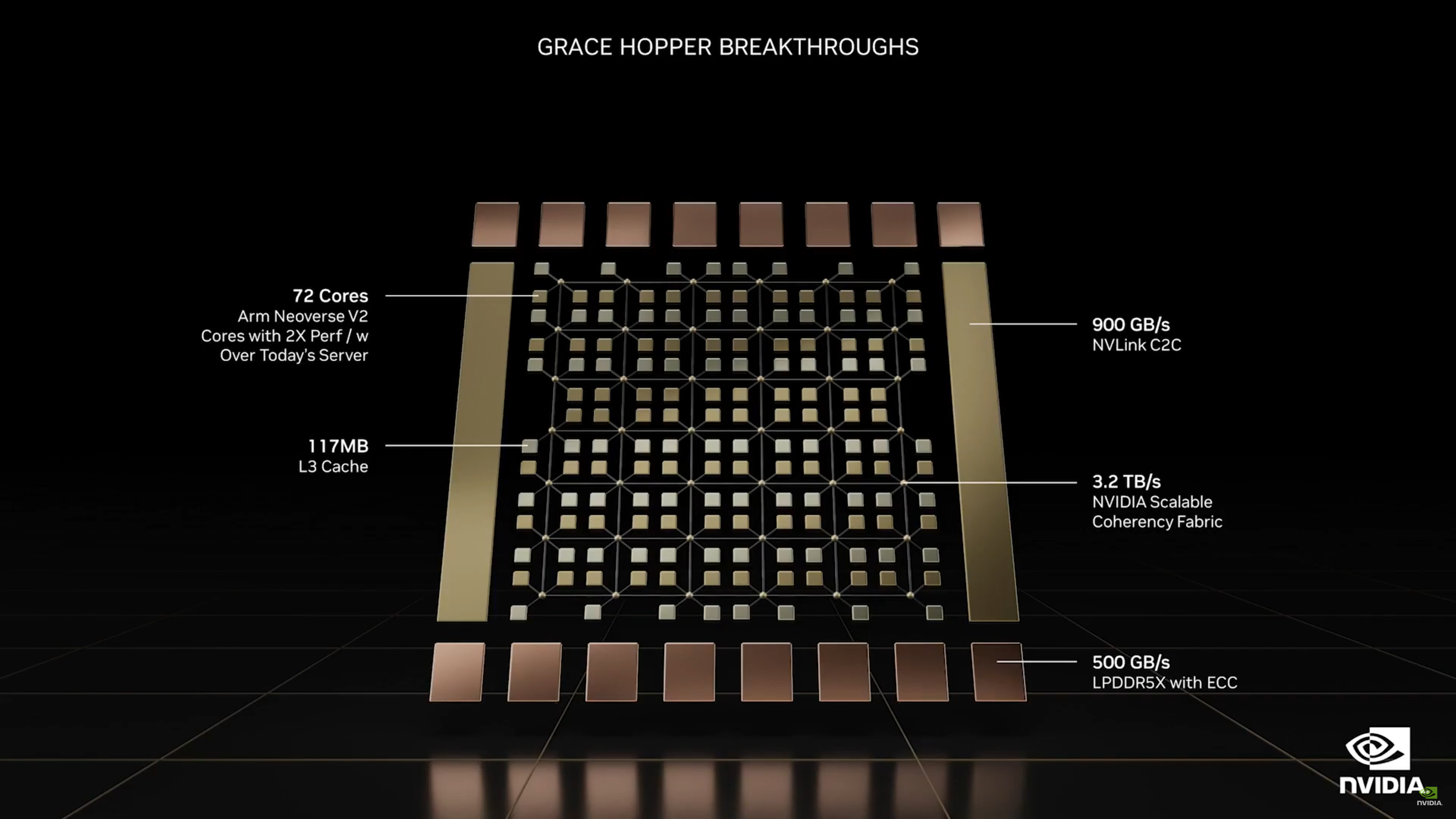

Now we're going more into the Grace Hopper superchip. Nothing really new here, but Grace Hopper should start to become publicly available in quantity in the near future.

"Systems will be available in the first half of 2023."

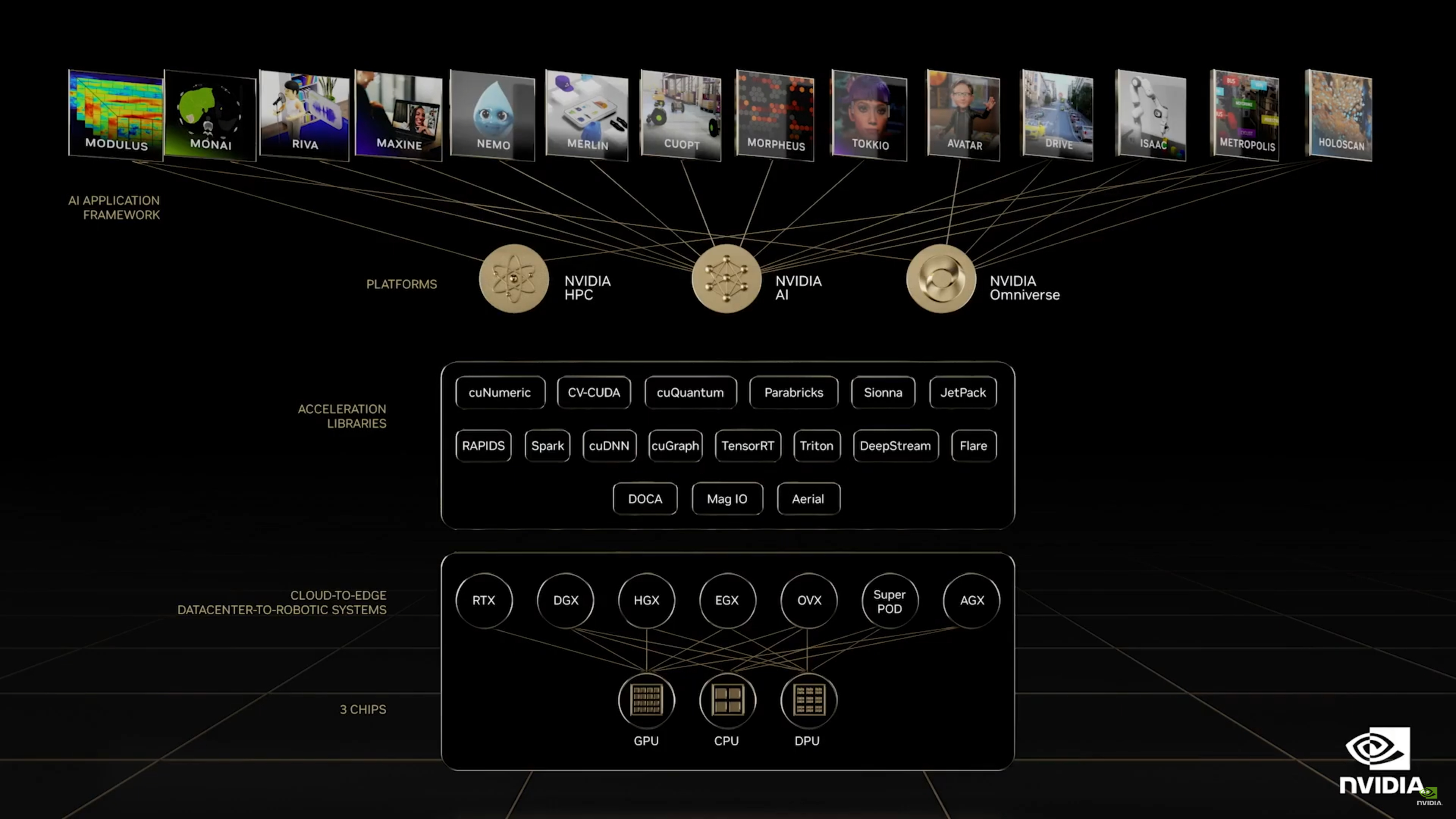

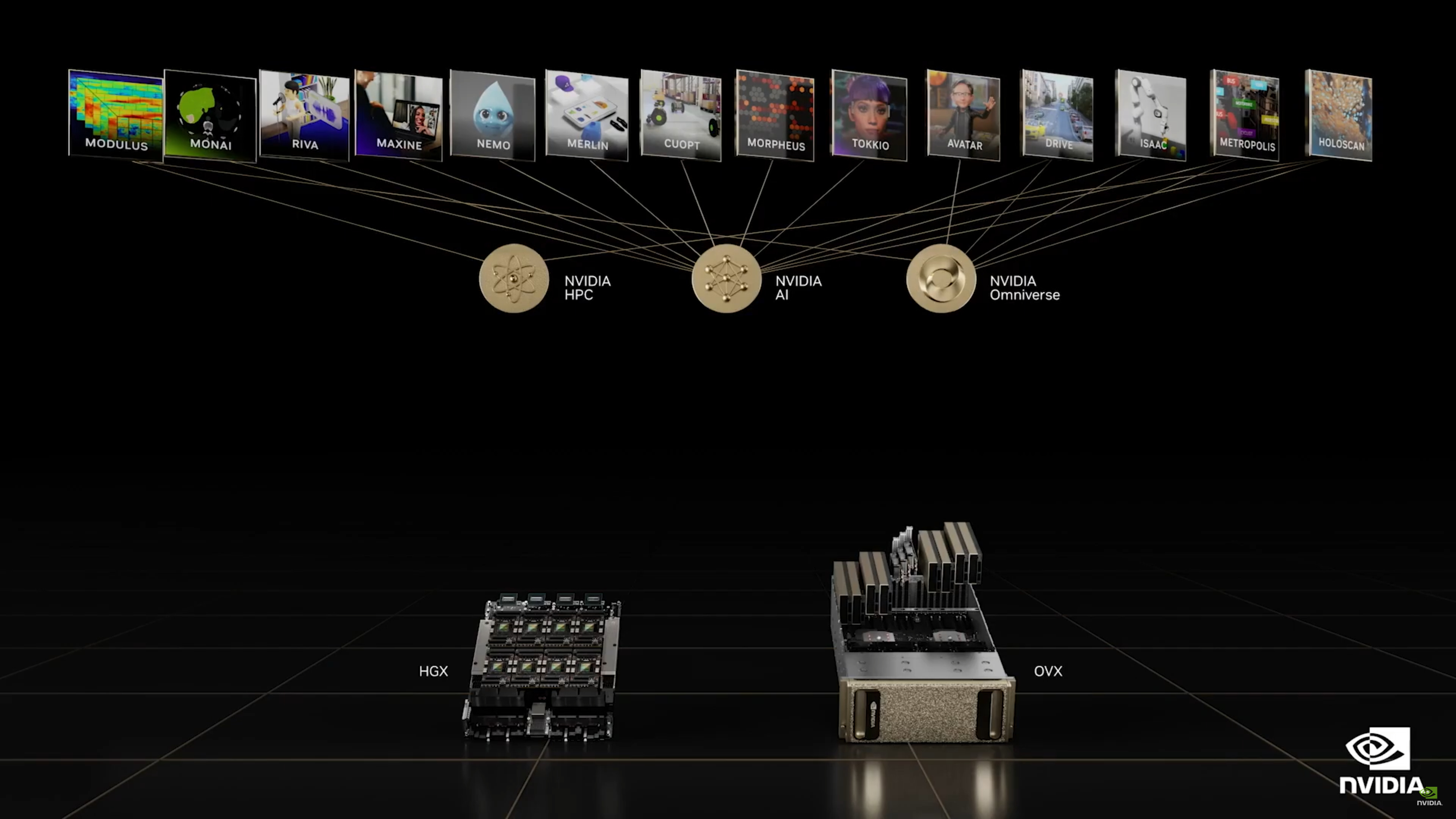

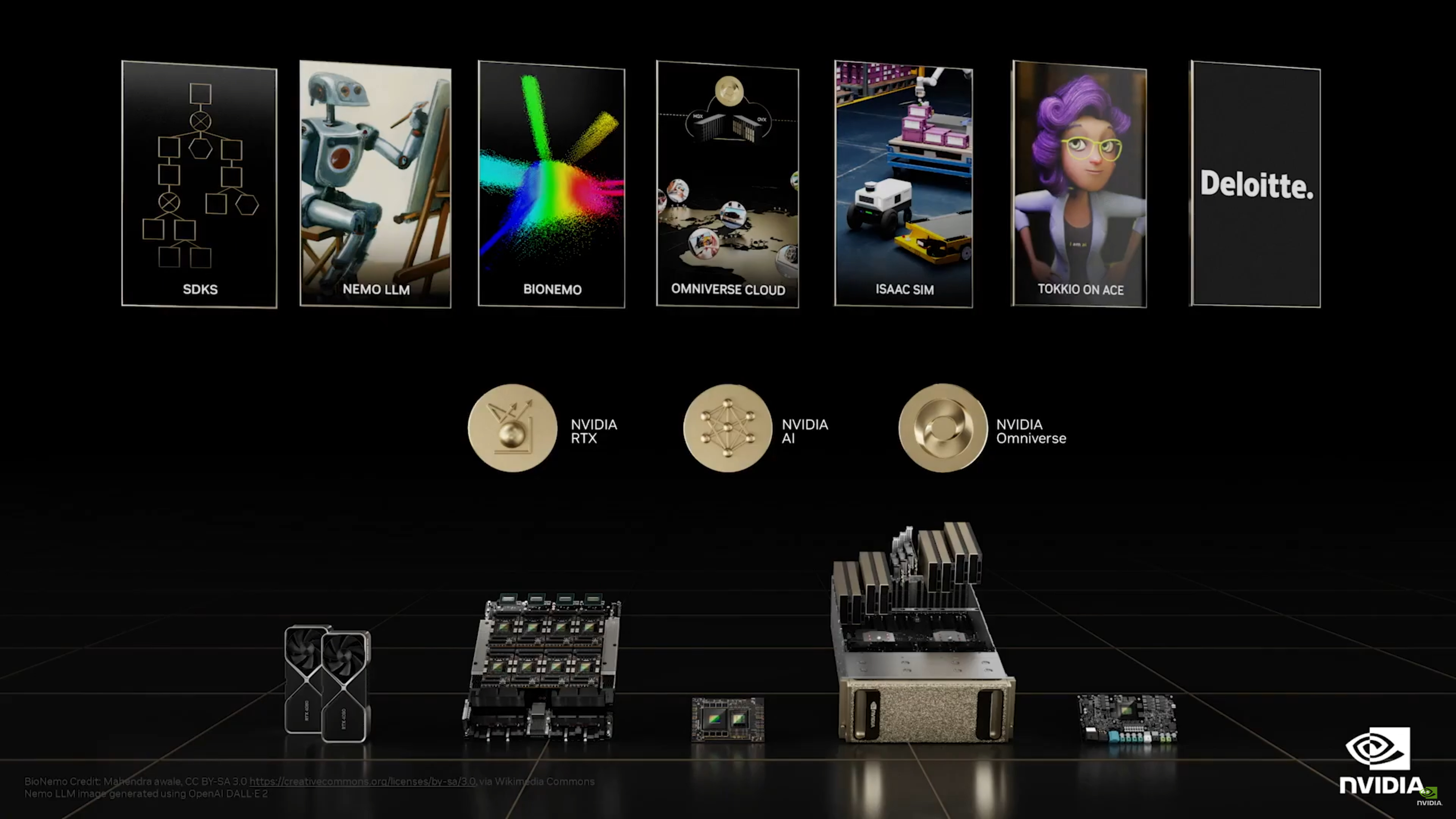

Jensen is discussing Domain Specific Frameworks, APIs developed for specific AI workloads. There are a bunch of different frameworks, as you can see in the above image.

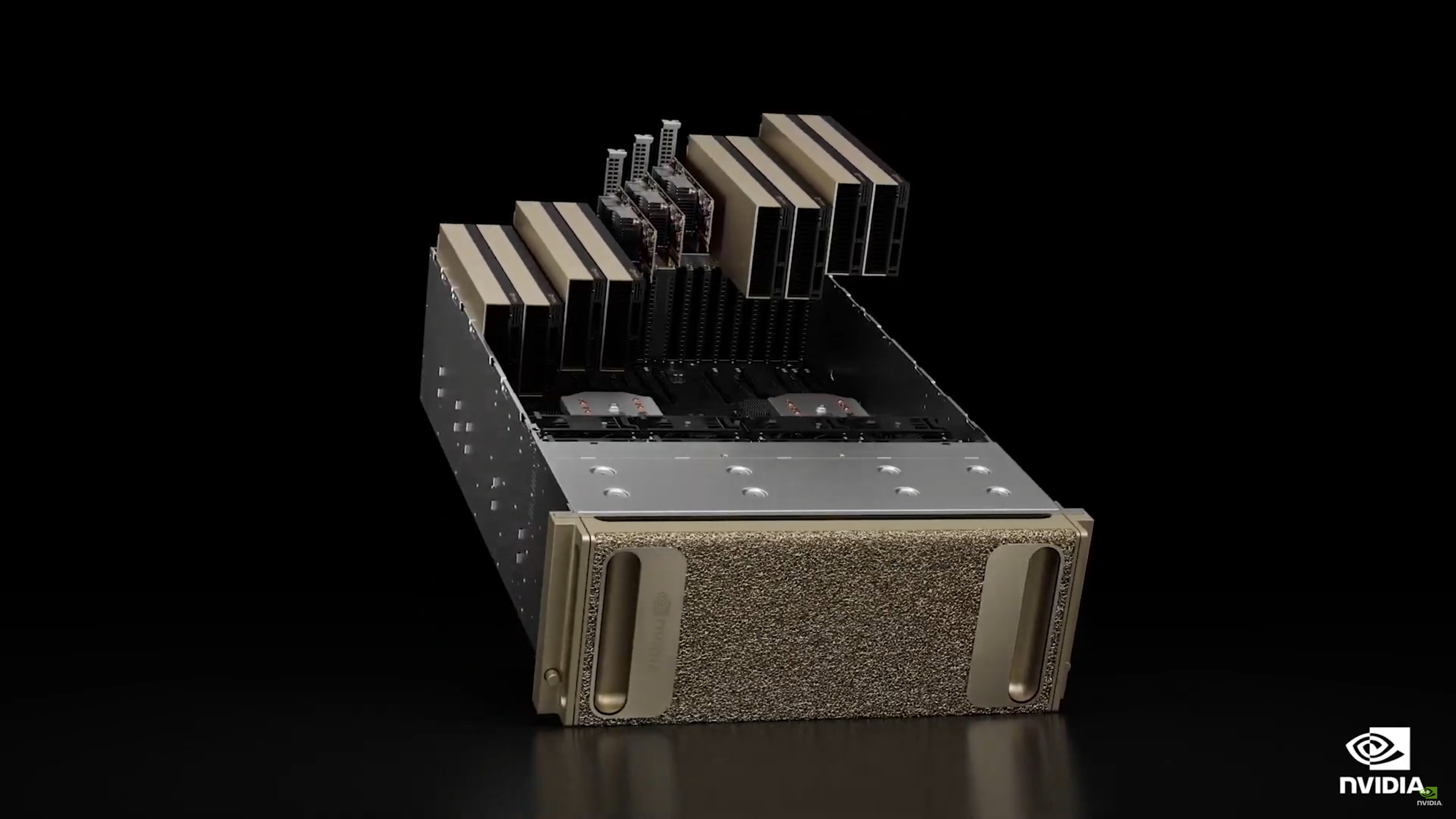

Nvidia L40 OVX servers, sporting up to eight GPUs.

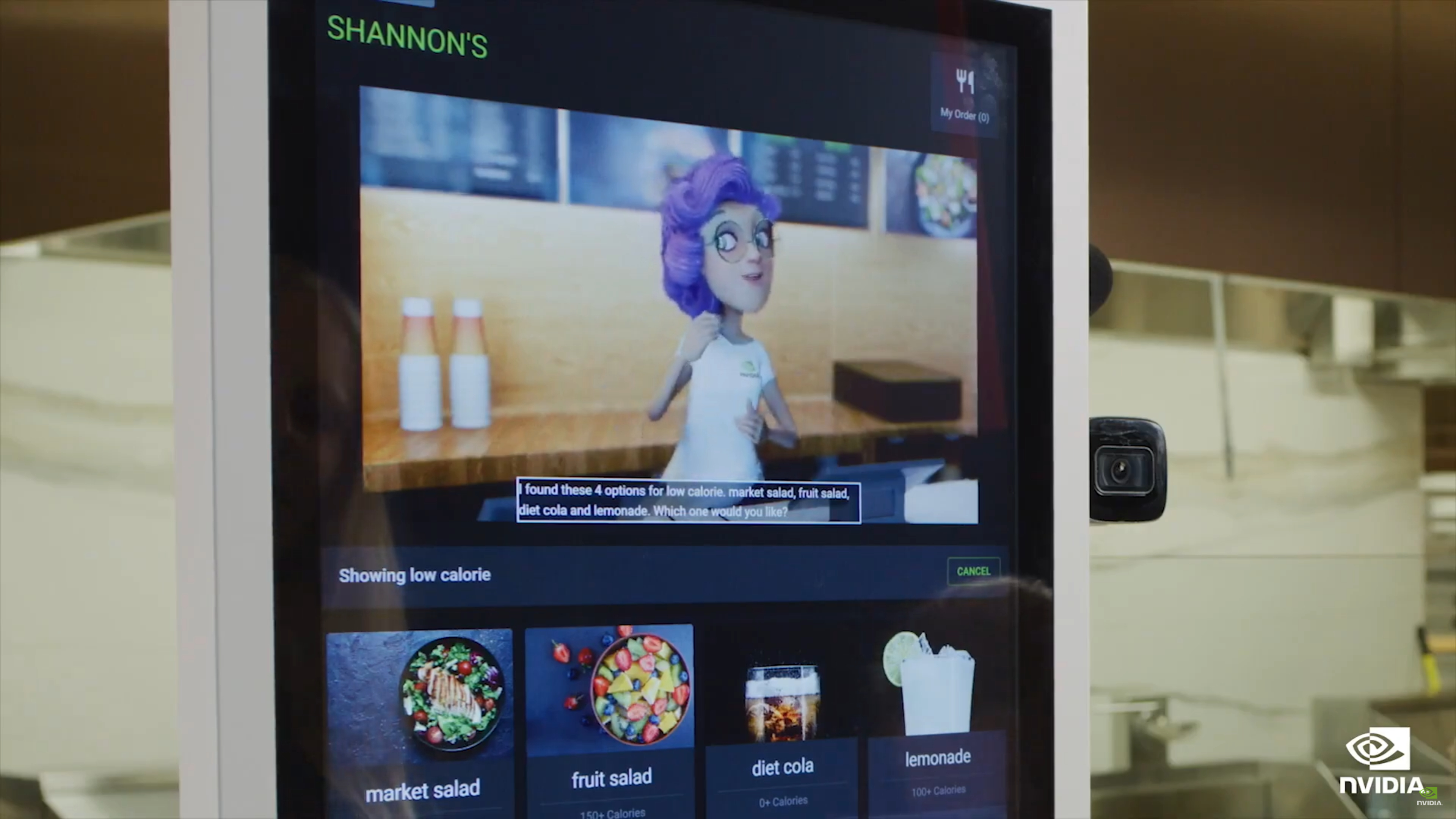

Nvidia Interactive Avatars, coming to all your favorite storefronts in the near future! Open a web page, get an avatar harassing you within seconds. I can't wait.

Seriously, it now takes me four times as long to order at a fast food place when I have to go through their ordering screen or app rather than just telling the employee what I want. I'm sure it cuts down on the company costs, but not on my costs or time.

Hi, I'm Ultraviolet. Like my hair!

Closing remarks now, Jensen is recapping all the announcements of the day. Ada, Thor, Hopper, etc. There was a lot to digest, particularly for those that aren't just waiting to hear about the next generation consumer graphics cards.

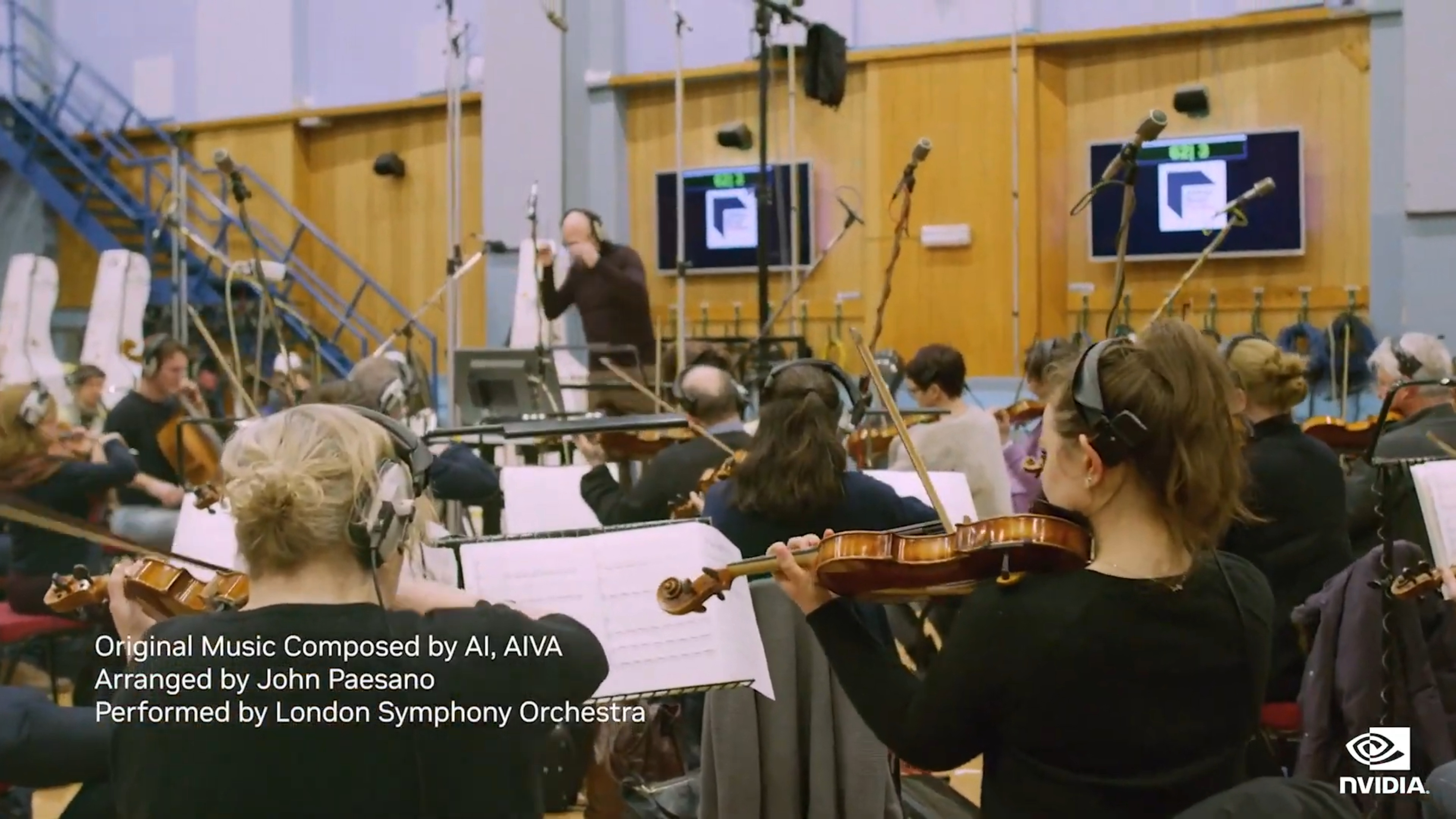

Thanks for tuning in, sorry about any technical problems. As usual, we're wrapping up with the "I AM AI" video from Nvidia, updated slightly since the last time we saw it six months ago. We'll have a full breakdown on the RTX 40-series announcement up shortly.

If AMD can get HIP competitive with Optix in Blender, then I'm all for switching from green to red. That's my only problem.

People paid more for GPUs when locked in their homes, when no PS5/Xbox consoles were available, and the government was sending out checks.

Now the new console generation is available and far cheaper. A massive glut of new 3050-90 cards is sitting in warehouses, and an even bigger glut of used high-end mining GPUs is going to be dumped on the market. Oh, and we are probably going into a recession and there aren't any new killer games driving the need for an upgrade.