From Avatar to Light Fields: 3D’s Making a Comeback

There’s a new 3D technology making its way from labs and universities to consumer products: light fields.

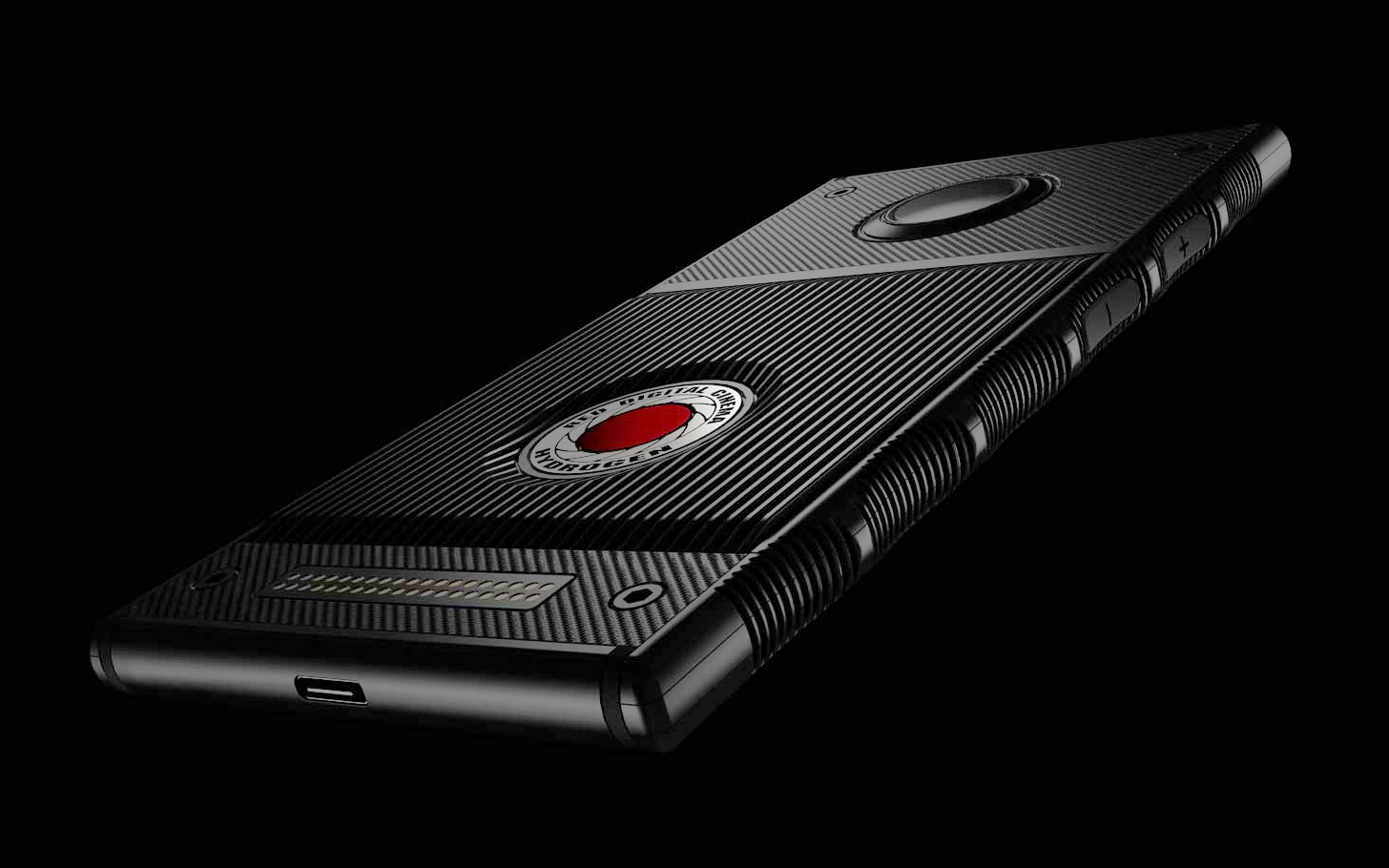

This summer, we’re going to see the first consumer light field device available in the form of the RED Hydrogen One phone. Its display can go from light field mode to standard 2D mode on command, and it will enable a wide enough field of view to allow you to tilt your head around.

But before 3D got to this point, it faced its share of craze, problems and, eventually, solutions.

Almost a decade ago, James Cameron released the mega-blockbuster Avatar. Back in 2009, this was fairly revolutionary 3D in that it looked decent compared to previous eyesores like the film Beowulf, released two years prior. With Avatar making nearly $3 billion at the box office, people were convinced the age of 3D had arrived. It seemed every movie had to have 3D shoved into it, 3D TVs were set to be the next big thing, Nintendo released the 3DS and so on.

Then, almost as quickly as it hit, the 3D craze collapsed.

In order to understand light fields, we’ll first cover how 3D typically works today, what went wrong in the past and how some of those problems were subsequently resolved.

What Went Wrong, and Right, With 3D

The first problem involves 3D glasses. To present a different, slightly offset view to each of your eyes, 3D movies and most 3D TVs of the time required special 3D glasses. The most common were polarized glasses. Light has a property called polarization, which at the quantum level is loosely described as a "spin." Most 3D glasses block light with a given direction of spin. So if the image meant for your right eye is "spin up," then the lens for your right eye blocks "spin down" light, while the lens for your left eye lets spin down light through and blocks the spin up light meant for your right eye. This is a fairly cheap trick to get each of two overlapping images to go into only one eye, thus producing 3D.

However, only half the total light of the image goes into each eye, making the image half as bright as it might be. Complaints of 3D movies looking "too dark" were widespread in the early days of 3D. Today, however, projectors and other screens can get a lot brighter, alleviating this problem.

Another solution to this issue is to just not use glasses at all. The Nintendo 3DS has a “parallax barrier solution,” which isn’t dissimilar in technology to how light fields work. This tech doubles the pixels you see width-wise and projects a set of images into each eye separately, no glasses required.

Today’s ever-increasing pixel density at the very least makes this solution viable. Cameron wants Avatar 2 (and beyond) to be projected in glasses-less 3D if possible; however, there’s no word on whether this sort of tech will be available in movie theaters by release.

Plus, parallax barrier technology has problems of its own. The user has to be sitting in the correct “magic zone”, or area where the two views meet the user’s two eyes, to actually experience the 3D. Move out of it, and the effect is lost.

The solution to this problem was introduced to the average consumer with Nintendo’s New 3DS in 2015. The gaming device moves the “magic zone” around a bit as you move your head, thanks to some face tracking from a camera.

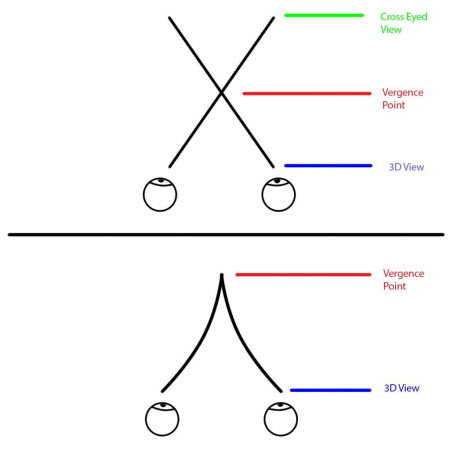

But yet another problem 3D has encountered was that only things at or before the "vergence" point, the point where the two images meet and converge into one, were truly in focus. If you tried to look anywhere beyond the vergence point while watching Avatar, you'd get a slightly headache-inducing cross-eyed view.

It was Disney that ultimately introduced into production the solution to the 3D vergence problem. If you’ve noticed Big Hero 6 and other Disney Animation 3D movies looking easier on the eyes, that’s no illusion. The idea being used is to “bend” the rays of light for each eye differently, such that they start out straight and begin to converge to a point close in, but then bend/curve towards infinity so that they never cross over and so present no cross-eyed view. This allows you to get a big 3D effect for the foreground while keeping the background fully in focus and easy to see.

Yet another problem with 3D - this one not solvable by light fields - is that it exacerbates frame rate flicker, also known as “judder.” Judder occurs with fast motion on almost any screen, causing a double image if you’re moving your eyes quickly enough. This is exacerbated in 3D; thus, the faster-moving and more action-heavy the scene, the more you need to reduce the 3D effect, at least if you’re doing things right.

To fix judder beyond just reducing 3D there are two solutions.

One is to increase the frame rate. The faster you flip through frames the harder it is to see a double image, as the previous image will be replaced too quickly and too little will move in it. This is what Peter Jackson was attempting to do with filming and displaying The Hobbit at 48fps. Unfortunately for Jackson, a lot of people didn’t like the “non-traditional” look of high frame rates.
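To put rough numbers on that, here is a minimal sketch of how far an object jumps between consecutive frames at the two frame rates. The object speed and the function name are assumptions picked purely for illustration; they don't come from any particular film or display.

```python
def pixels_moved_per_frame(speed_px_per_s: float, fps: float) -> float:
    """Distance an object travels across the screen between two consecutive frames."""
    return speed_px_per_s / fps


# Assumed for illustration: an object crossing a 1920-pixel-wide image in one second.
speed = 1920.0

for fps in (24, 48):
    jump = pixels_moved_per_frame(speed, fps)
    print(f"{fps} fps: the object jumps {jump:.0f} pixels between frames")
```

Doubling the frame rate halves the jump between frames, which is why the potential double image gets harder to notice.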

Interestingly, Cameron is adamant about giving high frame rates another try, and for VR, high frame rates are an inherent must.

The other solution to judder is in understanding that the more motion blur you have, the more the potential double image is blurred, making judder less of a problem. While The Hobbit was filmed at a fairly sharp 1/72nd of a second, its two sequels had CG motion blur added and have been perceived as being easier on the eyes as a result.

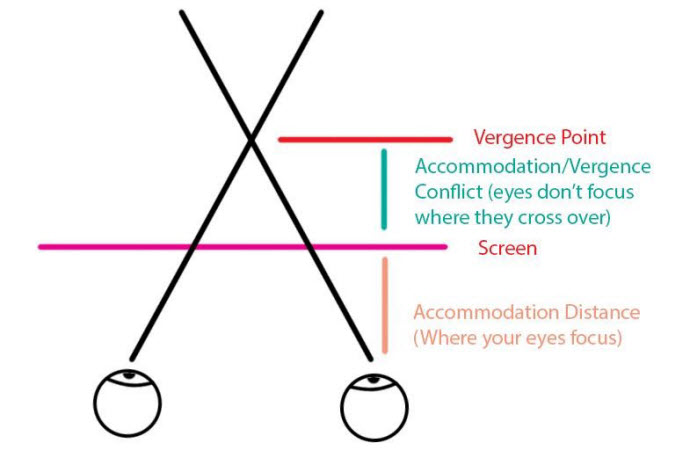

But there was one more major problem facing 3D. No matter what you did, the 3D vergence point at which your eyes crossed over and met would almost certainly be different from the point on which your eyes were focusing, or “accommodating”, to look at the screen itself.

Some people seem to handle this just fine, but many find it greatly hinders the effect of 3D, can be fatiguing to view and worsens things like nausea in VR.
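One rough way to picture that mismatch is to compare where the eyes are converging with where they are focusing. The sketch below uses simple geometry and assumes an eye separation of 63 mm and made-up viewing distances; the function names are illustrative, and this is not how any particular study or headset measures the conflict.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when both aim at a point
    straight ahead at distance_m. ipd_m is an assumed eye separation."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def focus_mismatch_diopters(screen_m: float, virtual_m: float) -> float:
    """Difference, in diopters (1 / meters), between where the eyes focus
    (the screen) and where they converge (the virtual object)."""
    return abs(1 / screen_m - 1 / virtual_m)


# Assumed scenario: a screen 2 m away showing an object that appears 0.5 m away.
screen, virtual = 2.0, 0.5
print(f"Eyes converge as if at {virtual} m: {vergence_angle_deg(virtual):.2f} degrees")
print(f"Eyes focus on the screen at {screen} m: {vergence_angle_deg(screen):.2f} degrees")
print(f"Mismatch: {focus_mismatch_diopters(screen, virtual)} diopters")
```

When the virtual object sits exactly at the screen's distance the mismatch is zero, and it grows the further the 3D effect pulls things in front of or behind the screen.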

Light fields can also fix some of 3D’s other problems already mentioned, but tackling this particular issue is where light fields truly shine.

Light Fields as Holograms (Almost)

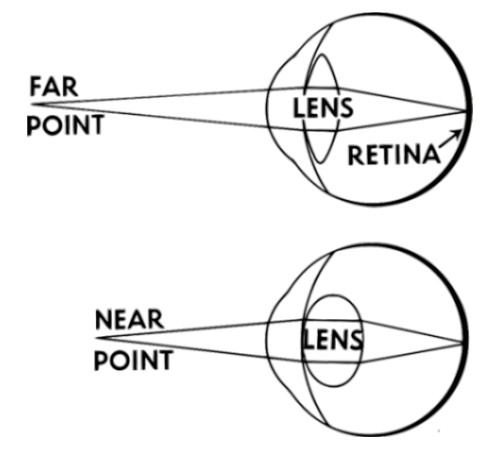

When you look at the world, what you see is a light field. Rays of light enter your eye at different angles, depending in part on how far or close they are to your eye. Your brain uses clues to guess how close or far each object in your vision is, then does its best to change the shape of your eye’s lens to focus on that part of the world.

When watching the normal world, this works great. But when watching a typical 3D screen, each eye gets a different angle, but otherwise the image is flat. You can only focus on the plane where the screen is.

But artificial light fields can fix this.

So how do artificial light fields work?

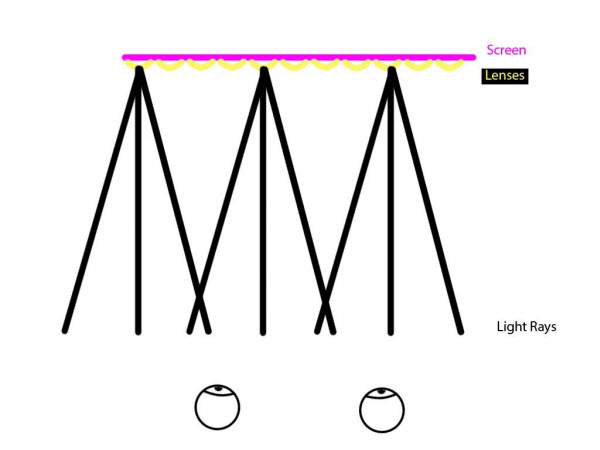

We’ll start with the gold standard for reproducing one, called a microlens array. This is literally an array, or sheet of tiny lenses put in front of a screen that each focus light slightly differently. Assuming your screen is displaying the correct image through each lens, the end result is a multitude of light rays at differing angles going into your eye, effectively recreating what we see in real life (sort of).
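As a rough illustration of how one tiny lens turns neighboring pixels into rays at different angles, here is a sketch that treats each lens as an ideal thin lens with the screen sitting at its focal distance. The pixel pitch, focal length and function name are assumed values chosen for the example, not the specifications of any real display.

```python
import math


def ray_angles_deg(pixels_per_lens: int, pixel_pitch_mm: float,
                   focal_length_mm: float) -> list[float]:
    """Outgoing ray angle for each pixel in the row under one lens.

    Idealized model (an assumption): a pixel offset from the lens center by
    some distance sends its light out at atan(-offset / focal_length), so
    pixels left of center produce rays that head off to the right.
    """
    angles = []
    for i in range(pixels_per_lens):
        # Offset of this pixel's center from the center of the lens.
        offset_mm = (i - (pixels_per_lens - 1) / 2) * pixel_pitch_mm
        angles.append(math.degrees(math.atan2(-offset_mm, focal_length_mm)))
    return angles


# Assumed numbers: 3 pixels per lens, 0.05 mm pixels, 0.5 mm focal length.
for index, angle in enumerate(ray_angles_deg(3, 0.05, 0.5)):
    print(f"pixel {index}: ray leaves at {angle:+.2f} degrees")
```

With these assumed numbers the three pixels fan out over roughly 11 degrees in total; more pixels under each lens would mean more distinct ray directions.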

The problem that crops up here is that each lens needs to have its own special image, or array of pixels, displayed for it to work correctly, and each lens equates to a single pixel of a normal, non-light field image. This divides the screen’s resolution by however many rays of light need to be presented per pixel, and, thus, how many normal pixels (and rays) a single lensed pixel covers. Either way, the end result is that microlens arrays end up with much less resolution than a normal display.

Which brings us to the question of just how many rays one needs to provide in order to fool your eyes. The answer seems to vary by study, but a 3x3 array of rays seems enough to fool your eyes and give you a full range of “accommodation,” or the closest point your eyes can focus on compared to the furthest point they can focus on. Because your eyes can focus freely, there’s neither a vergence/accommodation conflict nor any cross-eyed view - at least for a narrow field of view.

Which is to mention that just like parallax barriers, light fields have a “magic zone” where you get the effect. The more rays you have, the wider the field of view you can provide, but you’re trading off image resolution for more rays. At absolute minimum you need a matrix of 2x2 effective rays to produce any kind of light field effect.

Thus, for microlens arrays you’d need at least quadruple the resolution of a normal display to get a decent looking light field of the same sharpness and nine times the resolution for a good, comfortable light field and that 3x3 matrix. And the wider the field of view you want, the more resolution you need.
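The arithmetic is easy to check. The sketch below assumes "4K" means a 3840 x 2160 panel and "8K" means 7680 x 4320, and that every lens covers a square block of pixels; the function name is made up for this example.

```python
def effective_resolution(panel_w: int, panel_h: int, rays_per_side: int) -> tuple[int, int]:
    """Resolution left over when each lens covers a
    rays_per_side x rays_per_side block of panel pixels."""
    return panel_w // rays_per_side, panel_h // rays_per_side


# Assumed panel sizes: 4K as 3840 x 2160, 8K as 7680 x 4320.
panels = {"4K": (3840, 2160), "8K": (7680, 4320)}

for name, (w, h) in panels.items():
    for rays in (2, 3):
        eff_w, eff_h = effective_resolution(w, h, rays)
        print(f"{name} panel, {rays}x{rays} rays: {eff_w} x {eff_h} light field image")
```

Under those assumptions, a 4K panel with a 2x2 array shows a 1920 x 1080 light field image, while the more comfortable 3x3 array drops it to 1280 x 720.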

The benefits of a wide field of view go beyond having a wider magic zone though. Yes, a light field allows you to focus on different points in this virtual display and gives each eye a different view without the need for glasses. But it can also allow you to tilt your head around to get a different perspective on the scene, producing a full “holographic” effect, wherein looking “into” your phone is like looking into a different world. But this wider field of view needs even more rays to make it look good.

Luckily, we already have smartphone screens at resolutions up to 4K, making a high resolution 2x2 microlens light field display entirely possible with today’s technology. And while even higher resolution small screens have been dismissed as unnecessary for 2D tech, screens with resolutions as high as 8K and perhaps beyond have already been prototyped.

But not even 8K is enough to produce a truly wide field of view and a high resolution light field. But then maybe you don’t need a wide field of view for light fields to be effective.

Light Fields Enhancing Traditional 3D Displays

In traditional 3D displays you still only get one perspective. Move right or left, up or down from the screen and you don’t see the image rotate with you. And this is often a good thing! Movies and TV shows often rely on showing you a single perspective the director chooses. Allowing you to look at a different angle of a scene would mean you might miss important action going on in the scene (VR films have this same problem).

Similarly, video games (usually) already allow you to spin the camera around at will. None of these need you to be able to see different things as you move your head around a display, so copying today’s single perspective view but with a light field can be highly beneficial.

Light fields don’t just have to be used to reproduce a hologram-like effect. You can also get a single perspective, i.e. a narrow field of view, from the light field effect. And with some clever engineering you can produce such an effect without needing an ultra high resolution screen.

Students at Stanford University came up with some nice mathematical and engineering trickery to use just a pair of LCDs stacked atop one another (and positioned so that you can see through one to the other) to reproduce a narrow view light field. This sort of tech could allow consumer VR headsets to have accurate 3D light fields in the not entirely distant future.

Unfortunately, this “not too distant” future is still indeterminate. Engineering problems with this technique, such as the angle of diffraction limiting resolution on this kind of display, among other things, mean it’s probably not going to become mainstream soon.

But there’s no need to despair. If this is not the more immediate solution to light field displays there are others to consider, including some coming very soon, indeed.

As mentioned, the upcoming RED Hydrogen One phone will have a display that can shift from light field mode to 2D mode, with a display made by a start-up called Leia (yes, named after that Leia). Little word has been given on how the phone’s display tech works, other than that it will be some version of the holographic type of display, giving a wide enough field of view to allow you to tilt your head around it.

Exceedingly few people have even gotten to see the prototypes, and with a mere 2560 x 1440 (2D) display, the light field effect will inevitably end up looking fairly low resolution. Yet, the phone is on its way, along with a (no doubt ultra expensive) 8K light field camera to capture professional looking content in light field format.

And so while 3D may have been through a lot since the days of the Avatar movie, there’s hope for more mainstream activity and more light field devices to join the market before long.

-

JoeMomma The Lytro Camera was the 1st consumer light field device. It did not do 3D though.

https://www.amazon.com/Lytro-Light-Field-Camera-Graphite/dp/B0099QUSGM -

bit_user If you’ve noticed Big Hero 6

Funny you should mention that, because I had to import the 3D blu-ray from the UK - they didn't sell it in the US!

Pretty good movie, but it bugged me a bit with the things these kids can supposedly build.

This is what Peter Jackson was attempting to do with filming and displaying The Hobbit at 48fps. Unfortunately for Jackson, a lot of people didn’t like the “non-traditional” look of high frame rates.

That's interesting, because the Hobbit 3D blu-ray looked horrible to me, until I turned on my TV's motion smoother (which effectively doubled what I presume was a 24 Hz version to 48 Hz per eye).

Honestly, you'll get used to the high framerate video. Then, you won't want to go back. Get a TV with a good motion smoother and just leave it on for a couple days. Except for video games, of course, since it can add latency. My TV's "game mode" automatically disables it.

Light fields can also fix some of 3D’s other problems already mentioned, but tackling this particular issue is where light fields truly shines.

This "accommodation" problem is one of the bigger issues with current AR tech. It's why AR is probably the killer app, for lightfields. That's why so many are excited about Magic Leap finally launching, this year.

The problem that crops up here is that each lens needs to have its own special image, or array of pixels, displayed for it to work correctly, and each lens equates to a single pixel of a normal, non-light field image.

The way I describe it is that each pixel needs to be able to simultaneously appear as a different color from different directions.

Movies and TV shows often rely on showing you a single perspective the director chooses. Allowing you to look at a different angle of a scene would mean you might miss important action going on in the scene (VR films have this same problem).

Definitely not the same problem as VR movies, which usually make the mistake of putting you in the midst of the action. Looking at a lightfield display is like looking through a window. It constrains your view much more than even watching a play performed live on stage, for instance.

I realize the article was already a bit longish, but some mention of Lytro, and the way it enables you to do retrospective pan, zoom, and focus could've been illuminating. -

linuxgeex Dude, Polarization is not Quantum Spin Up/Down. Polarization is the plane of orientation of the light, and/or the direction of rotation of the EM field components as it propagates. Typical polarizing lenses polarize the light to a plane, so placing 2 lenses at 90 degrees to each other blocks 100% of the light passing through the pair of lenses. The lenses used for theatres use Clockwise / Counter-clockwise polarizing filters which don't manage to block planar polarized light but do manage to block the opposite circular polarization. This is why you can rotate your head without mixing channels, but it's also why there's always some ghosting - because when the light bounces off the screen a small portion of it changes polarization.

A Twisted Nematic (TN) LCD display has a single planar polarization which is why it has poor viewing angles - as you move off plane less and less light escapes in the direction you're looking from. That has nothing to do with Quantum Spin, which is a Quantum State of Particles - not light. https://en.wikipedia.org/wiki/Spin_(physics) -

bob moog Calm down LINUXGEEX, they weren't really that far off. Technically polarization is the result of quantum helicity, but helicity is essentially the same thing as spin except that helicity is used for massless particles and spin is used for particles with mass (helicity actually means the component of the spin in the direction of motion). So their terminology was off but they were basically correct: a "spin up" photon would be the same thing as a right handed helicity photon, and a bunch of them together form a right handed or clockwise polarized light beam which goes through a clockwise polarizing filter. You get linear polarization from a sum of left and right circular polarization. -

AgentLozen paragraph near the bottom said: As mentioned, the upcoming RED Hydrogen One phone will have a display that can shift from light field mode to 2D mode, with a display made by a start-up called Leia (yes, named after that Leia). Little word has been given on how the phone’s display tech works, other than that it will be some version of the holographic type of display, giving a wide enough field of view to allow you to tilt your head around it.

You say that the RED Hydrogen One is a phone that can display 3D images. I clicked on the link to the Leia website and they were showing pictures of some science fiction hologram stuff. I'm not clear on what the Hydrogen One's final output will be like. Will the user see an image like what the Nintendo 3DS provides, or will it project a holographic Luke Skywalker for you to duel with? -

bit_user 21086981 said: I'm not clear on what the Hydrogen One's final output will be like. Will the user see an image like what the Nintendo 3DS provides, or will it project a holographic Luke Skywalker for you to duel with?

I can't comment on that specific device, but lightfield displays can produce images that seem to extend forward from the display surface (or recede back into it) in the same way as existing 3D technologies. Looking at the display from the side, you will see nothing. You have to be looking roughly perpendicular to the plane of the display.

The difference vs. 3D glasses is that you can move your head and see around things in the image. Likewise, it can support multiple viewers who will see slightly different perspectives. Again, very much like looking through a window, or at a holographic print.

The intro vid on this site is a cool proof-of-concept. Just watch it and you'll intuitively understand.

http://www.fovi3d.com/

(video direct link: https://vimeo.com/192728352/b38ff7b21a )

Worthwhile reading:

http://www.fovi3d.com/technology/

Also, a demo video of their new tech (seems less impressive to me, but what do I know?):

https://www.youtube.com/embed/oRjKKJM8IQc -

fellewein I've never seen glassless 3D content anywhere much less from a phone screen. I don't believe in this product at all. They cannot release any specifics, yet they act like the release date is around the corner? I really don't think this thing will even happen in 2018.

https://tvtapapk.com/ -

AgentLozen I looked through those links you posted. That's neat stuff. I wonder what the viewing angle is like with this technology. In the case of the 3DS, your eyes had to be aligned juuuuuuust right for the best experience. It would be cool if there was a little more flexibility with this light field stuff. Thanks for the links.