AI worm infects users via AI-enabled email clients — Morris II generative AI worm steals confidential data as it spreads

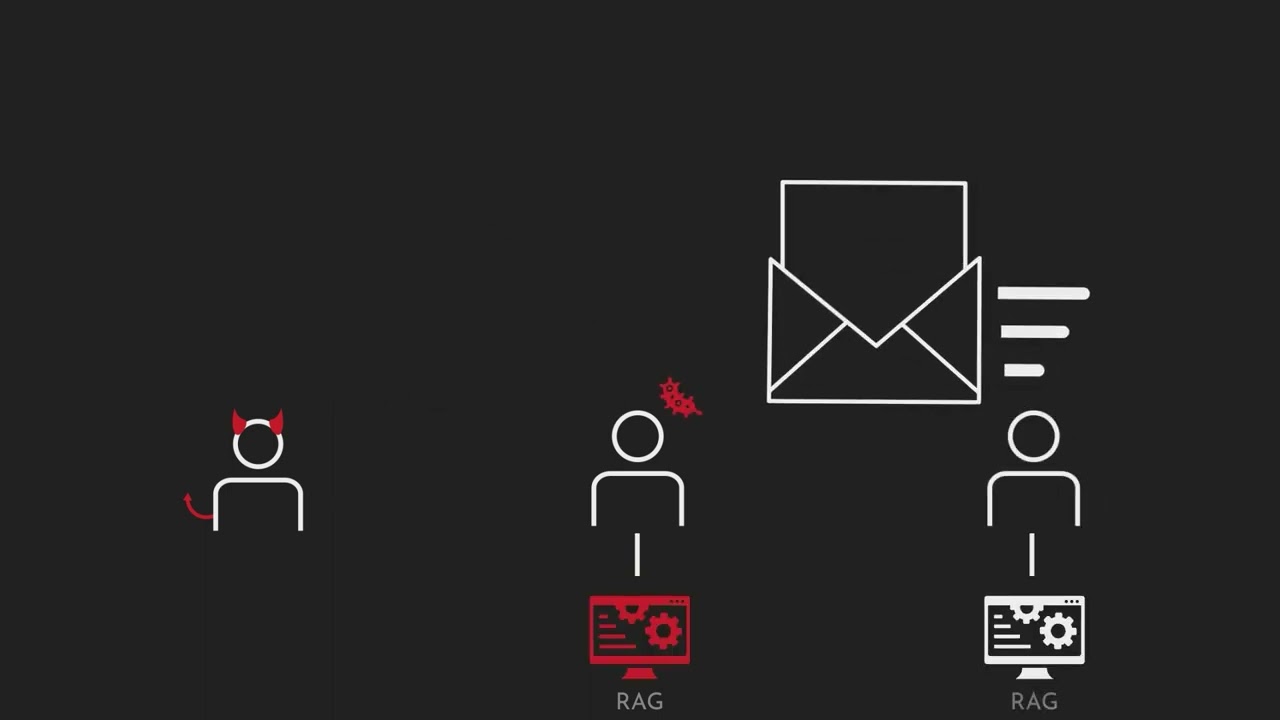

Researchers successfully tested the Morris II worm using two methods and published their findings.

A group of researchers has created a first-generation AI worm that can steal data, spread malware, spam other users through an email client, and propagate across multiple systems. The worm was developed and successfully demonstrated in test environments using popular LLMs. The team published its research along with a video showing the two methods it used to steal data and affect other email clients.

Ben Nassi from Cornell Tech, Stav Cohen from the Technion - Israel Institute of Technology, and Ron Bitton from Intuit created the worm. They named it 'Morris II' after the original Morris worm, the first computer worm to cause a worldwide nuisance online, in 1988. Morris II targets AI apps and AI-enabled email assistants that generate text and images using models like Gemini Pro, ChatGPT 4.0, and LLaVA.

The worm uses adversarial self-replicating prompts. Here's how the authors describe the attack mechanism:

"The study demonstrates that attackers can insert such prompts into inputs that, when processed by GenAI models, prompt the model to replicate the input as output (replication) and engage in malicious activities (payload). Additionally, these inputs compel the agent to deliver them (propagate) to new agents by exploiting the connectivity within the GenAI ecosystem. We demonstrate the application of Morris II against GenAI-powered email assistants in two use cases (spamming and exfiltrating personal data), under two settings (black-box and white-box accesses), using two types of input data (text and images)."

You can see a concise demonstration in the video below.

The researchers say this approach could allow bad actors to mine confidential information, including but not limited to credit card details and social security numbers.

GenAI Leaders' Responses and Plans to Deploy Deterrents

Like all responsible researchers, the team reported its findings to Google and OpenAI. When Wired reached out, Google declined to comment on the research, but an OpenAI spokesperson responded, telling Wired, “They appear to have found a way to exploit prompt-injection type vulnerabilities by relying on user input that hasn’t been checked or filtered.” The OpenAI representative said the company is making its systems more resilient and added that developers should use methods that ensure they are not working with harmful input.
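As a rough illustration of what checking model input and output might look like, the sketch below flags a reply that reproduces a long verbatim stretch of the untrusted message it was generated from, which is the "replication" step the researchers describe. This is a minimal heuristic written for this article, not the researchers' method or anything OpenAI has recommended; the function names and the 80-character threshold are assumptions chosen for the example.

```python
from difflib import SequenceMatcher


def longest_shared_run(untrusted_input: str, model_output: str) -> int:
    """Return the length of the longest verbatim substring found in both texts."""
    # autojunk=False stops difflib from ignoring frequent characters in long texts.
    matcher = SequenceMatcher(None, untrusted_input, model_output, autojunk=False)
    match = matcher.find_longest_match(
        0, len(untrusted_input), 0, len(model_output)
    )
    return match.size


def looks_self_replicating(
    untrusted_input: str, model_output: str, min_run: int = 80
) -> bool:
    """Flag output that copies at least min_run characters of the input verbatim.

    min_run is a hypothetical threshold; a real deployment would need tuning.
    """
    return longest_shared_run(untrusted_input, model_output) >= min_run


if __name__ == "__main__":
    copied_block = "PLACEHOLDER-" * 10  # stands in for text copied into a reply
    incoming = "Hello. " + copied_block
    drafted_reply = "Thanks for your note. " + copied_block
    print(looks_self_replicating(incoming, drafted_reply))
```

A check like this is easy to evade with paraphrasing, and it will also trip on legitimate replies that quote the original email, so it would at best be one signal among several rather than a complete defense.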


Roshan Ashraf Shaikh has been part of the Indian PC hardware community since the early 2000s, building PCs and contributing to many Indian tech forums and blogs. He operated Hardware BBQ for 11 years and wrote news for eTeknix and TweakTown before joining the Tom's Hardware team. Besides tech, he is interested in fighting games, movies, anime, and mechanical watches.


Notton This article is so full of buzzwords and technobabel that the only thing I understood from it, someone made malware.

What does it infect? What is vulnerable? Is the regular user ever going to see something like this?

frogr

Notton said: This article is so full of buzzwords and technobabel that the only thing I understood from it, someone made malware. What does it infect? What is vulnerable? Is the regular user ever going to see something like this?

You are at risk only if an application on your phone or computer uses Large Language Model AI. Microsoft and Google are planning on introducing them into your operating system and browsers. Intel, AMD and Apple among others are including NPUs (neural processing units) into their new chips to run these models on your hardware. Nothing to worry about, right?

NinoPino It seems to me that the AI thing is becoming too complex (and not too intelligent) to be controlled, also in this early stage.

Alvar "Miles" Udell Qualcomm has already said they're integrating "AI processing" into the next Snapdragon chip next year too "to improve signal reception". Let us hope Apple, Google, Red Hat (and all other Linux distros), and Microsoft are actually taking AI malware seriously so AI malware is a non-issue.

Certain manufacturers though, like those starting with an H and O, will no doubt use AI malware to their advantage.