Baidu's 'Deep Voice 2' Promises Next-Gen Real-Time Speech Synthesis Technology

Baidu launched Deep Voice 2, the next generation of its neural text-to-speech technology. The new version is based on the same pipeline as Deep Voice 1, but Baidu claims it is much faster and delivers significantly improved speech quality.

Improving On Deep Voice 1

With Deep Voice 1, the company still needed about 20 hours of training audio for each voice. With the improved Deep Voice 2 technology, less than half an hour of audio per speaker is enough to “train” a new voice.

This also allows the new system to scale to hundreds of different voices and accents. This could, for instance, make the “read-aloud” feature in many ebook reading applications more appealing due to all the unique and personalized voices you could choose when listening to an ebook.

The Deep Voice 2 technology can learn on its own, from scratch, all the shared qualities of different voices, and then it can imitate them.

“Deep Voice 2 can learn from hundreds of voices and imitate them perfectly,” said the company in a blog post.

Baidu uploaded some samples online, demonstrating the rather high quality of the voices, as well as the different accents that they use.

Outperforming DeepMind’s “Wavenet”

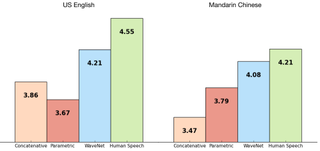

Wavenet is DeepMind’s “breakthrough” technology that has significantly narrowed the gap to human-like speech. The Mean Opinion Score (MOS) for DeepMind’s Wavenet was 4.21 for U.S. English, whereas human speech scored 4.55. For Mandarin, Wavenet’s MOS was 4.08, while human speech scored 4.21.

However, despite Wavenet’s ability to produce near-human levels of speech quality, it has one significant flaw at the moment: the large amount of computing resources it requires. According to Baidu, it can take minutes or more to generate a few seconds of speech with a Wavenet-like technology. The company also said that its Deep Voice technology is capable of synthesizing speech up to 400 times faster than other models like Wavenet.

In a recent paper, Baidu also showed the MOS for its Deep Voice and Deep Voice 2 technologies, and it looks like Baidu’s technologies compromise on speech quality to achieve the higher performance. Deep Voice achieved an MOS of only 2.05 for single-speaker models, while the new Deep Voice 2 received a score of 2.96, which is a 44% improvement in speech quality over the previous generation.

However, as you can see, Deep Voice 2 also has a significantly lower score than DeepMind’s Wavenet technology in speech quality. Baidu was also looking at developing a hybrid Deep Voice technology with tens of Wavenet (convolutional) layers.

The speech quality could increase up to 3.53 MOS with 80 Wavenet layers, but the company offered no mention of how much the performance would degrade with all the Wavenet layers slowing it down. Baidu said that it may further investigate the hybrid approach in the future.
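The percentages and gaps discussed above can be checked with a few lines of Python. This is only a sketch of the arithmetic: the MOS values are the ones quoted in this article, the helper name `relative_gain` and the dictionary keys are ours, and scores taken from different listening studies are not strictly comparable.

```python
def relative_gain(old: float, new: float) -> float:
    """Return the percentage change going from `old` to `new`."""
    return (new - old) / old * 100.0


# MOS values as quoted in this article (key names are illustrative).
MOS = {
    "deep_voice_1": 2.05,
    "deep_voice_2": 2.96,
    "deep_voice_2_hybrid_80_layers": 3.53,
    "wavenet_us_english": 4.21,
    "human_us_english": 4.55,
}

# Improvement of Deep Voice 2 over Deep Voice 1 (the "44%" figure).
dv2_gain = relative_gain(MOS["deep_voice_1"], MOS["deep_voice_2"])

# Improvement of the 80-layer hybrid over Deep Voice 1.
hybrid_gain = relative_gain(
    MOS["deep_voice_1"], MOS["deep_voice_2_hybrid_80_layers"]
)

# Remaining absolute gap between Deep Voice 2 and Wavenet (U.S. English).
gap_to_wavenet = MOS["wavenet_us_english"] - MOS["deep_voice_2"]

print(f"Deep Voice 2 vs. Deep Voice 1: {dv2_gain:.1f}% higher MOS")
print(f"Hybrid (80 layers) vs. Deep Voice 1: {hybrid_gain:.1f}% higher MOS")
print(f"Deep Voice 2 trails Wavenet by {gap_to_wavenet:.2f} MOS points")
```

Even the 80-layer hybrid's 3.53 remains below Wavenet's 4.21, which is consistent with the speed-versus-quality trade-off described above.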

A Future Powered By Synthetic Speech

Digital assistants have risen in popularity partly because the latest improvements in machine learning have made them much smarter, but also because they’ve begun to sound increasingly like humans. The result has been easier and less awkward conversations with various AI assistants.

Baidu also believes that speech will be one of the main ways we’ll interact with computers in the future. The company said that it’s working hard to enable that future, through speech synthesis technologies such as Deep Voice, speaker identification technologies such as the recently announced Deep Speaker, and the end-to-end speech recognition system Deep Speech 2.
