Image Claims to Show 2nd-Gen Intel Larrabee Graphics Card

Intel's axed GPU continues to make the rounds.

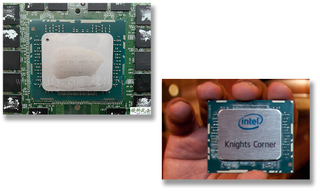

Hardware collector YJFY has posted images of what they claim is Intel's 2nd Generation Larrabee graphics card — which never came to market, but existed as hardware evaluation samples. Intel's Larrabee 2 graphics board was meant to be based on the chip eventually known as the Knights Corner, and this is the first time we've seen (supposed) images of this device published.

The alleged 2nd Generation Larrabee graphics card carries a processor that looks exactly like Intel's Knights Corner, which an Intel executive demonstrated at the SC11 conference in November 2011. The processor is an engineering sample produced in late 2011 and carries the QBAY stepping. It reportedly has 60 cores running at 1.00 GHz, which matches the specifications of Intel's KNC. Unlike production Xeon Phi 'Knights Corner' cards, which shipped with 6GB to 16GB of GDDR5, this processor is paired with 4GB of GDDR5 memory.

The board is clearly a very early evaluation sample, with diagnostic LEDs, multiple connectors for probes, and various jumpers. It also has a DVI connector, which is normally used for video output. Given that Tom Forsyth, one of the developers on Intel's Larrabee project, once said that the company's Knights Corner silicon still contained GPU hardware such as graphics outputs and texture samplers, we're not surprised to see a DVI connector on a KNC-based board.

While we cannot be sure that the card in the picture is indeed a Larrabee 2 built on Knights Corner silicon, there is plenty of direct and indirect evidence that we're dealing with the 2nd Generation Larrabee.

"Remember — KNC is literally the same chip as LRB2. It has texture samplers and a video out port sitting on the die," Forsyth said. "They don't test them or turn them on or expose them to software, but they are still there – it is still a graphics-capable part."

Intel's Larrabee was meant to be both a client PC graphics processor and a high-performance computing co-processor, built from 4-way Hyper-Threaded, in-order x86 cores (derived from the original Pentium) with 512-bit vector extensions, and intended to deliver flexible programmability alongside competitive performance. After Intel determined that Larrabee did not live up to expectations in graphics workloads (it was still largely a CPU with graphics capabilities), it refocused the project entirely on HPC, which is how Xeon Phi was born.

"[In 2005, when Larrabee was conceived, Intel] needed something that was CPU-like to program, but GPU-like in number crunching power," said Forsyth. "[…] The design of Larrabee was of a CPU with a very wide SIMD unit, designed above all to be a real grown-up CPU — coherent caches, well-ordered memory rules, good memory protection, true multitasking, real threads, runs Linux/FreeBSD, etc."


Ultimately, though, Intel's Xeon Phi and its MIC (Many Integrated Core) architecture, like other massively parallel CPU architectures (Sony's Cell, Sun's Niagara), failed to deliver competitive performance against Nvidia's compute GPUs. That is why Intel eventually decided to re-enter the discrete GPU business with its Arc GPUs and to introduce its own Ponte Vecchio compute GPUs.

Anton Shilov is a Freelance News Writer at Tom’s Hardware US. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user

Glock24 said: Can it run Crysis?

I think the original Larrabee could, as they probably got pretty far along with their Direct3D support before it got cancelled. But, the article states that the graphics hardware wasn't tested or debugged on this generation. So, perhaps in theory, but certainly not in practice.

bit_user

From the article:

the company's Knights Corner silicon still feature GPU parts like graphics outputs and texture samples

I have to wonder if some engineers left that stuff in there, clinging to the hope that Intel would reverse its decision not to release a GPU version.

"The design of Larrabee was of a CPU with a very wide SIMD unit, designed above all to be a real grown-up CPU — coherent caches, well-ordered memory rules, good memory protection, true multitasking, real threads, runs Linux/FreeBSD, etc."

This was its undoing. All of that stuff has real costs, which pure GPUs (mostly) don't pay. That stuff has a lot to do with the reason it couldn't compete either as a dGPU or a GPU-like compute accelerator!

which is why Intel eventually decided to re-enter discrete graphics GPU business

Not only that. When the Larrabee / Xeon Phi project started, Intel didn't own Altera (the second-biggest FPGA maker), which they bought around when Xeon Phi (KNL) first launched.

Also, before Xeon Phi was canceled, Intel had acquired AI chip maker Nervana. I'm sure that also factored into their decision to kill Xeon Phi, since AI was one of the premier workloads it was targeting. Of course, Intel later changed their mind about Nervana and killed it, after snapping up Habana Labs. Ever fickle, Intel.

Anyway, what happened between when Xeon Phi started and when they killed it is that they effectively carved up its market into three: FPGA-based accelerators, purpose-built AI accelerators, and traditional GPUs. A piece of it is also being handled by adding features like DL-Boost and AMX to their server CPUs.

You might even say Intel took a step back and devised a more thoughtful, nuanced approach to the markets Larrabee & Xeon Phi targeted. Their first attempt seemed to come from a very CPU-centric mindset and trying to throw x86 at all problems.
