Intel's Upcoming DG2 Rumored to Compete With RTX 3070

But take the card's specs with a huge grain of salt

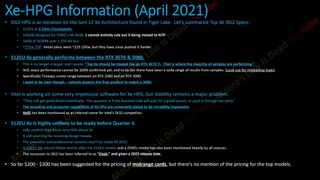

According to Moore's Law Is Dead, Intel's successor to the DG1, the DG2, could be arriving sometime later this year with significantly more firepower than Intel's current DG1 graphics card. Of course it will be faster — that much is a given — but the latest rumors have it that the DG2 could perform similarly to an RTX 3070 from Nvidia. Could it end up as one of the best graphics cards? Never say never, but yeah, big scoops of salt are in order. Let's get to the details.

Supposedly, this new Xe graphics card will be built using TSMC's N6 6nm node, and will be manufactured purely on TSMC silicon. This isn't surprising as Intel is planning to use TSMC silicon in some of its Meteor Lake CPUs in the future. But we do wonder if a DG2 successor based on Intel silicon could arrive later down the road.

According to MLID and previous leaks, Intel's DG2 is specced out to have up to 512 execution units (EUs), each with the equivalent of eight shader cores. The latest rumor is that it will clock at up to 2.2GHz, a significant upgrade over current Xe LP, likely helped by the use of TSMC's N6 process. It will also have a proper VRAM configuration with 16GB of GDDR6 over a 256-bit bus. (DG1 uses LPDDR4 for comparison.)

Earlier rumors suggested power use of 225W–250W, but now the estimated power consumption is around 275W. That puts the GPU somewhere between the RTX 3080 (320W) and RTX 3070 (220W), but with RTX 3070 levels of performance. But again, lots of grains of salt should be applied, as none of this information has been confirmed by Intel. TSMC N6 uses the same design rules as the N7 node, but with some EUV layers, which should reduce power requirements. Then again, we're looking at a completely different chip architecture.

Regardless, Moore's Law Is Dead quotes one of its 'sources' as saying the DG2 will perform like an RTX 3070 Ti. This is quite strange since the RTX 3070 Ti isn't even an official SKU from Nvidia (at least not right now). Put more simply, this means the DG2 should be slightly faster than an RTX 3070. Maybe.

That's not entirely out of the question, either. Assuming the 512 EUs and 2.2GHz figures end up being correct, that would yield a theoretical 18 TFLOPS of FP32 performance. That's a bit less than the 3070, but the Ampere GPUs share resources between the FP32 and INT32 pipelines, meaning the actual throughput of an RTX 3070 tends to be lower than the pure TFLOPS figure would suggest. Alternatively, 18 TFLOPS lands half-way between AMD's RX 6800 and RX 6800 XT, which again would match up quite reasonably with a hypothetical RTX 3070 Ti.
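For readers who want to check the arithmetic, here is a minimal sketch of how the 18 TFLOPS figure falls out of the rumored specs. It assumes each shader ALU retires one fused multiply-add (two FLOPs) per clock, which is the usual convention for peak FP32 numbers but is not stated in the leak. The function name is ours, and the Nvidia and AMD comparison points use the vendors' listed shader counts and boost clocks rather than anything from the rumor.

```python
def peak_fp32_tflops(shader_alus: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: every ALU issues one fused multiply-add (2 FLOPs) per clock.
    """
    return shader_alus * flops_per_clock * clock_ghz / 1000.0


# Rumored DG2 configuration: 512 EUs, 8 ALUs per EU, up to 2.2GHz.
dg2 = peak_fp32_tflops(512 * 8, 2.2)

# Comparison points (assumed: vendor-listed shader counts and boost clocks;
# sustained real-world clocks will differ).
rtx_3070 = peak_fp32_tflops(5888, 1.725)
rx_6800 = peak_fp32_tflops(3840, 2.105)
rx_6800_xt = peak_fp32_tflops(4608, 2.250)

for name, value in [
    ("DG2 (rumored)", dg2),
    ("RTX 3070", rtx_3070),
    ("RX 6800", rx_6800),
    ("RX 6800 XT", rx_6800_xt),
]:
    print(f"{name}: {value:.1f} TFLOPS")
```

These are paper numbers only. They say nothing about how well each architecture keeps its ALUs fed in actual games.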

There are plenty of other rumors and 'leaks' in the video as well. For example, at one point MLID discusses a potential DLSS alternative called, not-so-creatively, XeSS, and the Internet echo chamber has already begun to propagate that name. Our take: Intel doesn't need a DLSS alternative. Assuming AMD can get FidelityFX Super Resolution (FSR) to work well, it's open source and GPU vendor agnostic, meaning it should work just fine with Intel and Nvidia GPUs as well as AMD's offerings. We'd go so far as to say Intel should put its support behind FSR, just because an open standard that developers can support and that works on all GPUs is ultimately better than a proprietary standard. Plus, there's not a snowball's chance in hell that Intel can do XeSS as a proprietary feature and then get widespread developer support for it.


Other rumors are more believable. The encoding performance of DG1 is already impressive, building off Intel's existing QuickSync technology, and DG2 could up the ante significantly. That's less of a requirement for gaming use, but it would certainly enable live streaming of content without significantly impacting frame rates. Dedicated AV1 encoding would also prove useful.

The DG2 should hopefully be available to consumers by Q4 of 2021, but with the current shortages plaguing chip fabs, it's anyone's guess as to when these cards will actually launch. Prosumer and professional variants of the DG2 are rumored to ship in 2022.

We don't know the pricing of this 512EU SKU, but there is a 128EU model planned down the road, with an estimated price of around $200. More importantly, we don't know how the DG2 or its variants will actually perform. Theoretical TFLOPS doesn't always match up to real-world performance, and architecture, cache, and above all drivers play a critical role for gaming performance. We've encountered issues testing Intel's Xe LP equipped Tiger Lake CPUs with some recent games, for example, and Xe HPG would presumably build off the same driver set.

Again, these are very much unconfirmed rumors, and things are bound to change by the time DG2 actually launches. But if this data is even close to true, Intel's first proper dip into the dedicated GPU market in over 10 years (DG1 doesn't really count) could make the company decently competitive with Ampere's mid-range and high-end offerings, and by that token it would also compete with AMD's RDNA2 GPUs.

Aaron Klotz is a freelance writer for Tom’s Hardware US, covering news topics related to computer hardware such as CPUs and graphics cards.


JayNor

TSMC's N6 uses the same design rules as N7, but makes use of EUV equipment, according to fuse.wikichip:

https://fuse.wikichip.org/news/2879/tsmc-5-nanometer-update/

JayNor

"Intel doesn't need a DLSS alternative..."

Intel has been opening rendering and ray tracing software and converting them to work with oneAPI. They may want to write generic DPC++ code for DLSS, but take better advantage of their device-specific features in their GPU Level Zero code.

JarredWaltonGPU

> JayNor said: "Intel doesn't need a DLSS alternative..."
>
> Intel has been opening rendering and ray tracing software and converting them to work with oneAPI. They may want to write generic DPC++ code for DLSS, but take better advantage of their device-specific features in their GPU Level Zero code.

DLSS is still a proprietary Nvidia solution that uses Nvidia's tensor cores, so Intel would need to build an alternative from the ground up, then get developers to use it. Which is why it would be easier to support FSR, since that will have AMD behind it and is presumably well under way in development. Of course, we still need to see how FSR looks and performs on AMD and Nvidia GPUs. But despite having the "most GPUs" in the wild, Intel gets very little GPU support from game devs, since all of its current GPUs are slow integrated solutions.

TerryLaze

> JarredWaltonGPU said: DLSS is still a proprietary Nvidia solution that uses Nvidia's tensor cores, so Intel would need to build an alternative from the ground up, then get developers to use it.

But intel's new GPUs do have an AI "core" asic or whatever it is, it has AI capable hardware, the issue would be to do all the training it needs to make the results look good, well if the AI is good/fast enough that is.

Does this even need any input from developers?!

This is just taking whatever resolution and is upscaling it, right?!

thGe17

> JarredWaltonGPU said: DLSS is still a proprietary Nvidia solution that uses Nvidia's tensor cores, so Intel would need to build an alternative from the ground up, then get developers to use it. Which is why it would be easier to support FSR, since that will have AMD behind it and is presumably well under way in development. Of course, we still need to see how FSR looks and performs on AMD and Nvidia GPUs. But despite having the "most GPUs" in the wild, Intel gets very little GPU support from game devs, since all of its current GPUs are slow integrated solutions.

Only nVidia's implementation is proprietary, not the theoretical construct itself. And of course it has to be this way, because this is exactly the same thing AMD (maybe together with Microsoft; see DirectML) tries to achieve with its Super Resolution alternative.

Simply looking at a single, isolated frame won't work; the AI-based algorithm has to take multiple frames and motion vectors into account to be competitive with DLSS 2.x.

Additionally the usage of Tensor Cores is only optional. nVidia uses (restricts to) them due to marketing reasons and because they speed up necessary AI-calculations, but for example early beta-implementations of DLSS 2 were solely processed on ALUs/CUDA-cores.

AMD will have to process these calculations also via their ALUs, because RDNA2 does not have special hardware or ISA-extensions.

Intel's Xe has DP4a for speeding up inferencing workloads by a huge amount. This is no dedicated hardware, but this ISA-extension still manages to provide a significant performance gain for those types of workloads.

Notes on some common misconceptions:

a) DLSS 2.x needs no special treatment. The technology has to be implemented into the engine and it runs with the unified NN out of the box.

Note that there exists already a universal DLSS 2-implementation in Unreal Engine 4, therefore its usage should continue to increase, because it is now available to every UE developer.

b) DLSS and competing technologies should have similar requirements with regards to game engines, so a developer who has already implemented one technology should quite easily be able to add another.

c) If Intel uses a completely different name like "XeSS", then it is most likely its own technology and not an adoption of AMD's Super Resolution. This is plausible, because Intel has more manpower and more AI knowledge than AMD, so it may have no need to rely on AMD to deliver something production ready ... eventually.

d) Special hardware like Tensor Cores or ISA-extensions like DP4a are always only optional for AI processing. The regular ALUs are sufficient, but a more specialized chip design will be faster in this case, therefore it is an advantage to have this functionality.

For example, Microsoft's DirectML also tries to utilize Tensor Cores (and similar hardware) if the GPU provides them.

JarredWaltonGPU

> TerryLaze said: But intel's new GPUs do have an AI "core" asic or whatever it is, it has AI capable hardware, the issue would be to do all the training it needs to make the results look good, well if the AI is good/fast enough that is.
>
> Does this even need any input from developers?!
>
> This is just taking whatever resolution and is upscaling it, right?!

Unless Intel (and/or AMD) builds a DLSS alternative that doesn't require direct game support (meaning it's built into the drivers), it would need dev support. It's 'intelligent' upscaling that's supposed to anti-alias as well as enhance details. What's really happening with the code? That's a bit harder to determine. Nvidia knows that it's running on the Tensor cores, but it's a weighted network that was trained by feeding it a bunch of data. Could that network run on AMD and Intel hardware? Theoretically, but right now it's part of Nvidia's drivers and Nvidia wouldn't want to provide any help to its competitors.

ezst036

Having more video cards on the market ought to be helpful to the current situation with supply issues.

JarredWaltonGPU

> ezst036 said: Having more video cards on the market ought to be helpful to the current situation with supply issues.

Sure ... except they're being manufactured at TSMC, which is already maxed out on capacity. So for every DG2 wafer made, some other wafer can't be made. If DG2 really is N6, it's perhaps less of a problem, but TSMC is still tapped out.

hotaru.hino

> thGe17 said: Notes on some common misconceptions:
>
> a) DLSS 2.x needs no special treatment. The technology has to be implemented into the engine and it runs with the unified NN out of the box.
>
> Note that there exists already a universal DLSS 2-implementation in Unreal Engine 4, therefore its usage should continue to increase, because it is now available to every UE developer.

If I recall correctly, the only thing that DLSS 2.0 requires now is that the game engine supports some form of temporal anti-aliasing since it uses previous frames to reconstruct details in current frames.

waltc3

I'd like to see the silly rumors stop. Didn't people get tired of the last round of idiotic rumors about discrete Intel GPUs (competitive with nothing more than the very bottom of the value market)? Intel is quite a way behind AMD CPUs atm, and the distance Intel is behind AMD and nVidia in the GPU markets is practically incalculable... ;)
