Apple skips Nvidia's GPUs for its AI models, uses thousands of Google TPUs instead

A recently released research paper reveals the details.

Apple has disclosed that it didn’t use Nvidia’s hardware accelerators to develop its recently announced Apple Intelligence features. According to an official Apple research paper (PDF), it instead relied on Google TPUs to crunch the training data behind the Apple Intelligence Foundation Language Models.

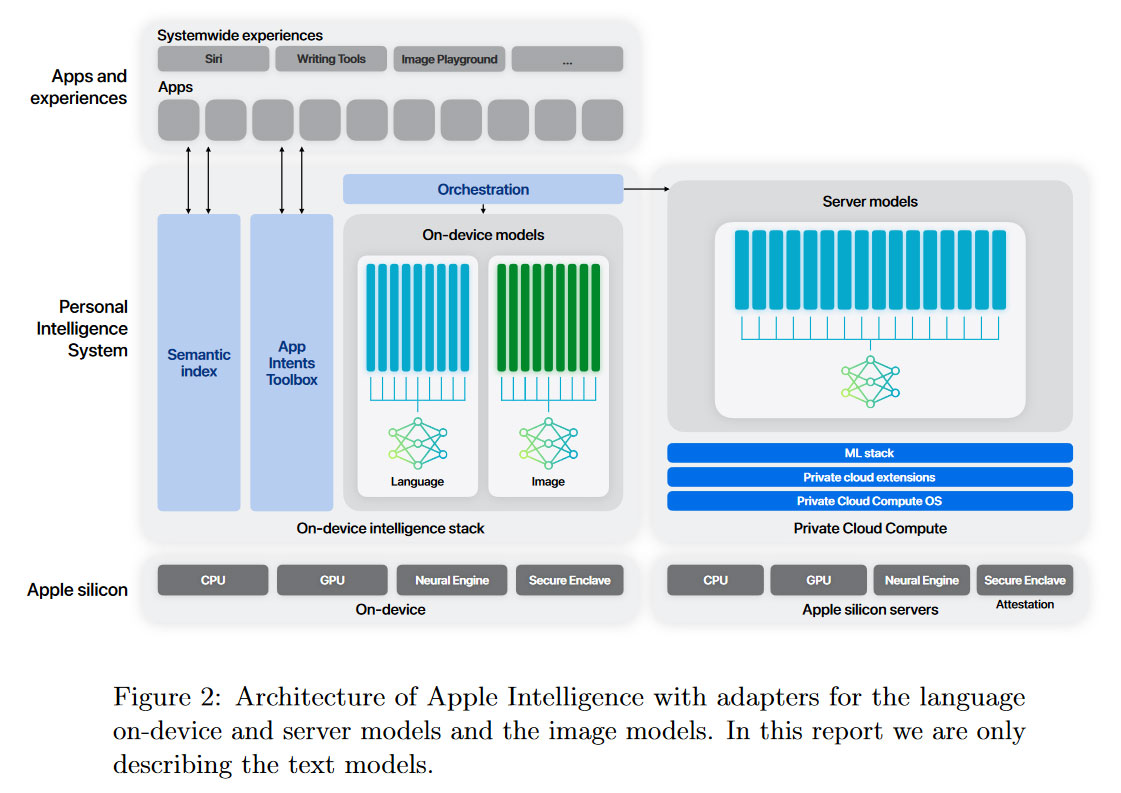

Systems packing Google TPUv4 and TPUv5 chips were instrumental to the creation of the Apple Foundation Models (AFMs). These models, AFM-server and AFM-on-device, were designed to power the online and offline Apple Intelligence features that Apple unveiled at WWDC 2024 in June.

AFM-server is Apple’s biggest LLM, so it remains online-only. According to the research paper, AFM-server was trained on 8,192 TPUv4 chips “provisioned as 8 × 1,024 chip slices, where slices are connected together by the data-center network (DCN).” Pre-training was a three-stage process: it started with 6.3T tokens, continued with 1T tokens, and finished with a context-lengthening stage using 100B tokens.
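As a quick sanity check on those figures, the short Python sketch below multiplies out the slice layout and totals the three pre-training stages. Only the numbers come from the article; the stage labels, constant names, and helper functions are hypothetical conveniences chosen for illustration.

```python
# Hypothetical illustration: totals the figures quoted in the article.
# Stage labels are descriptive names chosen here, not Apple's terminology.
PRETRAINING_STAGES = [
    ("initial pre-training", 6_300_000_000_000),    # 6.3T tokens
    ("continued pre-training", 1_000_000_000_000),  # 1T tokens
    ("context lengthening", 100_000_000_000),       # 100B tokens
]

SLICES = 8               # TPUv4 slices, linked over the data-center network (DCN)
CHIPS_PER_SLICE = 1_024  # chips inside each slice


def total_chips(slices: int, chips_per_slice: int) -> int:
    """Total accelerator count for a job spread over identical slices."""
    return slices * chips_per_slice


def total_tokens(stages: list[tuple[str, int]]) -> int:
    """Sum the token counts across all pre-training stages."""
    return sum(tokens for _, tokens in stages)


def stage_shares(stages: list[tuple[str, int]]) -> dict[str, float]:
    """Each stage's fraction of the overall token budget."""
    total = total_tokens(stages)
    return {name: tokens / total for name, tokens in stages}


if __name__ == "__main__":
    print(f"TPUv4 chips: {total_chips(SLICES, CHIPS_PER_SLICE):,}")
    print(f"Total pre-training tokens: {total_tokens(PRETRAINING_STAGES) / 1e12:.1f}T")
    for name, share in stage_shares(PRETRAINING_STAGES).items():
        print(f"  {name}: {share:.1%}")
```

Run as a script, it reports 8,192 chips and 7.4T tokens in total, with the first stage accounting for roughly 85% of the token budget.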

Apple said the data used to train its AFMs included info gathered from the Applebot web crawler (heeding robots.txt) plus various licensed “high-quality” datasets. It also leveraged carefully chosen code, math, and public datasets.

Of course, the AFM-on-device model is significantly pruned, but Apple reckons its knowledge distillation techniques have optimized this smaller model’s performance and efficiency. The paper reveals that AFM-on-device is a 3B-parameter model, distilled from the 6.4B-parameter server model, which was trained on the full 6.3T tokens.
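The article doesn’t spell out how the distillation works. For readers unfamiliar with the idea, the sketch below shows the generic soft-target formulation from Hinton et al. (2015), in which a small “student” model is trained to match the temperature-softened output distribution of a larger “teacher.” This is an assumption for illustration only: Apple’s paper may use a different loss, and the function names, the `temperature` setting, and the `alpha` mixing weight are all hypothetical.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; subtracting the max logit avoids overflow."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: list[float], teacher_logits: list[float],
            temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T**2.

    Generic soft-target distillation term (Hinton et al., 2015); an assumption,
    not the loss Apple published.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )


def distillation_objective(student_logits: list[float], teacher_logits: list[float],
                           target: int, alpha: float = 0.5,
                           temperature: float = 2.0) -> float:
    """Blend hard-label cross-entropy with the soft-target term.

    `alpha` is a hypothetical mixing weight: 1.0 ignores the teacher entirely.
    """
    hard_ce = -math.log(softmax(student_logits)[target])
    soft = kd_loss(student_logits, teacher_logits, temperature)
    return alpha * hard_ce + (1.0 - alpha) * soft


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # toy logits from a larger "teacher" model
    student = [2.0, 1.5, 0.5]  # toy logits from a smaller "student" model
    print(f"KD term: {kd_loss(student, teacher):.4f}")
    print(f"Combined loss: {distillation_objective(student, teacher, target=0):.4f}")
```

The KD term is zero when the student already reproduces the teacher’s distribution and grows as the two diverge; the temperature softens both distributions so the student also learns from the teacher’s relative ranking of less likely outputs.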

Unlike AFM-server, which was trained on TPUv4 chips, the AFM-on-device model was prepared on Google TPUv5 hardware. The paper reveals that “AFM-on-device was trained on one slice of 2,048 TPUv5p chips.”

It is interesting to see Apple release such a detailed paper, revealing the techniques and technologies behind Apple Intelligence. The company isn’t renowned for its transparency but seems to be trying hard to impress in AI, perhaps because it has been late to the game.


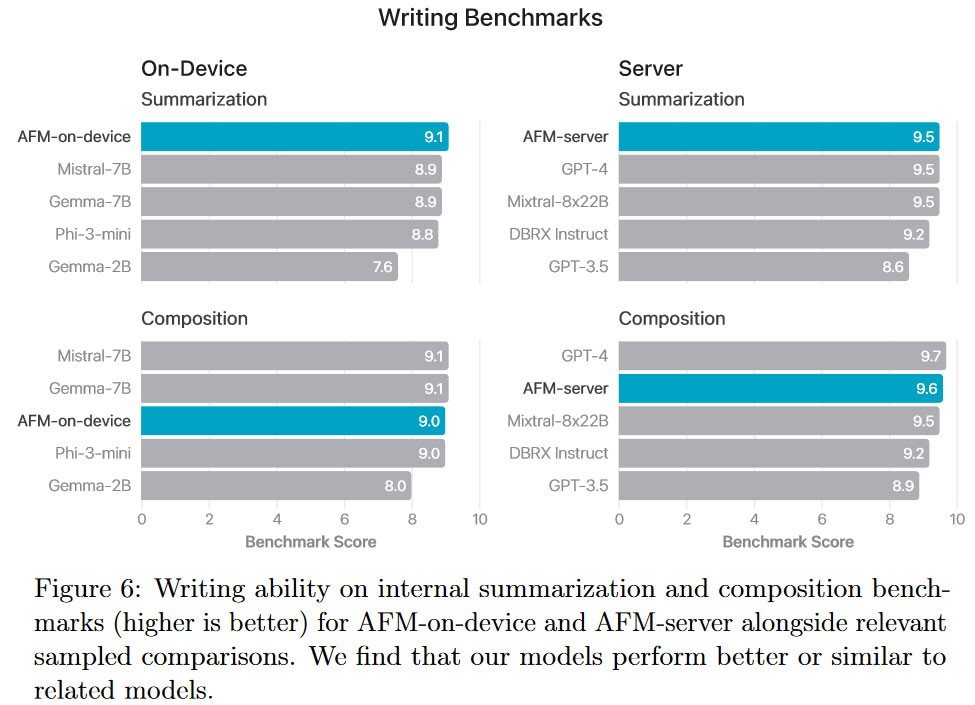

According to Apple’s in-house testing, AFM-server and AFM-on-device excel in benchmarks such as Instruction Following, Tool Use, Writing, and more. We’ve embedded the Writing Benchmark chart above as one example.

If you are interested in some deeper details regarding the training and optimizations used by Apple, as well as further benchmark comparisons, check out the PDF linked in the intro.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

Heat_Fan89

This is NO surprise as Apple is still stinging from their last encounter with Nvidia, which went bad and cost Apple a lot of money many years ago with failed GPUs that Nvidia did not take responsibility for. Both sides blamed each other. Apple is not the only company burned by Nvidia.

EMI_Black_Ace

Well, given that all Apple wants out of the hardware is AI compute, Google TPUs deliver that on a more cost-efficient basis without any of the other "stuff" Nvidia offers.

Kamen Rider Blade

Apple has a "Don't do Business" policy with nVIDIA & its hardware after they were burned by nVIDIA.

husker

Perhaps Apple is willing to disclose its methods in order to show others that there is an AI road that does not lead to Nvidia.

Makaveli

Heat_Fan89 said: "This is NO surprise as Apple is still stinging from their last encounter with Nvidia, which went bad and cost Apple a lot of money many years ago with failed GPUs that Nvidia did not take responsibility for. Both sides blamed each other. Apple is not the only company burned by Nvidia."

Add Microsoft to that list with the original Xbox.

There is a reason all the consoles are using AMD IP.

ezst036 said: "How serious can Apple be about gaming if they refuse to bury the hatchet with Nvidia?"

Apple is a trillion-dollar company; if they cared about gaming, they would have been in that market a long time ago.

Mattzun

Makaveli said: "Add Microsoft to that list with the original Xbox. There is a reason all the consoles are using AMD IP. Apple is a trillion-dollar company; if they cared about gaming, they would have been in that market a long time ago."

Apple has already captured a lot of gaming revenue; it's just not on laptops/desktops.

55 percent of gaming revenue is on mobile devices, and Apple is getting a huge cut of that from both device sales and the App Store.

Back to the article

It's great that Apple was able to define specific goals for AI processing and that Google TPUs worked for them.

It allowed Apple to meet its goals and it freed up general purpose NVidia units for companies that need them.

Given that there is a huge backlog for NVidia AI processors, this seems like a win-win.

Makaveli

Mattzun said: "Apple has already captured a lot of gaming revenue; it's just not on laptops/desktops. 55 percent of gaming revenue is on mobile devices, and Apple is getting a huge cut of that from both device sales and the App Store."

This I know, but I'm PCMR; I don't consider mobile gaming real gaming :)

You are 100% correct!

renz496

husker said: "Perhaps Apple is willing to disclose its methods in order to show others that there is an AI road that does not lead to Nvidia."

Easy. All they need to do is make as much money as Apple on a quarterly basis.