Google 'Headset Removal' Brings Your Face Into Mixed Reality Recordings

Google developed a technique that combines machine learning, 3D vision, and advanced graphics to bring your face back into mixed reality VR recordings.

Presenting virtual reality in a way that bystanders can understand is one of the biggest challenges facing the VR market today. VR is incredible for the person in the headset, but it's hard to translate that experience to spectators.

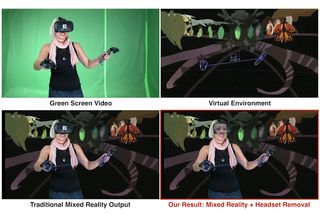

Currently, mixed reality VR filmed with a green screen stands as the most compelling way to translate what virtual reality feels like to bystanders. By mixing the footage of the player into the game, you get to see a reasonably close approximation of the experience.

Mixed reality VR is helping HTC get the word out about VR. A handful of YouTubers pump out regular mixed reality VR content, and those videos are getting more and more popular, but they aren’t as personable as a regular Let’s Play video or a live stream because the player’s face is hidden behind the HMD.

Most people would probably assume nothing can be done about that and move on. But Google researchers aren’t most people. Three teams at Google came together to solve the problem. Researchers from Google’s Machine Perception team collaborated with the Daydream Labs team and the YouTube Spaces recording studio to create Google “Headset Removal,” which combines machine learning, 3D vision (Tango), and advanced graphics techniques to bring your face back into view under the headset.

Google’s approach starts with a dynamic face model capture. Before putting on the Vive, the player must record a 3D capture of the front and sides of their face. During the capture, the subject is instructed to look in all directions so their eye movements are recorded. Google stores the captured data in what it calls a gaze database, which is later used to dynamically generate “any desired eye-gaze” for the synthesized face.
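Google hasn’t published how it queries the gaze database. As a rough illustration, assume each entry stores the gaze direction recorded during capture (as yaw and pitch angles) plus a reference to the matching eye image, and that the eye tracker’s current reading selects the nearest stored sample. The names `GazeSample` and `lookup_gaze` and the nearest-neighbor strategy are hypothetical; a real system would likely blend several samples rather than pick just one.

```python
import math
from dataclasses import dataclass


@dataclass
class GazeSample:
    """One entry in a hypothetical gaze database."""
    yaw_deg: float    # left/right gaze angle recorded during capture
    pitch_deg: float  # up/down gaze angle recorded during capture
    frame_id: str     # placeholder for the captured eye-region image


def gaze_vector(yaw_deg, pitch_deg):
    """Convert yaw/pitch angles to a unit direction vector (x, y, z)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            math.cos(pitch) * math.cos(yaw))


def angular_distance(yaw1, pitch1, yaw2, pitch2):
    """Angle in degrees between two gaze directions."""
    a, b = gaze_vector(yaw1, pitch1), gaze_vector(yaw2, pitch2)
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to [-1, 1] so rounding error can't push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def lookup_gaze(db, yaw_deg, pitch_deg):
    """Return the stored sample whose gaze is closest to the requested one."""
    return min(db, key=lambda s: angular_distance(s.yaw_deg, s.pitch_deg,
                                                  yaw_deg, pitch_deg))


# Illustrative database with four captured gaze directions.
db = [GazeSample(0, 0, "center"), GazeSample(20, 0, "right"),
      GazeSample(-20, 0, "left"), GazeSample(0, 15, "up")]
print(lookup_gaze(db, 18, 2).frame_id)  # prints right
```

Comparing directions as angles between unit vectors, rather than subtracting raw yaw and pitch values, keeps the distance meaningful when the player looks far up or down.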

The next step is recording the gameplay in mixed reality. That’s where Google’s Daydream team and YouTube Spaces come in. Filming mixed reality video requires a special setup consisting of a multi-wall green screen or green room, a powerful PC with an HDMI capture card, a video camera, and an extra Vive controller.


Mixed reality recording splits the game’s view into four quadrants showing the foreground, background, alpha channel, and first-person view. Those layers must then be composited with the camera footage. Before you start recording, you must calibrate the real camera’s view to match the in-game camera’s view, which is an incredibly laborious and frustrating task (trust me).
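For readers curious about what that compositing step actually does, here’s a minimal sketch in Python on grayscale pixel values between 0 and 1. It assumes a quadrant layout of foreground (top-left), foreground alpha (top-right), background (bottom-left), and first-person view (bottom-right); that layout is an assumption and may differ between games and capture tools. It also assumes the player mask (1 where the camera sees the player, 0 where it sees green screen) has already been produced by a chroma key. All function names are illustrative.

```python
def split_quadrants(frame):
    """Split a 2D frame (list of rows) into four equal quadrants.

    Assumed layout (hypothetical): top-left = foreground,
    top-right = foreground alpha, bottom-left = background,
    bottom-right = first-person view.
    """
    hh, hw = len(frame) // 2, len(frame[0]) // 2
    top, bottom = frame[:hh], frame[hh:2 * hh]
    fg = [row[:hw] for row in top]
    alpha = [row[hw:2 * hw] for row in top]
    bg = [row[:hw] for row in bottom]
    fpv = [row[hw:2 * hw] for row in bottom]
    return fg, alpha, bg, fpv


def composite_pixel(fg, alpha, bg, cam, player_mask):
    """Layer background, then the keyed player, then the game foreground."""
    middle = player_mask * cam + (1.0 - player_mask) * bg
    return alpha * fg + (1.0 - alpha) * middle


def composite(game_frame, camera, player_mask):
    """Combine one quadrant game frame with one camera frame.

    `camera` and `player_mask` must match the size of a single quadrant.
    The first-person quadrant is not used in the final mix.
    """
    fg, alpha, bg, _fpv = split_quadrants(game_frame)
    return [[composite_pixel(fg[y][x], alpha[y][x], bg[y][x],
                             camera[y][x], player_mask[y][x])
             for x in range(len(fg[0]))]
            for y in range(len(fg))]


# A 2x2 game frame yields 1x1 quadrants; the foreground is fully transparent
# here (alpha 0.0), so the keyed player from the camera shows through.
out = composite([[0.9, 0.0], [0.2, 0.5]], camera=[[0.7]], player_mask=[[1.0]])
print(out)
```

The layering order is why the game has to render the foreground separately: virtual objects between the camera and the player must be drawn over the live footage, while everything behind the player replaces the green screen.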

If calibrating two hands to the controllers is a nightmare, I don’t want to imagine what it would be like to manually attach the dynamic face model to a moving Vive headset. Google avoided compounding the calibration nightmare by attaching a fiducial marker, which it can track in 3D, to the front of the Vive headset. The marker allowed Google to automate the calibration process.
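Google hasn’t detailed its tracking math, but the general idea behind marker-based placement can be sketched with a standard rigid transform followed by pinhole camera projection. Assume the marker tracker reports the headset’s rotation `R` (a 3x3 matrix) and translation `t` relative to the real camera, that the face model’s vertices are expressed in the marker’s own coordinate frame, and that the camera intrinsics `fx`, `fy`, `cx`, `cy` are known from calibration. All function names here are hypothetical.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def to_camera_frame(R, t, p):
    """Move a point from the marker's frame into the camera's frame: R*p + t."""
    r = mat_vec(R, p)
    return [r[i] + t[i] for i in range(3)]


def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def place_face(R, t, vertices, fx, fy, cx, cy):
    """Project every face-model vertex into the real camera's image."""
    return [project(to_camera_frame(R, t, v), fx, fy, cx, cy) for v in vertices]


# Headset facing the camera 2 m away, no rotation; illustrative intrinsics.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(place_face(identity, [0, 0, 2], [[0, 0, 0], [0.1, 0, 0]],
                 1000, 1000, 640, 360))
```

Because the face model is rigidly tied to the marker, one tracked pose per video frame is enough to place every vertex, which is what removes the manual alignment step.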

Google’s Vive is modified with SMI eye tracking hardware, which lets the company track the player’s gaze and blinks. Google uses that data to replicate your eye expressions on the 3D face model built from the scan.

The final step involves compositing the various views to produce a mixed reality video that includes the player’s face, and the results are impressive. Instead of watching a video of a person inside a black HMD, you get a video of a person playing a game in what looks like a transparent headset. Google calls it the “scuba mask effect.”

We already believed that mixed reality VR would help catapult VR into the mainstream. Google’s Headset Removal technique only reinforces that belief.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

bit_user Yeah, it looks about as creepy as I expected. Perhaps the next iteration will use the mouth expression to deform the eyes, so you don't have people smiling with a blank stare. Perhaps they might also find a way to use the eye trackers to estimate the upper facial expression, and use that to deform the image.

Anyway, I think it's good for brief, distant shots in promo videos. If I were producing content with this system, I'd definitely minimize closeups and frontal shots of more than a second or so.
