Eye Tracking, Foveated Rendering, And SMI’s Quest For VR Domination

It’s still the early days of VR, and although Oculus and HTC seem entrenched already as the major VR hardware makers, there’s much left unsettled. To wit: Processing power for VR is at a premium, and some technologies are still largely missing from many VR HMDs, such as eye tracking. SensoMotoric Instruments (SMI) has a plan for that.

Foveated Rendering

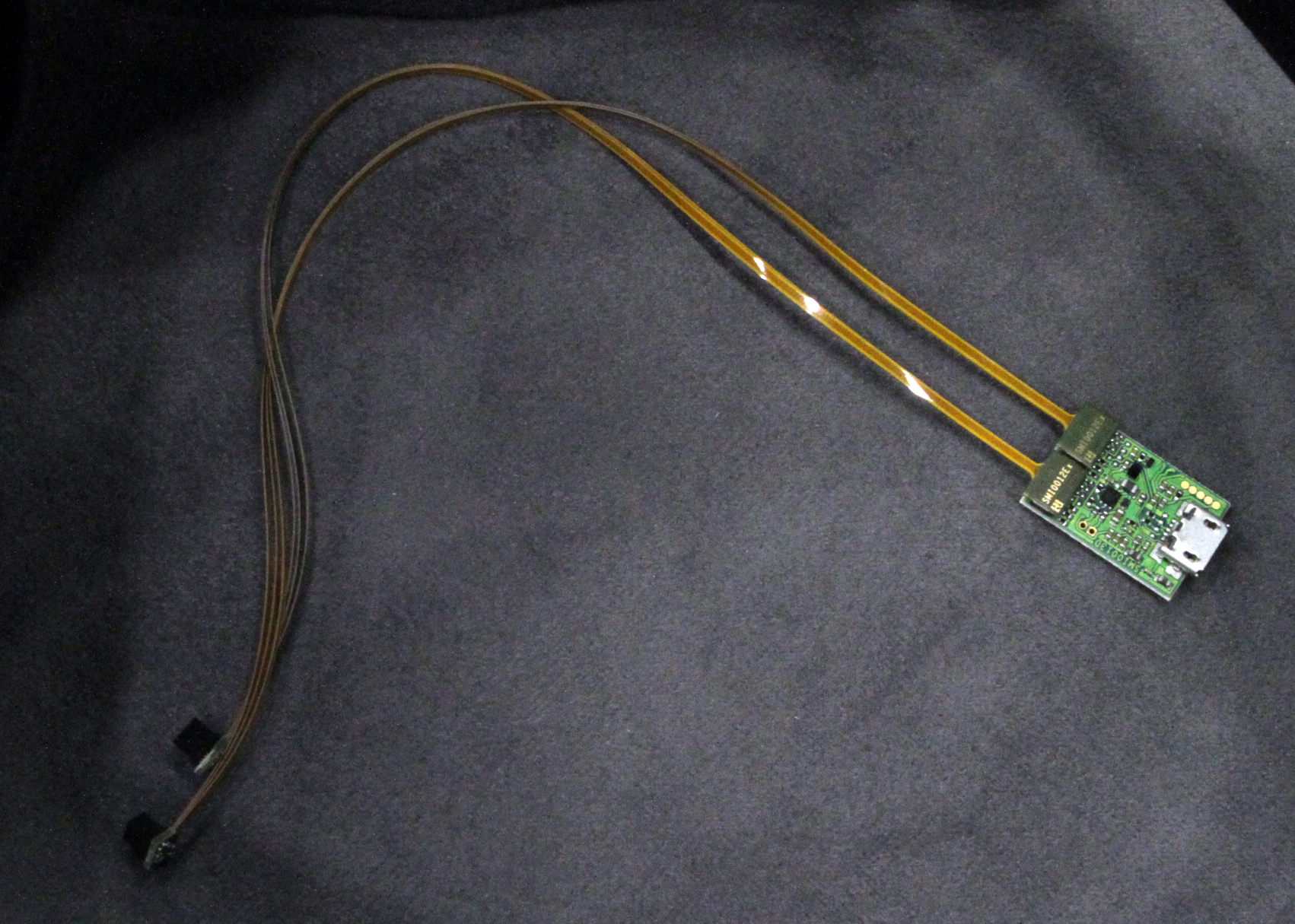

SMI has developed a solution that offers foveated rendering in essentially any HMD. It uses eye tracking to accomplish this feat, and the eye tracking is performed by a pair of tiny cameras attached to a minute PCB, all of which is embedded in a VR HMD.

Simply put, foveated rendering saves processing resources by concentrating on the part of a VR scene you are actually looking at. Essentially, it renders in full only the small area where your eye is focusing at any given time, and it renders the rest of your field of view at reduced quality.

It makes sense, right? If you aren’t looking at a certain area of a VR experience, why render it and waste precious GPU resources? But it’s easier said than done.

In order for foveated rendering to work, the system has to know where you’re looking and also adjust accordingly. Eyeballs can move quickly, so you need an eye-tracking solution that can read those movements fast enough and tell the system to adjust, and the system, then, has to perform the adjustment. All of this has to happen in the blink of an eye, as it were.

Rapid Eye Tracking: Undefeatable

There are some companies working on eye tracking for VR HMDs, including QiVARI and Fove, but SMI’s version was impressive for a couple of reasons: For one thing, I couldn’t defeat the foveated rendering no matter how hard I tried. For another, the cameras and assembly are designed such that they could fit into almost any HMD, mobile or not, and the whole thing costs less than $10.

The cameras look straight through the HMD’s lenses, resulting in what SMI called full field of view, and it works with all types of lenses. There are two circles on the display to show the foveated rendering (for demo purposes only). The inner circle is rendered at 100%, the outer circle is at 60%, and everything beyond that is rendered at just 20%. SMI claimed the module can deliver the image at 250 Hz.
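To make the two-circle scheme concrete, here is a minimal sketch of how a renderer might pick a quality tier from the distance between a pixel and the current gaze point. The 100%, 60%, and 20% tiers and the 250 Hz rate come from the demo as described; the function names and the radii are hypothetical, since SMI did not disclose the circle sizes or how its software is structured.

```python
import math

# Quality tiers as described in the demo: 100% inside the inner circle,
# 60% inside the outer circle, 20% everywhere else.
INNER_QUALITY = 1.0
OUTER_QUALITY = 0.6
PERIPHERY_QUALITY = 0.2


def render_quality(pixel, gaze, inner_radius, outer_radius):
    """Return the quality tier for a pixel given the current gaze point.

    pixel and gaze are (x, y) positions in screen pixels. The radii are
    hypothetical values in pixels; the article does not state them.
    """
    distance = math.hypot(pixel[0] - gaze[0], pixel[1] - gaze[1])
    if distance <= inner_radius:
        return INNER_QUALITY
    if distance <= outer_radius:
        return OUTER_QUALITY
    return PERIPHERY_QUALITY


def sample_interval_ms(rate_hz):
    """Time between consecutive eye-tracker samples, in milliseconds."""
    return 1000.0 / rate_hz
```

At the claimed 250 Hz, `sample_interval_ms(250)` works out to 4 ms between gaze samples, which gives a sense of how often the gaze point fed into something like `render_quality` could be refreshed.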


When I tried the two demos SMI had running, of course the first thing I tried to do was catch the foveated rendering in the act. One demo used an Oculus Rift DK2, and the other used a Gear VR.

Using the Rift, I went through the quick calibration protocol (you look at a red square three times, and it takes about as long to perform as it does to read this sentence). Then I entered a virtual room, kind of European and rustic-looking, with a staircase and a black and white tile floor. I started darting my eyes around as quickly as possible, and I even shook my head from side to side to try to spot the unrendered (or under-rendered) area. It was to no avail; the system was too fast for me.

The Gear VR has the same quick calibration exercise. The demo SMI showed us consisted of three games, one of which was a whack-a-mole game (that I’m proud to say I conquered in significantly less time than our Editor-in-Chief, Fritz Nelson, did). You simply look at wherever you want your hammer to drop (there’s a small cursor that lets you know where you’re looking), and you press the trigger on a small handheld controller to execute. In another game, you look at boxes and shoot at them such that they fly up into the air; your task is to keep shooting and sort of juggle them.

In both, I was surprised at how fast the eye tracking worked. It was almost as if my eyes and the tracking system were moving faster than my brain could process what was happening. (Go ahead, insert joke here. It’s okay, I’ll wait. All done? Great, let’s proceed.)

In the final game in the Gear VR demo, you’re in a dark room, and wherever you look is where the "flashlight" illuminates. In this case, the "cursor" is a narrow beam of light (it’s a clever way to dress up the eye tracking cursor), and you can engage with items by looking at them and clicking a button on the controller. Here, you can interact with most of the objects in the room, including the crackling fireplace. You can actually fan the flames, and the poetry was too great for me to resist doing so.

To put it colloquially, the eye tracking works lickety-split. As I experienced in the QiVARI demo I tried at CES, I would have actually liked to dial down the resolution somewhat in order to gain a bit more control.

The Catch-22

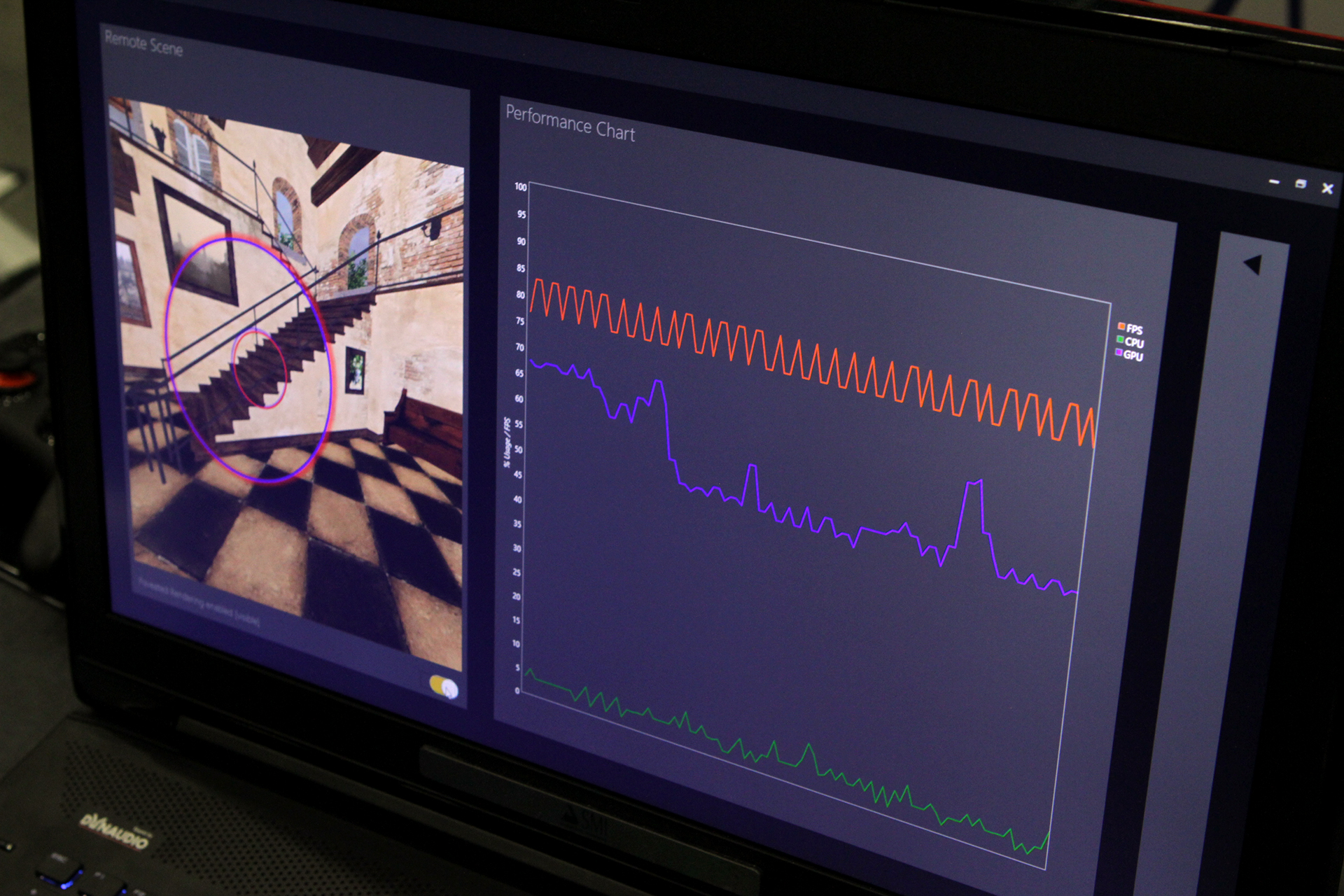

SMI is well aware of the catch-22 of these demos: If the foveated rendering functions properly, you can’t tell it’s working. Therefore, in terms of what you actually see, the demo would offer the same experience with or without foveated rendering engaged. Although SMI didn’t have any way to show the effects of foveated rendering on the Gear VR demos, the team did have the Rift demo running a simple CPU/GPU usage and FPS meter.

With the Rift running the demo I described above, the CPU and GPU were at a certain level. When the demonstrator flipped foveated rendering on, the GPU usage immediately dropped from about 70% usage to 50%. He turned it on and off several times, and the results were about the same -- and instantaneous -- every time.
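For a sense of scale, the drop can be expressed as a relative reduction in GPU load. This is only back-of-the-envelope arithmetic on the approximate figures read off the demo's usage meter, and the helper name is made up for illustration.

```python
def relative_reduction(before_pct, after_pct):
    """Fraction of the original load that was saved."""
    return (before_pct - after_pct) / before_pct


# Approximate meter readings from the Rift demo: about 70% before, 50% after.
saving = relative_reduction(70, 50)
print(f"{saving:.1%}")
```

In other words, a drop of 20 percentage points from a 70% baseline is a bit more than a quarter of the original GPU load.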

For now, we suppose we’ll just have to take their word for it on the Gear VR; SMI said that the difference in CPU/GPU usage on a mobile phone with foveated rendering is far more stark than on a PC that can push tons of processing power no matter what the experience demands. (That’s why the FPS didn’t change in the Rift demo. However, the point is that the system didn’t have to work as hard to deliver the same FPS.)

Not Another HMD

To be clear, SMI’s aim is not to make yet another HMD. This is partially why the team showed its eye tracking on existing HMDs instead of using its own prototype/proof of concept mockup. No, SMI wants to get into other companies’ HMDs. (Except for OSVR, which apparently doesn’t have enough space for the tiny cameras and module to fit.)

The value proposition is certainly there. Neither the Rift nor the Vive has eye tracking or foveated rendering, and this add-on would increase the cost ($600 and $800, respectively) by just a few dollars. Further, although SMI doesn’t seem to be too keen on the idea, this inexpensive stereoscopic camera module would be a strong potential pairing with an inexpensive Google Cardboard HMD, as the whole package could cost as little as $30.

It's also potentially ideal for AltSpace VR, a company with which SMI has been working. In AltSpace VR, you have an avatar in a social VR space. With eye tracking, you could actually gaze into the virtual eyes of another person's avatar, creating a more powerful experience.


Seth Colaner is the News Director for Tom's Hardware. Follow him on Twitter @SethColaner.



LuxZg No way to show the difference? Why not use a secondary display: one person uses VR, and another would (should) see the quality difference, because they can focus on parts of the scene without being limited by the tracker.

But anyway, good stuff, should help with performance, but also has other uses as already explained in the article.

quilciri Maybe we'll finally get a good Superman game out of this, where you can shoot things with heat vision :)

none12345 This is one of those *absolutely mandatory* technologies for VR to be acceptable on modern or near future GPU hardware, with acceptable screen resolutions (8k+ per eye) and acceptable frame rates (min 90 per eye).

However, if someone has a breakthrough in GPUs that increases their processing power by about 50-100x (enough to render dual 16k screens at full quality at >90 Hz rates) then you don't need this technology. Because that's what it's going to take to render full field of view for each eye without a screen door effect, and without nausea at modern polygon/effect counts. It will take more than that if we ever want real world quality images at those resolutions and frame rates.

amplexis Exciting news. You mention the hardware could be added to almost any HMD, but isn't there a need for a software component? Will each game developer need to modify existing code for this device, or is there a driver that would compensate no matter the content?

Pat Flynn This is pretty much like comparing gaming with or without V-Sync in terms of GPU loading (let's ignore screen tearing, just look at the performance metrics). Most game titles that already run very high framerates on a high end GPU will be able to reduce your power and heat output by turning on V-Sync. This seems like it'll do the same thing, but as an added bonus, boost framerates due to reduced rendering on the peripheral vision. Ingenious!

Bloob (quoting amplexis): "Exciting news. You mention the hardware could be added to almost any HMD, but isn't there a need for a software component? Will each game developer need to modify existing code for this device, or is there a driver that would compensate no matter the content?"

Developers would certainly need to utilize this.

And this, for example, is what I've been arguing Oculus and HTC should have focused on for the first iterations of their HMDs instead of cameras, controllers or headphones.

scolaner (quoting another commenter): "can we upgrade the CV models of Rift or Vive with this technology?"

Well, you can't really buy this as a standalone product, and you probably will never be able to. SMI wants to show what it can do so that HMD makers will implement it in v.2 of their products.

But theoretically, HMD makers could add this to their respective HMDs fairly easily, according to SMI.