Movidius, Google Team Up To Leverage Deep Learning On Mobile Devices

Call it an evolution of spatial computing and positional tracking, sort of a next generation of what Google’s Project Tango hopes to accomplish. Or maybe it’s the next step in the evolution of Deep Learning. Really, it seems to be both, but in any case, Movidius and Google are teaming up to get the benefits of Deep Learning onto mobile devices.

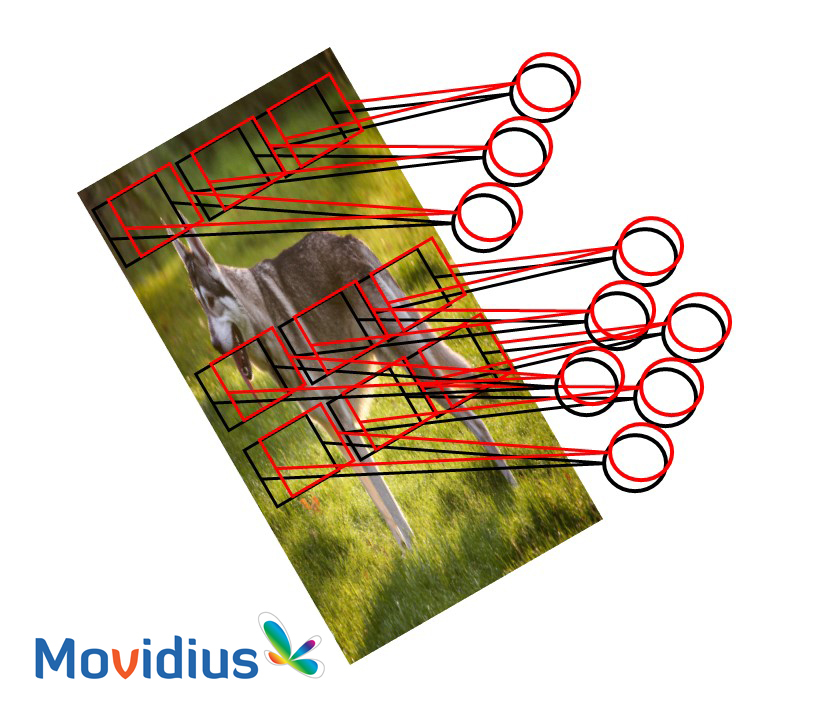

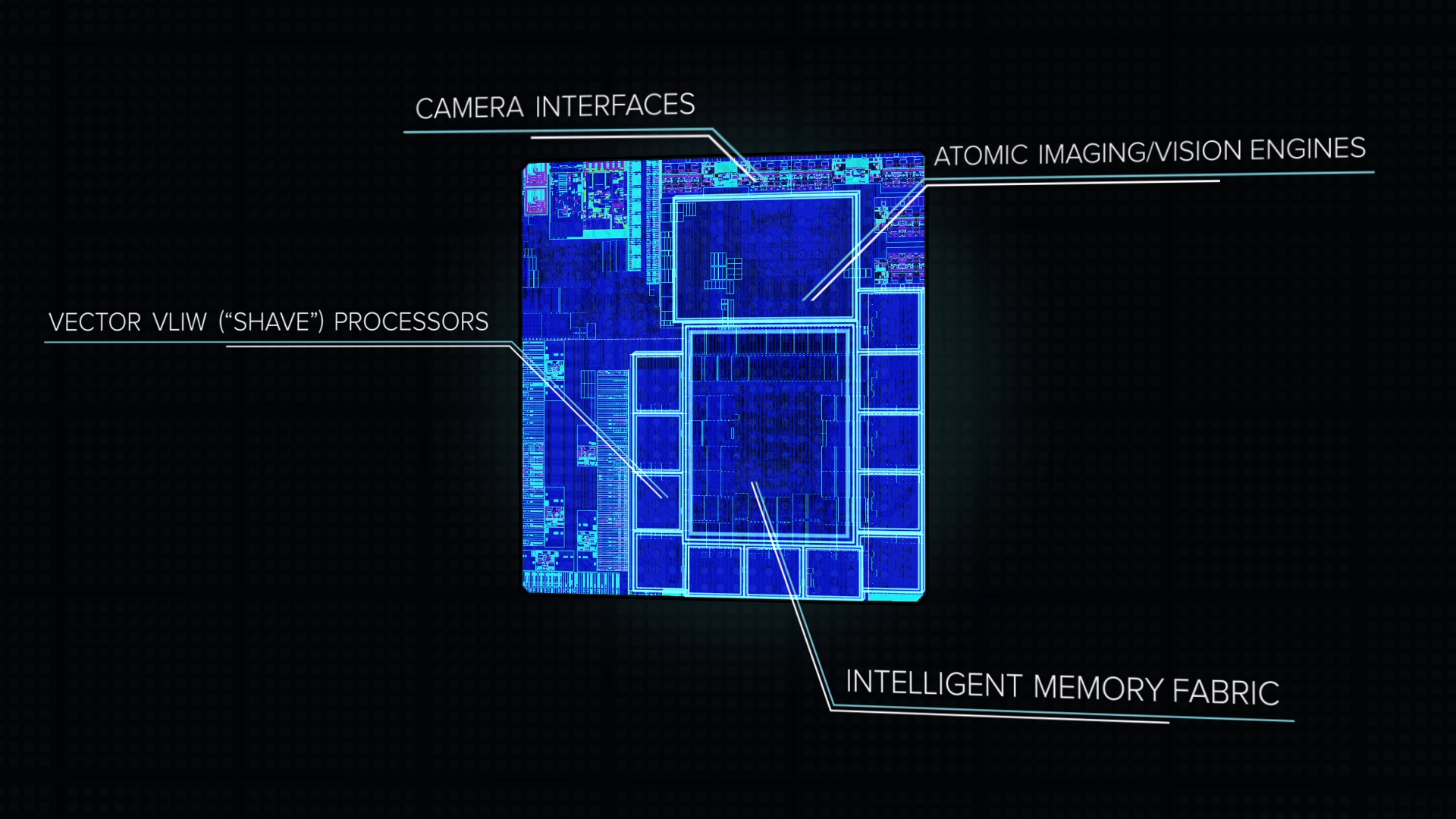

What Movidius brings to the table is efficient visual processing with the MA2450, its flagship Myriad 2 VPU. Google is licensing both the hardware and software. Using models born of Google’s Deep Learning databases, the Myriad 2 chip will ostensibly be able to apply what Deep Learning provides directly on the device, with no Internet connection.

This Goes On What Now?

Details on exactly which devices will use this technology are unclear; Movidius would not divulge any information on that front when we spoke with the company, saying only that it will be used on “next-gen” Google products. However, we can infer quite a bit from what Remi El-Ouazzane, CEO of Movidius, told us in an interview.

He described this new innovation as a next chapter in the story of Google and Movidius, and he said specifically that it’s a different chapter than Project Tango, although the two are complementary.

That’s not hard to understand: Project Tango is designed to offer spatial awareness and positional tracking, and we’re seeing that technology about to come to market via Intel and Lenovo. But if you’ve seen any of the Project Tango demos, you know that there’s more work to be done.

For example, you can aim the camera of your Project Tango phone at a couch, and it will intelligently (and dynamically, in real time) measure the couch’s dimensions. With Deep Learning on board, though, the device can “look” at the couch and understand that it is a couch, what color the couch is, who probably makes the couch, and so on.

That’s an example that doesn’t really do justice to the power of Deep Learning, but you get the idea: Deep Learning has to do with teaching machines to comprehend. “Positional tracking matters, but the ability -- with high accuracy -- to recognize and detect objects matters as well,” said El-Ouazzane.


A Sort Of Reversal Of The Cloud Trend

Data is such a fascinating beast. In recent years, more and more of it has been moved to the cloud, and indeed, that has allowed for technologies like Deep Learning to emerge. We have these massive databases, and we’ve been able to teach machines to leverage that data and learn.

However, that creates a problem, because it would seem that unless you’re connected to the Internet (and therefore can access those databases) and also have a supercomputer to process everything, you miss out on what Deep Learning offers.

The solution to that problem is already here, though, in the form of neural networks. Basically, you can train a model on one of those databases, load the trained model onto a device, and the device can use that neural network to understand what it “sees.” This happens locally, on the device itself, and it would appear that the Myriad 2 chip is sufficiently powerful and efficient to handle it.
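To make that idea concrete, here is a minimal sketch in Python of what "loading a model onto a device" amounts to. Everything in it is an assumption made for illustration: the `MODEL` dictionary, its labels, and its weights are invented stand-ins, not anything from Google or Movidius, and a real vision model would be vastly larger and would run on the VPU rather than in Python. The point is only that once the trained parameters are stored locally, classification is plain arithmetic that needs no network connection.

```python
import math

# Hypothetical, hand-picked parameters standing in for a model that was
# trained elsewhere (for example, in a data center) and then copied to
# the device. These values are illustrative only.
MODEL = {
    "labels": ["couch", "table"],
    "weights": [[0.9, -0.4, 0.2], [-0.6, 0.8, 0.1]],
    "biases": [0.0, 0.1],
}


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(model, features):
    """Run a single-layer classifier locally; no network access is involved."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + bias
        for row, bias in zip(model["weights"], model["biases"])
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return model["labels"][best], probs[best]


if __name__ == "__main__":
    # The feature vector is a made-up stand-in for what a camera
    # pipeline might extract from an image.
    label, confidence = classify(MODEL, [1.0, 0.0, 0.5])
    print(label, round(confidence, 3))
```

In this toy version, improving the device's "understanding" would mean replacing the stored weights with newly trained ones, which is why the question of how updated models reach the device matters.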

El-Ouazzane did not elaborate on how the device may take its acquired data and send it back to Google so it could be added to the database, but it must, somehow. Without that exchange of information, neither the neural net at large nor the model on-device could grow.

What we’re seeing here, to me, is a certain parity between the importance of the device and the importance of the cloud. They need each other. Therefore, Google and Movidius need each other.

We do not yet have any timeline for when we might see this technology on shipping devices, nor what sort of devices exactly those will be (our best guess is a dev tablet followed by a dev smartphone, just like Project Tango).

However, both key technologies -- Google’s machine learning databases and Movidius’ Myriad 2 VPU and software package -- already exist, so it’s likely that there’s already a prototype device on a workbench somewhere inside the halls of Google.

Seth Colaner is the News Director for Tom's Hardware. Follow him on Twitter @SethColaner.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.

-

bit_user:

"El-Ouazzane did not elaborate on how the device may take its acquired data and send it back to Google so it could be added to the database, but it must, somehow. Without that exchange of information, neither the neural net at large nor the model on-device could grow."

The communication will probably follow the existing model of Android updates. Google will probably collect data, as an independent activity, then train their models & improve their algorithms on their servers. The resulting code & data would be pushed out to devices, periodically.

Maybe Google will surprise us with some kind of distributed learning that harnesses the compute power and data from the devices to update their centralized model, but that's not how this sort of thing is usually done.

Of course, devices can learn things about their owner and the environment they inhabit, but I see that as a sort of user-specific customization and not something that would get propagated back to Google.

-

bit_user:

"What we’re seeing here, to me, is a certain parity between the importance of the device and the importance of the cloud. They need each other. Therefore, Google and Movidius need each other."

I'm not too sure about that. Qualcomm has the Zeroth engine in some of their new Snapdragon SoCs (the 820, possibly others). I'd bet they'll support Google's new deep learning APIs, just like how we're seeing both Intel and Lenovo support the Tango API, using different hardware. My guess is that Google is probably partnering with Movidius to foster an ecosystem, so that Qualcomm won't completely dominate the space.

As for complementing the cloud, I really don't see this as being any different than the GPU or many other built-in device functions. There are lots of things these devices need to do that just make more sense to be integrated into the device, if possible. Plus, doing this stuff in the cloud would eat bandwidth and require a substantial amount of processing power.

-

hst101rox:

It's interesting you have this article on Deep Learning today, but also on this topic is that Google's Deep Mind (Deep Learning?) beat a human player at the ancient Chinese board game called Go, previously thought impossible.

-

bit_user:

hst101rox said: "It's interesting you have this article on Deep Learning today, but also on this topic is that Google's Deep Mind (Deep Learning?) beat a human player at the ancient chinese board game called Go, previously thought impossible."

The article was prompted by Google/Movidius' announcement. That they made it today might have been coincidence, or possibly they chose to announce it now to benefit from the buzz around that event.

Speaking of which, where's the article about that?

-

hst101rox:

bit_user said: "Speaking of which, where's the article about that?"

https://www.washingtonpost.com/news/innovations/wp/2016/01/27/google-just-mastered-a-game-thats-vexed-scientists-for-decades/?tid=sm_fb

-

bit_user:

hst101rox said: https://www.washingtonpost.com/news/innovations/wp/2016/01/27/google-just-mastered-a-game-thats-vexed-scientists-for-decades/?tid=sm_fb

Thanks, but that was meant as a nudge for Tom's to get with it! ;)