AMD-optimized Stable Diffusion models achieve up to 3.3x performance boost on Ryzen and Radeon

Significant improvements for the RX 9070 series and Ryzen AI Max+ APUs.

Stability AI, the company behind Stable Diffusion, has released ONNX-optimized models that run up to 3.3x faster on compatible AMD hardware, including select Radeon GPUs and mobile Ryzen AI APUs.

Amuse is a platform developed by AMD and TensorStack AI that lets users generate images and short videos locally on AMD hardware. The latest release, Amuse 3.0, not only supports these updated models but also introduces a range of new features, including video diffusion, AI photo filters, and local text-to-image generation. Amuse 3.0 and the AMD-optimized models require either the Adrenalin 24.30.31.05 preview driver or the upcoming Adrenalin 25.4.1 mainline release.

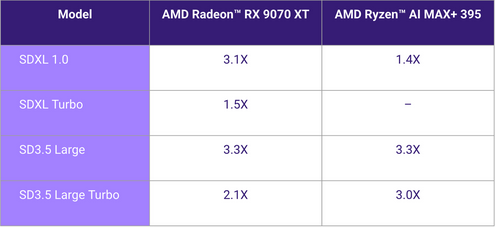

Over the past year, AMD has partnered with several OSVs, OEMs, and ISVs to optimize AI applications from the ground up through hardware optimizations, efficient drivers, compilers, and optimized ML models, among other enhancements. Building on this partnership, Stability AI has launched Radeon-optimized versions of its Stable Diffusion family, including Stable Diffusion 3.5 (SD3.5) and Stable Diffusion XL Turbo (SDXL Turbo). First-party figures show a 3.3x speedup for SD3.5 Large, 2.1x for SD3.5 Large Turbo, and 1.5x for SDXL Turbo compared with the base PyTorch implementations.
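To put these figures in perspective: an N-times speedup divides generation time by N, so it removes 1 - 1/N of the baseline time (roughly 70% for 3.3x, not 330%). The minimal Python sketch below applies the reported factors to a hypothetical 30-second-per-image baseline; that baseline is an assumption chosen for illustration, not a measured result, and the function names are our own.

```python
# Speedups over the base PyTorch implementations, as reported in the article.
REPORTED_SPEEDUPS = {
    "SD3.5 Large": 3.3,
    "SD3.5 Large Turbo": 2.1,
    "SDXL Turbo": 1.5,
}


def optimized_time(baseline_seconds: float, speedup: float) -> float:
    """Generation time after applying a speedup factor to a baseline time."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_seconds / speedup


def time_saved_fraction(speedup: float) -> float:
    """Fraction of the baseline generation time that the speedup eliminates."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    baseline = 30.0  # HYPOTHETICAL baseline of 30 s per image, not a benchmark
    for model, factor in REPORTED_SPEEDUPS.items():
        print(f"{model}: {optimized_time(baseline, factor):.1f} s "
              f"({time_saved_fraction(factor):.0%} less time)")
```

Under that assumed baseline, SD3.5 Large would drop to about 9.1 seconds per image, while SDXL Turbo's 1.5x gain trims a third of the time.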

Artificial intelligence is an integral part of our daily lives, but most of it runs in the cloud and requires an active internet connection. "AI PCs" are driving the design of modern processors, which feature dedicated hardware units to accelerate machine learning workloads. NPUs and specialized AI matrix cores, for example, allow smaller, more efficient AI models to run locally.

RDNA 4 features AMD's second-generation AI accelerators, which offer up to 4x the FP16 performance of RDNA 3 (with sparsity), rising to 8x with INT8 (again, with sparsity). These optimized models are not tied to a specific architecture, although AMD requires high-end GPUs for certain models, such as SDXL, SD3.5 Large, and the Turbo models. Ryzen AI APUs can use the built-in XDNA NPU in tandem with the Radeon iGPU. Here, Strix Halo (the Ryzen AI Max series) is the recommended choice thanks to its large memory pool and raw horsepower.

Stable Diffusion models optimized for AMD hardware carry the "_amdgpu" suffix and are now available for download on Hugging Face. With the necessary hardware, you can run these models right away in your preferred environment or in AMD's Amuse 3.0.
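Because the AMD-optimized variants are identified by the "_amdgpu" suffix, picking them out of a list of Hugging Face repository IDs is a simple name check. The sketch below assumes the suffix sits at the end of the model name in an "owner/name" repo ID; the function name and the example IDs are hypothetical placeholders, not real repositories, so check Hugging Face for the actual model names.

```python
from typing import Iterable, List

AMD_SUFFIX = "_amdgpu"  # suffix the article says marks AMD-optimized models


def amd_optimized(repo_ids: Iterable[str]) -> List[str]:
    """Return the repo IDs whose model name (after "owner/") ends with the suffix."""
    # Split off the owner so a suffix in the organization name is not matched.
    return [rid for rid in repo_ids if rid.rsplit("/", 1)[-1].endswith(AMD_SUFFIX)]


if __name__ == "__main__":
    # HYPOTHETICAL repo IDs for illustration only.
    candidates = [
        "example-org/sd-model-a",
        "example-org/sd-model-a_amdgpu",
        "example-org/sd-model-b_amdgpu",
    ]
    print(amd_optimized(candidates))
```

For a live list, the `huggingface_hub` package's `HfApi().list_models(search="_amdgpu")` can supply candidate IDs (via each result's `.id`); consult that library's documentation before relying on it.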



Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


jp7189 Given that this is a different model, I have my doubts about its compatibility with the existing ecosystem. Without that, these models are DOA.

That said, I couldn't find any statement one way or the other on the model cards.

jp7189

Jame5 said: Is it though?

Yes. Everywhere and in everything, long before the current marketing fad of sticking that label on every product. Also long before everyone took "AI" to mean LLMs specifically.

Google Search's predictive analytics (not the Gemini blurb) can be considered "AI" by today's very loose definition.

Mr Majestyk I hope Topaz Labs can incorporate this into its software, such as Gigapixel AI, for its generative AI models, especially Redefine, which is pathetically slow.

korbenxmbc A comparison with Nvidia GPUs would have been nice to get a sense of overall performance. How close does this bring AMD to Nvidia in this kind of workload? Is AMD now a real alternative, or is it still far behind?