AMD unveils industry-first Stable Diffusion 3.0 Medium AI model generator tailored for XDNA 2 NPUs — designed to run locally on Ryzen AI laptops

An offline image generator for XDNA 2 NPUs.

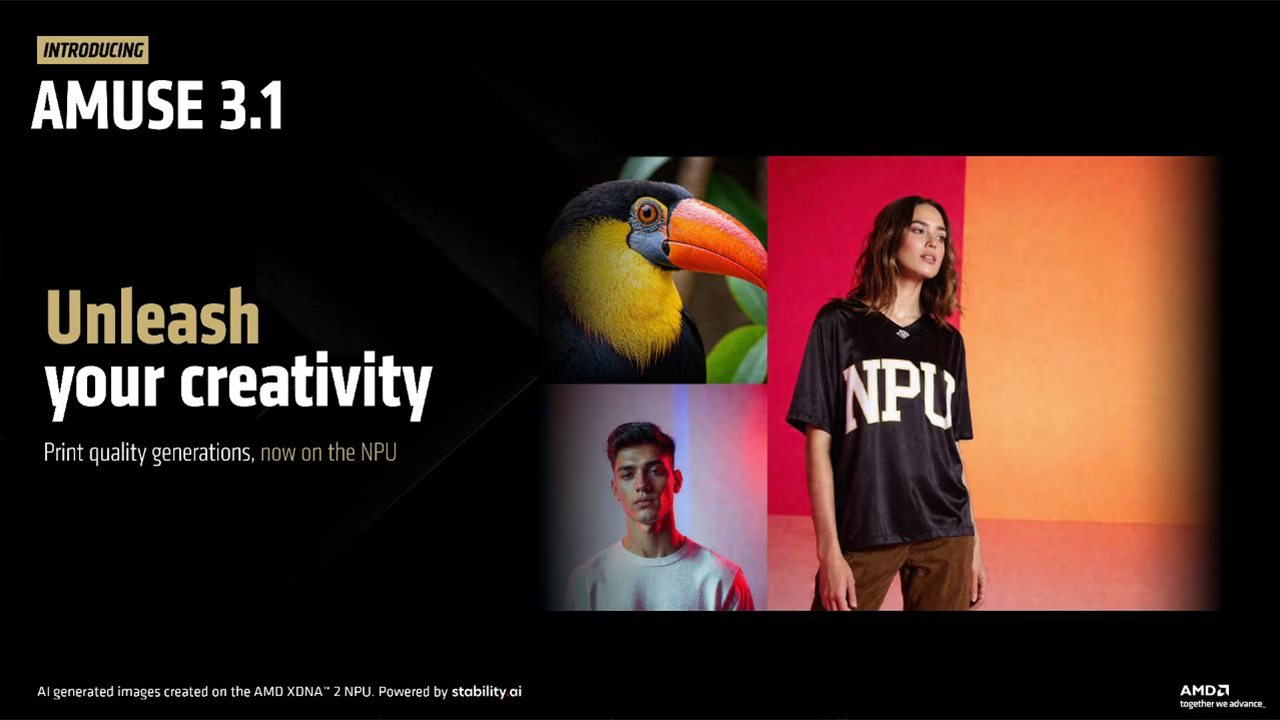

AMD, in collaboration with Stability AI, has unveiled the industry's first Stable Diffusion 3.0 Medium AI model tailored for the company's XDNA 2 NPUs, which process data in the BF16 format. The model is designed to run locally on AMD Ryzen AI laptops and is available now via Amuse 3.1.
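For readers unfamiliar with BF16 (bfloat16): it keeps float32's sign bit and full 8-bit exponent but only 7 of the 23 mantissa bits, so it offers the same dynamic range as float32 in half the storage, at the cost of precision. The Python sketch below only illustrates the number format by rounding a float32 to bfloat16 with round-to-nearest-even. It is not AMD's or Amuse's code, and real NPUs perform this conversion in hardware, possibly with different rounding and NaN handling.

```python
import math
import struct


def float32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision bit pattern of x as an int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bf16_bits(x: float) -> int:
    """Round x to bfloat16 (round-to-nearest, ties-to-even); return 16 bits.

    bfloat16 is the upper half of a float32 (sign, 8 exponent bits,
    7 mantissa bits) after rounding away the lower 16 bits.
    NaN and overflow handling are omitted to keep the sketch short.
    """
    bits = float32_bits(x)
    lsb = (bits >> 16) & 1          # lowest bit that survives truncation
    # Add just under half a bf16 ulp (0x7FFF), or exactly half (0x8000)
    # when the kept LSB is odd, so exact ties round to the even neighbor.
    rounded = bits + 0x7FFF + lsb
    return (rounded >> 16) & 0xFFFF


def bf16_bits_to_float(h: int) -> float:
    """Widen a bfloat16 bit pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", h << 16))[0]


if __name__ == "__main__":
    for value in (1.0, math.pi, 1e-3, 65504.0, 3.0e38):
        h = to_bf16_bits(value)
        print(f"{value!r:>22} -> 0x{h:04X} -> {bf16_bits_to_float(h)!r}")
```

Running it shows, for example, that pi survives only as 3.140625, while a value as large as 3.0e38 still fits: that range-over-precision trade-off is why BF16 is popular for neural network weights.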

The model is a BF16-optimized text-to-image generator based on Stable Diffusion 3.0 Medium, designed to run locally on machines with XDNA 2 NPUs. It is suited to generating customizable, stock-quality visuals that can be branded or tailored for design and marketing applications. The model interprets written prompts and produces 1024×1024 images, then uses a built-in NPU pipeline to upscale them to 2048×2048, resulting in 4MP outputs that AMD claims are suitable for print and professional use.
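The "4MP" figure follows from doubling each side of the image: 2048 × 2048 = 4,194,304 pixels, four times the 1,048,576 pixels of the 1024×1024 base image. The short Python check below is purely illustrative arithmetic; the `megapixels` helper is a hypothetical name, not part of Amuse or any AMD tool.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count in millions (1 MP = 1,000,000 pixels)."""
    return width * height / 1_000_000


base = (1024, 1024)      # native generation resolution, per the article
upscaled = (2048, 2048)  # resolution after the NPU upscaling pass

per_axis = upscaled[0] / base[0]                                  # 2x per side
pixel_ratio = (upscaled[0] * upscaled[1]) / (base[0] * base[1])  # 4x in total

print(f"{megapixels(*base):.2f} MP -> {megapixels(*upscaled):.2f} MP "
      f"({per_axis:.0f}x per side, {pixel_ratio:.0f}x the pixels)")
```

Note that the upscaler has to invent three out of every four output pixels, which is why the quality of the upscaling stage matters as much as the base model.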

The model requires a PC equipped with an AMD Ryzen AI 300-series or Ryzen AI MAX+ processor, an XDNA 2 NPU capable of at least 50 TOPS, and a minimum of 24GB of system RAM, as the model alone uses 9GB during generation.
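To put the 9GB figure in perspective: each weight costs 4 bytes in FP32, 2 in BF16, 1 in INT8, and half a byte in INT4. The sketch below is back-of-the-envelope arithmetic under a loudly stated assumption: it treats the entire 9GB as BF16 weights (ignoring activations and runtime buffers) to back out a hypothetical parameter count, then shows what that count would occupy in other formats and how much of the 24GB minimum is left over. None of these numbers are AMD's; only the 9GB and 24GB inputs come from the article.

```python
# Bytes needed to store one weight in each number format.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

MODEL_GB = 9.0     # memory AMD says the model uses during generation
MIN_RAM_GB = 24.0  # AMD's stated minimum system RAM


def weights_gb(num_params: float, fmt: str) -> float:
    """Storage for num_params weights in the given format, in decimal GB."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


# HYPOTHETICAL: assume all 9 GB is BF16 weights to back out a parameter
# count. Real usage also includes activations and runtime buffers, so the
# actual number of weights could well be smaller.
implied_params = MODEL_GB * 1e9 / BYTES_PER_PARAM["bf16"]

if __name__ == "__main__":
    print(f"implied parameters: {implied_params / 1e9:.1f} billion")
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt:>5}: {weights_gb(implied_params, fmt):5.2f} GB")
    print(f"RAM left for the OS and apps at the minimum: "
          f"{MIN_RAM_GB - MODEL_GB:.0f} GB")
```

Under this assumption, the same model would need 18GB in FP32 but only 4.5GB in INT8, which illustrates why the choice of BF16 weighs so heavily on the RAM requirement.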

The key advantage, of course, is that the model runs entirely on-device, enabling fast, offline image generation without Internet access or cloud services. It is aimed at content creators and designers who need customizable images, and it supports advanced prompting features for fine control over image composition. AMD even provides examples; a prompt to draw a toucan looks as follows:

"Close up, award-winning wildlife photography, vibrant and exotic face of a toucan against a black background, focusing on the colorful beak, vibrant color, best shot, 8k, photography, high res."

To use the model, users must install the latest AMD Adrenalin Edition drivers and the Amuse 3.1 Beta software from Tensorstack. Once both are installed, users should open Amuse, switch to EZ Mode, move the slider to HQ, and enable the 'XDNA 2 Stable Diffusion Offload' option.

Usage of the model is subject to the Stability AI Community License. The model is free for individuals and small businesses with less than $1 million in annual revenue, though licensing terms may change eventually. Keep in mind that Amuse is still in beta, so its stability or performance may vary.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

abufrejoval

With a 9GB model, you'd be far better off using a 12GB GPU: way faster and cheaper, even in a laptop.

Strix Halo is great technology... at a terrible price.

It's designed to be cheaper than a dGPU, built around a wider bus on commodity DRAM, but they charge HBM prices for it.

-Fran-

Ah, this is probably the enterprise version of that garbage they're trying to install in the driver package.

Regards.

usertests

> abufrejoval said:
>
> With a 9GB model, you'd be far better off using a 12GB GPU: way faster and cheaper, even in a laptop.
>
> Strix Halo is great technology... at a terrible price.

This isn't about Strix Halo; it's about XDNA2. That's included in Strix Point, Krackan, and even the newly announced Ryzen AI 5 330. So basic image generation capabilities using a 50 TOPS NPU will come to even sub-$400 laptops.

If it really needs 24 GB instead of 16 GB to run fast (or at all), that could be a problem in that segment, given there are many systems with soldered RAM. Hopefully all laptops with "AI" in the processor name will come with more than 12 GB, matching the Windows AI/Copilot requirement of 16 GB, but 24-32 GB could be rare. So you need one or two SODIMM slots to add more memory yourself.

dalek1234

> abufrejoval said:
>
> ...
>
> Strix Halo is great technology... at a terrible price.
>
> ...

Given the specs, it looks like it's targeting professionals who are willing to pay a premium for a premium product. Besides, you can charge a premium when there is no competition in this space. Intel is asleep, and Nvidia doesn't have an x86 license.

For the rest of us, a Strix Halo with the same GPU, half the CPU cores, and 32-64 GB of RAM would make more sense. Supposedly those SKUs are in the pipeline. I read today that some Chinese outfit is bringing those variants to the desktop.

usertests

> dalek1234 said:
>
> Given the specs, it looks like it's targeting professionals who are willing to pay a premium for a premium product. Besides, you can charge a premium when there is no competition in this space. Intel is asleep, and Nvidia doesn't have an x86 license.

The story is not about Strix Halo; it's about the XDNA2 NPU, which is also available in millions of Strix Point and Krackan laptops and mini PCs, all with around the same 50-55 TOPS of performance.

Maybe it can run faster on Strix Halo, though. I don't know whether the NPU itself benefits from the additional memory channels and bandwidth, or if it's only the iGPU that needs them. Running Stable Diffusion on Strix Halo's iGPU may be better.

DS426

> abufrejoval said:
>
> With a 9GB model, you'd be far better off using a 12GB GPU: way faster and cheaper, even in a laptop.
>
> Strix Halo is great technology... at a terrible price.
>
> It's designed to be cheaper than a dGPU, built around a wider bus on commodity DRAM, but they charge HBM prices for it.

Folks, it's easy to beat up anything with "AI" slapped on it, but let's step back a bit here.

Sure, Strix Halo is expensive, but I don't know that "faster and cheaper" always holds in the comparison being made here. As others have already pointed out, regular Strix comes in a lot lower, so the trade-off could be "cheaper and slower." Anyone doing serious AI inferencing should absolutely rely on a PC with a dGPU, ideally with 16 GB of VRAM or more, as that lets a lot more models run entirely in VRAM rather than being shared with system RAM, or at least keeps a greater portion of them in VRAM.

That said, the whole point of NPUs on laptops was to strike a balance: some level of decent AI inferencing performance while maintaining decent battery life. Fire up a dGPU to about 100% utilization on a laptop and battery life falls off a cliff. If it's more of a workstation laptop that typically stays on a charger, it's a different scenario.

I'm seeing laptops for about $600 on Amazon (ASUS, etc.) and elsewhere with a Ryzen AI 5 340 and 16 GB of RAM. Models with 32 GB of RAM are significantly pricier, which I agree is silly, but I think that's an OEM problem and not so much AMD's pricing. In any case, those prices should eventually come down as these laptops saturate the market.

Also realize there are smaller models; the purview of this article is this specific AI model, which recommends 32 GB of RAM. $600 and even cheaper laptops can run AI models for inferencing locally, and at much faster speeds than traditional laptops with faster CPUs but no NPUs. No, not everyone cares about this today, but the presumption is that its use will only grow as it comes of age, and the regret later on down the road would be not having an NPU.

abufrejoval

> usertests said:
>
> This isn't about Strix Halo; it's about XDNA2. That's included in Strix Point, Krackan, and even the newly announced Ryzen AI 5 330. So basic image generation capabilities using a 50 TOPS NPU will come to even sub-$400 laptops.
>
> If it really needs 24 GB instead of 16 GB to run fast (or at all), that could be a problem in that segment, given there are many systems with soldered RAM. Hopefully all laptops with "AI" in the processor name will come with more than 12 GB, matching the Windows AI/Copilot requirement of 16 GB, but 24-32 GB could be rare. So you need one or two SODIMM slots to add more memory yourself.

Thanks for the clarification!

But that makes me much more sceptical about the speed and quality of the results. I've spent far too many hours trying to get diffusion models to produce something I had in mind using an RTX 4090 to believe I could be productive with something so puny (yes, the real issue may be in front of the machine...): there is no secret weapon in those NPUs beyond tiling the workload, low precision, and all weights in SRAM caches. Their design reminds me of the Movidius chips, which are very much a DSP design aimed at tiny dense models for real-time audio and image enhancement, not generative AI models.

At a much larger scale it's how Cerebras works as well, but there we aren't talking €300 laptops.

I just cannot imagine the main model really running on the NPU; they must be using the GPU for the image generation and the NPU for upscaling. That would be a bespoke solution that may be little more than a one-off tech demo, with little chance of working on any other hardware, even their own a generation older or newer, because you'd have to redesign the workload split between such heterogeneous bits of hardware: there is no software stack to support that generically.

To me it has the whiff of marketing people desperately searching for a market for their product, and I can only advise you to verify the functionality you expect or need before committing money you care about.

abufrejoval

> abufrejoval said:
>
> there is no secret weapon in those NPUs beyond tiling the workload, low precision, and all weights in SRAM caches. Their design reminds me of the Movidius chips, which are very much a DSP design aimed at tiny dense models for real-time audio and image enhancement, not generative AI models.

A quick search revealed this document, a work sponsored by AMD, but comparing CPU vs. NPU efficiency and speeds.

Unfortunately, it doesn't detail data types, which matters because I'd be quite surprised if the NPU were actually used, or efficient, at BF16: that's already a very big format for holding significant numbers of weights in SRAM caches, and four- or eight-bit representations seem much more adequate, but perhaps AMD is using shared exponents here.

But once the NPU has to fetch those 9GB of weights from DRAM, there is just no way it would be significantly more efficient than a modern GPU: if it could be done, GPUs would do it, too.

abufrejoval

> dalek1234 said:
>
> Given the specs, it looks like it's targeting professionals who are willing to pay a premium for a premium product. Besides, you can charge a premium when there is no competition in this space. Intel is asleep, and Nvidia doesn't have an x86 license.

You don't need x86 to run diffusion models. Nvidia's Digits is all about proving that.

Unfortunately, it seems they aren't willing to also forgo Windows in a consumer environment.

> dalek1234 said:
>
> For the rest of us, a Strix Halo with the same GPU, half the CPU cores, and 32-64 GB of RAM would make more sense. Supposedly those SKUs are in the pipeline. I read today that some Chinese outfit is bringing those variants to the desktop.

Yeah, for the vast majority of consumers Strix Halo is too much CPU for the GPU that comes with it, so a single CCD makes for a more rounded consumer device, even if I'd obviously spend the extra €50 a second CCD would cost AMD to make.

I'd also spend €100 extra to go from 64GB to 128GB of RAM, but that's where the trouble is: AMD is adding zeros to those prices at the end where it hurts.

abufrejoval

> abufrejoval said:
>
> I just cannot imagine the main model really running on the NPU; they must be using the GPU for the image generation and the NPU for upscaling

The small print on the Amuse-ai website seems to confirm the assumption that only the upscaling runs on the NPU. A single slide in the blog post on AMD's website says that both stages run on the NPU, while the rest again claims "XDNA2 supersampling".

AMD might claim a 'clerical error' on that later, but if anyone happens to have a Strix Point device, perhaps they could check whether both stages run on the NPU: HWiNFO and Task Manager will tell.

I don't mind being wrong as much as I dislike being misled by 'creative' advertising.

In theory, I should even be able to test this on one of my Hawk Point/Phoenix APUs, since to my understanding the only difference between these generations is the number of tiles. But actually I understand next to nothing, because I can't find documentation. Perhaps I'll try that later, now that I have a desktop Hawk Point. On the Phoenix laptop I ran out of patience with the older, non-upscaling variant.

In any case, while diffusion models are widely used to implement upscaling, don't expect similar results from what NPUs can do: their ability will be more akin to the digital zoom on your smartphone camera, or to what a smart TV does to fill those 4K pixels with something.

And of course AMD isn't unveiling a "model generator", but a model that generates images...

Either Anton had a bad day or somebody else did the headline (I've heard that before).