Apple's New iOS Privacy Features: File System Native Encryption, Differential Privacy, On-Device Deep Learning

Over the past few years, Apple has become increasingly interested in adding privacy features to its products and services, both to protect users and as an effective competitive advantage. At its Worldwide Developers Conference (WWDC), Apple announced three new privacy features: file system-level encryption, on-device deep learning, and a statistical technique called “differential privacy,” meant to more effectively anonymize the data Apple collects from its users.

File System Native Encryption

At WWDC this year, Apple introduced its new file system, called APFS (Apple File System), which is meant to replace the nearly 20-year-old HFS+ file system with one optimized for flash storage and modern CPU architectures. Among its other modern features, APFS includes native encryption support.

Instead of relying on a separate full-disk encryption tool on macOS, such as FileVault or VeraCrypt (one of TrueCrypt’s successors), users can have the file system itself encrypt data on the disk. APFS offers several options: no encryption at all, single-key encryption, or multi-key encryption with per-file keys for file data and a separate key for sensitive file system metadata. iOS already had file-based encryption, but it will also benefit from the native encryption of the new file system.

APFS is still only available as a preview and may not ship by default in iOS or macOS until next year at the earliest, because the switch could cause unforeseen issues with third-party applications. Either Apple or the third-party developers will have to resolve those issues before Apple makes APFS the default in its operating systems.

Differential Privacy

After Google’s past few I/O events, and especially the most recent one, many have wondered whether Apple would have a response to Google’s increased focus on data analytics, machine learning, and AI-enhanced services. The issue is not just a technical one (whether Apple even has the capabilities to compete with Google in this area), but also a privacy one.

Because Apple tries to put its users’ privacy first, most of these cloud-based features conflict with that goal. Apple therefore needed a way to gather some user data without even the company itself being able to identify individual users.

Apple announced a technique called “differential privacy” that is supposed to solve this problem. With differential privacy, Apple gathers fragments of data from users and adds random noise to each fragment, so that the individual fragments are “scrambled.”
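
One textbook way to add noise to each fragment is randomized response: a device reports a yes/no bit truthfully only with some probability and flips it otherwise. The math below assumes that mechanism, a true bit x ∈ {0, 1}, a reported bit y, and a privacy parameter ε > 0; none of these details come from Apple's announcement, so treat it as an illustration of the general idea rather than Apple's actual mechanism.

```latex
\begin{aligned}
\Pr[y = x] &= \frac{e^{\varepsilon}}{1 + e^{\varepsilon}}, \qquad
\Pr[y \neq x] = \frac{1}{1 + e^{\varepsilon}} \\[4pt]
\frac{\Pr[y = 1 \mid x = 1]}{\Pr[y = 1 \mid x = 0]}
  &= \frac{e^{\varepsilon} / (1 + e^{\varepsilon})}{1 / (1 + e^{\varepsilon})} = e^{\varepsilon},
\qquad
\frac{\Pr[y = 0 \mid x = 1]}{\Pr[y = 0 \mid x = 0]} = e^{-\varepsilon}
\end{aligned}
```

Because neither ratio exceeds e^ε, seeing a single report changes the odds about a user's true answer by at most that factor, which is what ε-local differential privacy requires. A smaller ε means more flipped answers and stronger privacy for each individual.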


So far so good in terms of privacy, but how does Apple then use this data to improve its services? The technique still lets patterns and trends emerge from data gathered across large groups of people, rather than from any one individual, because the random noise largely cancels out when many reports are combined.
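
The aggregation step can be sketched with the same textbook randomized-response mechanism, which is an assumption here rather than a description of Apple's production system. Each simulated user flips their true answer with some probability, yet the population-wide fraction f can still be recovered: the expected observed rate is r = (1 − p) + f(2p − 1), where p is the probability of answering truthfully, so f can be solved for. The function names, the ε value, and the simulated population are all hypothetical.

```python
import math
import random


def randomized_response(true_bit, epsilon, rng):
    # Hypothetical client step: answer truthfully with probability
    # p = e^eps / (1 + e^eps), otherwise report the opposite bit.
    p = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return true_bit if rng.random() < p else not true_bit


def estimate_true_fraction(reports, epsilon):
    # Hypothetical server step: the observed "yes" rate is
    # r = p*f + (1 - p)*(1 - f); solve that equation for f.
    p = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    r = sum(reports) / len(reports)
    return (r - (1.0 - p)) / (2.0 * p - 1.0)


def simulate(num_users, true_fraction, epsilon, seed=0):
    # Each simulated user has the trait with probability true_fraction,
    # but only ever sends a noisy report.
    rng = random.Random(seed)
    reports = []
    for _ in range(num_users):
        has_trait = rng.random() < true_fraction
        reports.append(randomized_response(has_trait, epsilon, rng))
    return estimate_true_fraction(reports, epsilon)


if __name__ == "__main__":
    print(simulate(200_000, 0.30, 1.0))
```

With ε = 1, roughly 27% of individual answers are flipped, so no single report can be taken at face value, yet with a couple hundred thousand simulated users the estimate typically lands close to the true 30%.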

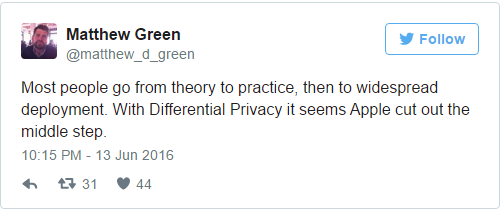

Until recently, differential privacy had mostly been confined to research, and now Apple wants to deploy it on a billion devices. This has some experts worried, including Johns Hopkins cryptography professor Matthew Green.

Unless the theory behind differential privacy turns out to be fundamentally wrong, or Apple's system proves useless in practice, it is likely a significant improvement over the way Google, other companies, and government agencies “anonymize” user data: by first collecting all of the users’ data and only then stripping identifying information, such as names and addresses, from their profiles.

Even after that information is stripped away, it’s often trivial to re-identify most users by correlating the remaining information about them. The current approach to “data anonymization” therefore already seems hopelessly broken. Differential privacy looks like a step forward for user privacy, at least until even better methods, such as homomorphic encryption, become practical for more services.
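
The correlation problem can be illustrated with a simple linkage attack: a dataset with names removed is joined against a public dataset that still has names, using the fields both happen to share. All records, field names, and the choice of ZIP code, birth date, and sex as the shared "quasi-identifiers" below are hypothetical, chosen only to show the mechanics.

```python
# Hypothetical "anonymized" records: names stripped, other attributes kept.
anonymized_health = [
    {"zip": "02138", "birth_date": "1961-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1975-02-14", "sex": "M", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1990-05-05", "sex": "F", "diagnosis": "migraine"},
]

# Hypothetical public dataset (for example, a voter roll) that does include names.
public_roll = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1961-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_date": "1975-02-14", "sex": "M"},
    {"name": "Carol Example", "zip": "02139", "birth_date": "1980-01-01", "sex": "F"},
]

# Fields present in both datasets that, combined, often single out one person.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link(anonymized, public):
    # Index the named dataset by its quasi-identifier values.
    index = {}
    for person in public:
        key = tuple(person[q] for q in QUASI_IDENTIFIERS)
        index.setdefault(key, []).append(person["name"])

    # Any anonymized record whose quasi-identifiers match exactly one
    # named person is re-identified, along with its sensitive field.
    matches = []
    for record in anonymized:
        key = tuple(record[q] for q in QUASI_IDENTIFIERS)
        names = index.get(key, [])
        if len(names) == 1:
            matches.append((names[0], record["diagnosis"]))
    return matches


if __name__ == "__main__":
    print(link(anonymized_health, public_roll))
```

No name ever appears in the "anonymized" data, yet two of the three diagnoses are tied back to named people, which is the weakness that collect-first-then-strip anonymization runs into.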

However, whether it’s differential privacy, homomorphic encryption, or something else, these are still “privacy solutions” to the “data collection problem.” Not collecting the data in the first place remains the cheapest and easiest way to protect users against privacy violations and data breaches. This brings us to Apple’s third privacy announcement: on-device deep learning.

On-Device Deep Learning

As we’ve discussed before, we may be only a few short years away from deep learning accelerators embedded in our smartphones. However, Apple could ship one as early as this year with the next iPhone (one of the benefits of controlling both the software and the hardware of its iPhones). Apple could probably enable on-device deep learning on older phones as well by taking advantage of their powerful mobile GPUs, although that won’t be as efficient as using a custom accelerator.

On-device deep learning will never be as good as cloud-based deep learning for the “training” phase, in which a model learns its parameters from large amounts of data, but it could get “good enough” for most types of services in the “inference” phase, which simply runs an already-trained model on new input. Because the computation happens locally, on-device inference may actually be more efficient (and faster) than constantly sending requests back and forth between the device and a company’s servers over a (perhaps congested) wireless network.
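
The difference in cost between the two phases shows up even in a toy model. The sketch below uses a tiny logistic-regression classifier (a deliberate simplification, not a deep network and not Apple's code); the function names, learning rate, and the two-input AND dataset are hypothetical. Training loops over the data many times to adjust the parameters, while inference is a single pass with the parameters fixed.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    # Inference: one forward pass using already-learned parameters.
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(samples, labels, epochs=1000, lr=0.5):
    # Training: many passes over the data, nudging the parameters by
    # stochastic gradient descent on the logistic loss.
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


if __name__ == "__main__":
    # Hypothetical toy task: learn logical AND of two inputs.
    data = [[0, 0], [0, 1], [1, 0], [1, 1]]
    targets = [0, 0, 0, 1]
    w, b = train(data, targets)
    for point in data:
        print(point, round(predict(w, b, point), 3))
```

Here `train` performs thousands of parameter updates, while each `predict` call is a handful of multiply-adds. Real deep networks scale both phases up enormously, but the asymmetry is the same one that makes inference, rather than training, the realistic fit for a phone.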

However, the biggest benefit of on-device deep learning is a privacy one. Allowing Google's or Facebook's “AI” to analyze all of your messages or photos means that Google and Facebook also have access to all of that data, as well as anyone who may hack their servers or any government requesting that data with or without your knowledge.

At WWDC, Apple introduced an updated Photos app that analyzes the content of images locally, either to group them by context (such as people you photograph frequently) or to let users search for a word and see only the matching images (for example, searching for “mountains” shows only pictures of mountains).
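
Once an on-device model has tagged each photo, a keyword search like the “mountains” example can be answered entirely from a local index, with nothing sent to a server. The sketch below assumes a hypothetical classifier has already produced a list of labels per photo (hard-coded here); the file names, labels, and helper functions are illustrative and do not describe how Apple's Photos app is actually built.

```python
from collections import defaultdict

# Hypothetical output of an on-device image classifier: photo -> labels.
local_labels = {
    "IMG_0001.jpg": ["mountains", "snow", "sky"],
    "IMG_0002.jpg": ["beach", "sky"],
    "IMG_0003.jpg": ["mountains", "lake"],
}


def build_index(labels_by_photo):
    # Inverted index: label -> set of photos carrying that label.
    index = defaultdict(set)
    for photo, labels in labels_by_photo.items():
        for label in labels:
            index[label.lower()].add(photo)
    return index


def search(index, query):
    # Case-insensitive lookup; .get() avoids inserting empty entries
    # into the defaultdict for unknown queries.
    return sorted(index.get(query.lower(), set()))


if __name__ == "__main__":
    idx = build_index(local_labels)
    print(search(idx, "Mountains"))
```

Both the labels and the index live only on the device, so the search works without the photos or their contents ever leaving the phone.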

In the future, Apple could extend this kind of on-device deep learning to other apps and services, just as Google and other companies do in the cloud, but with the benefit of true privacy, since the data stays on users’ own devices. That data can also be encrypted natively with the upcoming file system. For data that Apple absolutely must access to provide certain services, it can use differential privacy to better “anonymize” that data.

Lucian Armasu is a Contributing Writer for Tom's Hardware. You can follow him at @lucian_armasu.


-

JakeWearingKhakis Kind of makes me wish I could go back in time to stop myself from joining certain websites, signing up for things online, etc. When the internet was "new" and I was young I didn't imagine it would be this intimate. I'm not putting on a foil hat, but damn. I feel like Cortana, Alexa, Facebook, and Google can tell us things about ourselves that we don't even remember, or want to remember, or even tell our "real" friends.

jordanl17 I have 74,000 photos.. mostly all are on photos.google.com. how does apple plan on scanning the faces locally, if all my photos are in the cloud? learn local then send that learned data to the cloud? might as well scan on the cloud? Apple, your iOS is great, best mobile OS. BUT soon people will need more power than what a phone can provide. Calm the F down about privacy, you are shooting yourself in the foot.

AnimeMania It sounds like Apple might get privacy right if it does the Deep Learning on the device and sends an anonymous summary to the cloud to be data mined. They should also do a type of encryption where files in a particular location (folder) are easily accessed without passwords on the device, but are heavily encrypted if they are transferred off the device. I need to know that pictures of my "junk" won't fall into the wrong hands.

jordanl17 Deep learning will require CPU/battery. That is the issue.


-

ledhead11 Privacy issues are like gaming GPUs. Give it a few years and they're out of date. I think it's great that Apple is spearheading this, but only fools will believe it's anything more than a band-aid for a sinking Titanic (re: our privacy). For every encryption there's a way to crack. For 'noise' we've already spent decades researching tech to re-sample/recover data.

ledhead11 'Deep Learning' = the new tech catchphrase of 2016. Cool stuff, but it's already spreading in so many different directions there's no way that Apple or any one global corp can counter it entirely for security purposes. The very nature is that you can use it for attacks, not just defense.