Adversarial Turtle? AI Image Recognition Flaw Is Troubling

Artificial intelligence is all the rage these days. Technology companies are hiring talent straight out of universities before students even finish their degrees in the hope of becoming a frontrunner in what many see as the inevitable future of technology. However, these machines are not exactly faultless, as showcased by a flaw found in Google's own AI system.

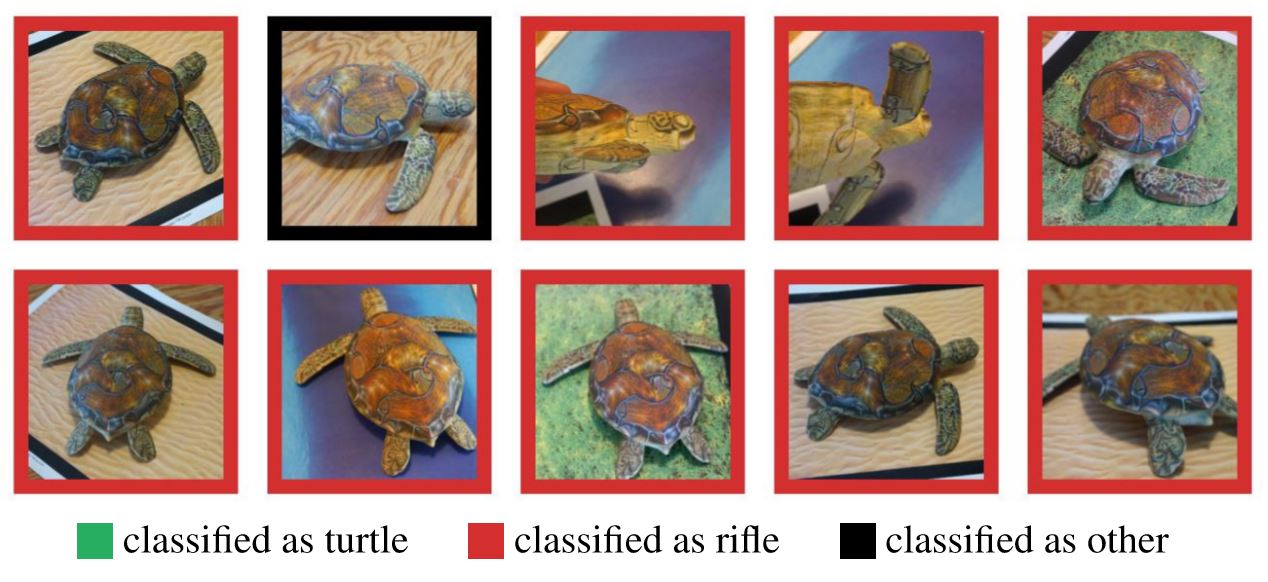

The image you're looking at above is obviously a turtle; so why does Google's AI register it as a gun? Researchers from MIT achieved this trick using adversarial images, which are images purposely designed to fool image recognition software through the use of special patterns. Those patterns confuse an AI system into declaring that it’s seeing something completely different.
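To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy classifier. Everything in it is an assumption made for illustration: the four-pixel "image", the weights, the labels, and the helper names are invented, and it is not Google's model or the MIT team's code. It only shows how a small, targeted change to every pixel can flip a classifier's answer.

```python
# Toy illustration only: a two-class linear "classifier" over a 4-pixel image.
# The weights, labels, and pixel values are made up for this sketch.
LABELS = ["turtle", "rifle"]
WEIGHTS = [
    [1.0, -1.0, 1.0, -1.0],   # per-pixel weights for the "turtle" score
    [-1.0, 1.0, -1.0, 1.0],   # per-pixel weights for the "rifle" score
]

def scores(image):
    """Return one score per label (higher means more confident)."""
    return [sum(w * p for w, p in zip(row, image)) for row in WEIGHTS]

def classify(image):
    """Return the label with the highest score."""
    s = scores(image)
    return LABELS[s.index(max(s))]

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb_toward(image, target, epsilon):
    """Shift every pixel by at most epsilon in the direction that favors target."""
    t = LABELS.index(target)
    other = 1 - t
    # For a linear model, the gradient of (target score - other score)
    # with respect to pixel i is simply the difference of the two weights.
    grad = [WEIGHTS[t][i] - WEIGHTS[other][i] for i in range(len(image))]
    return [p + epsilon * sign(g) for p, g in zip(image, grad)]

original = [0.6, 0.4, 0.6, 0.4]
perturbed = perturb_toward(original, "rifle", epsilon=0.15)

print(classify(original))   # label for the clean image
print(classify(perturbed))  # label after the small perturbation
```

No pixel moves by more than 0.15, yet the label changes. Real image classifiers are far larger and nonlinear, but the same principle of following the model's own gradient applies.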

It's alarming that Google's image recognition AI can be tricked into believing a 3D-printed turtle is a rifle. Why? Well, if artificial intelligence progresses to where the industry sees it going, with uses ranging from self-driving cars to even protecting human beings, an error may lead to severe consequences. For example, an autonomous car relies on machine intelligence, but if it mistakes the sidewalk for part of the road, pedestrians could be seriously injured, as you can imagine.

“In this work, we definitively show that adversarial examples pose a real threat in the physical world. We propose a general-purpose algorithm for reliably constructing adversarial examples robust over any chosen distribution of transformations, and we demonstrate the efficacy of this algorithm in both the 2D and 3D case,” MIT researchers stated. “We succeed in producing physical-world 3D adversarial objects that are robust over a large, realistic distribution of 3D viewpoints, proving that the algorithm produces adversarial three-dimensional objects that are adversarial in the physical world.”
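The core idea in that quote, optimizing a perturbation over a whole distribution of transformations instead of a single view, can be sketched on a toy model. This is an illustration under stated assumptions, not the researchers' implementation: the linear classifier, the four-pixel image, the brightness and noise ranges, and all function names are invented here. Because the toy model is linear, the result matches a plain sign step; the point is the structure of the loop (sample views, average the objective, step, clip).

```python
import random

# Toy sketch only: the weights, image, and transformation ranges are invented.
# MARGIN_WEIGHTS[i] says how much pixel i pushes a made-up linear classifier
# toward "rifle" (positive) or "turtle" (negative).
MARGIN_WEIGHTS = [-2.0, 2.0, -2.0, 2.0]

def sample_view(rng):
    """One random viewing condition: a brightness scale plus per-pixel noise."""
    scale = rng.uniform(0.8, 1.2)
    noise = [rng.uniform(-0.05, 0.05) for _ in MARGIN_WEIGHTS]
    return scale, noise

def margin(image, view):
    """Rifle-minus-turtle score of the image as seen through one view."""
    scale, noise = view
    seen = [scale * p + n for p, n in zip(image, noise)]
    return sum(w * p for w, p in zip(MARGIN_WEIGHTS, seen))

def mean_margin(image, delta, views):
    """Average margin of the perturbed image over a batch of views."""
    perturbed = [p + d for p, d in zip(image, delta)]
    return sum(margin(perturbed, v) for v in views) / len(views)

def robust_perturbation(image, epsilon, steps=10, step_size=0.05, batch=16, seed=0):
    """Ascend the average margin over sampled views, keeping |delta_i| <= epsilon."""
    rng = random.Random(seed)
    delta = [0.0] * len(image)
    h = 1e-4  # finite-difference step used to estimate the gradient
    for _ in range(steps):
        views = [sample_view(rng) for _ in range(batch)]
        grads = []
        for i in range(len(delta)):
            up = delta[:i] + [delta[i] + h] + delta[i + 1:]
            down = delta[:i] + [delta[i] - h] + delta[i + 1:]
            grads.append((mean_margin(image, up, views)
                          - mean_margin(image, down, views)) / (2 * h))
        for i, g in enumerate(grads):
            step = step_size if g > 0 else -step_size
            delta[i] = max(-epsilon, min(epsilon, delta[i] + step))
    return delta

def fooled_fraction(image, views):
    """Share of views in which the classifier prefers "rifle"."""
    return sum(margin(image, v) > 0 for v in views) / len(views)

image = [0.6, 0.4, 0.6, 0.4]
delta = robust_perturbation(image, epsilon=0.2)
adversarial = [p + d for p, d in zip(image, delta)]

test_rng = random.Random(1)
test_views = [sample_view(test_rng) for _ in range(200)]
print(fooled_fraction(image, test_views), fooled_fraction(adversarial, test_views))
```

In this toy setup the clean image is never read as a rifle under the sampled views, while the perturbed one is read as a rifle under all of them, which is the kind of robustness across viewpoints the researchers describe for their 3D objects.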

Google and Facebook are fighting back, however. The tech giants have released their own research that indicates they're looking into MIT’s adversarial image technique to discover methods of securing their AI systems.

Society as a whole may be ready to put its faith in AI because of the progress that has been made in the field, but trusting AI completely over human eyes is a troubling thought when you take this MIT study into account.

“This work shows that adversarial examples pose a practical concern to neural network-based image classifiers,” they concluded.

Zak Islam is a freelance writer focusing on security, networking, and general computing. His work also appears at Digital Trends and Tom's Guide.

LORD_ORION Clearly the result of an AI being in a liberal gun control echo chamber...

J/K... sorry I couldn't help myself.

alextheblue They're on to me! Now I'll have to scuttle the entire 3D-printed Turtle GunRobot army! Maybe I'll use Penguins next time...

Oh crap, my GunRobot design plans were deleted from Google Docs. Also they removed everything I've ever posted on YouTube with the words "stock" or "bump".

bit_user "For example, an autonomous car relies on machine intelligence"

Self-driving cars tend to use multiple algorithms in parallel, each using different fundamental approaches. It seems unlikely that you could fool them all (especially without access to the source). And if you did, it would probably also fool humans, or at least look bizarre enough to attract attention.

lorfa Sure, but what's going on with Turtle #4 from the left in the top row? Its leg is coming out of its head..

bo cephas lorfa said: "Sure, but what's going on with Turtle #4 from the left in the top row? Its leg is coming out of its head.."

You are looking at the underside of the turtle. It is flying away from you, banking to the left.

Interpretation of images is an issue for people, too.

bit_user Thinking about it some more... it seems clever to use an object with some natural camouflage in order to hide the features that activate the classifier. For instance, in most of the turtle pictures, you can see what looks like a finger on a trigger, or the trigger and part of the stock.

I have to wonder how well this can work if you don't use camouflage, which is just borrowing from a technique nature uses to do basically the same thing (i.e. fool a visual classifier). It seems like a pretty big crutch, since you could basically just be on the lookout for anything camouflaged that seems out-of-place. I'll bet it wouldn't be hard to build a classifier for that, which you could then use to reject other objects found within the camouflaged region.