MIT's AI-Based Cybersecurity Solution Sounds Impressive, But Prevention-Based Solutions May Still Prevail

MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), along with the AI startup PatternEx, has created a hybrid solution that can catch 85 percent of the attacks on a network by combining AI with human input. The method is up to ten times more effective than other purely AI-based solutions on the market right now, but it could still prove inadequate in the real world of data breaches.

Some of the newest anti-malware solutions, especially in the enterprise (but also in some consumer products), use machine learning to catch malware or data breaches in progress. MIT CSAIL’s and PatternEx’s solution, called AI2 (because it uses both “artificial intelligence” and “analyst intuition”), seems to be anywhere from 3x to 10x better than other solutions, while producing five times fewer false positives.

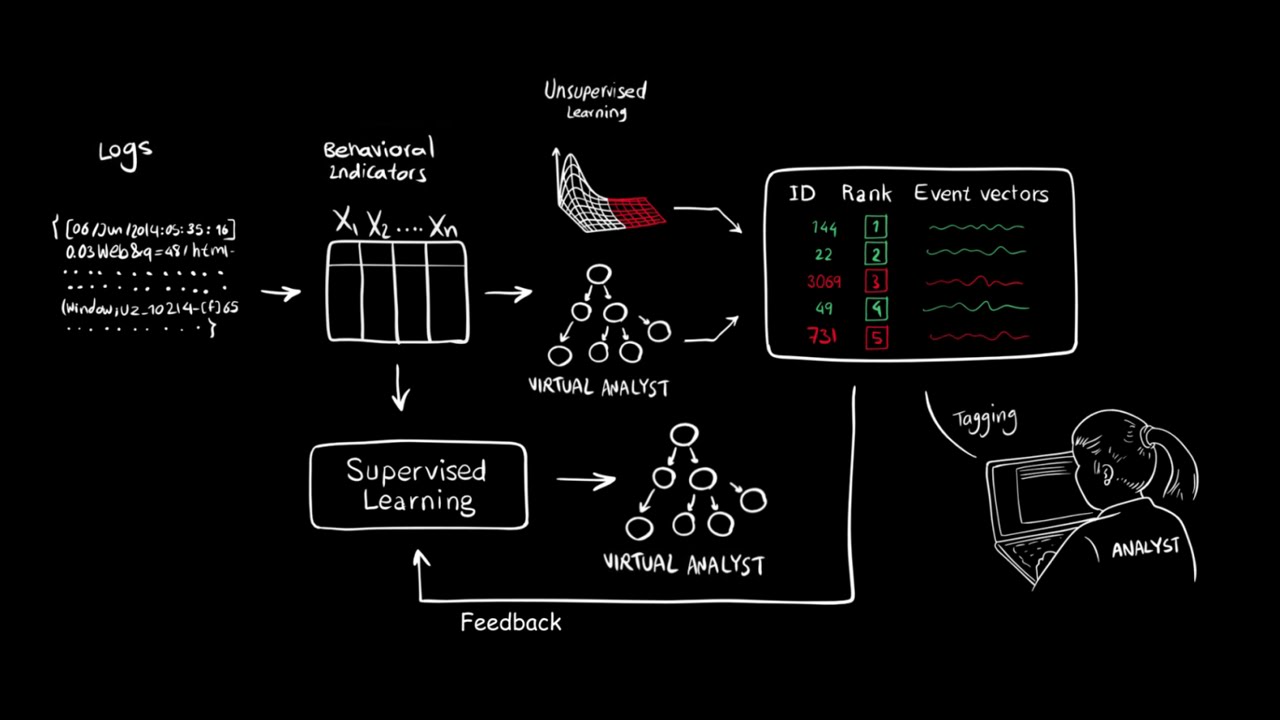

The AI2 system was fed 3.6 billion “log lines” generated by millions of users over a period of three months. The AI first combs through the logs without supervision, using unsupervised machine learning to flag suspicious patterns, which are then shown to human analysts. The analysts confirm which events are actual attacks on the network, and that feedback is used to further train the AI.
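The loop described above (unsupervised detection, analyst review, retraining) resembles what is usually called active learning. Below is a minimal Python sketch of that loop under loud assumptions: events are toy numeric feature vectors, the unsupervised step is a mean absolute z-score and the supervised step a nearest-centroid classifier (simple stand-ins, not the detectors AI2 actually uses), the human is simulated by a hypothetical `analyst` function, and `budget` plays the role of the number of events shown to analysts per round. None of these names come from MIT or PatternEx.

```python
import math
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def outlier_scores(events: List[Vector]) -> List[float]:
    """Unsupervised step (stand-in): mean absolute z-score of each event."""
    dims = len(events[0])
    means = [statistics.fmean([e[d] for e in events]) for d in range(dims)]
    stdevs = [statistics.pstdev([e[d] for e in events]) or 1.0 for d in range(dims)]
    return [sum(abs(e[d] - means[d]) / stdevs[d] for d in range(dims)) / dims
            for e in events]


def train_centroids(labeled: List[Tuple[Vector, bool]]) -> Dict[bool, List[float]]:
    """Supervised step (stand-in): average the vectors for each analyst verdict."""
    centroids: Dict[bool, List[float]] = {}
    for label in (True, False):
        group = [v for v, is_attack in labeled if is_attack == label]
        if group:
            centroids[label] = [statistics.fmean(col) for col in zip(*group)]
    return centroids


def attack_probability(centroids: Dict[bool, List[float]], event: Vector) -> float:
    """Score in [0, 1]; higher means closer to the 'attack' centroid."""
    if True not in centroids or False not in centroids:
        return 0.5  # not enough analyst feedback yet to tell the classes apart
    d_attack = math.dist(event, centroids[True])
    d_benign = math.dist(event, centroids[False])
    total = d_attack + d_benign
    return 0.5 if total == 0 else d_benign / total


def feedback_loop(events: List[Vector], analyst: Callable[[Vector], bool],
                  budget: int, rounds: int):
    """Each round: rank unreviewed events, show the top `budget`, retrain."""
    unsup = outlier_scores(events)
    max_unsup = max(unsup) or 1.0
    labeled: Dict[int, bool] = {}  # event index -> analyst verdict
    for _ in range(rounds):
        centroids = train_centroids([(events[i], y) for i, y in labeled.items()])
        # Equal weighting of the two scores is an assumption for this sketch.
        combined = [0.5 * unsup[i] / max_unsup + 0.5 * attack_probability(centroids, e)
                    for i, e in enumerate(events)]
        candidates = sorted((i for i in range(len(events)) if i not in labeled),
                            key=lambda i: combined[i], reverse=True)
        for i in candidates[:budget]:
            labeled[i] = analyst(events[i])  # the human-in-the-loop step
    # Final model trained on every verdict collected so far.
    return labeled, train_centroids([(events[i], y) for i, y in labeled.items()])


# Toy run: four ordinary events and two obvious outliers.
events = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2), (8.0, 9.0), (9.0, 8.5)]
labeled, model = feedback_loop(events, analyst=lambda e: e[0] > 5, budget=2, rounds=2)
```

In the toy run, the first round relies only on the unsupervised score, so the two outliers reach the (simulated) analyst first; once both attack and benign verdicts exist, the supervised score starts to influence the ranking.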

“You can think about the system as a virtual analyst,” said CSAIL research scientist Kalyan Veeramachaneni, who developed AI2 with Ignacio Arnaldo, a chief data scientist at PatternEx and a former CSAIL postdoc. “It continuously generates new models that it can refine in as little as a few hours, meaning it can improve its detection rates significantly and rapidly,” he added.

Challenges Remain

Although this solution may seem like some kind of breakthrough that could soon end all data breaches, in reality it still presents quite a few challenges.

Currently, the system shows 200 events per day to the human analyst. The researchers said that after a few days of training, this would drop to 30-40 events per day. That still seems like a lot of events for a human to review and classify as attacks or benign activity.

For someone whose job is to review “abnormal events” every day, such as an analyst in PayPal’s fraud detection division, this solution could prove useful. However, it may not suit most companies, which are likely to want a more automated anti-malware tool.


A second challenge is that whoever monitors the events presented by the AI2 system would have to be a security expert able to identify whether something is actually an attack. Otherwise, the analysts overseeing the AI2 system may mislabel events or overlook real attacks when giving the system feedback.

The Problem With AI-Based Cybersecurity Solutions

Although the AI2 system has an 85 percent detection rate, that still means roughly 15 percent of attacks would go undetected, which is by no means insignificant. And that figure applies before more sophisticated attackers have even learned that such a system exists. Once they know about it, and know to check whether their target uses it, they may find new ways to bypass it, which would decrease its effectiveness.
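As a rough illustration (the attack count below is hypothetical, not a figure from the study), an 85 percent detection rate applied to 1,000 attacks leaves this many unflagged:

```latex
\text{undetected attacks} = (1 - 0.85) \times 1000 = 150
```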

To reach that effectiveness, the AI2 system had to be trained on 3.6 billion log lines. As we saw with Google’s AlphaGo AI, which learned from some 30 million positions taken from human expert games before facing Lee Sedol, AI-based solutions need to be trained on significant amounts of data to become effective. Without that data, their effectiveness would be dramatically reduced.

The AI2 system may work against a malicious bot that tries to attack multiple networks at the same time, because the system would have time to learn and adapt. However, it may not be able to protect a network from more sophisticated attackers using zero-day exploits. The AI2 system wouldn’t have enough information about that sort of attack, which means the attack may never be surfaced to the human analysts, either.

Prevention, Not Detection, As The Real Solution

Ultimately, these sorts of systems are about detecting breaches, not preventing them. To use a real-world analogy, it is like a burglar alarm that goes off only after the thief has already gone through every room and picked out what to steal. The ideal solution would be to prevent the thief from getting into your house in the first place.

All detection-based solutions, including those powered by advanced AI, can only learn that a hacker got in after the fact (if at all), and the detection may come only after the damage has already been done.

Prevention-based solutions that rely on the principle of least privilege, virtualization, and other techniques that deny attackers complete control even after they get in would likely fare better at stopping massive data breaches in the real world.

Lucian Armasu is a Contributing Writer for Tom's Hardware. You can follow him at @lucian_armasu.
