AI Agents Can Learn New Physical Skills On Their Own

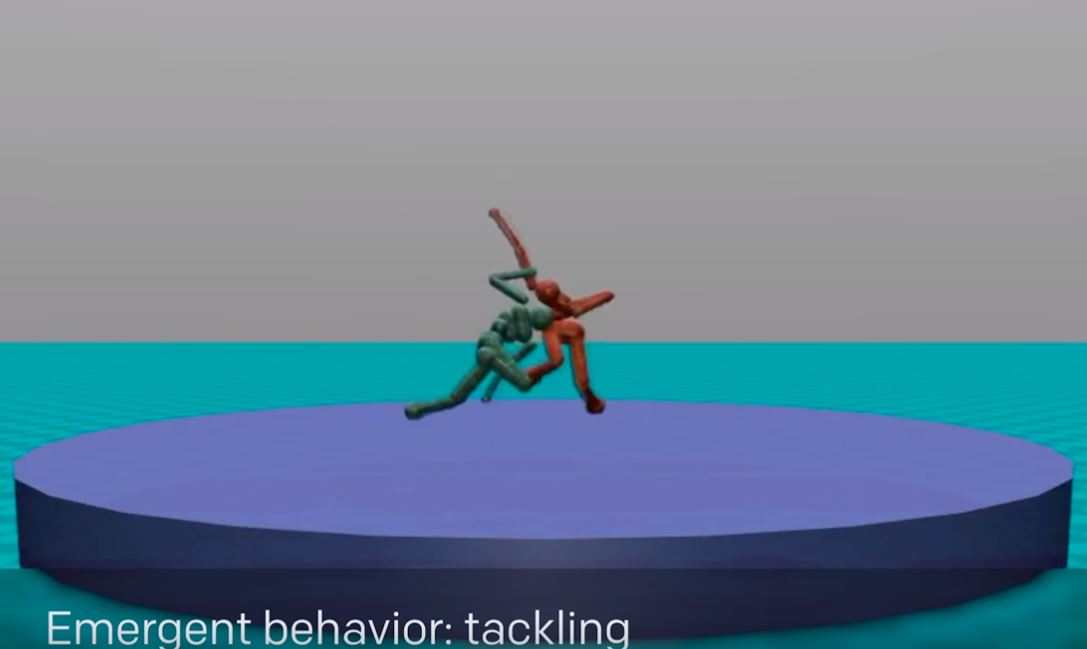

OpenAI, an AI research nonprofit founded by Elon Musk and a few others, has been experimenting with giving AI agents goals in a set of basic games and then letting them compete against each other to win. The team found that the agents learned physical skills such as tackling, ducking, faking, kicking, catching, and diving for the ball, all on their own.

Competitive Self-Play

OpenAI calls this type of training for AI agents “competitive self-play.” The nonprofit set up various competitions between simulated 3D robots and gave them goals such as pushing an opponent out of a sumo ring; reaching the other side of the ring while preventing the opponent from doing the same; and kicking a ball into the net while preventing the opponent from scoring.

The agents initially receive dense rewards for the simple acts of standing or moving forward. These rewards are gradually reduced to zero, until the agents are rewarded only for winning or losing the game they are playing. Each agent’s neural network policy is trained independently.
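The annealing schedule described above can be sketched as a single reward function. This is a minimal illustration under stated assumptions, not OpenAI's code: the linear decay, the hypothetical `anneal_steps` horizon, and the way the two terms are blended are choices made for clarity.

```python
def shaped_reward(dense_reward: float, outcome_reward: float,
                  step: int, anneal_steps: int) -> float:
    """Blend a dense shaping reward with a sparse win/lose reward.

    Assumed (hypothetical) schedule: the weight on the dense term falls
    linearly from 1 to 0 over `anneal_steps` training steps, after which
    only the game outcome contributes.
    """
    if anneal_steps <= 0:
        raise ValueError("anneal_steps must be positive")
    # Exploration weight: 1.0 at the start of training, 0.0 once annealed.
    alpha = max(0.0, 1.0 - step / anneal_steps)
    # Early on the dense term dominates; later only winning or losing counts.
    return alpha * dense_reward + (1.0 - alpha) * outcome_reward


# Usage: the same dense reward (e.g. for standing upright) is worth less
# and less as training progresses.
for step in (0, 50, 100, 150):
    print(step, shaped_reward(dense_reward=1.0, outcome_reward=0.0,
                              step=step, anneal_steps=100))
```

A linear schedule is only the simplest option; any decay that reaches zero would leave the agents optimizing purely for the game outcome in the end.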

In the sumo fighting game, the agents were first rewarded for exploring the ring, but eventually they received a reward only for pushing the opponent out of the ring. In a simple game such as this one, a virtual agent could also be explicitly “programmed” to do all of those things, but the code would be much more complex, and its success would depend largely on the designer getting every detail right.
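The final, outcome-only sumo reward is simple to state in code, which is part of the appeal: the designer specifies what winning means, not how to win. In this hypothetical sketch the ring is a circle centred at the origin, agents are reduced to (x, y) positions, and `RING_RADIUS` is an assumed value; OpenAI's actual environment checks its own conditions.

```python
import math

RING_RADIUS = 3.0  # hypothetical ring radius in simulator units


def out_of_ring(position, radius=RING_RADIUS):
    """Return True if an (x, y) position lies outside a ring centred at the origin."""
    return math.hypot(position[0], position[1]) > radius


def sumo_outcome_reward(agent_pos, opponent_pos, radius=RING_RADIUS):
    """Sparse reward: +1 for pushing the opponent out, -1 for being pushed out."""
    agent_out = out_of_ring(agent_pos, radius)
    opponent_out = out_of_ring(opponent_pos, radius)
    if opponent_out and not agent_out:
        return 1.0
    if agent_out and not opponent_out:
        return -1.0
    # Match still in progress, or both left the ring (treated here as a draw).
    return 0.0


print(sumo_outcome_reward((0.0, 0.0), (4.0, 0.0)))
```

Everything between the start of a match and this final check (balance, footwork, pushing) is left for the agents to discover.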

With self-play, however, the agents figured out how to achieve their objectives largely on their own, over thousands of matches against progressively improved versions of themselves. OpenAI used a similar strategy to train the Dota 2 bot it unveiled earlier this year, which beat top professional players in one-on-one matches.

Transfer Learning

The AI agents can not only master a certain game or environment but also transfer the knowledge and skills they gain in one game to another. To test this, the OpenAI team subjected two different AI agents, neither of which had experienced wind before, to simulated “wind” forces. One AI was trained to walk using classical reinforcement learning, while the other was trained via self-play in the sumo fighting game.

The first AI was knocked over by the wind, while the second one, using its skills to push against an opposing force, was able to resist the wind and remain standing. This showed that an AI agent could transfer its skills to other similar tasks and environments.


At first, the agents overfit by co-learning policies that were precisely tailored to one specific opponent, and they failed when facing an opponent with different characteristics. The OpenAI team solved this by pitting the agents against a variety of opponents. “Different” here means opponents using policies that were trained in parallel or policies from earlier in the training process.
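Training against a pool of earlier policies, as described above, can be sketched as follows. This is a hypothetical illustration, not OpenAI's released code: a "policy" is any Python object (for example, a dict of network weights), `min_fraction` is an assumed knob that restricts sampling to the more recent part of the history, and `train_against` is a placeholder for a real training update.

```python
import copy
import random


class OpponentPool:
    """Stores snapshots of an agent's past policies to use as training opponents.

    Sampling is uniform over the snapshots taken after `min_fraction` of the
    history, so the agent keeps facing a mix of older and newer behaviour
    instead of a single co-adapted rival.
    """

    def __init__(self, seed=None):
        self.snapshots = []
        self.rng = random.Random(seed)

    def add(self, policy):
        # Deep-copy so later training updates don't mutate stored opponents.
        self.snapshots.append(copy.deepcopy(policy))

    def sample(self, min_fraction=0.0):
        if not self.snapshots:
            raise ValueError("pool is empty; add the initial policy first")
        n = len(self.snapshots)
        # Oldest eligible snapshot; always leaves at least the newest one.
        start = min(int(min_fraction * n), n - 1)
        return self.rng.choice(self.snapshots[start:])


# Usage with a placeholder training step.
pool = OpponentPool(seed=0)
policy = {"version": 0}
pool.add(policy)
for step in range(1, 6):
    opponent = pool.sample(min_fraction=0.5)
    # train_against(policy, opponent)  # hypothetical update of `policy`
    policy["version"] = step
    pool.add(policy)
```

Policies trained in parallel could be added to the same pool; the key design point is that the current agent never trains exclusively against one fixed opponent.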

OpenAI is now increasingly confident that self-play will be a core part of powerful AI systems in the future. The group released the MuJoCo environments and trained policies used in this project so that others can run their own experiments with these systems. OpenAI also announced that it’s currently hiring researchers interested in working on self-play systems.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

Comments

derekullo Ironically, I would assume sumo wrestlers would be the worst at walking against a wind.

Sounds like a myth for the Mythbusters to test.

Spazzy Actually, this is freggin' awesome. They have basically created a computer that can think, at least on a basic level. In the video, the computers have to figure out how to deal with different challenges and manage to overcome them to win. Can you imagine playing a video game that learns how you fight?! As this technology scales up, can you imagine the possibilities beyond games? Quite frankly, it is mind-boggling, and perhaps a little worrisome.

turkey3_scratch (replying to derekullo)

I think that would largely depend on the ratio of surface area to mass. Going by the equation:

Force = mass * acceleration

If the force is constant, a larger mass, such as a sumo wrestler's, will experience a lower acceleration. So the wind should have less of an effect on a sumo wrestler.

There is more to it, though. Since sumo wrestlers generally have a larger mass, they usually also have a larger surface area. If surface area increases, the applied force will increase, since the force is the integral, or sum, of all the tiny pieces of wind force hitting every part of the body. So in reality, a sumo wrestler experiences an increase in both mass and force in the above equation.

However, I believe the relative increase in mass outweighs the relative increase in force, so acceleration has to decrease to keep the equation balanced, which is why sumo wrestlers should be better against the wind.

For example, someone may gain 25% more weight while their frontal surface area increases by only about 10%, since it really depends on how that additional mass is distributed around the body, and you have to take into account the three-dimensional shape of the various parts of the human body.
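Plugging the example numbers into Force = mass * acceleration makes the argument concrete. This assumes the wind force scales in direct proportion to frontal area, which is what the standard drag equation gives when air density, wind speed, and drag coefficient stay fixed; in reality a change in body shape would also change the drag coefficient.

```latex
\begin{aligned}
F_{\text{drag}} &= \tfrac{1}{2}\,\rho\,v^{2}\,C_{d}\,A \;\propto\; A
  && \text{(fixed } \rho,\ v,\ C_{d}\text{)} \\
a' &= \frac{F'}{m'} = \frac{1.10\,F}{1.25\,m} = 0.88\,\frac{F}{m} = 0.88\,a
\end{aligned}
```

Under those assumptions, the heavier person accelerates about 12% less in the same wind, which matches the conclusion that mass wins out over surface area.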

AgentLozen Maybe it's just a coincidence, but I was watching a movie called "The Matrix" earlier this week, and AI agents were learning new physical skills on their own in that too.

somebodyspecial Skynet in 3...2...1...ROFL. Seriously, Skynet in 10-20yrs tops, probably. Some idiot will let the genie out of the bottle in the name of science, thinking they can manage it, or it will just escape on its own. Look how fast AI etc. is jumping already. In 20yrs, graphics have gone from 3mil transistors to 12.5B! We are speeding up, it seems, as most of those came in the last 5-7yrs.