Google claims new AI training tech is 13 times faster and 10 times more power efficient — DeepMind's new JEST optimizes training data for impressive gains

Potentially great news for a power grid in fear of AI over-demand

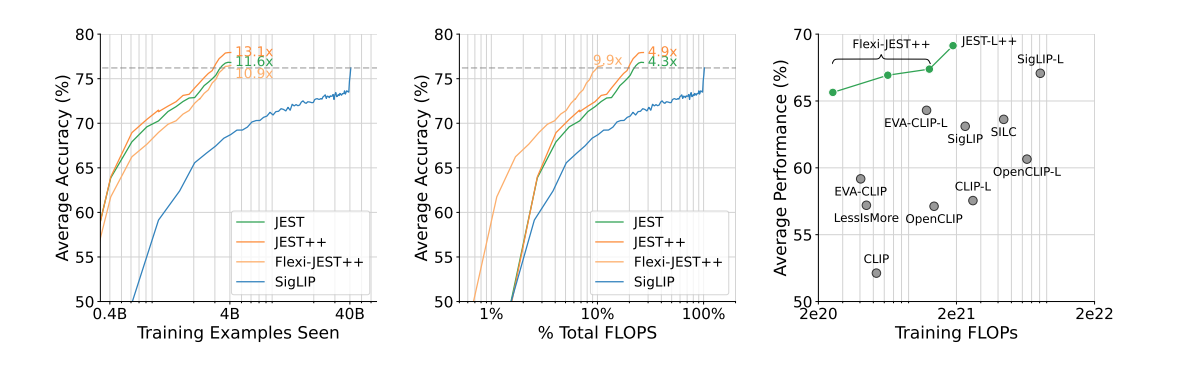

Google DeepMind, Google's AI research lab, has published new research on training AI models that claims to improve both training speed and energy efficiency by an order of magnitude, with 13 times faster training and ten times higher power efficiency than other methods. The new JEST training method arrives at a timely moment, as conversations about the environmental impact of AI data centers are heating up.

DeepMind's method, dubbed JEST or joint example selection, departs from traditional AI model training techniques in a simple way. Typical training methods focus on individual data points for training and learning, while JEST works with entire batches. The JEST method first creates a smaller AI model, trained on data from extremely high-quality sources, that grades data quality and ranks batches by quality. It then applies that grading to a larger, lower-quality dataset. The small JEST model determines which batches are most fit for training, and a large model is then trained on the batches the smaller model selects.
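To make the selection idea more concrete, here is a toy Python sketch. It is not DeepMind's code. It assumes a "learnability" score (the large learner model's loss minus the small reference model's loss) as our reading of the JEST paper, and for simplicity it ranks examples one at a time, whereas real JEST scores examples jointly as batches. The function names and sample loss values are hypothetical.

```python
"""Toy sketch of reference-model-guided data selection (not DeepMind's code).

Assumption: every candidate example already has a loss under the large
"learner" model and under a small "reference" model trained on curated data.
Each example is scored by learnability = learner_loss - reference_loss, and
the highest-scoring fraction of a larger super-batch is kept. Real JEST
scores examples jointly; this per-example version ignores that.
"""
from typing import List, Sequence


def learnability_scores(learner_losses: Sequence[float],
                        reference_losses: Sequence[float]) -> List[float]:
    # A high score means the learner still finds the example hard, while the
    # reference model (trained on curated data) finds it easy.
    if len(learner_losses) != len(reference_losses):
        raise ValueError("loss lists must have the same length")
    return [l - r for l, r in zip(learner_losses, reference_losses)]


def select_batch(learner_losses: Sequence[float],
                 reference_losses: Sequence[float],
                 keep_fraction: float) -> List[int]:
    """Return the indices of the examples to train on, best first."""
    scores = learnability_scores(learner_losses, reference_losses)
    k = max(1, int(len(scores) * keep_fraction))  # always keep at least one
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    learner = [2.0, 0.5, 3.0, 1.0, 2.6, 0.2]    # hypothetical learner losses
    reference = [0.5, 0.4, 2.9, 0.1, 1.0, 0.1]  # hypothetical reference losses
    print(select_batch(learner, reference, keep_fraction=0.5))  # [4, 0, 3]
```

With these sample losses, the sketch keeps examples 4, 0 and 3: the learner still finds them hard, but the curated reference model finds them easy. Example 2 is hard for both models, so it is treated as likely noise and dropped. This also shows why the approach depends so heavily on the reference model's curated data.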

The paper itself provides a more thorough explanation of the processes used in the study and the future of the research.

DeepMind researchers make it clear in their paper that this "ability to steer the data selection process towards the distribution of smaller, well-curated datasets" is essential to the success of the JEST method. And success is the right word for this research: DeepMind claims that "our approach surpasses state-of-the-art models with up to 13× fewer iterations and 10× less computation."

Of course, this system relies entirely on the quality of its training data, as the bootstrapping technique falls apart without a human-curated dataset of the highest possible quality. Nowhere is the mantra "garbage in, garbage out" truer than with this method, which attempts to "skip ahead" in its training process. This makes the JEST method much harder for hobbyists or amateur AI developers to replicate than most others, as expert-level research skills are likely required to curate the initial high-grade training data.

The JEST research comes not a moment too soon, as the tech industry and world governments are beginning discussions on artificial intelligence's extreme power demands. AI workloads took up about 4.3 GW in 2023, almost matching the annual power consumption of the nation of Cyprus. And things are definitely not slowing down: a single ChatGPT request costs 10x more than a Google search in power, and Arm's CEO estimates that AI will take up a quarter of the United States' power grid by 2030.
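The 4.3 GW figure describes a rate of power draw, while a country's annual consumption is an amount of energy, usually quoted in terawatt-hours (TWh). A quick Python conversion puts the two on the same footing. It rests on our assumption, not the article's, that the 4.3 GW is an average load sustained for a full 8,760-hour year.

```python
# Convert a sustained average power draw (GW) into yearly energy use (TWh).
# Assumption: the load is constant for the whole year (no leap day).
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def gw_to_twh_per_year(avg_gigawatts: float) -> float:
    """Energy in TWh used over one year by a constant load of avg_gigawatts."""
    gwh = avg_gigawatts * HOURS_PER_YEAR  # GW x hours = GWh
    return gwh / 1000.0                   # 1 TWh = 1,000 GWh


print(round(gw_to_twh_per_year(4.3), 1))  # 37.7
```

Under that assumption, 4.3 GW corresponds to roughly 37.7 TWh over a year. Any comparison with a country's annual consumption should be made in TWh, not GW.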

Whether and how JEST methods will be adopted by major players in the AI space remains to be seen. GPT-4o reportedly cost $100 million to train, and future larger models may soon hit the billion-dollar mark, so firms are likely hunting for ways to save money in this department. Optimists hope that JEST methods will be used to maintain current training productivity at much lower power draw, easing the costs of AI and helping the planet. However, much more likely is that the machine of capital will keep the pedal to the metal, using JEST methods to keep power draw at maximum for hyper-fast training output. Cost savings versus output scale: who will win?


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.

-

zsydeepsky

> Rickybaby said: Oh great. Let's see, it boils down to filtering out anything from the dataset that you don't agree with so you can train the models faster.

It amazes me that you somehow linked human behavior with LLM training methods for me. Not kidding.

It made me stop and think: maybe the reason most humans become stubborn and hard to move away from their biases once they "mature" is that the energy cost for the human brain to tune its "intelligence model" becomes way too expensive, so the brain simply stops training/tuning.

Maybe "ideology", boiled down, is just a fundamental flaw in human minds... or, even worse, a hard limit of the physical world. -

watzupken

There is always some way to speed things up, also known as shortcuts. But every decision involves tradeoffs. So yes, it may sound more efficient, but you may get undesirable effects such as worse AI hallucinations or responses. Google doesn't have a great reputation for its AI to begin with. -

bit_user

> The article said: a single ChatGPT request costs 10x more than a Google search in power

Huh. I'd have expected more like 100x. Anyway, nothing in the article suggests this new method will make the resulting models cheaper to run for inference, so that part will remain unchanged.

> The article said: much more likely is that the machine of capital will keep the pedal to the metal, using JEST methods to keep power draw at maximum for hyper-fast training output.

Yes, the tech industry pretty much always reinvests efficiency improvements into greater throughput, rather than net power savings. Basically, they will spend as much on training and AI development as they can afford to. -

bit_user

> usertests said: Would it be fair to call it a 130x performance/Watt improvement?

No. The 10x figure encompasses all computation. For it to be 130x, they would've had to say that it cuts the number of iterations to 1/13th and reduces the compute per iteration to 1/10th.

I think the reason it's not 1/13th for both is that it takes computation to train the small model and apply it to grade the training data for the main model. -
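The point about 13x versus 10x can be checked with simple arithmetic: total compute equals the number of iterations times the compute per iteration. Suppose both headline figures are measured against the same baseline (our assumption). Then 13x fewer iterations but only 10x less total compute implies that each JEST iteration costs about 1.3x as much as a baseline iteration. That would fit bit_user's explanation that scoring data with the small model adds overhead.

```python
from fractions import Fraction

# Headline figures quoted from the paper, relative to the same baseline (assumed).
iteration_ratio = Fraction(1, 13)  # JEST iterations / baseline iterations
compute_ratio = Fraction(1, 10)    # JEST total compute / baseline total compute

# Total compute = iterations x compute per iteration, so the implied
# cost of one JEST iteration relative to one baseline iteration is:
per_iteration_ratio = compute_ratio / iteration_ratio
print(per_iteration_ratio, float(per_iteration_ratio))  # 13/10 1.3

# The "130x" reading would require both savings to stack multiplicatively:
stacked = 1 / (iteration_ratio * compute_ratio)
print(stacked)  # 130
```

Exact fractions avoid floating-point rounding, so the implied 1.3x per-iteration overhead comes out exactly.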

bit_user

> Rickybaby said: Oh great. Let's see, it boils down to filtering out anything from the dataset that you don't agree with so you can train the models faster.

The idea of losing training samples is unsettling, but what it should actually be doing is better representing the diversity of inputs it needs to handle and reducing redundancy between them. If they reduced real diversity, then it shouldn't perform as well on at least some of their benchmarks.

> zsydeepsky said: I had to stop and think that maybe the reason why when human "matured" then most of them became stubborn and hard to move away from their bias...was because the energy cost for the human brain to tune its "intelligence model" became way too expensive, so the brain simply stopped training/tuning anymore.

It could have something to do with why you don't remember very much from when you were a small child. My uninformed belief is that neuroplasticity isn't free. The easier it is for you to learn new things, the easier it is for you to forget existing knowledge.

Evolution seems to have settled on an assumption that you can learn all of the fundamental skills you need to know, by the time you're an adult. After that, it's more important that you not forget them, than for you to easily be able to pick up new skills. -

zsydeepsky

> bit_user said: Evolution seems to have settled on an assumption that you can learn all of the fundamental skills you need to know, by the time you're an adult. After that, it's more important that you not forget them, than for you to easily be able to pick up new skills.

I would say evolution simply chooses the path that leads to maximum survival possibility.

"Learning more skills" obviously has diminishing returns: the more you have learned, the less benefit you gain from the next new skill. Evolution surely tends to stop at an "economic balance point". In fact, as you mentioned, since retaining skills also has a cost, the brain even tends to forget skills that are not frequently used.

It's also easy to see that this "balance point" definitely wouldn't be adapted to "ideology", since ideology only appeared in the last 200 years, an almost nonexistent span compared to the millions of years of human brain evolution. -

usertests

> Findecanor said: That's just great... That means that Google will do ten times as much AI.

They only want what's best for you. -

bit_user

> zsydeepsky said: I would say evolution simply chooses the path that leads to maximum survival possibility.

Obviously. But my point was that if there's a tradeoff between memory durability and the ability to learn new tasks, then the fact that learning slows as we age seems to have a certain logic to it that could've been selected for by evolution.

> zsydeepsky said: it's also easy to understand that "balance-point" definitely wouldn't be adequate for "ideology", for this thing only appeared in recent 200 years,

Ideology is something you learn, just like anything else. It encompasses a world view, which means it touches many of your beliefs and other knowledge. Even if you want to, you can't just switch ideologies in an instant. You need to relearn all of the beliefs and perceptions it affects.

IMO, the only thing at all "new" about ideologies is that people began to describe them as an abstract concept that explains part of the difference in how different groups view the same facts or set of events. Ideologies have existed since the emergence of the modern human brain, but it probably took larger and more complex societies for some people to see that not everyone has the same outlook, and then to try to pick apart those differences.