'In the last 10 years, AI has advanced 1 million times' — Nvidia CEO Jensen Huang hails 'incredible' speed of industry change

But there's a catch.

Jensen Huang, chief executive of Nvidia, met with U.K. Prime Minister Keir Starmer this week to open London Tech Week and talk about AI. During the event, Huang and Starmer made announcements that tie artificial intelligence to national economic planning, backed by major investments in infrastructure, talent, and collaboration between government and industry. Perhaps more importantly, Huang claimed that AI has advanced a million times over the last 10 years. But there is a catch.

"In the last 10 years, AI has advanced 1 million times," Huang said. "The speed of change is incredible."

The head of Nvidia did not elaborate on what he meant, or on whether he was referring to AI software (e.g., considerably bigger large language models and large reasoning models) or AI hardware. Since Nvidia is a hardware company, Huang most likely meant improvements in AI hardware in general and in the company's GPUs and systems in particular.

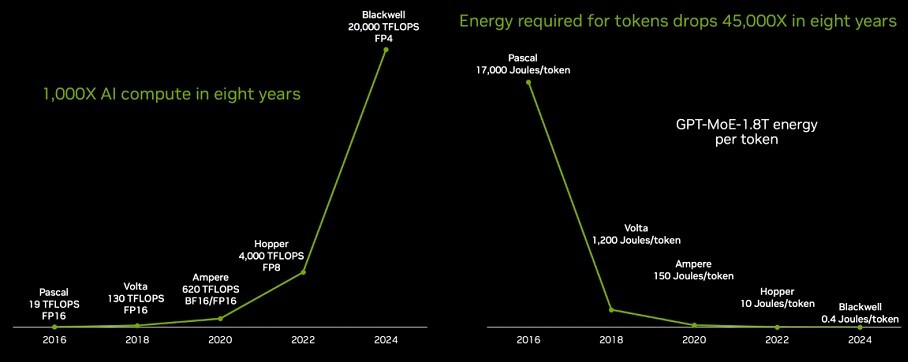

Last year, Nvidia said that its Blackwell B200 processor delivers about 1,000 times higher inference performance than the Pascal P100 from 2016. Specifically, the B200 offers around 20,000 FP4 TFLOPS, whereas the P100 could only boast 19 FP16 TFLOPS, a ratio of roughly 1,050x. While this is not exactly an apples-to-apples comparison, since the two figures use different precisions, it makes sense for inference, where low-precision formats are widely used. Blackwell is also said to be 42,500 times more energy efficient in terms of joules per generated token.
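The ratio behind these figures is easy to check. The short sketch below divides the two peak numbers quoted above and converts both that ratio and Huang's million-fold claim into an implied constant yearly growth factor. It assumes an eight-year gap between the P100 (2016) and the B200 announcement (2024), and it compares vendor peak figures at different precisions, so it is illustrative arithmetic rather than a benchmark.

```python
# Illustrative arithmetic only: vendor peak figures at different precisions.
B200_FP4_TFLOPS = 20_000  # Blackwell B200 peak, FP4 (figure quoted in the article)
P100_FP16_TFLOPS = 19     # Pascal P100 peak, FP16 (figure quoted in the article)
YEARS_P100_TO_B200 = 2024 - 2016  # assumption: P100 in 2016, B200 announced in 2024


def speedup(new: float, old: float) -> float:
    """Ratio of two throughput figures."""
    return new / old


def annual_factor(total: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total` over `years` years."""
    return total ** (1 / years)


chip_ratio = speedup(B200_FP4_TFLOPS, P100_FP16_TFLOPS)

print(f"B200 vs. P100 peak throughput: {chip_ratio:,.0f}x")
print(f"Implied yearly gain, chip level: {annual_factor(chip_ratio, YEARS_P100_TO_B200):.2f}x")
print(f"Implied yearly gain for 1,000,000x over 10 years: {annual_factor(1e6, 10):.2f}x")
```

Run as-is, this reports a chip-level ratio of about 1,053x, an implied yearly gain of roughly 2.4x, and roughly 4x per year for a million-fold advance over a decade.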

In addition to higher-performance GPUs, Nvidia, as well as companies like xAI and Microsoft, now build AI systems that are dramatically faster than those available in 2016, so in that sense AI hardware may indeed have become a million times more powerful in less than a decade. For example, xAI currently runs an AI supercomputer with 200,000 Hopper GPUs, and Elon Musk has said that the company will eventually build a system with a million Blackwell GPUs. Such a cluster would not only deliver record-breaking AI performance but would also rank among the industry's highest-performing supercomputers in general.
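The per-chip figures quoted earlier account for only part of a million-fold gain; the rest has to come from scaling out. As a hypothetical back-of-the-envelope sketch, assuming perfect linear scaling, B200-class GPUs, and only the peak numbers from the article (20,000 FP4 TFLOPS for B200, 19 FP16 TFLOPS for P100), the code below estimates how many B200s a system needs to reach a million times a single P100's peak throughput, and what a million-GPU Blackwell cluster would amount to on the same basis. The function names are illustrative, and real clusters never scale perfectly, so treat the results as upper bounds.

```python
# Per-chip peak figures quoted in the article (different precisions).
B200_FP4_TFLOPS = 20_000
P100_FP16_TFLOPS = 19
TARGET_MULTIPLE = 1_000_000  # Huang's "1 million times"


def cluster_vs_p100(num_b200: int) -> float:
    """Peak throughput of `num_b200` B200s relative to one P100.

    Hypothetical: assumes perfect linear scaling, which real clusters never reach.
    """
    return num_b200 * B200_FP4_TFLOPS / P100_FP16_TFLOPS


def b200s_for_multiple(multiple: int) -> int:
    """Smallest B200 count whose combined peak reaches `multiple` times one P100."""
    # Integer ceiling division avoids floating-point rounding at exact boundaries.
    numerator = multiple * P100_FP16_TFLOPS
    return (numerator + B200_FP4_TFLOPS - 1) // B200_FP4_TFLOPS


print(f"B200s needed for {TARGET_MULTIPLE:,}x one P100: {b200s_for_multiple(TARGET_MULTIPLE):,}")
print(f"1,000,000 B200s vs. one P100: {cluster_vs_p100(1_000_000):.2e}x")
```

Under these assumptions, about 950 B200s already match the million-fold figure in peak throughput, and a million-GPU Blackwell cluster would be on the order of a billion times a single P100.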

American companies such as xAI are, of course, not the only ones investing heavily in AI infrastructure. The U.K. has committed to spending approximately £1 billion on advanced computing resources dedicated to AI by the end of the decade, with initial funding already underway. Nvidia will establish a new AI research center in the U.K., supporting work in areas such as robotics, environmental modeling, and materials science. It also launched a national developer education initiative aimed at improving AI-related technical skills. Additionally, the company will work with the U.K.'s financial regulator to power a secure testing environment for AI and will collaborate on efforts to accelerate 6G research.

Nonetheless, the U.K.'s systems pale in comparison with American AI supercomputers for now. The country's most powerful AI system is the upcoming Isambard-AI, which is powered by 5,500 Nvidia GH200 Grace Hopper Superchips.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.
