Nvidia shows off Blackwell server installations in progress — AI and data center roadmap has Blackwell Ultra coming next year with Vera CPUs and Rubin GPUs in 2026

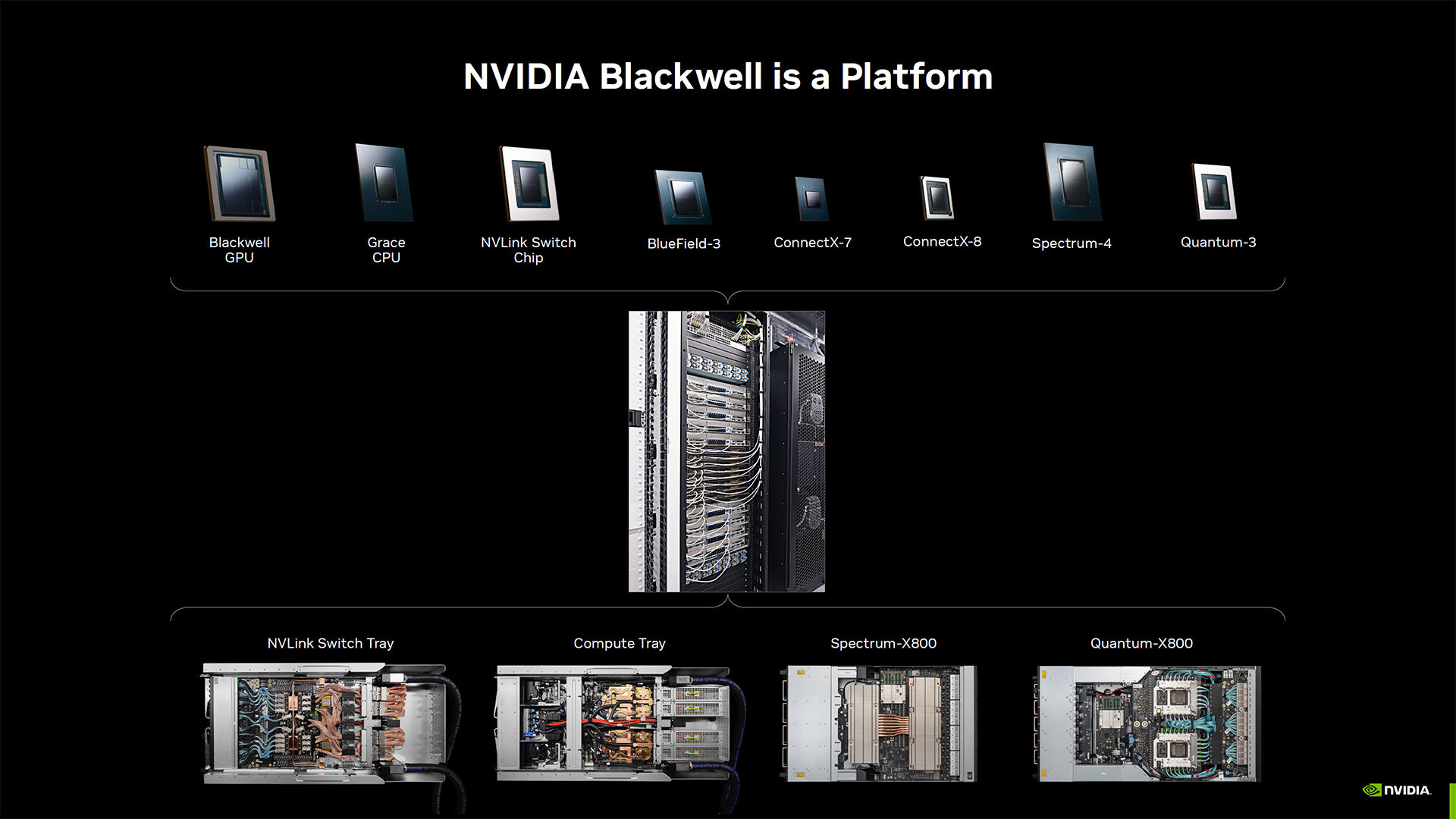

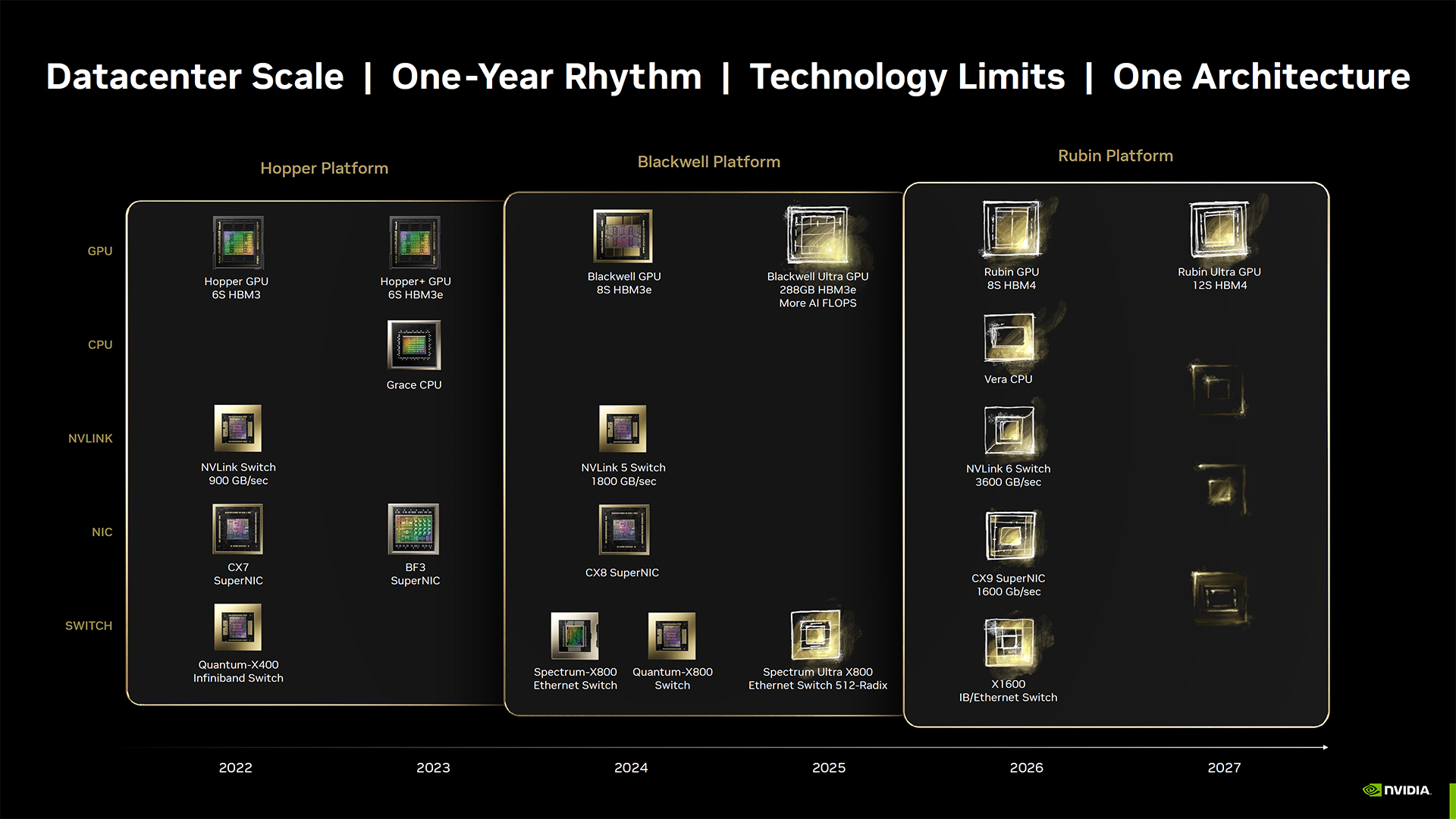

Nvidia also emphasized that it sees Blackwell and Rubin as platforms and not just GPUs.

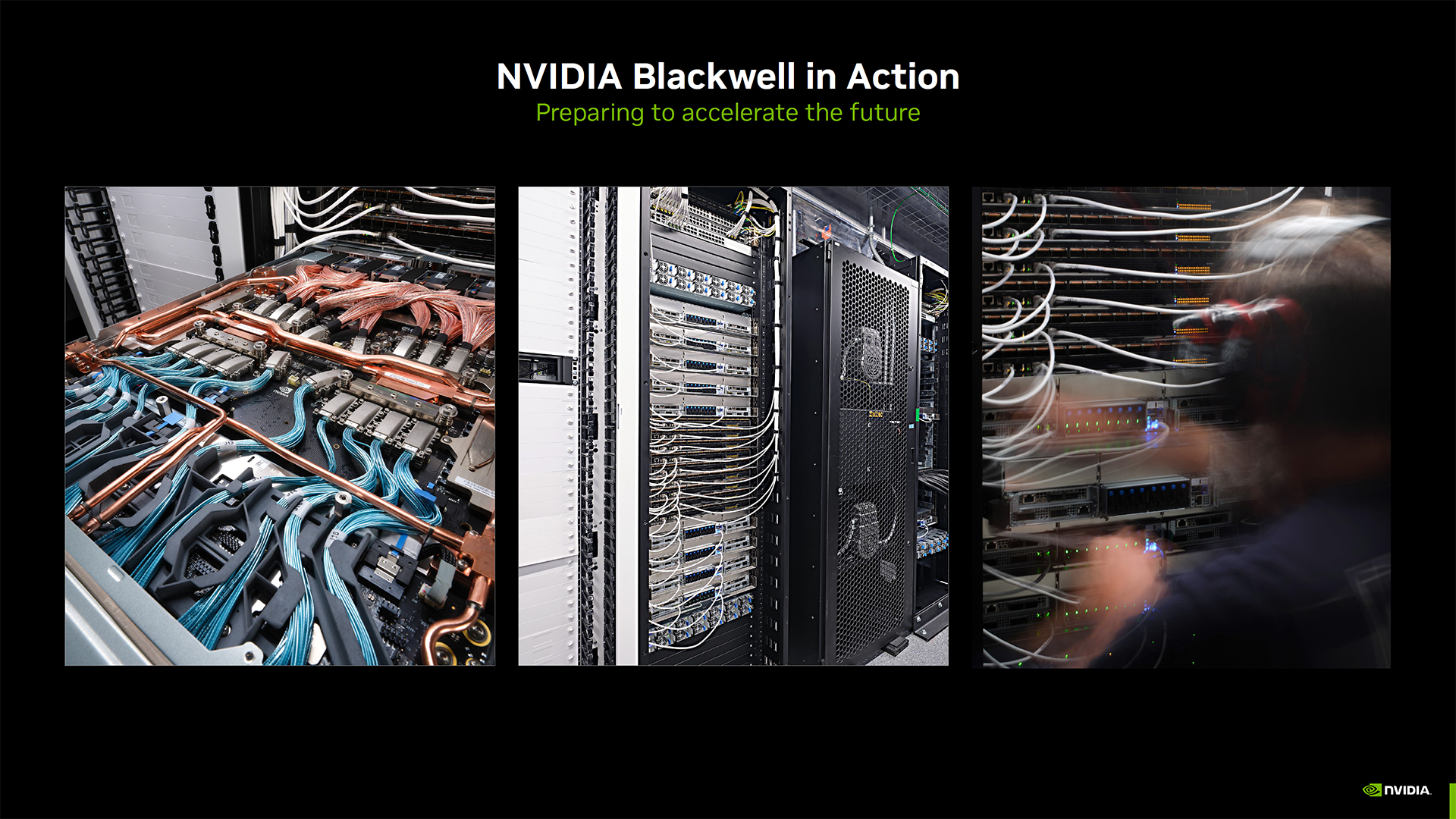

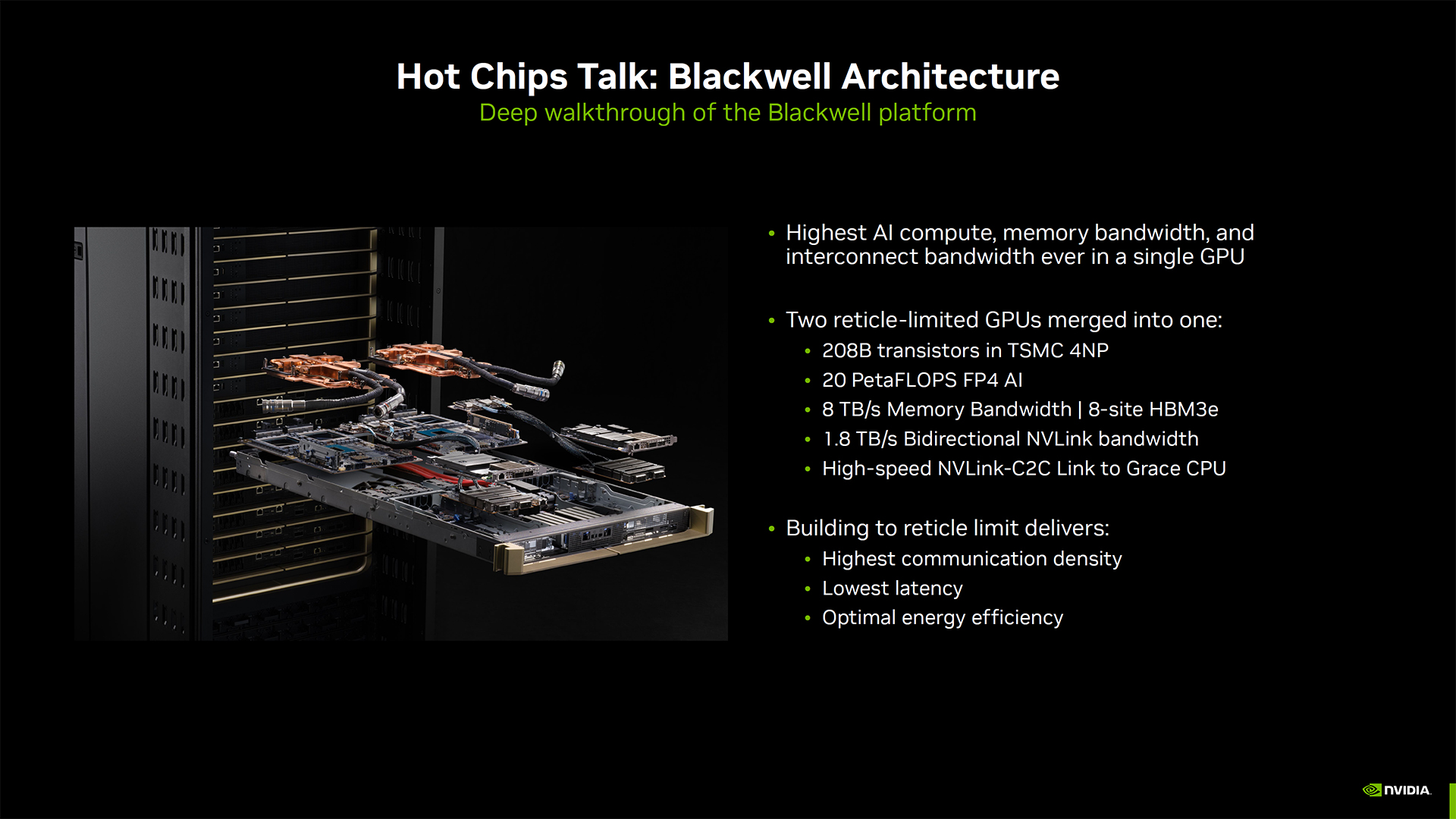

Prior to the start of the Hot Chips 2024 tradeshow, Nvidia showed off more elements of its Blackwell platform, including servers being installed and configured. It's a less-than-subtle way of saying that Blackwell is still coming, never mind the delays. It also talked about its existing Hopper H200 solutions, showed FP4 LLM optimizations using its new Quasar Quantization System, discussed warm-water liquid cooling for data centers, and talked about using AI to help build even better chips for AI. It reiterated that Blackwell is more than just a GPU: it's an entire platform and ecosystem.

Much of what will be presented by Nvidia at Hot Chips 2024 is already known, like the data center and AI roadmap showing Blackwell Ultra coming next year, with Vera CPUs and Rubin GPUs in 2026, followed by Rubin Ultra in 2027. Nvidia first confirmed those details at Computex back in June. But AI remains a big topic and Nvidia is more than happy to keep beating the AI drum.

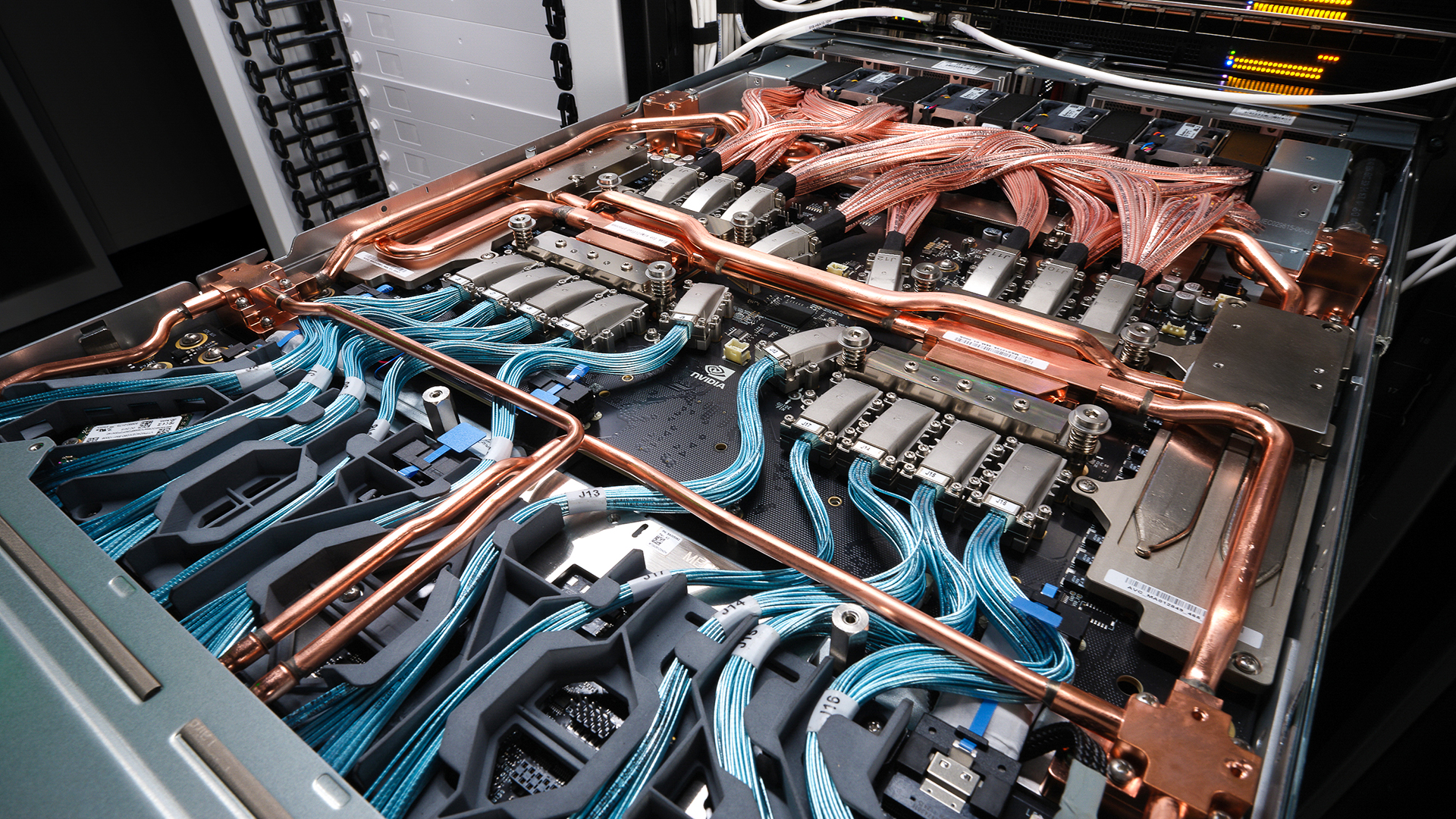

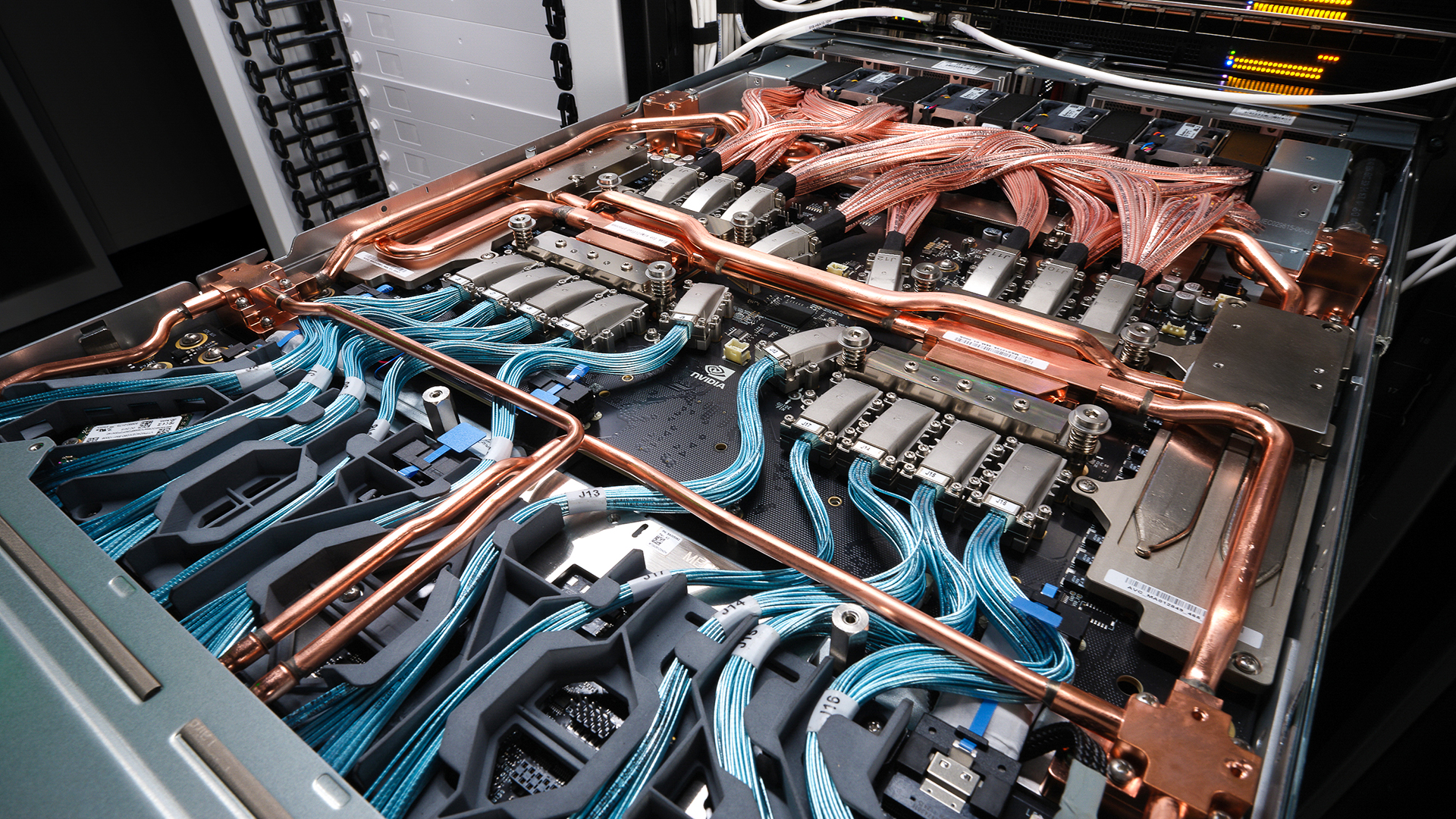

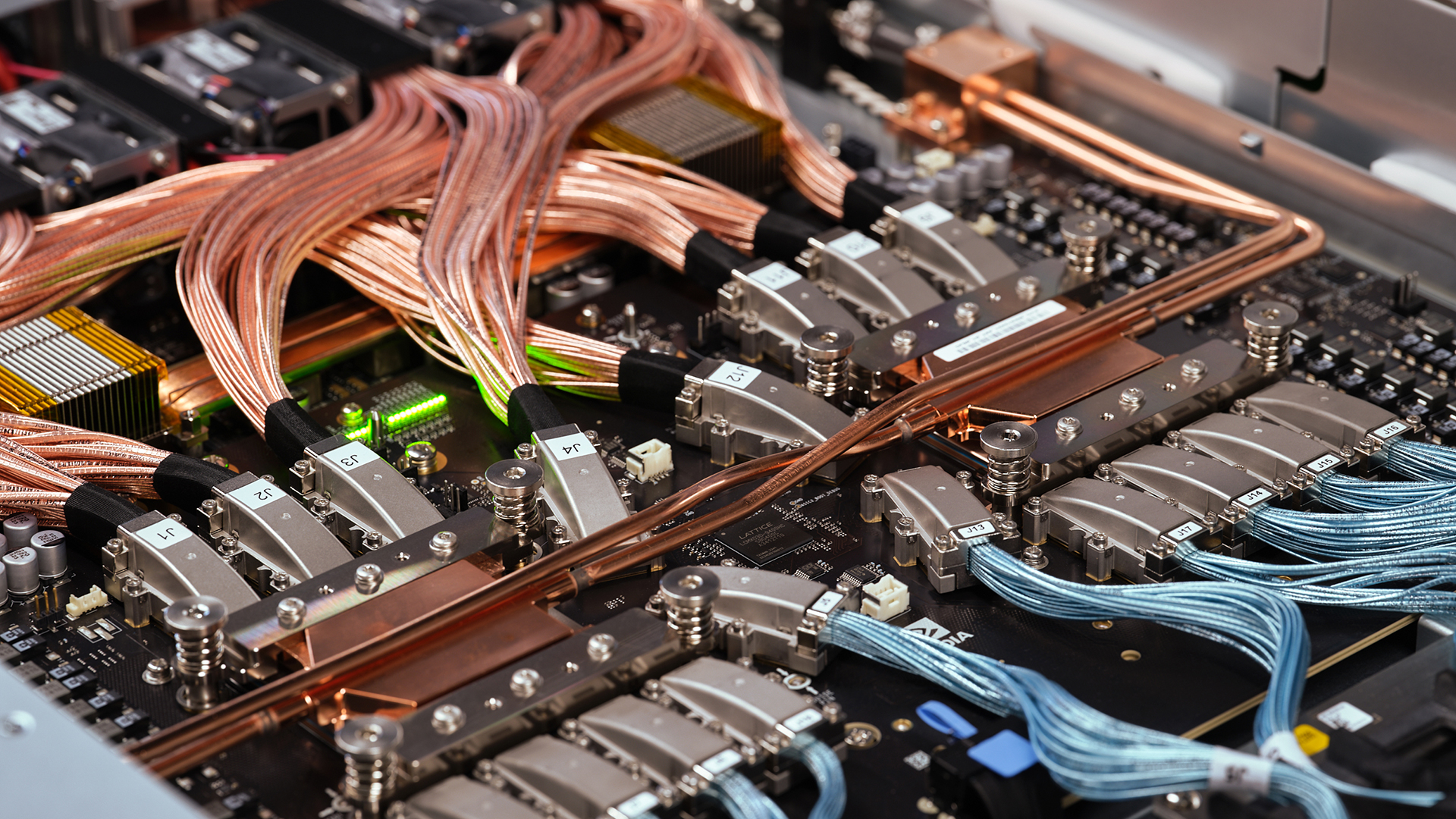

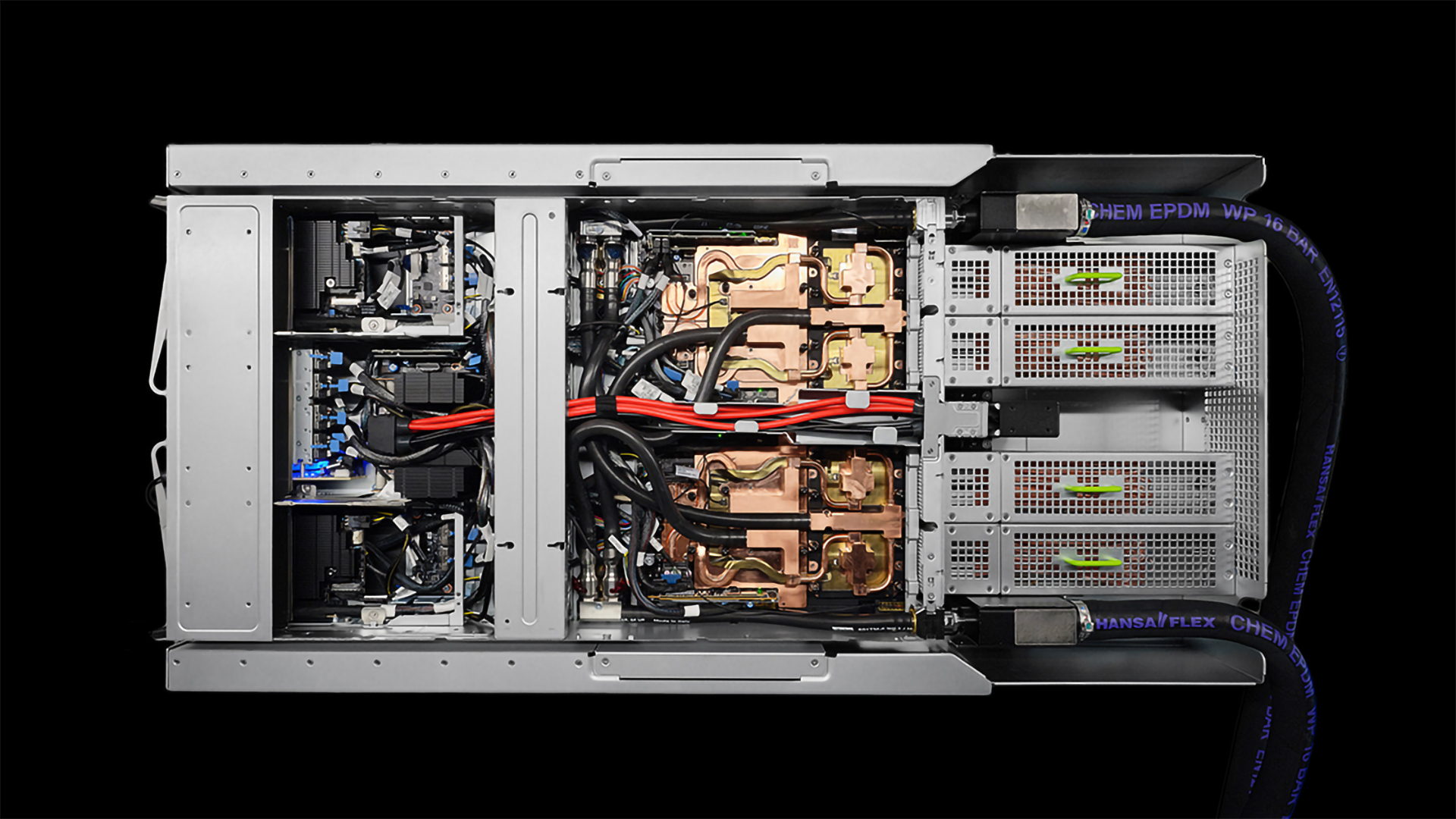

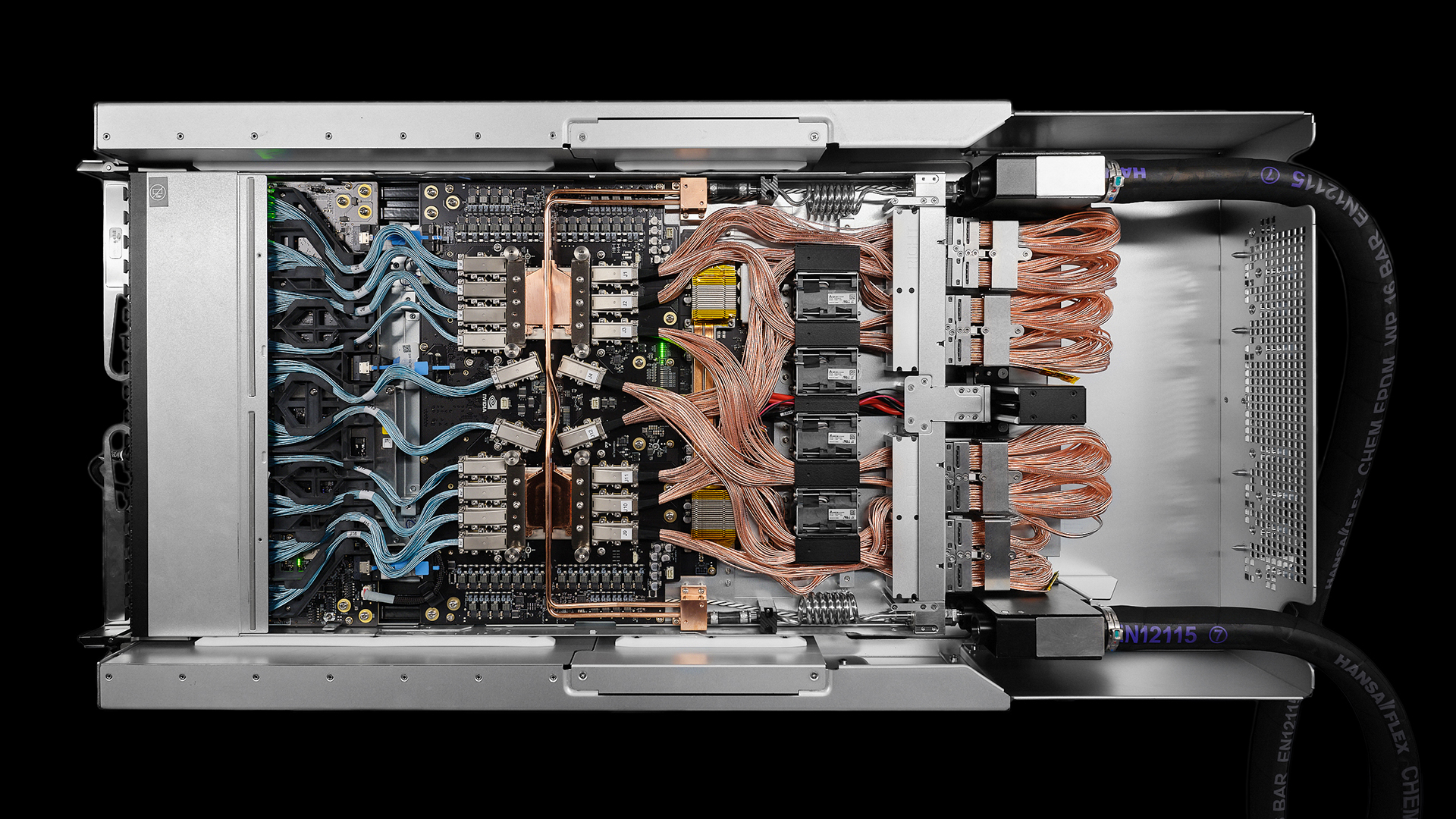

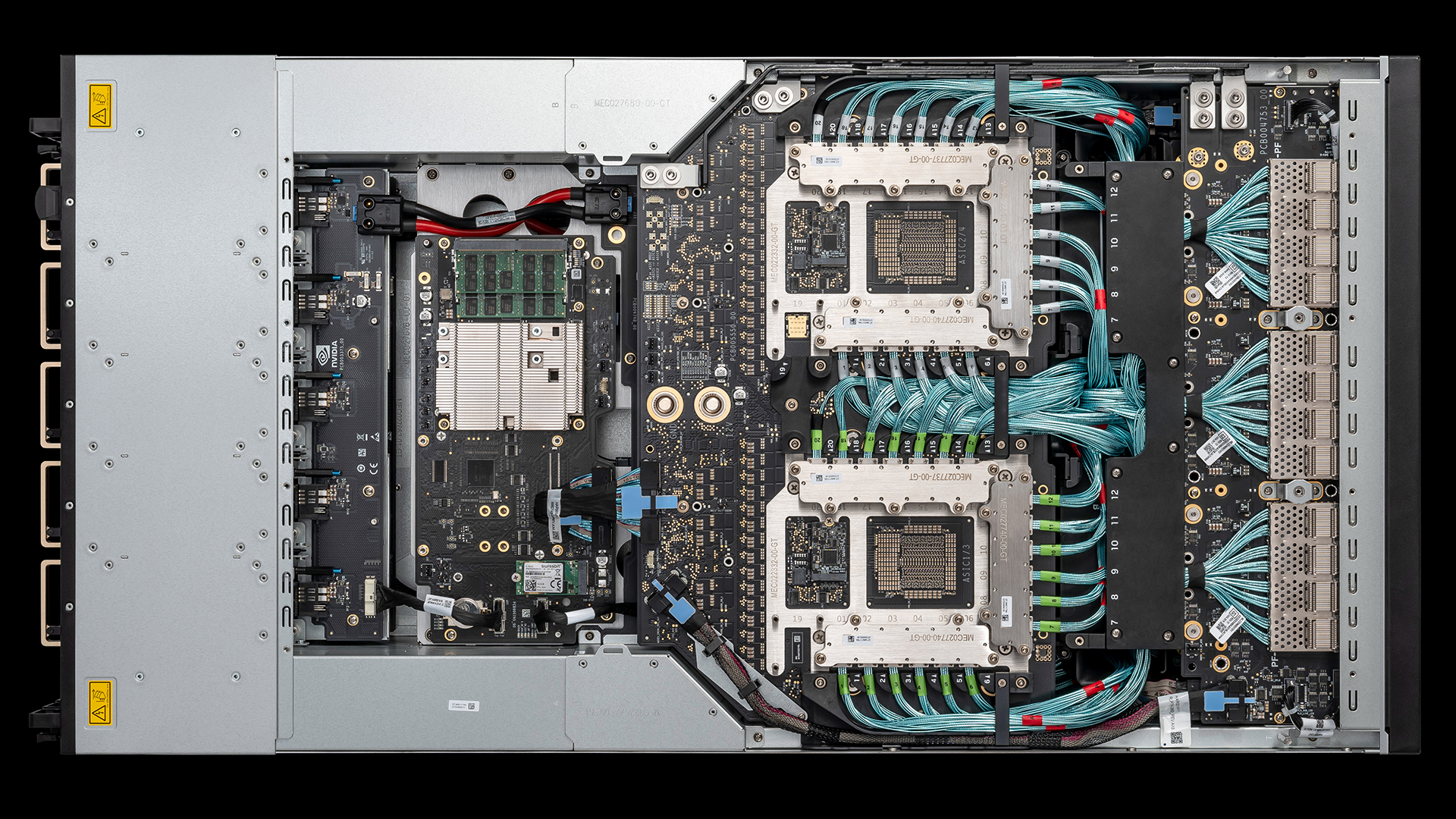

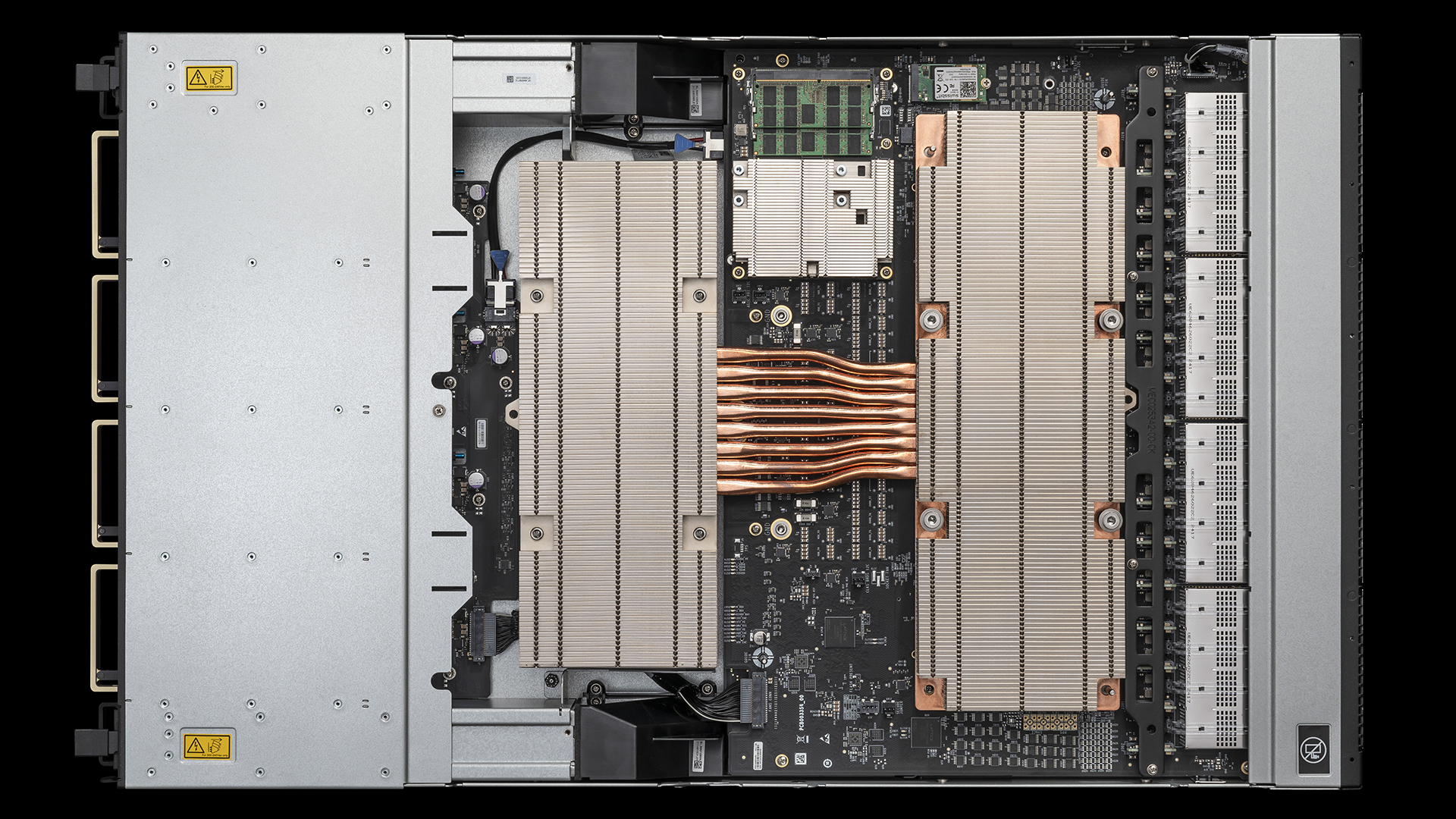

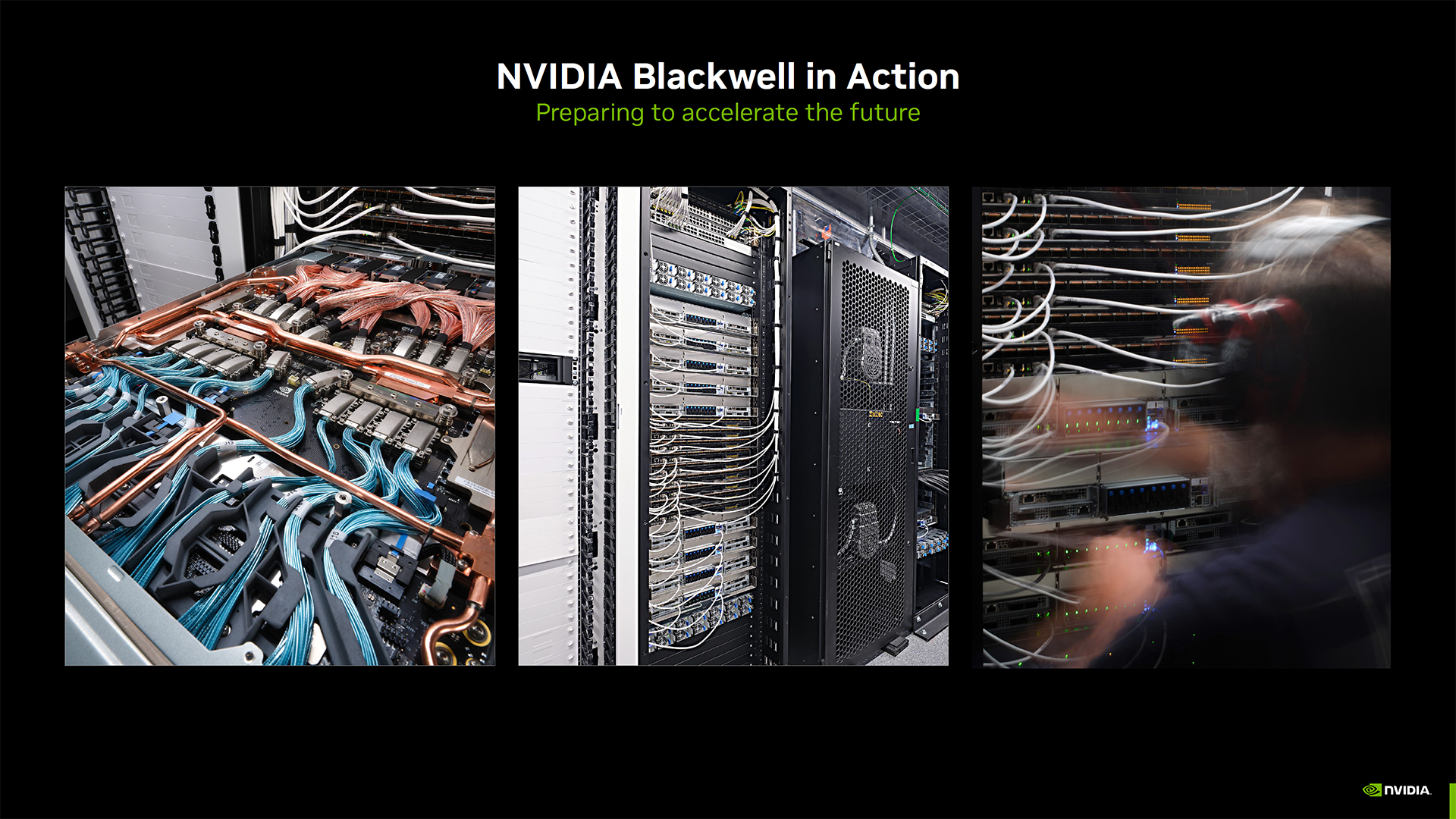

While Blackwell was reportedly delayed three months, Nvidia neither confirmed nor denied that information, instead opting to show images of Blackwell systems being installed, as well as providing photos and renders showing more of the internal hardware in the Blackwell GB200 racks and NVLink switches. There's not much to say, other than the hardware looks like it can suck down a lot of juice and has some pretty robust cooling. It also looks very expensive.

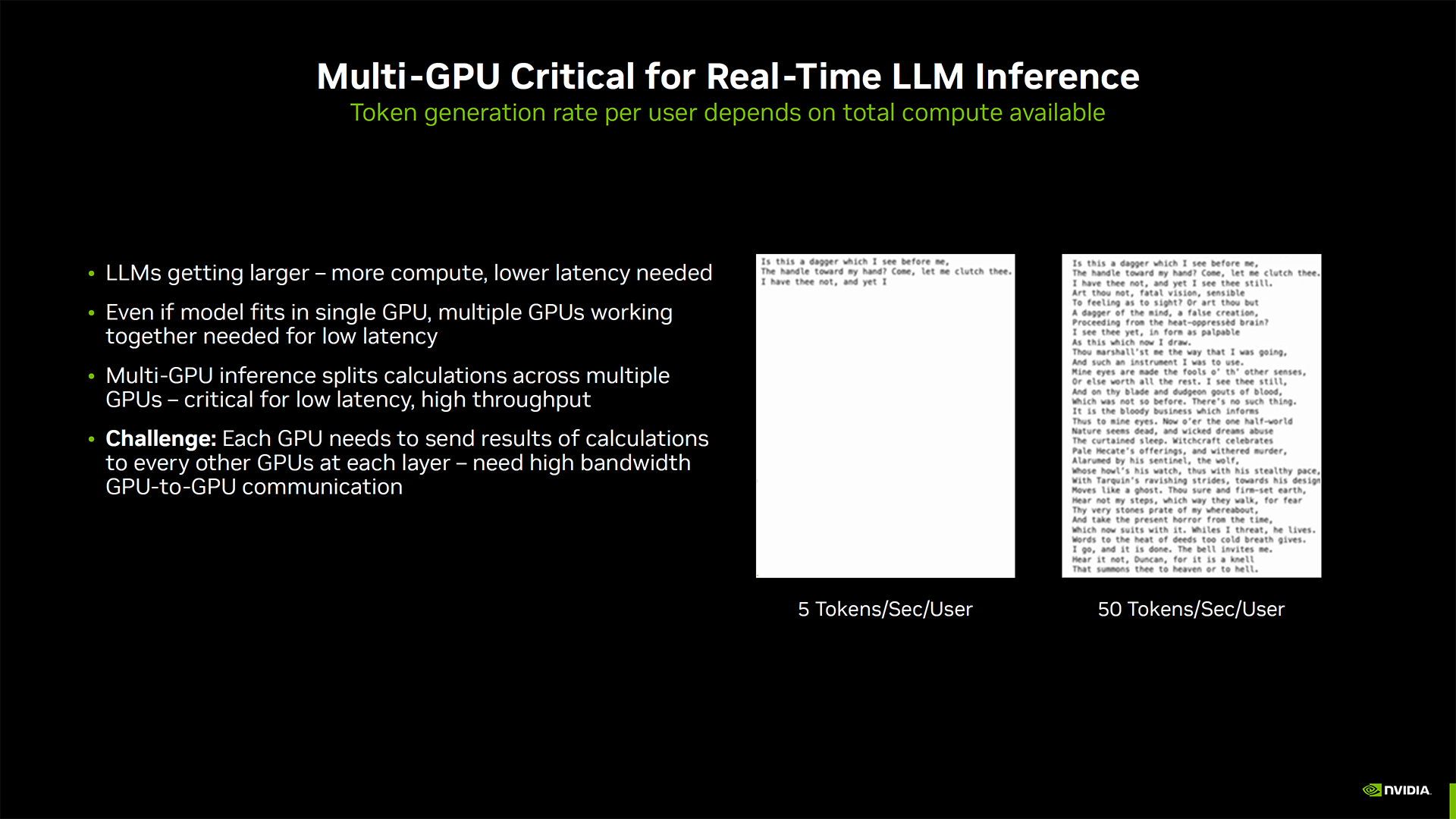

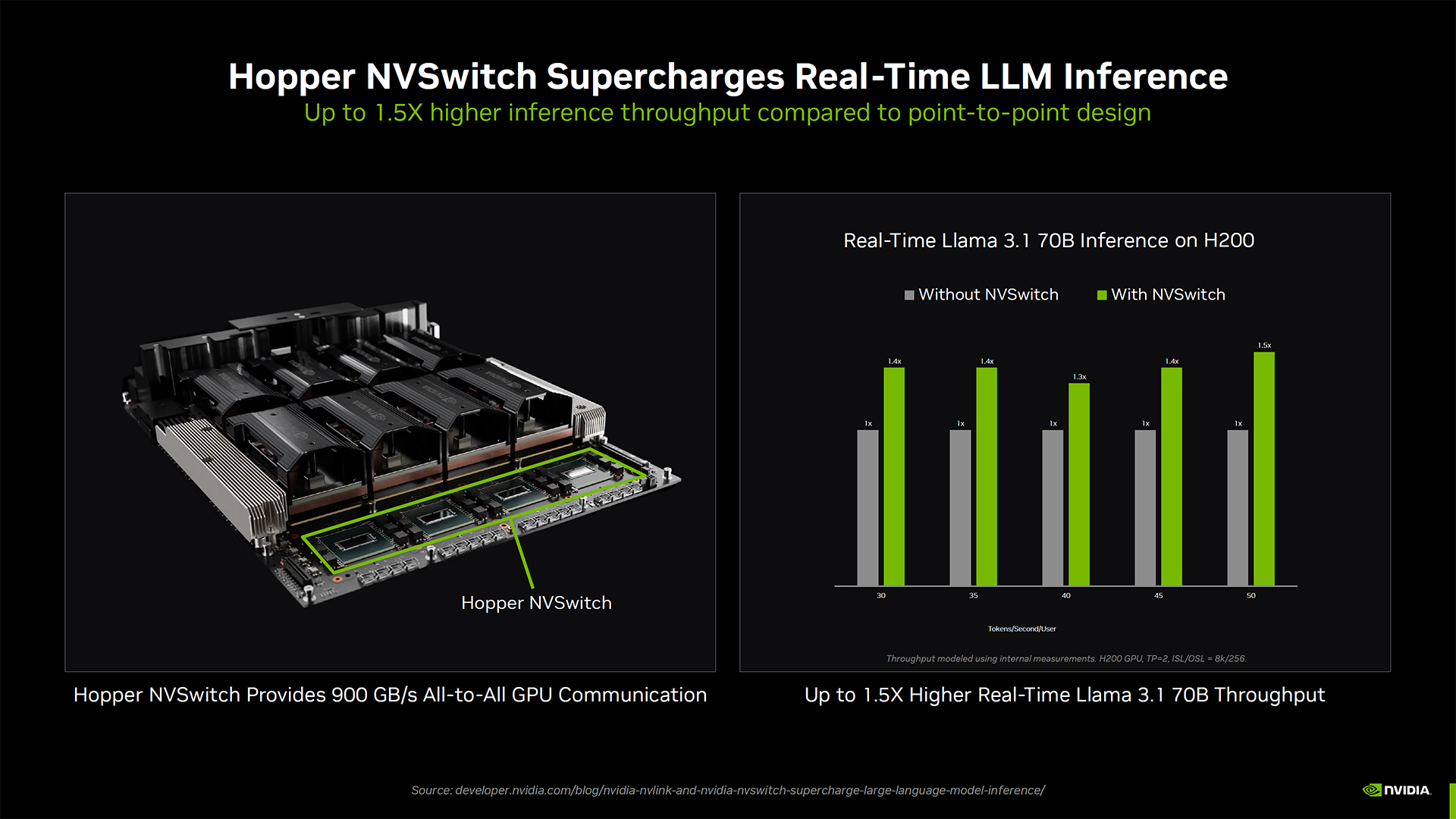

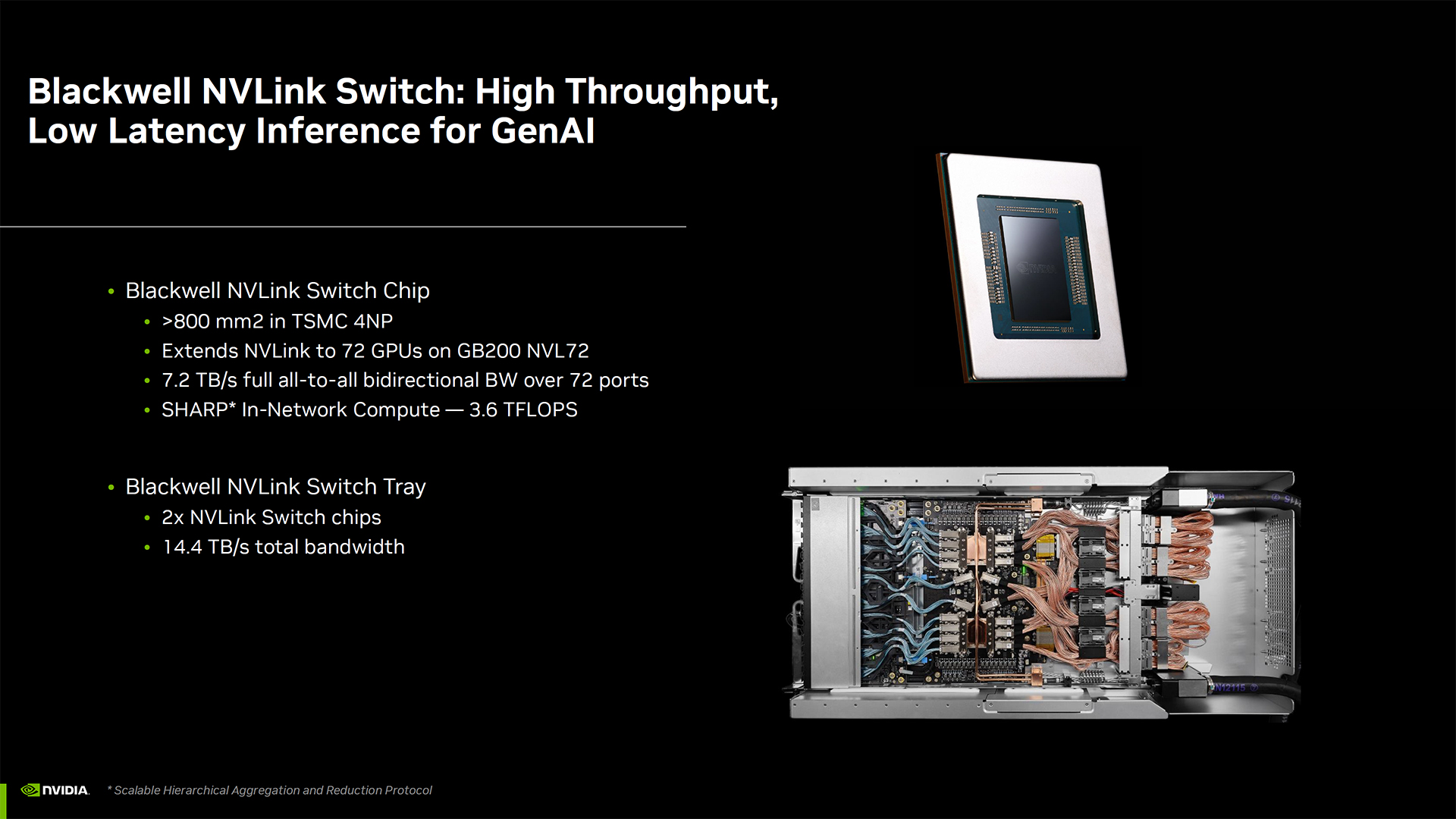

Nvidia also showed some performance results from its existing H200, running with and without NVSwitch. It says performance can be up to 1.5X higher on inference workloads compared to point-to-point designs, a result obtained using a Llama 3.1 70B parameter model. Blackwell doubles the NVLink bandwidth to offer further improvements, with an NVLink Switch Tray offering 14.4 TB/s of aggregate bandwidth.

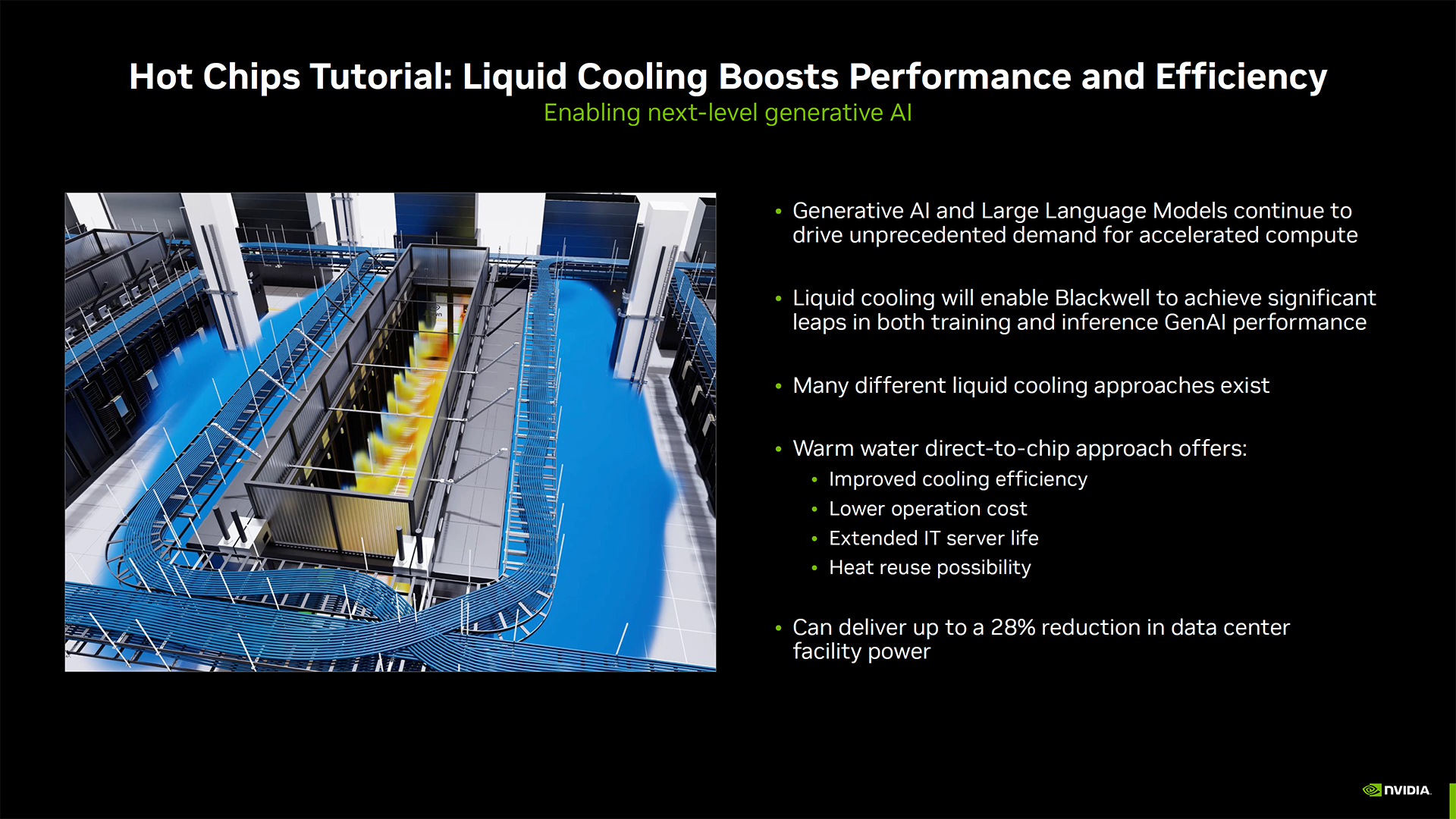

Because data center power requirements keep increasing, Nvidia is also working with partners to boost performance and efficiency. One of the more promising results is using warm-water cooling, where the heated water can potentially be recirculated for heating to further reduce costs. Nvidia claims the tech has cut data center power use by up to 28%, with a large portion of that coming from the removal of below-ambient cooling hardware.

Above you can see the full slide deck from Nvidia's presentation. There are a few other interesting items of note.

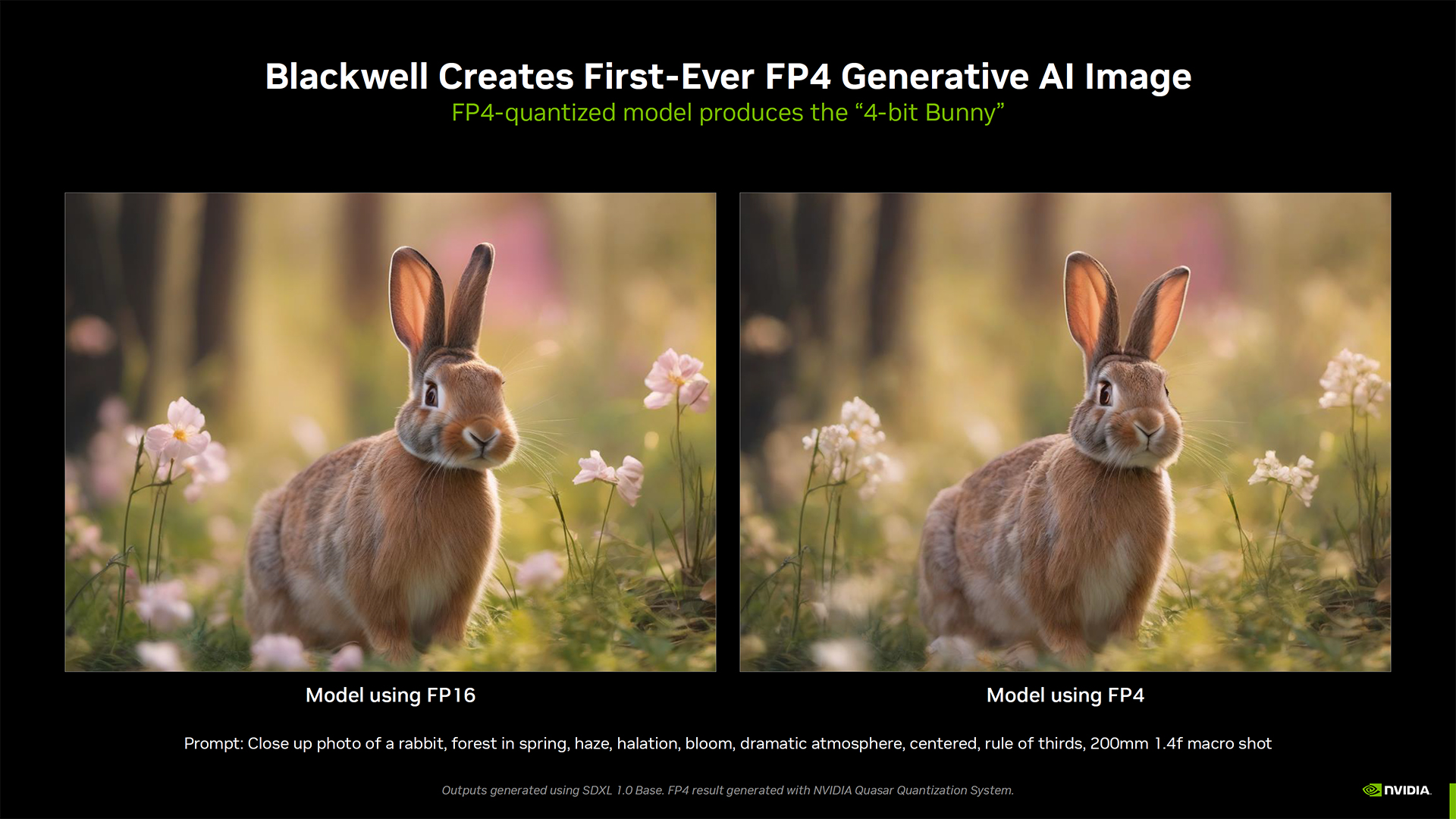

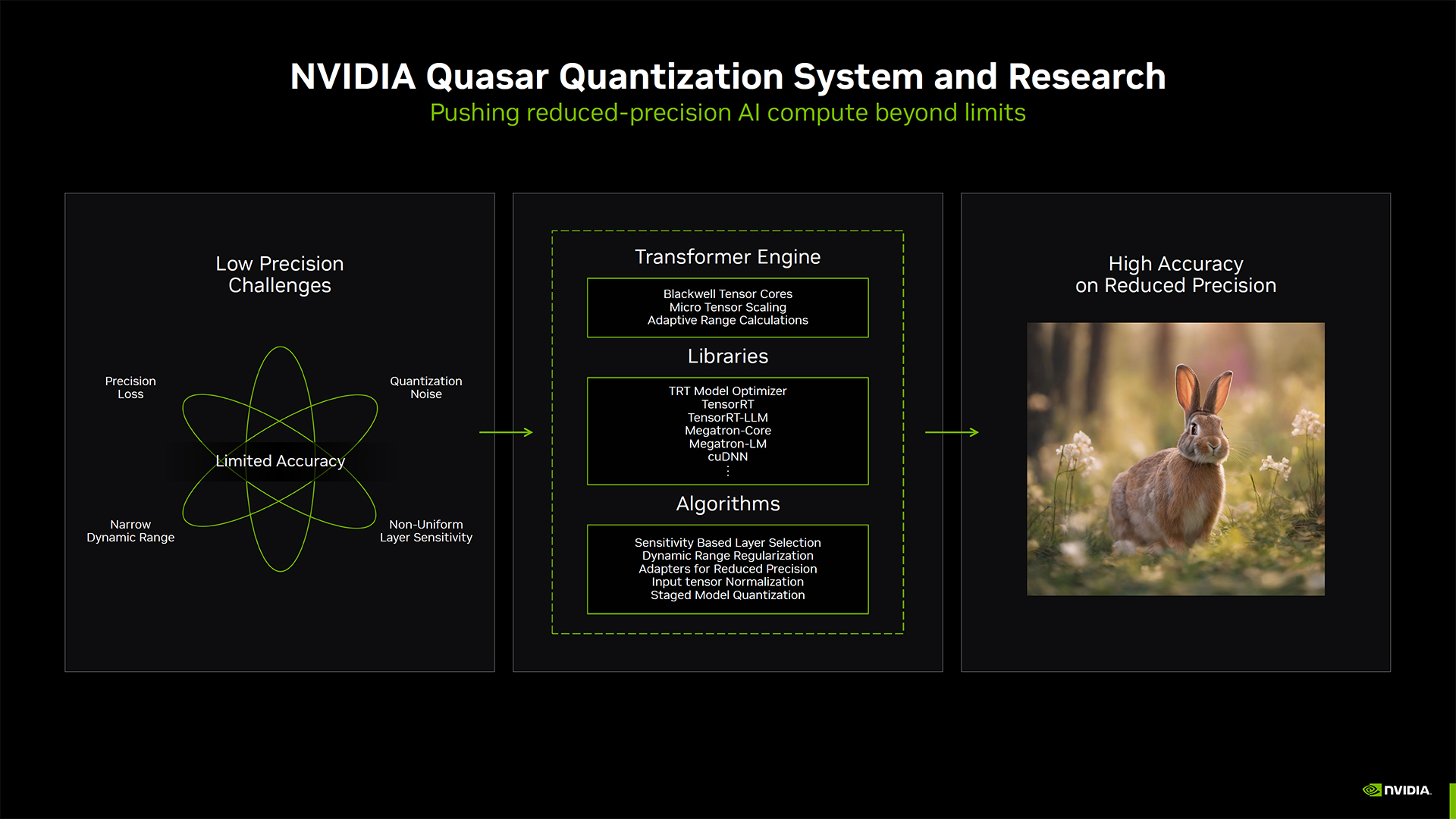

To prepare for Blackwell, which now adds native FP4 support that can further boost performance, Nvidia has worked to ensure its latest software benefits from the new hardware features without sacrificing accuracy. After using its Quasar Quantization System to tune the workload results, Nvidia is able to deliver basically the same quality as FP16 while using one quarter as much bandwidth. The two generated bunny images may vary in minor ways, but that's pretty typical of text-to-image tools like Stable Diffusion.
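To make the "one quarter as much bandwidth" figure concrete, here is a minimal Python sketch of a generic block-scaled 4-bit float quantizer. It is not Nvidia's Quasar Quantization System, whose internals weren't detailed in the presentation. The E2M1 value grid, the per-block scale, the sample weights, and every function name below are assumptions chosen purely for illustration.

```python
# Illustrative only: a generic block-scaled FP4 quantizer, NOT Nvidia's Quasar system.
# Assumption: FP4 values use the E2M1 layout, whose magnitudes are listed below.
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_GRID = sorted({sign * m for m in FP4_E2M1_MAGNITUDES for sign in (-1.0, 1.0)})


def quantize_block(values):
    """Scale a block so its largest magnitude maps to 6.0, then snap to the grid."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 6.0 if max_abs > 0 else 1.0
    codes = [min(FP4_GRID, key=lambda g: abs(v / scale - g)) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate values from the shared scale and the 4-bit grid values."""
    return [scale * c for c in codes]


def payload_bits(num_values, bits_per_value):
    """Bits needed for the values alone (ignores the small per-block scale overhead)."""
    return num_values * bits_per_value


# Hypothetical weights, made up for this example.
weights = [0.12, -0.40, 0.03, 0.75, -0.22, 0.51, -0.08, 0.30]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
ratio = payload_bits(len(weights), 4) / payload_bits(len(weights), 16)
print(f"scale={scale:.4f} max_error={max_error:.4f} fp4/fp16 payload ratio={ratio}")
```

The payload ratio is simply 4 bits versus 16 bits per value. The harder part, and presumably where Nvidia's tuning effort goes, is choosing scales so that the rounding error doesn't visibly degrade the output.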

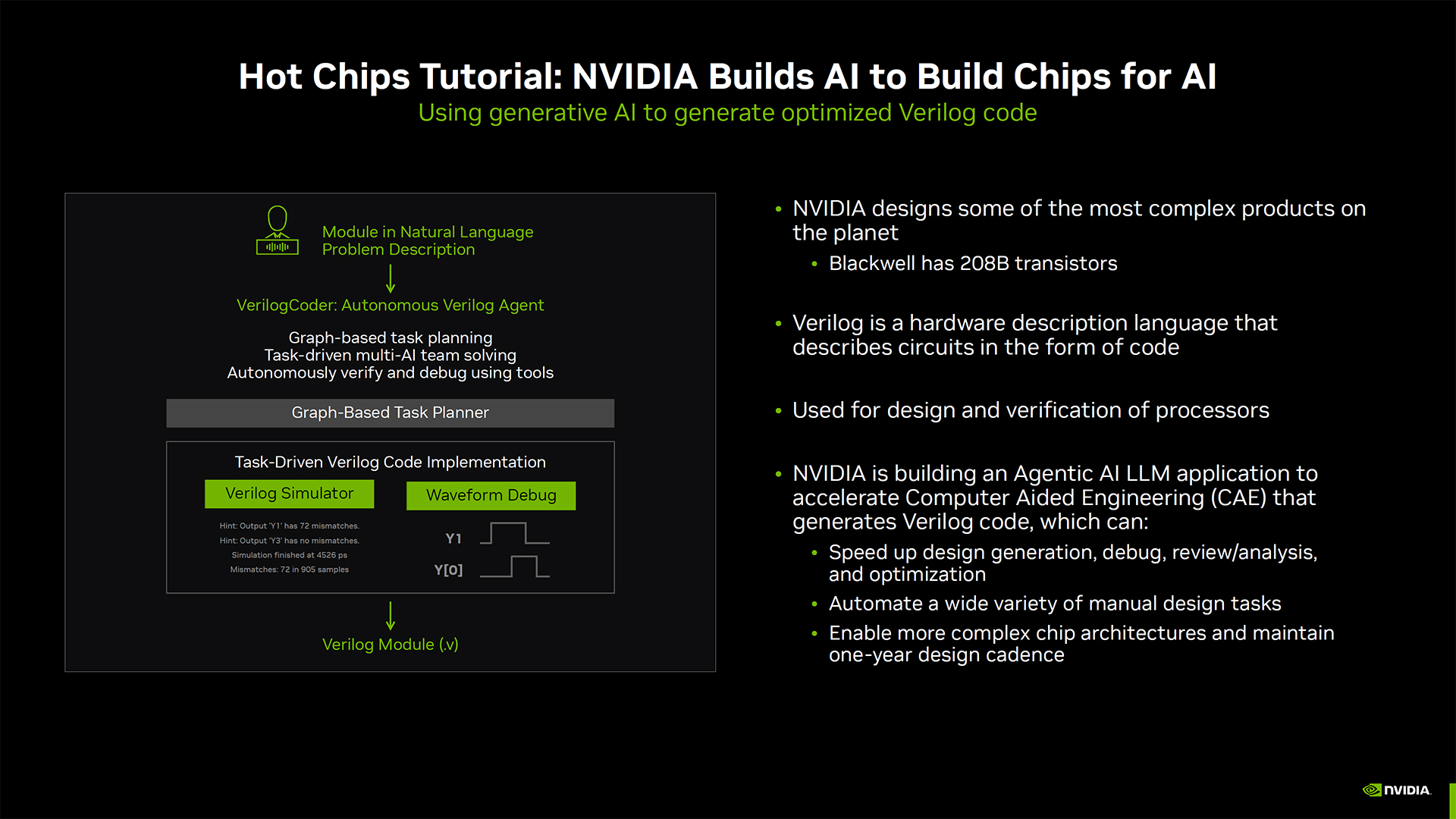

Nvidia also talked about using AI tools to design better chips: AI building AI, with turtles all the way down. Nvidia created an LLM for internal use that helps to speed up design, debug, analysis, and optimization. It works with the Verilog language that's used to describe circuits and was a key factor in the creation of the 208 billion transistor Blackwell B200 GPU. This will then be used to create even better models to enable Nvidia to work on the next-generation Rubin GPUs and beyond. [Feel free to insert your own Skynet joke at this point.]

Wrapping things up, we have a better-quality image of Nvidia's AI roadmap for the next several years, which again defines the "Rubin platform" with switches and interlinks as an entire package. Nvidia will be presenting more details on the Blackwell architecture, using generative AI for computer-aided engineering, and liquid cooling at the Hot Chips conference next week.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


gg83 Hey Jarred, do you see any competitors able to match Nvidia's AI market share anytime soon? Jensen seems to always make the right choices. Ever since he got chips into the first xbox Nvidia has been almost unstoppable. With the amount of r&d Nvidia can afford, I don't see anyone passing them. Maybe a drastic change in approach or something is needed.

vanadiel007 It will be concerning for a video card to become a platform, as that means they will leverage their IP to ensure nobody else can produce a video card for that platform, in effect killing off competition.

I can see this ending not good for consumers, especially if Intel is unable to rebound. At this point Nvidia could scoop up Intel and form a unified front to push AMD out of the market.

JRStern I see the water-cooled and I say LOL, we're back to 1968.

Or hey, what kind of game system is this?