Ashes Of The Singularity Beta: Async Compute, Multi-Adapter & Power

DirectX 12 has been available since the launch of Windows 10, but there aren't any DX12 games yet, so we're using the Ashes of the Singularity beta to examine DX12 performance.

Frame Rates & Times

Frame Rates

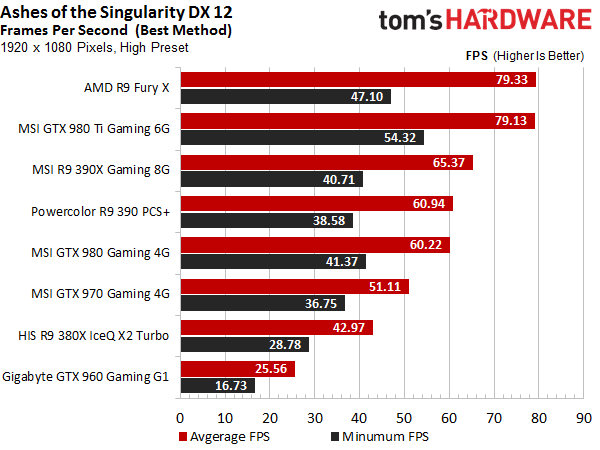

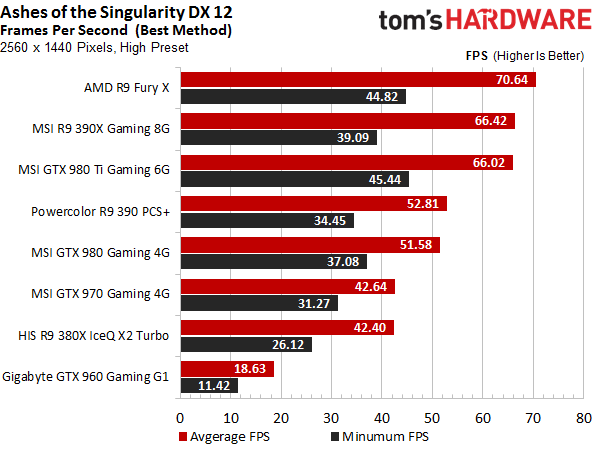

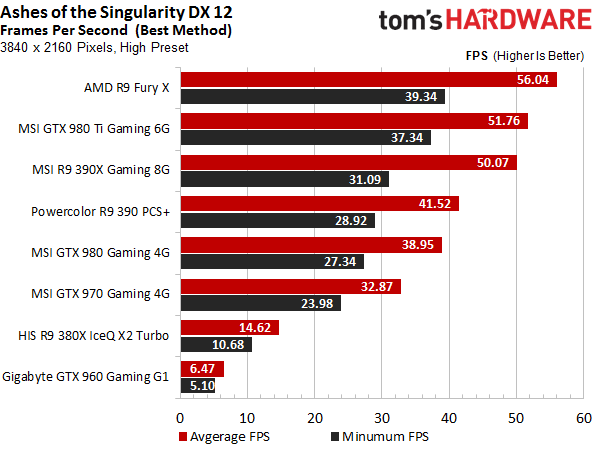

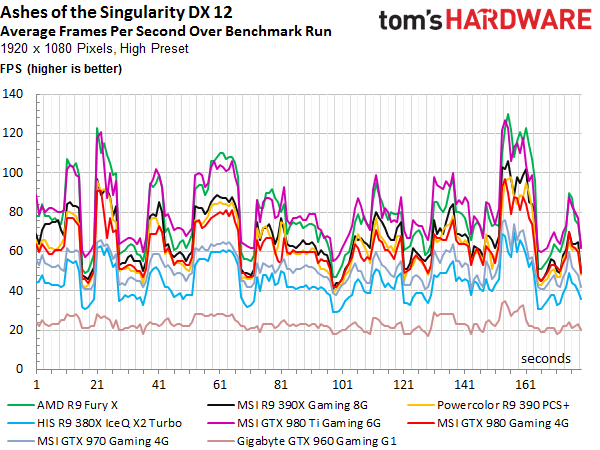

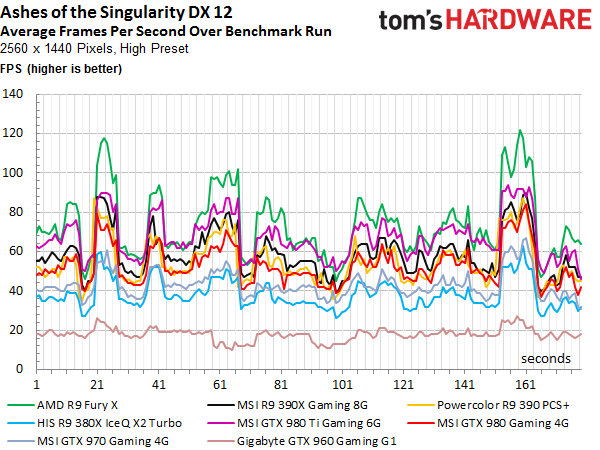

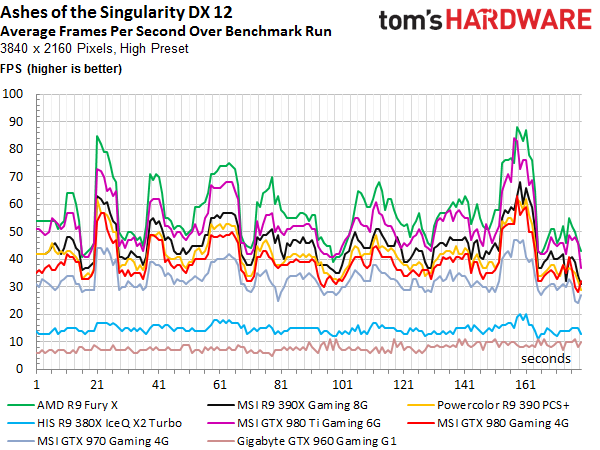

First, let's take a look at average and minimum frame rates. We're using three different resolutions with the High preset: Full HD (1920x1080), WQHD (2560x1440) and Ultra HD (3840x2160). The charts above show the results.
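The text doesn't spell out how the averages and minimums are derived from the benchmark log. As a minimal Python sketch, assuming the log can be reduced to a list of per-frame render times in milliseconds (a hypothetical input format), the average frame rate is total frames divided by total elapsed time, not the mean of per-frame rates, and the slowest single frame gives an instantaneous minimum. Some tools instead report the lowest one-second frame count as the minimum, so treat `minimum_instant_fps` as one possible definition. All function names here are hypothetical.

```python
from statistics import mean
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    """Frames rendered divided by total elapsed time in seconds."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def naive_mean_of_instant_fps(frame_times_ms: Sequence[float]) -> float:
    """Mean of per-frame 1000/t values; overweights fast frames (shown for contrast)."""
    return mean(1000.0 / t for t in frame_times_ms)


def minimum_instant_fps(frame_times_ms: Sequence[float]) -> float:
    """Frame rate implied by the single slowest frame."""
    return 1000.0 / max(frame_times_ms)


if __name__ == "__main__":
    times = [10.0, 10.0, 30.0]  # hypothetical frame times in milliseconds
    print(round(average_fps(times), 2))                # 60.0
    print(round(naive_mean_of_instant_fps(times), 2))  # 77.78
    print(round(minimum_instant_fps(times), 2))        # 33.33
```

The gap between 60 and 77.78 fps for the same three frames shows why averaging per-frame rates overstates performance when a few frames are slow.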

The minimum frame rate reflects the subjective experience best, especially when it comes to stuttering in a few spots. With only a few exceptions, Nvidia edges out AMD here. Then again, results like these don't necessarily tell the whole story, as we'll see below.

Frame Rates Over Time

Next, let's look at the frame rate curve across the benchmark's entire 180-second run. Our interpreter summarizes the log file's data so that we can graph how the frame rate changes across the benchmark's different scenes and their varying loads.
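The interpreter's internals aren't described. A minimal sketch of the idea, assuming the same hypothetical list of per-frame render times in milliseconds, assigns each frame to the one-second bucket in which it finishes and reports frames per bucket, so a 180-second run yields roughly 180 points for the curve. The function name and the choice to drop the trailing partial second are illustrative assumptions.

```python
import math
from typing import List, Sequence


def fps_over_time(frame_times_ms: Sequence[float], bucket_s: float = 1.0) -> List[float]:
    """Frames completed in each fixed-length time bucket, scaled to frames per second."""
    bucket_ms = bucket_s * 1000.0
    counts: List[int] = []
    elapsed_ms = 0.0
    for t in frame_times_ms:
        elapsed_ms += t
        # Bucket k covers the interval (k * bucket_ms, (k + 1) * bucket_ms].
        index = max(math.ceil(elapsed_ms / bucket_ms) - 1, 0)
        # A very long frame can skip buckets; those stay at zero frames.
        while len(counts) <= index:
            counts.append(0)
        counts[index] += 1
    # Drop the trailing partial bucket so it doesn't show an artificially low value.
    full_buckets = int(elapsed_ms // bucket_ms)
    return [c / bucket_s for c in counts[:full_buckets]]


if __name__ == "__main__":
    print(fps_over_time([250.0] * 8))  # [4.0, 4.0]
```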

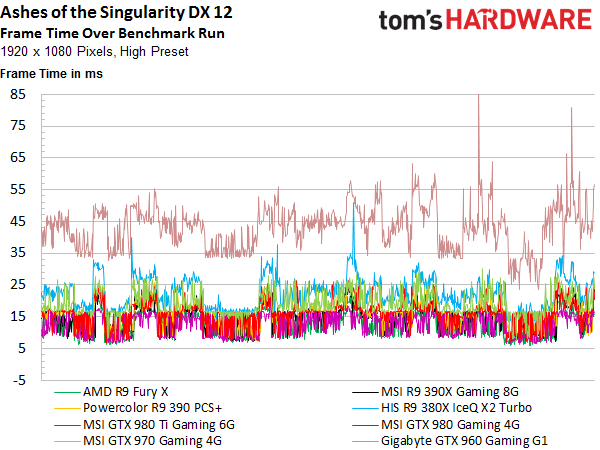

Frame Time And Smoothness

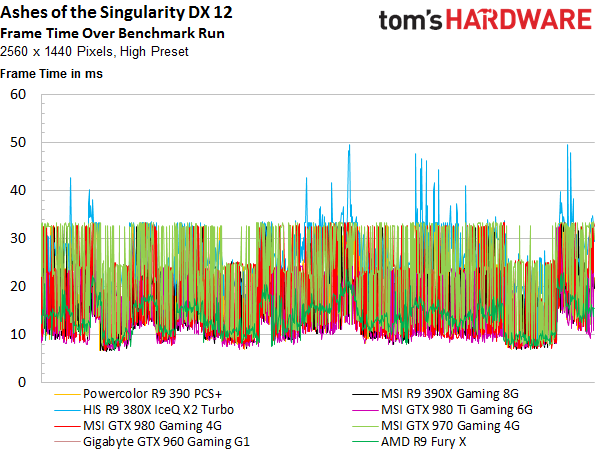

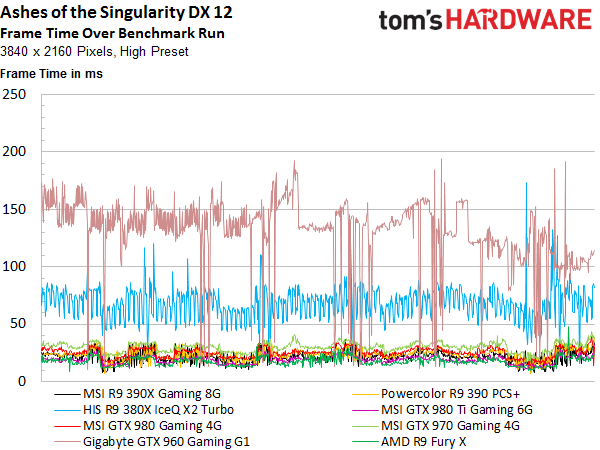

The graph above still isn't detailed enough to represent the subjective experience well; for that, we need to look at the actual render times. Because different graphics cards render different numbers of frames during the benchmark run depending on their speed, our interpreter uses a more complex mathematical procedure to analyze the log file. Larger deviations are weighted differently than consistent sequences, which preserves the subjective stuttering in the results.
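The actual procedure isn't published here, so the following is only one plausible building block, not the interpreter's method: resampling each run onto a fixed number of evenly spaced instants so that a fast card with many frames and a slow card with few can be compared point by point. Because sampling is by elapsed time, a long (stuttering) frame covers more sample points than a short one, which is one simple way for larger deviations to carry more weight. The function name and the midpoint sampling rule are assumptions.

```python
import bisect
from itertools import accumulate
from typing import List, Sequence


def resample_frame_times(frame_times_ms: Sequence[float], n_points: int) -> List[float]:
    """Frame time of the frame in progress at n evenly spaced instants of the run."""
    if not frame_times_ms or n_points <= 0:
        return []
    ends = list(accumulate(frame_times_ms))  # completion time of each frame
    total = ends[-1]
    samples: List[float] = []
    for j in range(n_points):
        instant = (j + 0.5) * total / n_points  # midpoint of the j-th slice of the run
        # First frame finishing at or after the instant, i.e. the one being rendered then.
        i = bisect.bisect_left(ends, instant)
        samples.append(frame_times_ms[i])
    return samples


if __name__ == "__main__":
    print(resample_frame_times([10.0, 10.0, 50.0, 10.0, 10.0, 10.0], 4))  # [10.0, 50.0, 50.0, 10.0]
```

Here the single 50 ms frame occupies half of the 100 ms run, so it shows up in two of the four samples.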

The 2560x1440 resolution with the High preset is particularly revealing, because the faster Nvidia graphics cards hit their limit earlier due to the increased CPU load. Even though neither the frame rates nor the frame rates over time show this at all, this graph indicates that the CPU, burdened by draw calls, sets a clear cap on performance.
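One hypothetical way to look for such a cap in frame-time logs is to check whether the fastest frames get slower as the pixel count rises. 2560x1440 has 16/9 (about 1.78) times the pixels of 1920x1080, so if the low-percentile frame time barely grows between the two resolutions, something other than pixel throughput, such as CPU-side draw-call submission, is likely setting the floor. The 5th-percentile floor, the 25% share threshold and all function names below are illustrative assumptions, not values from the test.

```python
import math
from typing import Sequence

# Pixel counts for the two resolutions discussed in the text.
PIXELS_1080P = 1920 * 1080
PIXELS_1440P = 2560 * 1440


def low_percentile(frame_times_ms: Sequence[float], pct: float = 5.0) -> float:
    """Nearest-rank percentile: roughly the floor set by the fastest frames."""
    ordered = sorted(frame_times_ms)
    rank = max(math.ceil(pct / 100.0 * len(ordered)), 1)
    return ordered[rank - 1]


def floor_growth(low_res_ms: Sequence[float], high_res_ms: Sequence[float],
                 pct: float = 5.0) -> float:
    """Factor by which the frame-time floor grows at the higher resolution."""
    return low_percentile(high_res_ms, pct) / low_percentile(low_res_ms, pct)


def suggests_non_gpu_cap(growth: float, pixel_ratio: float, share: float = 0.25) -> bool:
    """Hypothetical rule of thumb: flag a cap if the floor grows by less than
    `share` of the extra pixel load."""
    return (growth - 1.0) < share * (pixel_ratio - 1.0)


if __name__ == "__main__":
    ratio = PIXELS_1440P / PIXELS_1080P  # 16/9, about 1.78x the pixels
    capped = floor_growth([10.0] * 20, [10.5] * 20)   # hypothetical logs
    scaling = floor_growth([10.0] * 20, [16.0] * 20)  # hypothetical logs
    print(suggests_non_gpu_cap(capped, ratio))   # True
    print(suggests_non_gpu_cap(scaling, ratio))  # False
```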

Smoothness

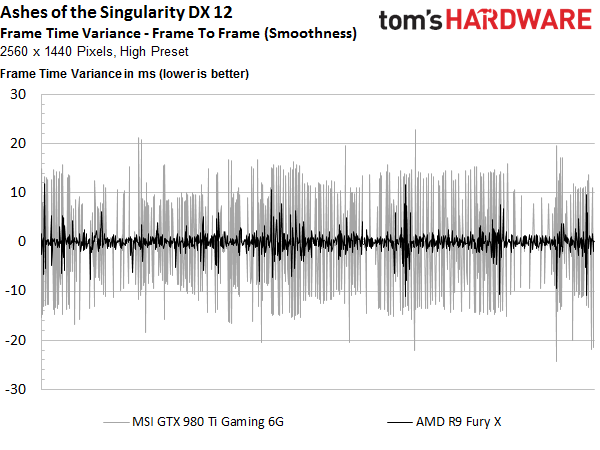

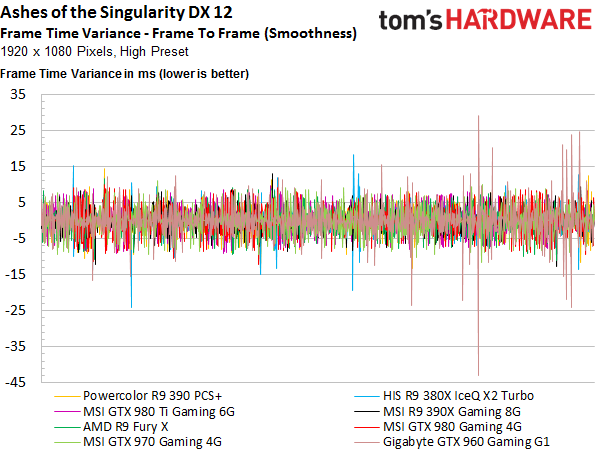

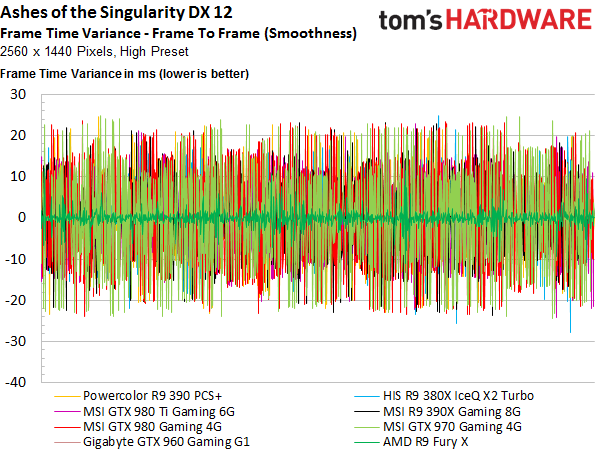

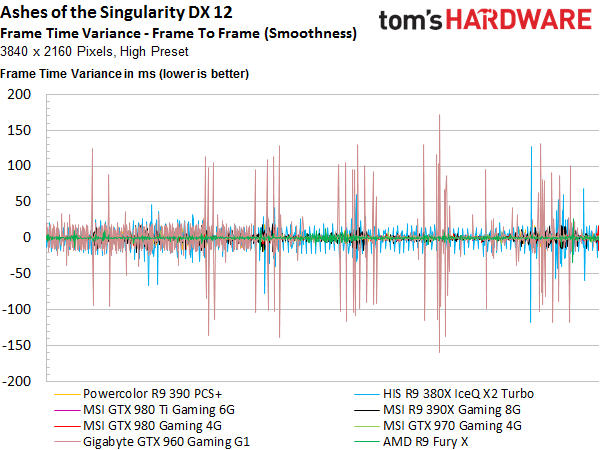

Finally, let's take a look at smoothness, that is, the relative time differences between consecutive frames. Viewing the benchmark results this way exposes any stuttering you might subjectively experience more clearly, without being influenced by the absolute rendering time.
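A minimal sketch of such a smoothness measure, assuming it is the absolute change between consecutive frame times divided by the earlier frame time (the article doesn't give its exact formula): because the difference is relative, a card running the same frame-time pattern at half the frame rate produces identical values, which is what keeps absolute rendering time out of the picture. `stutter_share` and its 25% threshold are hypothetical additions for summarizing the result.

```python
from typing import List, Sequence


def relative_frame_deltas(frame_times_ms: Sequence[float]) -> List[float]:
    """|t[i] - t[i-1]| / t[i-1] for each pair of consecutive frames."""
    return [abs(cur - prev) / prev for prev, cur in zip(frame_times_ms, frame_times_ms[1:])]


def stutter_share(frame_times_ms: Sequence[float], threshold: float = 0.25) -> float:
    """Share of frame-to-frame transitions whose relative change exceeds `threshold`."""
    deltas = relative_frame_deltas(frame_times_ms)
    if not deltas:
        return 0.0
    return sum(d > threshold for d in deltas) / len(deltas)


if __name__ == "__main__":
    fast = [10.0, 10.0, 20.0, 10.0]
    slow = [20.0, 20.0, 40.0, 20.0]  # same pattern at half the frame rate
    print(relative_frame_deltas(fast))  # [0.0, 1.0, 0.5]
    print(relative_frame_deltas(slow))  # [0.0, 1.0, 0.5]
```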

Using the same settings to examine the subjective user experience, AMD's Radeon R9 Fury X offers a calmer and smoother picture across the entire benchmark run. Because we also statistically analyzed the CPU and driver data, we'll see why this is the case shortly.


Frame Rates And Times Bottom Line

AMD's graphics cards do particularly well in scenes that have a lot of AI activity (and its accompanying compute load).

The older Radeon R9 390X is especially noteworthy in this context, since it actually plays in the league of Nvidia's GeForce GTX 980 Ti, not just that of the markedly slower non-Ti GTX 980. Similarly, an overclocked AMD Radeon R9 390 handily beats both Nvidia's overclocked GeForce GTX 970 and 980.

At Full HD, AMD's Radeon R9 380X does pretty well, whereas Nvidia's GeForce GTX 960 can't compete.

Of course, all of these results are based on a single benchmark, which isn't representative of most games. Still, it gives us an idea of where optimizations for asynchronous shading/compute might lead.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


In other words, DX12 is a business gimmick that doesn't translate to squat in real game scenarios, and I am glad I stayed on Windows 7... running CrossFire R9 390X.


FormatC Especially Hawaii / Grenada can benefit from asynchronous shading / compute (and your energy supplier). :)

17seconds An AMD-sponsored title that shows off the one and only part of DirectX 12 where AMD cards have an advantage. The key statement is: "But where are the games that take advantage of the technology?" Without that, Async Compute will quickly start to take the same road taken by Mantle. Remember that great technology?

FormatC

> An AMD-sponsored title

Really? Sponsoring and knowledge sharing are two different things. Nvidia was invited too. ;)

> Async Compute will quickly start to take the same road taken by Mantle

Are you sure? Most current titles and engines were designed long before Microsoft started on DirectX 12. You can already find early DirectX 12 render paths in a lot of common engines, and I'm sure that PhysX will die faster than DirectX 12. Mantle was the key feature that woke up MS, nothing more. And: it's async compute AND shading :)

turkey3_scratch Well, there's no denying that the 390X sure performs great in this game.

James Mason

> I'm sure that PhysX will die faster than DirectX 12.

Geez, PhysX has been around for so long now, and usually only the fanciest games try to make use of it. It seems pretty well adopted; it's just that not all games really need to add an extra layer of physics processing "just for the lulz."

Wisecracker Thanks for the effort, THG! Lotsa work in here.

What jumps out at me is how the GCN async compute frame output for the R9 380X/390X barely moves from 1080p to 1440p, despite roughly 75% more pixels. That's sumthin' right there.

It will be interesting to see how Pascal responds, and how Polaris might *up* AMD's GPU compute.

Neat stuff on the CPU, too. It would be interesting to see how going from an i5 to a Hyper-Threaded i7 plays out, and how the FX 8-cores (and 6-cores) handle the increased emphasis on parallelization.

You guys don't have anything better to do .... right? :)


For someone who runs CrossFire R9 390X (three cards), DX12 makes no difference in terms of performance. For all the BS Windows 10 brings, it's not worth *downgrading* to, considering that a lot of games under Windows 10 are simply broken or run like garbage, where there's no issue under Windows 7.


ohim

> An AMD-sponsored title that shows off the one and only part of DirectX 12 where AMD cards have an advantage. The key statement is: "But where are the games that take advantage of the technology?" Without that, Async Compute will quickly start to take the same road taken by Mantle. Remember that great technology?

Instead of making random assumptions about the future of DX12 and async shaders, you should first be mad at Nvidia for claiming they have full DX12 cards when that's not the case; the fact that Nvidia is trying hard to fix these issues through software tells a lot.

PS: it's so funny to see the 980 Ti being beaten by the 390X :)

cptnjarhead

> For someone who runs CrossFire R9 390X (three cards), DX12 makes no difference in terms of performance. For all the BS Windows 10 brings, it's not worth *downgrading* to, considering that a lot of games under Windows 10 are simply broken or run like garbage, where there's no issue under Windows 7.

There are no DX12 games available for review yet, so why would you expect games made for Windows 7 and DX11 to perform better under Windows 10 and DX12? Especially in "tri-Fire"? DX12 has significant advantages over DX11, so you should wait until these games actually come out before making assumptions about performance or about DX12's ability to increase it.

My games (FO4, GTA V and others) run better in Win10, and I have had zero problems. I think your issue is more driver-related, which is on AMD's side, not MS's operating system.

I'm on the Red team by the way.