Intel releases new tool to measure gaming image quality — AI tool measures impact of upscalers, frame gen, others; Computer Graphics Video Quality Metric now available on GitHub

A new dataset and companion AI model chart a path toward objectively quantifying the image quality of modern rendering techniques

Intel may be making it easier to objectively evaluate the image quality of modern games. A new AI-powered video quality metric, the Computer Graphics Video Quality Metric (CGVQM), is now available on GitHub as a PyTorch application.

A frame of animation is rarely rendered natively in today's games. Upscalers like DLSS, frame-generation techniques, and other methods can introduce a host of image quality issues, including ghosting, flicker, aliasing, and disocclusion artifacts. We frequently discuss these issues qualitatively, but assigning an objective score to how well those techniques perform in a given output frame is much harder.

Metrics like peak signal-to-noise ratio (PSNR) are commonly used to quantify video quality, but they have limitations and are prone to misuse.

One such potential misuse is evaluating real-time graphics output with PSNR, which is mainly meant for measuring the quality of lossy compression. Compression artifacts generally aren't a problem in real-time graphics, though, so PSNR alone can't account for all the issues mentioned above.
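To make that limitation concrete, here's a minimal Python sketch, assuming 8-bit frames stored as flat lists of pixel values (a simplification for illustration). It computes PSNR with the standard formula, 10·log10(MAX²/MSE), averages it per frame as is common for video, and shows that a steady brightness error and a flickering one of the same magnitude receive identical scores, even though the flicker would be far more noticeable in motion. The helper names are hypothetical and are not part of CGVQM.

```python
import math

def mse(ref, test):
    """Mean squared error between two equally sized frames (flat lists of pixels)."""
    return sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)

def psnr(ref, test, max_value=255.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE); identical frames score infinity."""
    err = mse(ref, test)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)

def mean_video_psnr(ref_frames, test_frames):
    """Average of per-frame PSNR scores; each frame is scored in isolation."""
    scores = [psnr(r, t) for r, t in zip(ref_frames, test_frames)]
    return sum(scores) / len(scores)

# Hypothetical 4-frame clip of 4-pixel, mid-gray frames.
reference = [[128, 128, 128, 128] for _ in range(4)]

# Distortion A: the same +8 brightness error on every frame (temporally stable).
stable = [[p + 8 for p in frame] for frame in reference]

# Distortion B: +8 on even frames, -8 on odd frames (visible flicker in motion).
flicker = [[p + (8 if i % 2 == 0 else -8) for p in frame]
           for i, frame in enumerate(reference)]

print(round(mean_video_psnr(reference, stable), 2))   # 30.07
print(round(mean_video_psnr(reference, flicker), 2))  # 30.07, identical score
```

Because every frame has the same mean squared error (64) in both cases, per-frame PSNR has no way to tell the two apart; capturing the difference requires looking across frames.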

To create a better tool for objectively evaluating the image quality of modern real-time graphics output, researchers at Intel have taken a twofold approach, discussed in a paper, "CGVQM+D: Computer Graphics Video Quality Metric and Dataset," by Akshay Jindal, Nabil Sadaka, Manu Mathew Thomas, Anton Sochenov, and Anton Kaplanyan.

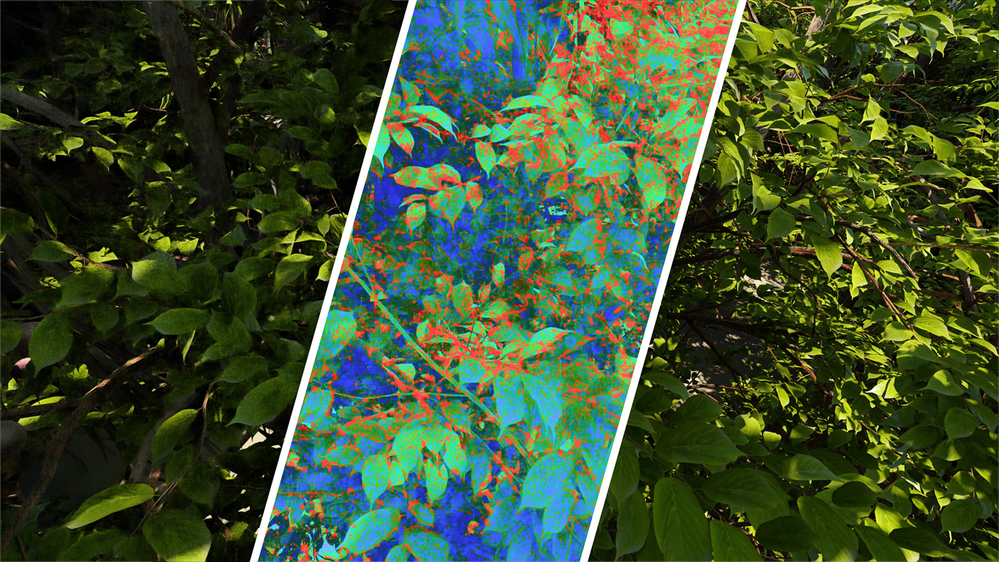

The researchers first created a new video dataset, the Computer Graphics Video Quality Dataset (CGVQD), containing a variety of image quality degradations introduced by modern rendering techniques. The authors considered distortions arising from path tracing, neural denoising, neural supersampling techniques (like FSR, XeSS, and DLSS), Gaussian splatting, frame interpolation, and adaptive variable-rate shading.

Second, the researchers trained an AI model to produce a quality rating that accounts for this wide range of distortions: the aforementioned Computer Graphics Video Quality Metric, or CGVQM. Using an AI model makes rating the quality of real-time graphics output a more scalable exercise.


To ensure that their AI model's judgments correlated well with those of humans, the researchers first showed their new video dataset to a group of human observers to establish a ground truth for the perceived severity of the distortions it contains. The observers rated the distortions in each video on a scale ranging from "imperceptible" to "very annoying."
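The article doesn't spell out the full rating scale or how individual ratings were combined. The labels match the five-grade impairment scale from ITU-R BT.500, so this minimal sketch assumes that scale and the conventional mean opinion score (MOS), the average of all observers' numeric ratings for a clip. The clip names and ratings are invented for illustration.

```python
from statistics import mean

# Assumed five-grade impairment scale (ITU-R BT.500 style); the paper's exact
# scale and aggregation method may differ.
IMPAIRMENT_SCALE = {
    "imperceptible": 5,
    "perceptible but not annoying": 4,
    "slightly annoying": 3,
    "annoying": 2,
    "very annoying": 1,
}

def mean_opinion_score(labels):
    """Map one clip's verbal ratings to numbers and average them (MOS)."""
    return mean(IMPAIRMENT_SCALE[label] for label in labels)

# Hypothetical ratings from five observers for two distorted clips.
ratings = {
    "clip_ghosting": ["annoying", "slightly annoying", "annoying",
                      "very annoying", "annoying"],
    "clip_upscaled": ["imperceptible", "perceptible but not annoying",
                      "imperceptible", "imperceptible",
                      "perceptible but not annoying"],
}

for clip, labels in ratings.items():
    print(clip, mean_opinion_score(labels))
```

Here the ghosting clip averages 2 ("annoying") and the upscaled clip 4.6; per-clip scores like these are the human targets a learned metric is trained to predict.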

With this quality baseline in hand, the researchers set out to train a neural network that could identify the same distortions, ideally performing on par with human observers. They chose a 3D convolutional neural network (CNN) built on a residual neural network (ResNet) design, specifically the 3D-ResNet-18 model, which they trained and calibrated to recognize the distortions of interest.
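For readers unfamiliar with the naming, the "18" in 3D-ResNet-18 counts weighted layers. This sketch assumes the standard ResNet-18 layout (four stages of two basic residual blocks, each block holding two convolutions), which public 3D variants such as torchvision's r3d_18 also follow; it does not reproduce CGVQM's calibrated configuration. It also shows how a 3×3×3 kernel carries three times the weights of a 3×3 one.

```python
# Assumed standard ResNet-18 stage layout, shared by 2D and 3D variants:
# (output channels, number of basic residual blocks) per stage.
STAGES = [(64, 2), (128, 2), (256, 2), (512, 2)]
CONVS_PER_BASIC_BLOCK = 2  # each basic block: conv -> conv, plus a skip connection

def count_weighted_layers(stages):
    """Stem conv + convs inside residual blocks + final fully connected layer.

    Shortcut (downsampling) convolutions are conventionally not counted.
    """
    stem = 1
    body = sum(blocks * CONVS_PER_BASIC_BLOCK for _, blocks in stages)
    head = 1
    return stem + body + head

def conv3d_weights(in_ch, out_ch, kernel=(3, 3, 3)):
    """Weights in one 3D convolution without bias: in * out * kt * kh * kw."""
    kt, kh, kw = kernel
    return in_ch * out_ch * kt * kh * kw

print(count_weighted_layers(STAGES))     # 18
print(conv3d_weights(64, 64))            # 110592 (3x3x3 kernel)
print(conv3d_weights(64, 64, (1, 3, 3))) # 36864 (2D-equivalent 3x3 kernel)
```

The extra temporal tap in every kernel is what lets the network look across frames, at roughly three times the weights per layer.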

According to the paper, the choice of a 3D network was crucial to the metric's strong performance. A 3D network can consider not only spatial (2D) patterns, such as the grid of pixels in an input frame, but also temporal patterns that unfold across consecutive frames.
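Here's a toy illustration of why that third dimension matters, written in plain Python rather than CGVQM's PyTorch code. A 3D convolution kernel can span several frames, so a kernel that differences consecutive frames responds to flicker that a purely spatial kernel, applied frame by frame, cannot see. The kernels and clips are hand-picked for clarity; a trained network like 3D-ResNet-18 learns its own kernels.

```python
def conv3d_valid(video, kernel):
    """Valid 3D cross-correlation (what deep-learning 'convolution' layers compute).

    video:  nested list indexed [t][y][x]
    kernel: nested list indexed [dt][dy][dx]
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += kernel[dt][dy][dx] * video[t + dt][y + dy][x + dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out

def max_abs(volume):
    """Strongest response anywhere in a [t][y][x] output volume."""
    return max(abs(v) for plane in volume for row in plane for v in row)

def flat_clip(levels, size=3):
    """Hypothetical clip: frame t is a size x size field of constant brightness levels[t]."""
    return [[[float(level)] * size for _ in range(size)] for level in levels]

steady = flat_clip([100, 100, 100, 100])
flickering = flat_clip([100, 110, 100, 110])

spatial_edge = [[[-1.0, 1.0]]]       # 1 frame x 1 row x 2 columns: horizontal gradient
temporal_diff = [[[-1.0]], [[1.0]]]  # 2 frames x 1 x 1: change between consecutive frames

print(max_abs(conv3d_valid(flickering, spatial_edge)))   # 0.0: each frame looks clean
print(max_abs(conv3d_valid(steady, temporal_diff)))      # 0.0: nothing changes over time
print(max_abs(conv3d_valid(flickering, temporal_diff)))  # 10.0: flicker detected
```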

According to the paper, CGVQM outperforms practically every other comparable quality metric tested, at least on the researchers' own dataset. The more computationally intensive CGVQM-5 model trails only the human baseline on the CGVQD videos, while the lighter CGVQM-2 still places third among the tested models.
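The article doesn't say exactly how these rankings were computed. Quality metrics are commonly judged by how well their scores agree with human ratings, so this sketch assumes Spearman's rank correlation coefficient, which checks whether a metric orders clips the same way people do. All scores below are invented for illustration.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Hypothetical human MOS for five clips and two metrics' predicted scores.
human_mos = [4.6, 2.0, 3.1, 1.4, 3.8]
metric_a = [0.91, 0.35, 0.60, 0.20, 0.72]  # orders clips exactly like the humans
metric_b = [0.80, 0.70, 0.40, 0.30, 0.85]  # orders some clips differently

print(round(spearman(human_mos, metric_a), 3))
print(round(spearman(human_mos, metric_b), 3))
```

Metric A scores a perfect 1.0 and metric B 0.8; a table of such correlations across many clips is one typical way to rank competing metrics.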

The researchers further demonstrate that their model not only identifies and localizes distortions well within their own dataset (CGVQD), but also generalizes to videos that aren't part of its training set.

That ability to generalize is critical if the model is to become a broadly useful tool for evaluating image quality in real-time graphics applications. While CGVQM-2 and CGVQM-5 didn't lead across the board on other datasets, they remained strongly competitive across a wide range of them.

The paper leaves open several possible routes to improving this neural-network-driven approach to evaluating real-time graphics output. One is a transformer architecture, which could further improve performance. The researchers explain that they chose a 3D-CNN architecture because running a transformer network over their video data would have required a massive increase in compute resources.
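The article doesn't quantify that compute gap, but a back-of-the-envelope sketch shows why it grows so quickly. It assumes a hypothetical transformer that splits video into non-overlapping 2-frame, 16×16-pixel patches ("tubelets") and applies full self-attention; that design is our assumption, not the paper's. Attention compares every token with every other token, so its cost grows with the square of the token count, while a convolution's cost grows roughly linearly with the number of input pixels.

```python
def token_count(frames, height, width, tubelet=(2, 16, 16)):
    """Non-overlapping spatio-temporal patches ("tubelets") per clip.

    The 2x16x16 tubelet size is an illustrative assumption; edge pixels that
    don't fill a whole patch are simply dropped.
    """
    t, h, w = tubelet
    return (frames // t) * (height // h) * (width // w)

def attention_pairs(tokens):
    """Full self-attention scores every token against every token: N^2 pairs."""
    return tokens * tokens

def pixel_count(frames, height, width):
    """Input size; a convolution's cost grows roughly linearly with this."""
    return frames * height * width

small = (8, 540, 960)     # 8 frames at 960x540
large = (16, 1080, 1920)  # 16 frames at 1920x1080

for clip in (small, large):
    n = token_count(*clip)
    print(f"{clip}: {pixel_count(*clip):,} pixels, {n:,} tokens, "
          f"{attention_pairs(n):,} attention pairs")

pixel_growth = pixel_count(*large) / pixel_count(*small)
pair_growth = attention_pairs(token_count(*large)) / attention_pairs(token_count(*small))
print(f"pixels x{pixel_growth:.0f}, attention pairs x{pair_growth:.0f}")
```

Under these assumptions, doubling the frame count and the resolution in each dimension multiplies the pixel count by 8 but the attention pairs by about 66 (7,920 tokens versus 64,320).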

The researchers also leave open the possibility of incorporating information like optical flow vectors to refine the evaluation. Even in its present state, however, CGVQM's strong results suggest it's an exciting advance in evaluating real-time graphics output.
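The paper only names optical flow as a possible future input, so the following is a hypothetical sketch of the general idea rather than anything in CGVQM. If per-pixel motion vectors are known, the previous frame can be warped into alignment with the current one before the two are compared. Legitimate motion then stops counting as temporal error, and only leftover differences (such as flicker or ghosting) remain. The sketch assumes integer-pixel flow; real optical flow is fractional and needs interpolation.

```python
def warp_previous(prev, flow):
    """Backward-warp the previous frame using integer per-pixel flow.

    flow[y][x] = (dy, dx) means pixel (y, x) in the current frame came from
    (y - dy, x - dx) in the previous frame. Sources outside the frame
    (disocclusions) are marked None.
    """
    h, w = len(prev), len(prev[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = flow[y][x]
            sy, sx = y - dy, x - dx
            row.append(prev[sy][sx] if 0 <= sy < h and 0 <= sx < w else None)
        out.append(row)
    return out

def temporal_error(curr, warped):
    """Mean absolute difference over pixels that have a valid warped source."""
    diffs = [abs(c - p)
             for c_row, p_row in zip(curr, warped)
             for c, p in zip(c_row, p_row) if p is not None]
    return sum(diffs) / len(diffs)

# Hypothetical frames: a bright object moves one pixel to the right.
prev = [[0, 9, 0, 0],
        [0, 9, 0, 0]]
curr = [[0, 0, 9, 0],
        [0, 0, 9, 0]]
motion = [[(0, 1)] * 4 for _ in range(2)]     # everything shifted right by one pixel
no_motion = [[(0, 0)] * 4 for _ in range(2)]  # naive frame-to-frame comparison

print(temporal_error(curr, warp_previous(prev, no_motion)))  # 4.5: motion looks like error
print(temporal_error(curr, warp_previous(prev, motion)))     # 0.0: motion compensated
```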


As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.


dalek1234: Doesn't Intel have anything better to do, like design and manufacture a CPU that doesn't suck?

Dementoss: I don't need an Intel tool to tell me whether I think a game looks good on my monitor.

rluker5: I'm glad some company is trying to standardize image quality measurements in real time.

Otherwise people will just argue apples and oranges and progress will be slow.

Gururu: You all are missing the point. It will greatly assist game developers to correct for quality anomalies when using MFR or upscaling.

-Fran-: This is great news!

We need AMD, nVidia and Intel to keep tabs on each other as much as possible so we, the consumers, benefit from their "checks". Currently, AMD hasn't had the will (or, being incredibly charitable: resources) to create a similar thing. Not even the community has come up with something like that for assessing how "good" a rendered image is.

I remember Maxon used to do that with OGL accuracy tests, but that was dropped after CB15, I think.

Good on you Intel and thanks.

Regards.

derekullo: 1990s - 1024 × 768

2010s - 1080p

2020 - 2160p

2024 - 720p upscaled to 2160p with every other frame being fake

We've come full circle!

thestryker, replying to Gururu ("You all are missing the point. It will greatly assist game developers to correct for quality anomalies when using MFR or upscaling."):

I agree and really hope they take advantage of this to do just that.

bill001g, replying to Dementoss ("I don't need an Intel tool to tell me whether I think a game looks good on my monitor."):

A huge number of people do. All they seem to see is the frame count number in the corner. Go watch some videos where they point out what artifacts from rendering look like. Once you know what to look for, you see things you kinda ignored before. The problem is there is no simple number for the quality of the image that you can brag about on social media.