How to Build a Raspberry Pi Object Identification Machine

We can train our Raspberry Pi to identify objects, including other Pis.

In this tutorial, we will train our Raspberry Pi to identify other Raspberry Pis (or other objects of your choice) with Machine Learning (ML). Why is this important? An example of an industrial application for this type of ML is identifying defects in circuit boards. As circuit boards exit the assembly line, a machine can be trained to identify a defective circuit board for troubleshooting by a human.

We have discussed ML and Artificial Intelligence in previous articles, including facial recognition and face mask identification. In those projects, all training images were stored locally on the Pi, and model training took a long time because it was also performed on the Pi. In this article, we’ll use a web platform called Edge Impulse to create and train our model, which takes the training workload off our Pi. Another advantage of Edge Impulse is the ease of uploading training images, which can be done from a smartphone (without an app).

We will use BalenaCloudOS instead of the standard Raspberry Pi OS since the folks at Balena have pre-built an API call to Edge Impulse. The previous facial recognition and face mask identification tutorials also required tedious command line package installs and Python code. This project eliminates all terminal commands and instead uses an intuitive GUI.

What You’ll Need

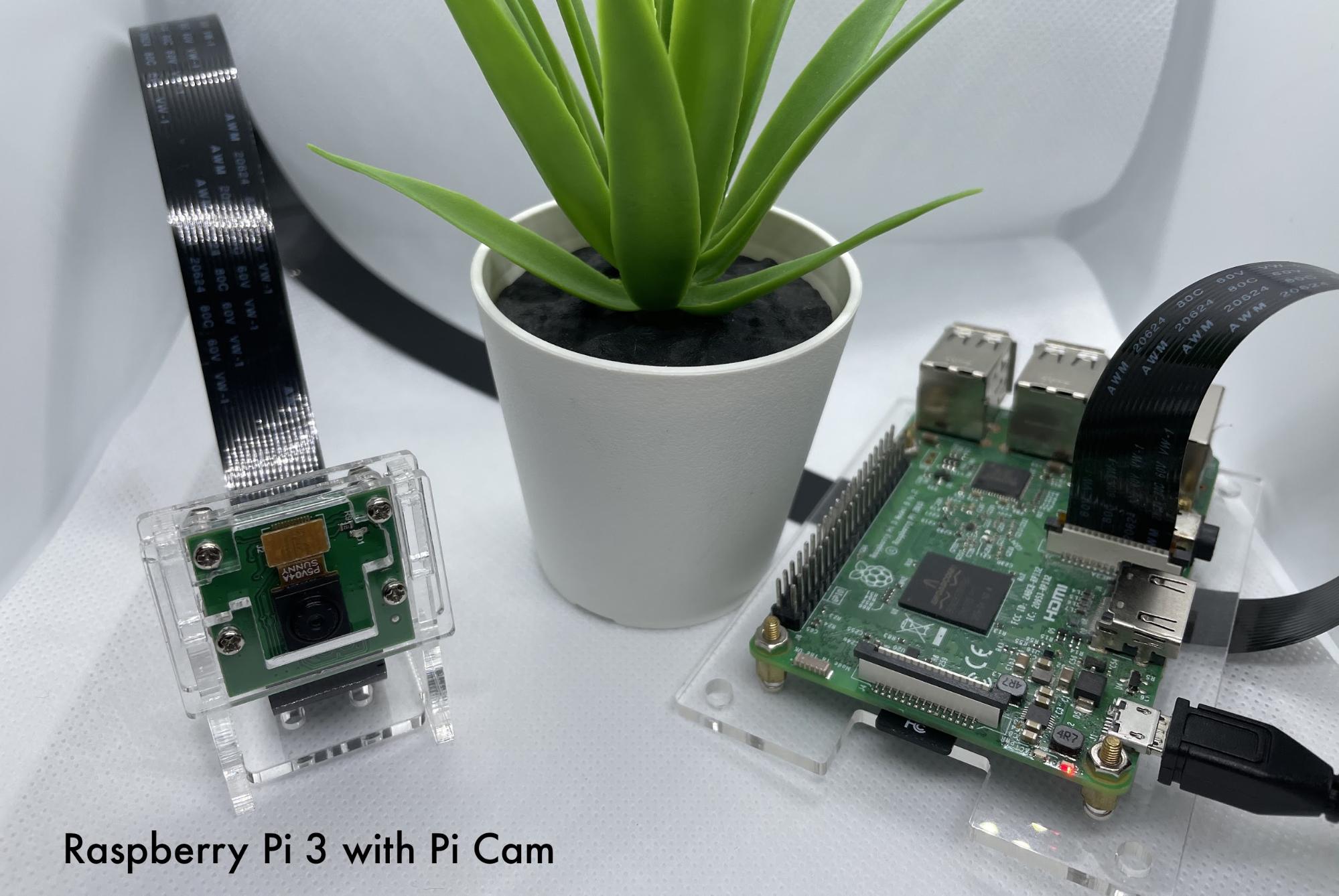

- Raspberry Pi 4, Raspberry Pi 400, or Raspberry Pi 3

- 8 GB (or larger) microSD card

- Raspberry Pi Camera, HQ Camera, or USB webcam

- Power Supply for your Raspberry Pi

- Your smartphone for taking photos

- Windows, Mac or Chromebook

- Objects for classification

Notes:

- If you are using a Raspberry Pi 400, you will need a USB webcam as the Pi 400 does not have a ribbon cable interface.

- You do NOT need a monitor, mouse, or keyboard for your Raspberry Pi in this project.

- Timing: Plan for at least 1 to 2 hours to complete this project.

Create and Train the Model in Edge Impulse

1. From a browser window on your desktop or laptop (Windows, Mac, or Chromebook), go to Edge Impulse and create a free account (or log in).

Data Acquisition

2. Select Data Acquisition from the menu bar on the left.

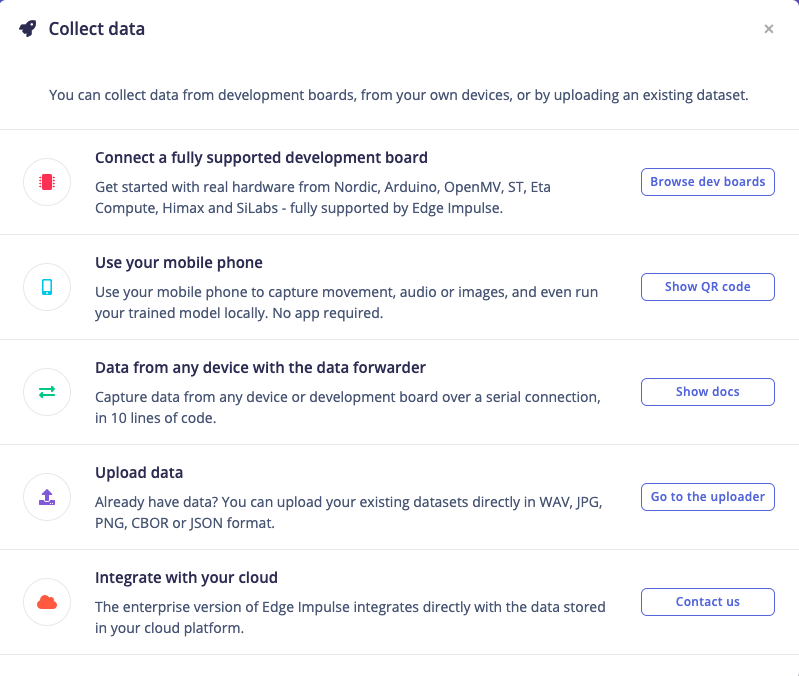

3. Upload photos from your desktop or scan a QR code with your smartphone and take photos. In this tutorial we’ll opt for taking photos with our smartphone.

4. Select "Show QR code" and a QR code should pop up on your screen.

5. Scan the QR code with your phone’s camera app.

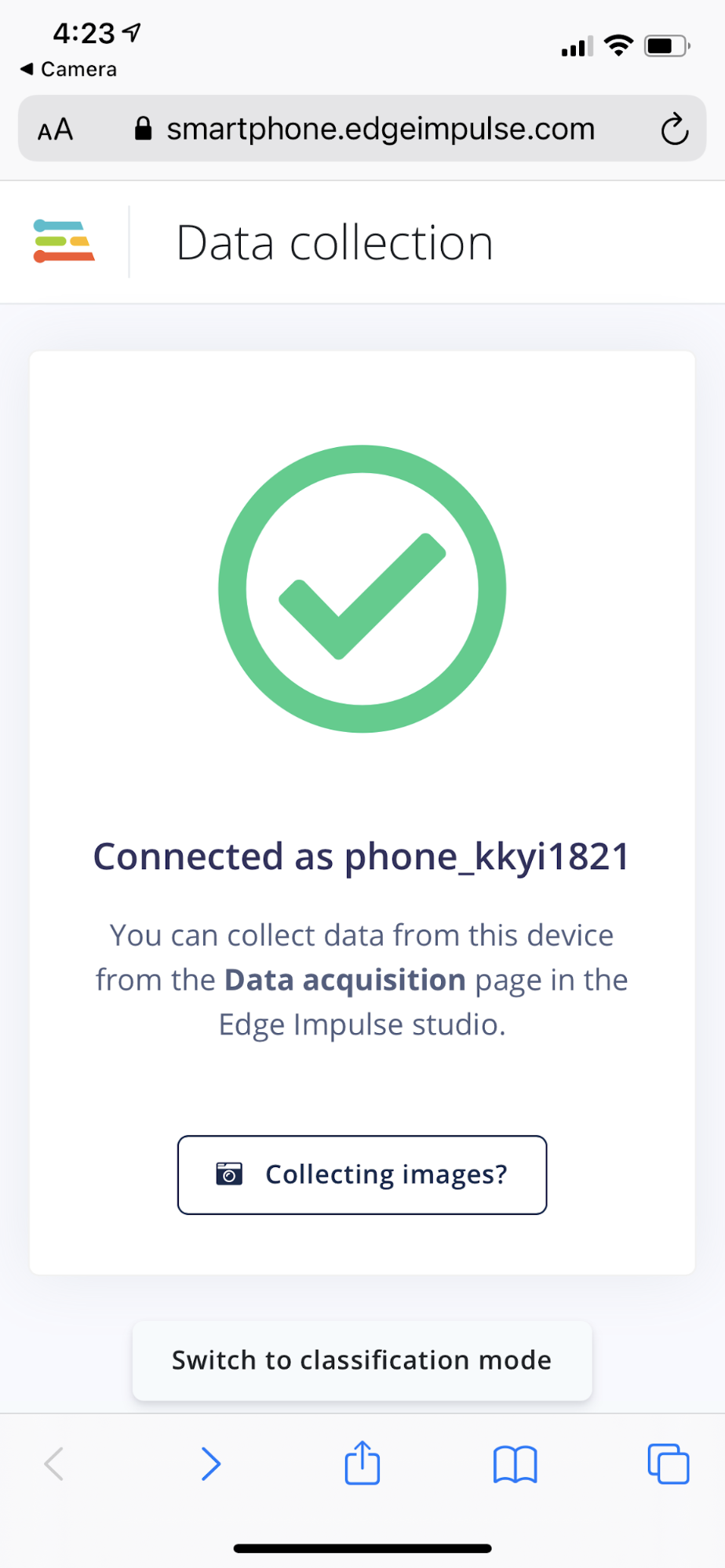

6. Select Open in browser and you’ll be taken to a data collection website. You will not need to download an app to collect images.

7. Accept permissions on your smartphone and tap "Collecting images?" in your phone’s browser screen.

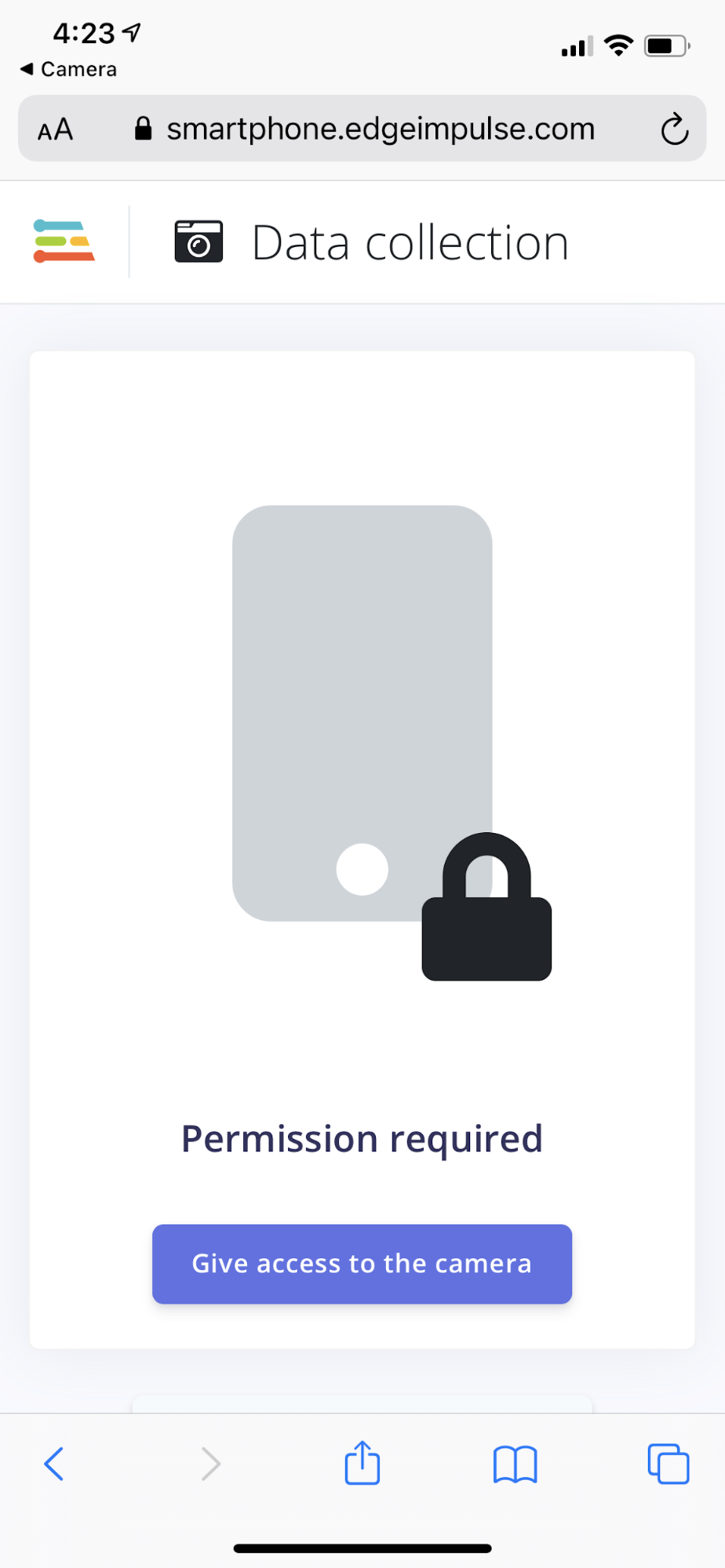

8. If prompted for permissions, tap the "Give access to the camera" button and allow access on your device.

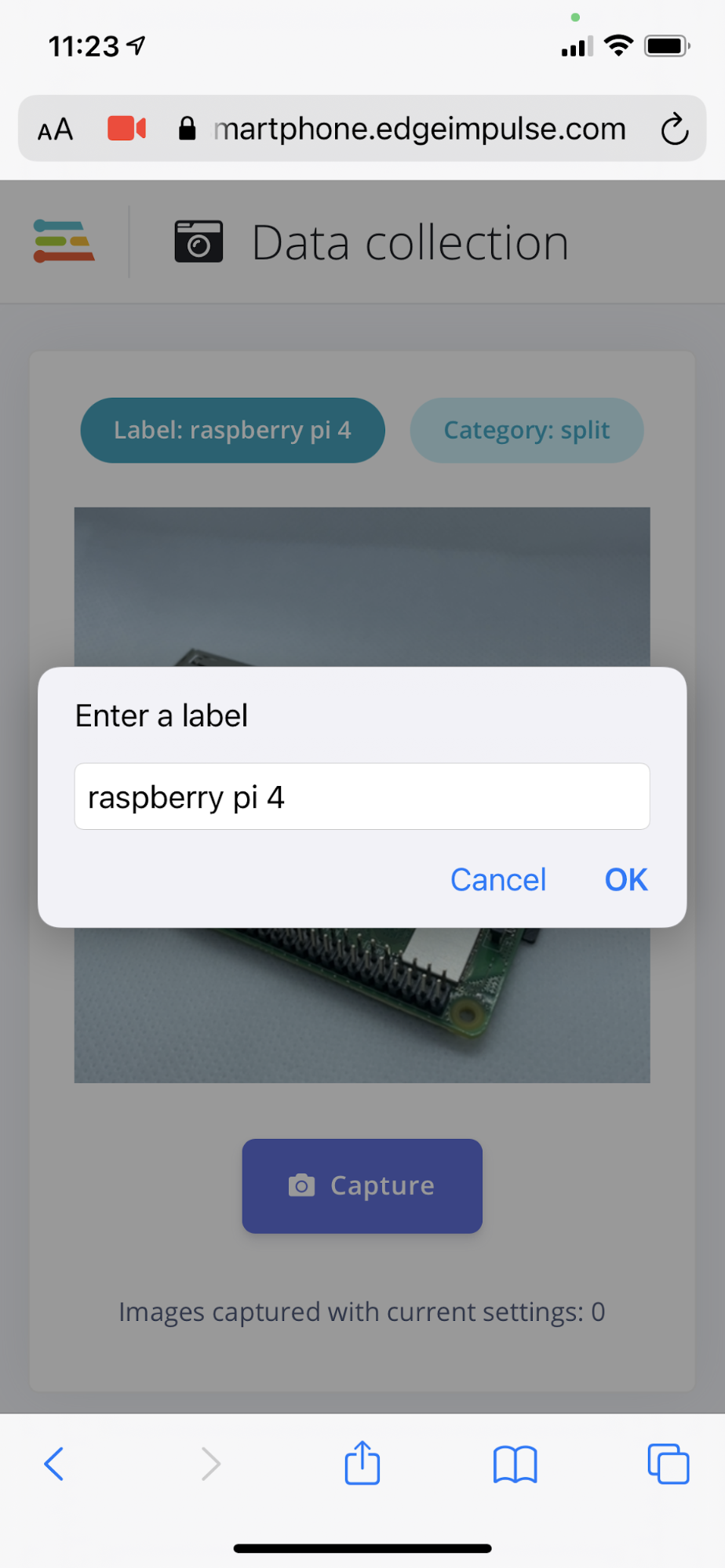

9. Tap "Label" and enter a tag for the object you will take photos of.

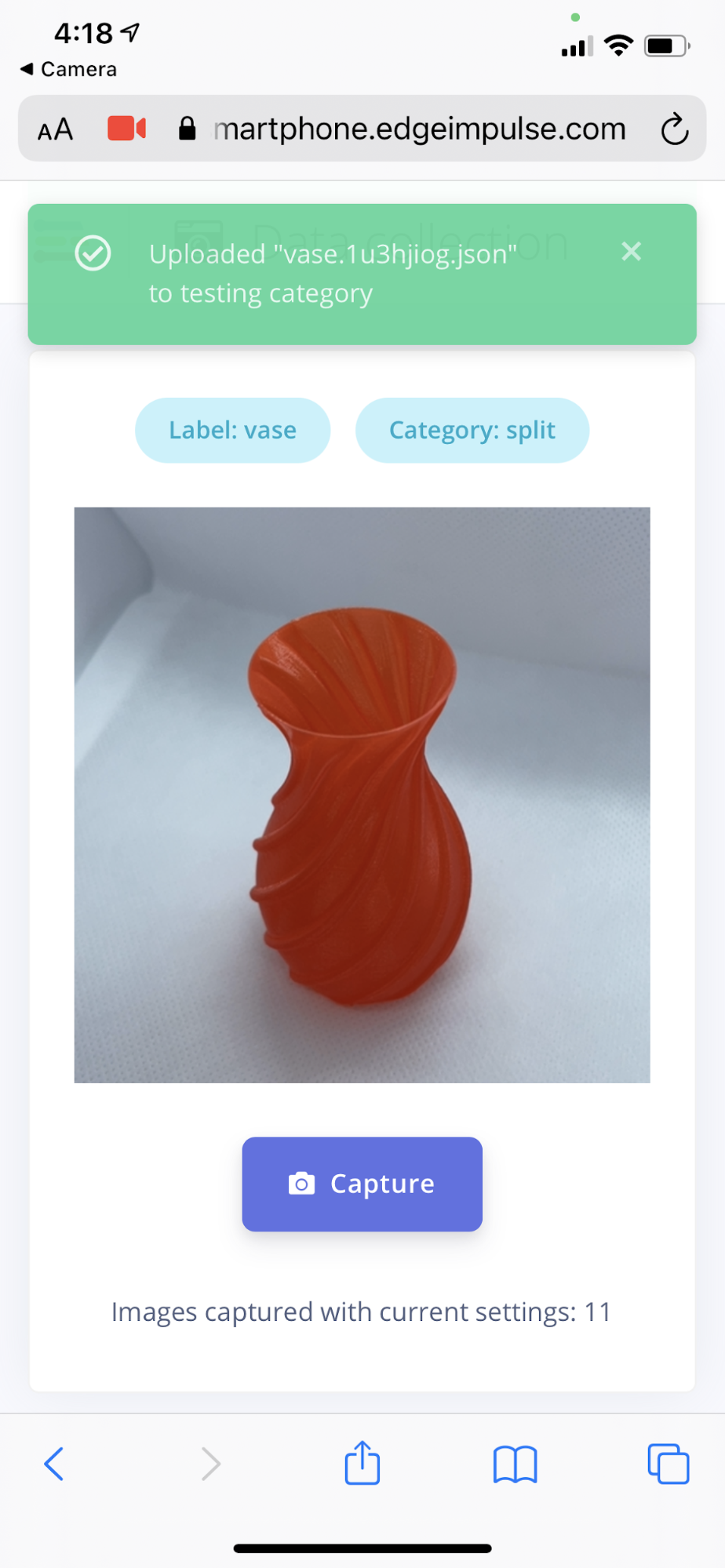

10. Take 30-50 photos of your item at various angles. Some photos will be used for training and other photos will be used for testing the model. Edge Impulse automatically splits photos between training and testing.

11. Repeat the process of entering a label for the next object and taking 30-50 photos per object until you have at least 3 objects complete. We recommend 3 to 5 identified objects for your initial model. You will have an opportunity to re-train the model with more photos and/or types of objects later in this tutorial.

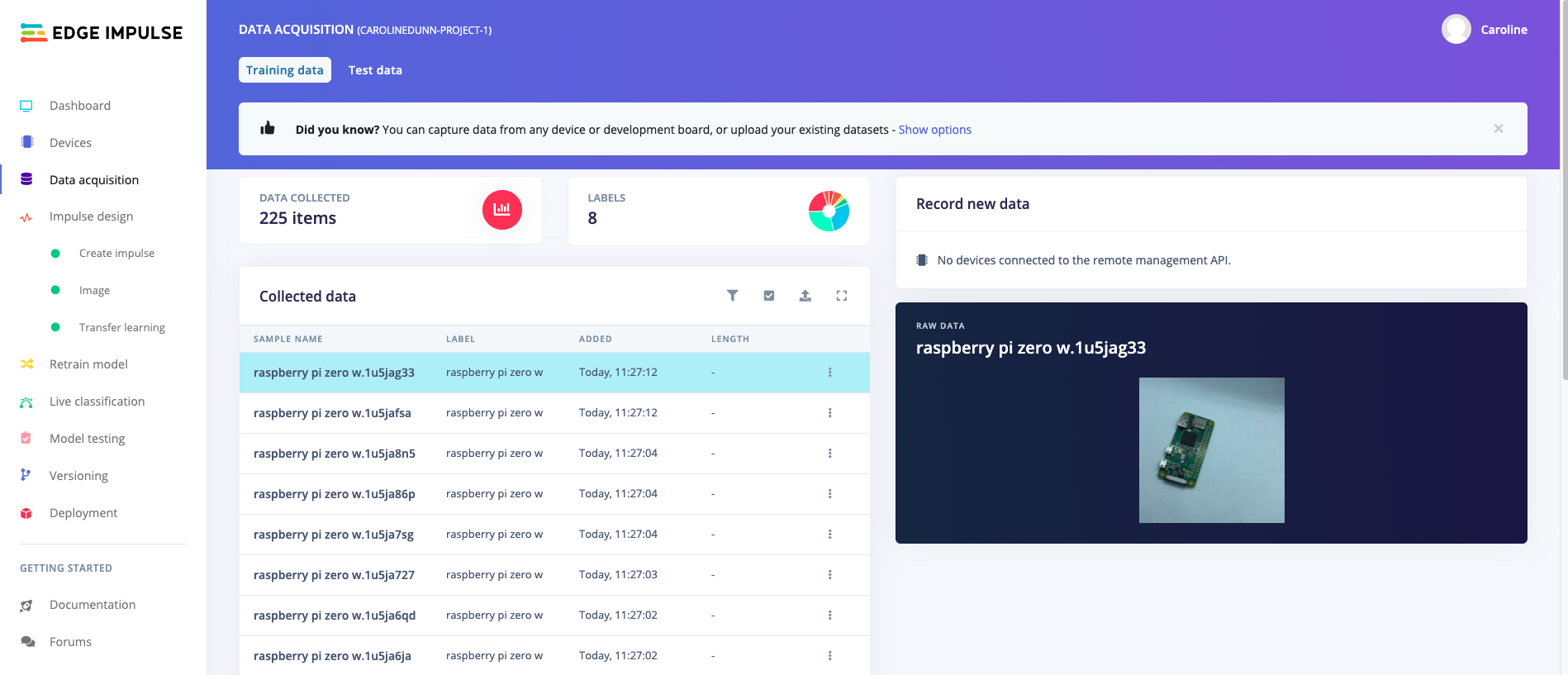

In the "Data Acquisition" tab of the Edge Impulse browser window, you should now see the total number of photos taken (or uploaded) and the number of labels (types of objects) you have classified. (You may need to refresh the tab to see the update.) Optional: You can click on any of the collected data samples to view the uploaded photo.
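Edge Impulse performs the training/testing split for you. As a rough illustration of the idea only (not Edge Impulse's actual implementation), the sketch below shuffles a list of photo file names and holds some back for testing. The 80/20 ratio, the fixed seed, the file names, and the `split_dataset` helper are all assumptions made for this example.

```python
import random


def split_dataset(samples, test_fraction=0.2, seed=42):
    """Shuffle samples and split them into (training, testing) lists.

    test_fraction and seed are illustrative assumptions, not values
    taken from Edge Impulse.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical file names for 40 photos of one object.
photos = [f"pi4_{i:02d}.jpg" for i in range(40)]
train, test = split_dataset(photos)
print(len(train), "training photos,", len(test), "testing photos")
```

Holding back photos the model never sees during training is what makes the later "Model testing" accuracy figure meaningful.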

Impulse Design

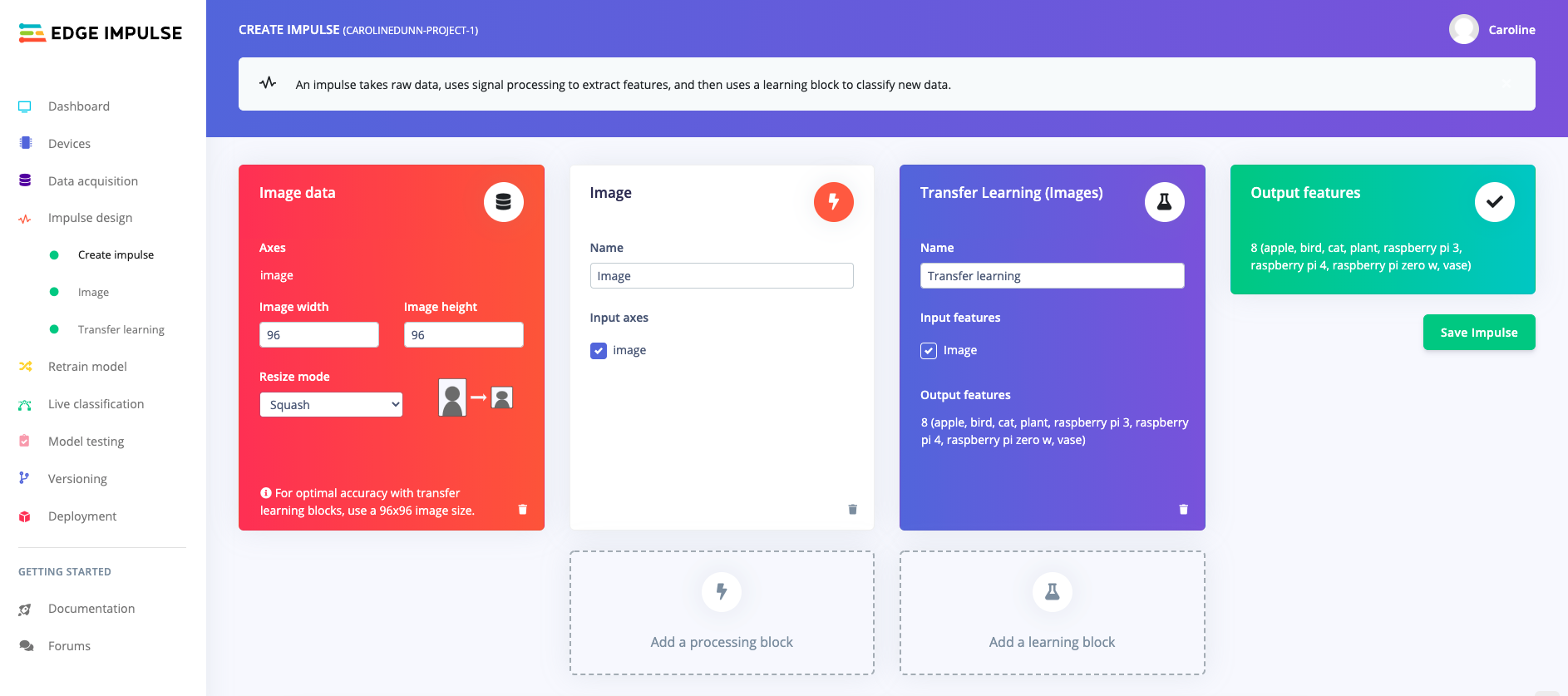

12. Click "Create impulse" from "Impulse design" in the left column menu.

13. Click "Add a processing block" and select "Image" to add Image to the 2nd column from the left.

14. Click "Add a learning block" and select "Transfer Learning."

15. Click the "Save Impulse" button on the far right.

16. Click "Image" under "Impulse design" in the left menu column.

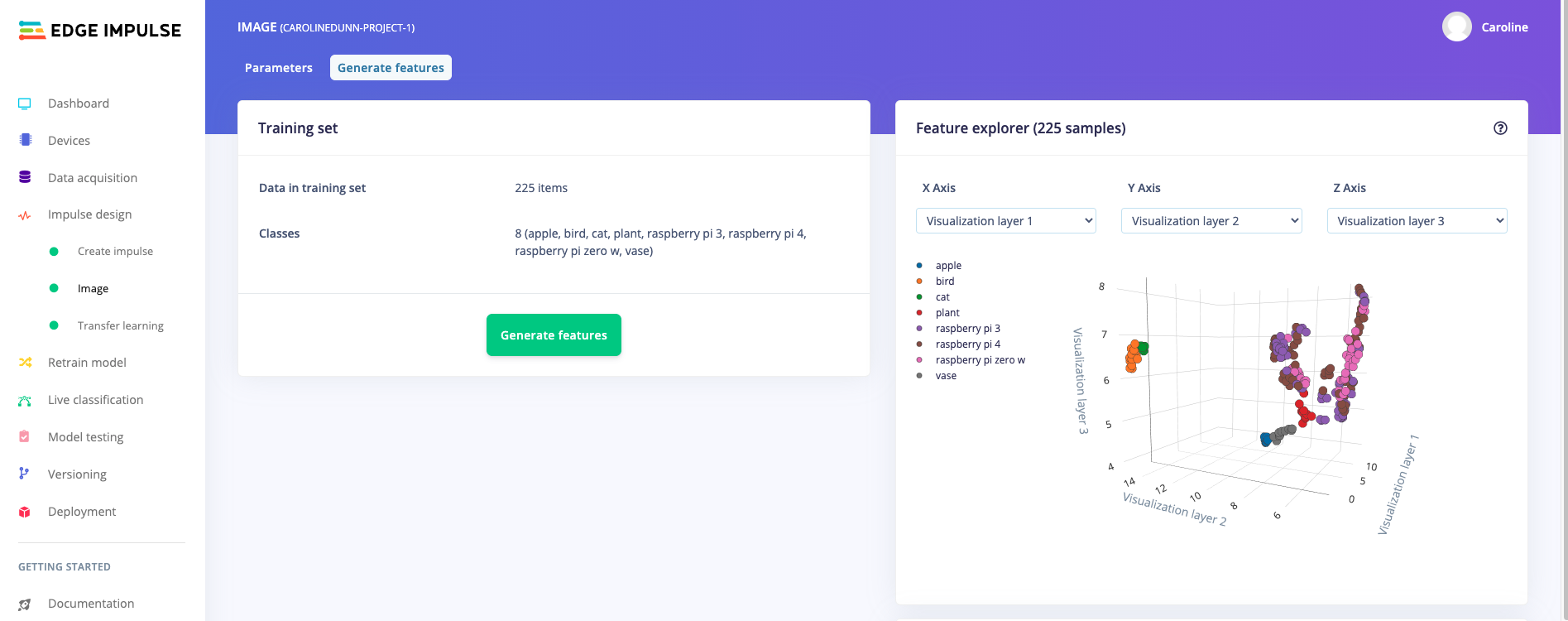

17. Select "Generate features" to the right of "Parameters" near the top of the page.

18. Click the "Generate features" button in the lower part of the "Training set" box. This could take 5 to 10 minutes (or longer) depending on how many images you have uploaded.

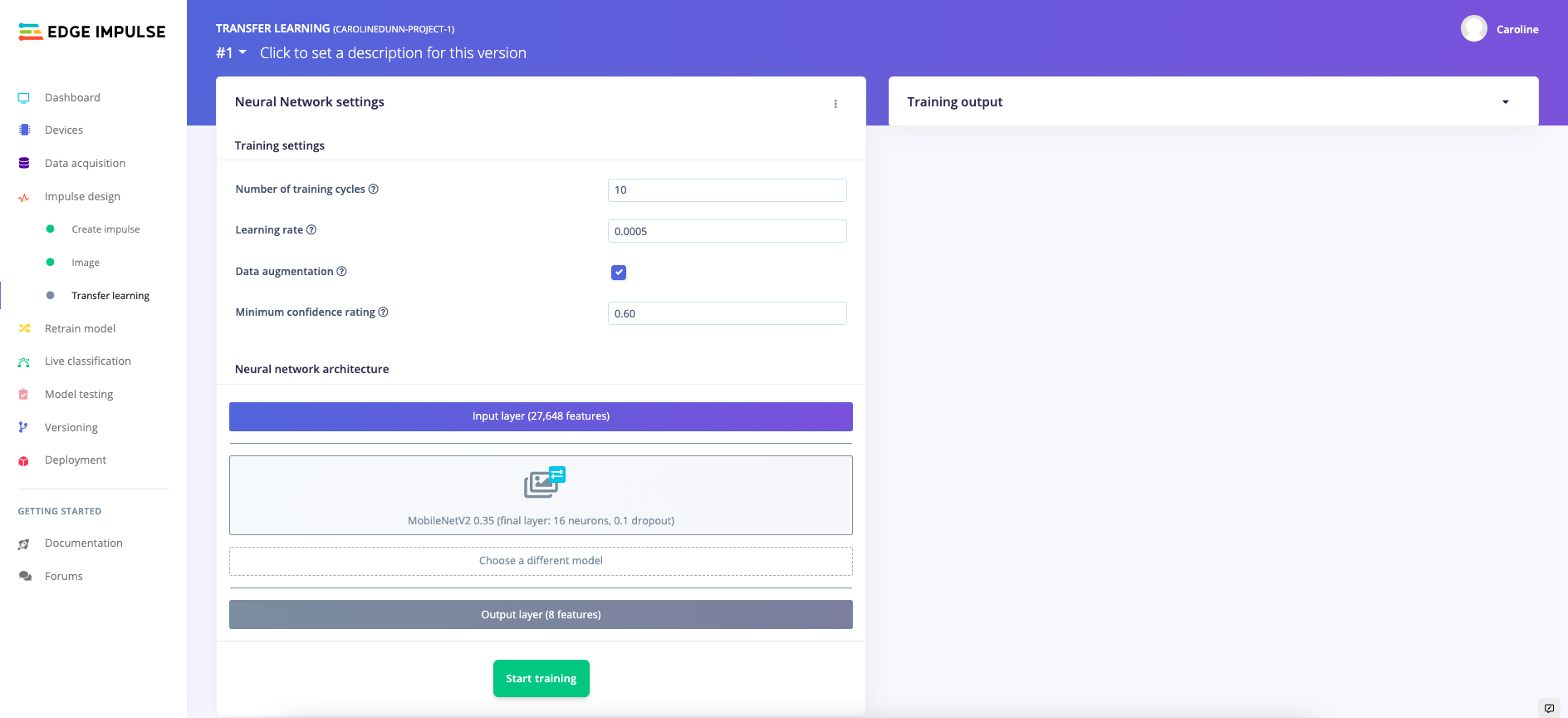

19. Select "Transfer learning" within "Impulse design," set your Training settings (keep defaults, check "Data augmentation" box), and click "Start training." This step will also take 5 minutes or more depending on your amount of data.

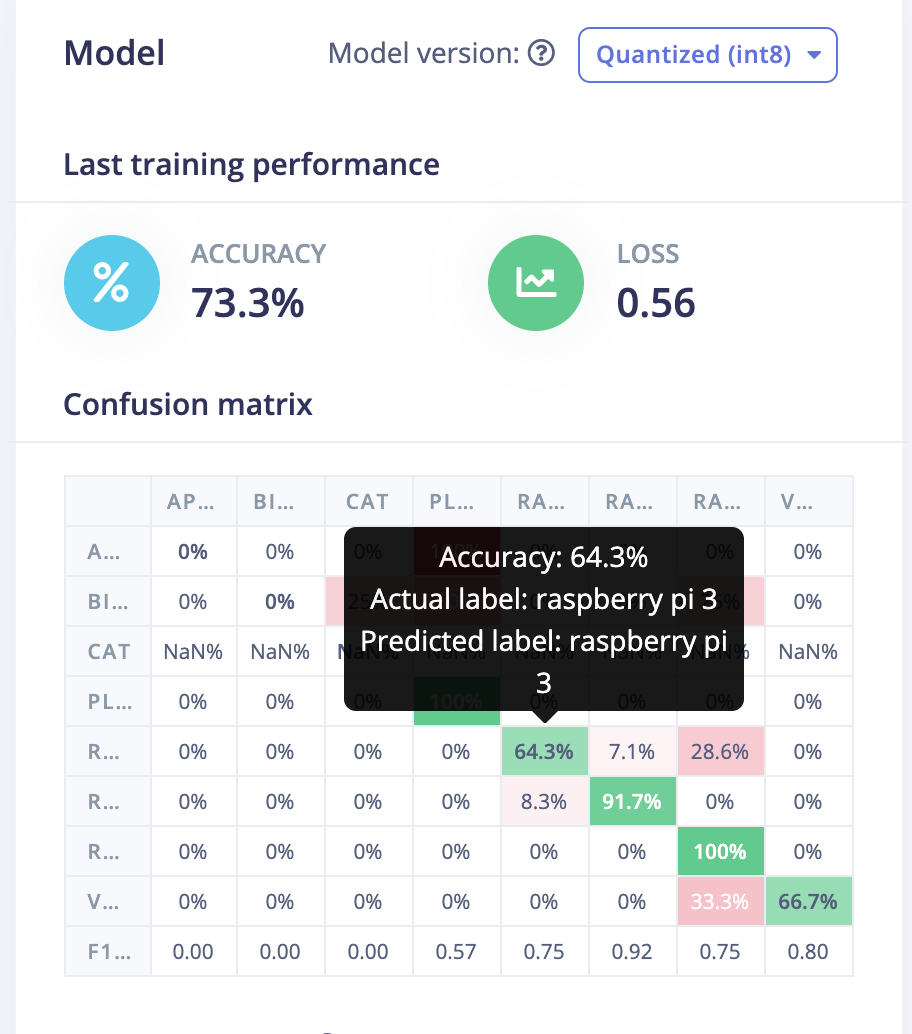

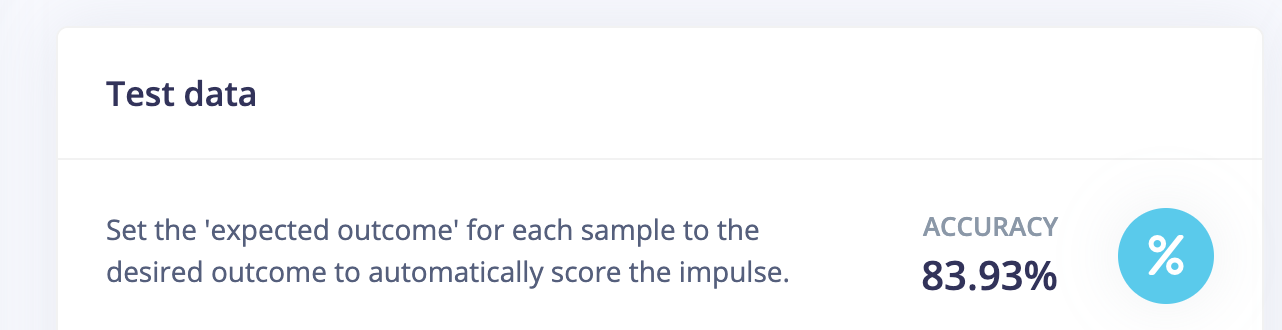

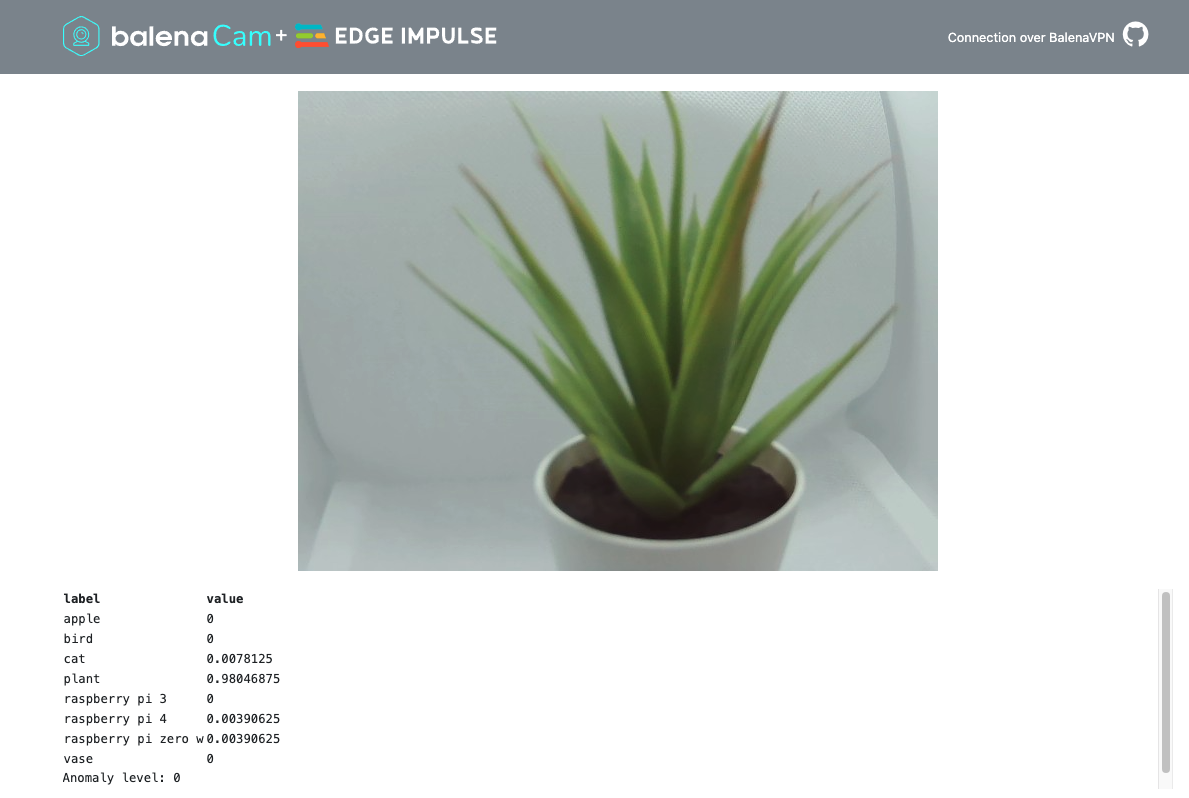

After running the training algorithm, you’ll be able to view the predicted accuracy of the model. For example, in this model, the algorithm correctly identifies a Raspberry Pi 3 only 64.3% of the time and misidentifies a Pi 3 as a Pi Zero 28.6% of the time.
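Each row of the results table (a confusion matrix) shows how the validation photos of one object were classified. Here is a minimal sketch of how such percentages arise, assuming 14 validation photos of the Pi 3. The counts are hypothetical, chosen only because they reproduce the 64.3% and 28.6% figures quoted above; the "Pi 4" entry and the `row_percentages` helper are likewise assumptions.

```python
def row_percentages(counts):
    """Convert one confusion-matrix row of counts into percentages."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("row has no samples")
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Hypothetical counts: how 14 photos whose true label is "Pi 3" were predicted.
pi3_row = {"Pi 3": 9, "Pi Zero": 4, "Pi 4": 1}
print(row_percentages(pi3_row))
# {'Pi 3': 64.3, 'Pi Zero': 28.6, 'Pi 4': 7.1}
```

With so few validation photos per object, a single misclassified photo shifts the percentage by several points, which is one reason adding more photos tends to give a more trustworthy accuracy figure.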

Model Testing

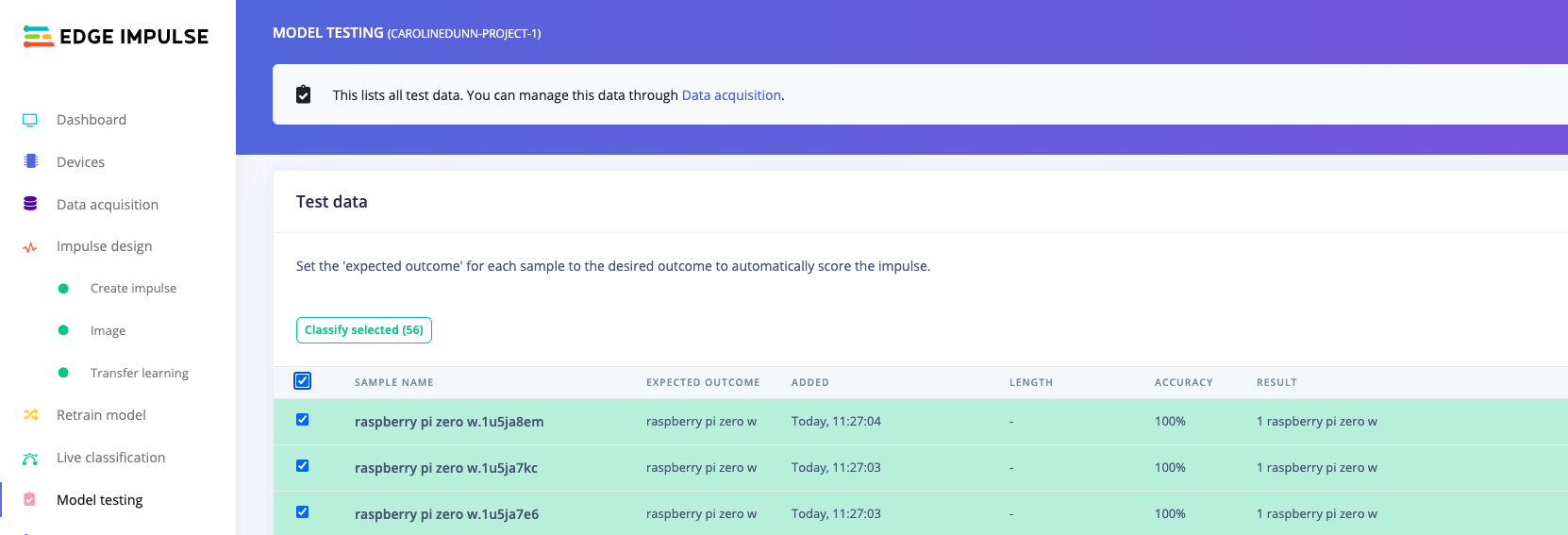

20. Select "Model testing" in the left column menu.

21. Click the top check box to select all and press "Classify selected" to test your data. The output of this action will be a percent accuracy of your model.

If the level of accuracy is low, we suggest going back to the "Data Acquisition" step and adding more images or removing a set of images.

Deployment

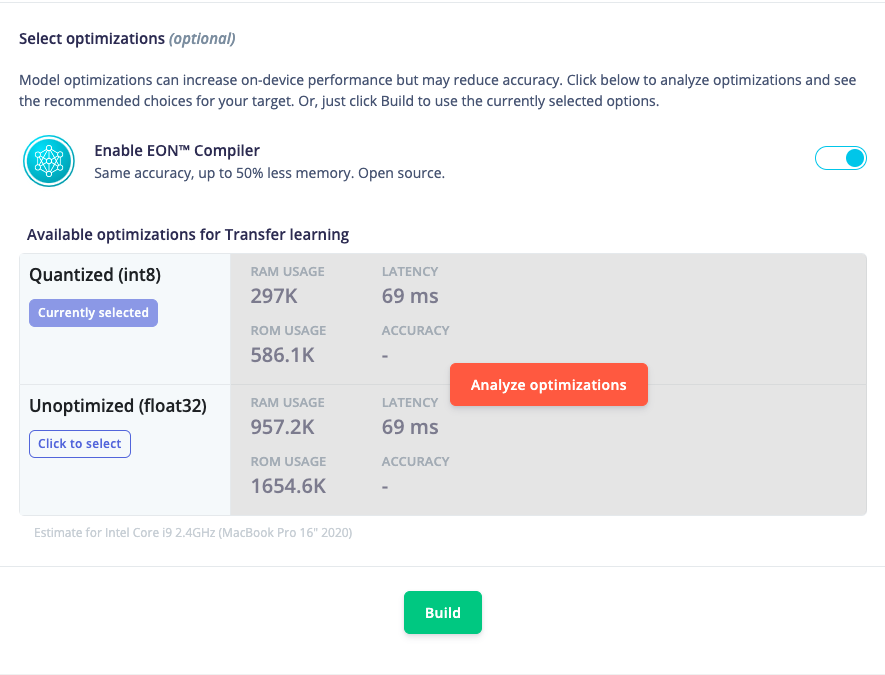

22. Select "Deployment" in the left menu column.

23. Select "WebAssembly" for your library.

24. Scroll down ("Quantized" should be selected by default) and click the "Build" button. This step may also take 3 minutes or more depending on your amount of data.

Setting Up BalenaCloud

Instead of the standard Raspberry Pi OS, we will flash BalenaCloudOS to our microSD card. BalenaCloudOS comes pre-built with an interface to the Edge Impulse API and eliminates the need to attach a monitor, mouse, and keyboard to our Raspberry Pi.

25. Create a free BalenaCloud account here. If you already have a BalenaCloud account, log in to BalenaCloud.

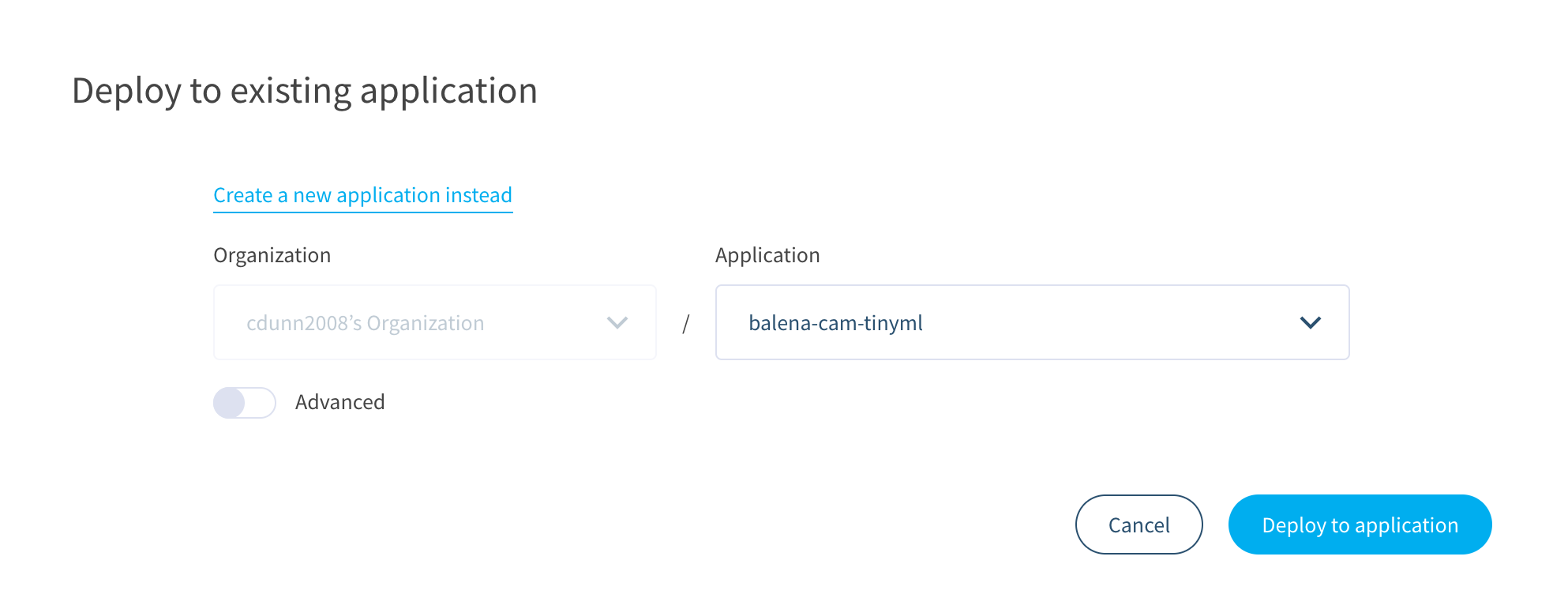

26. Deploy a balena-cam-tinyml application here. Note: You must already be logged in to your Balena account for this link to take you directly to creating a balena-cam-tinyml application.

27. Click “Deploy to Application.”

After creating your balena-cam-tinyml application, you’ll land on the "Devices" page. Do not create a device yet!

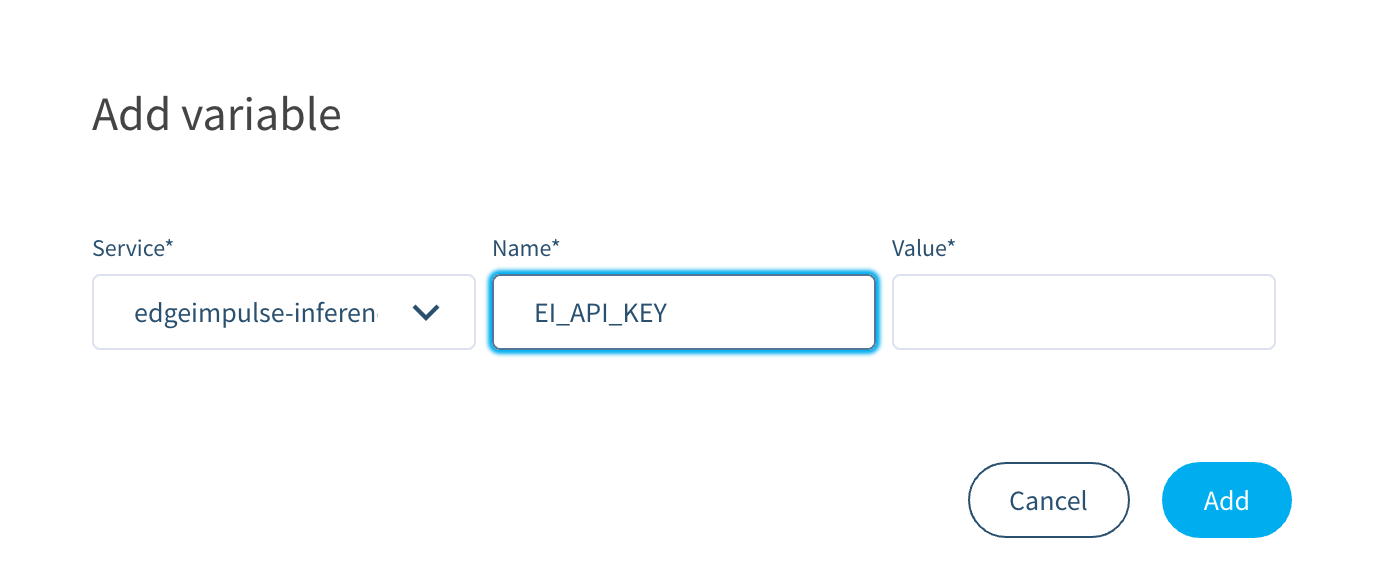

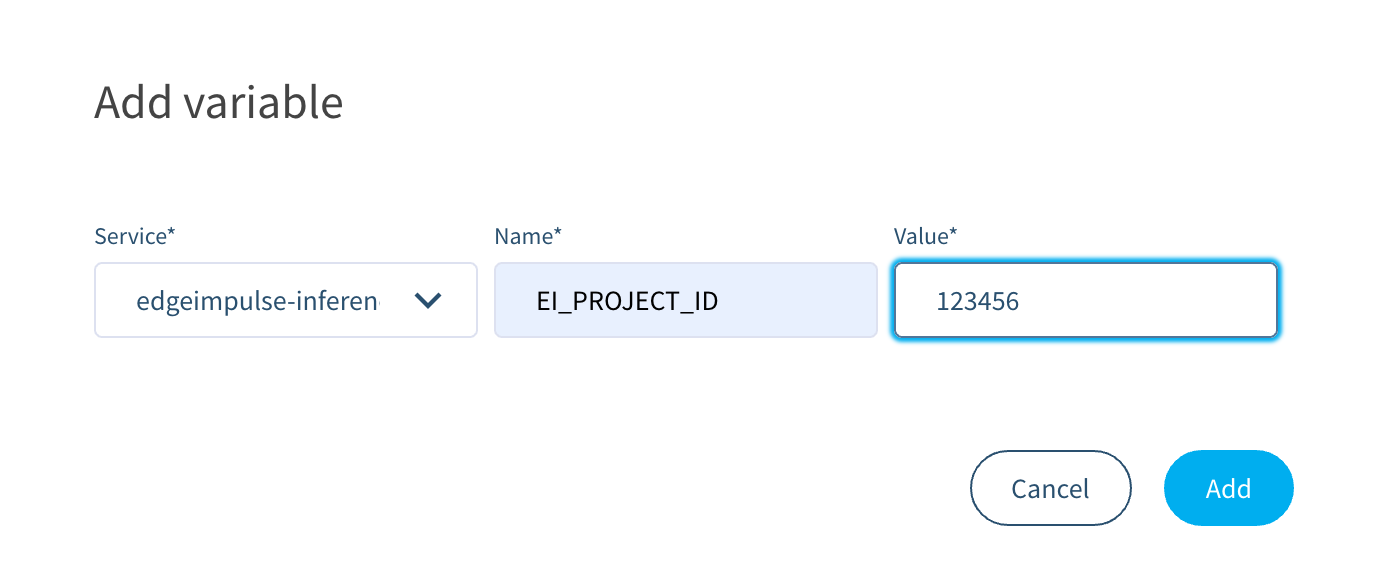

28. In Balena Cloud, select "Service Variables" and add the following 2 variables.

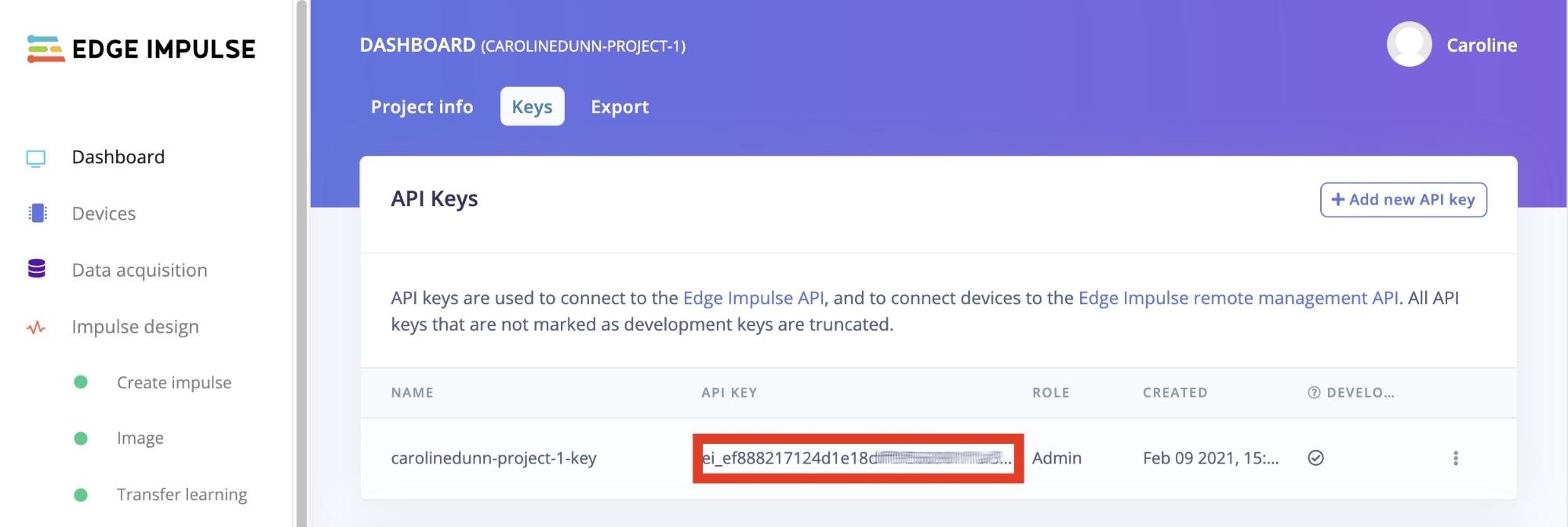

Variable 1:

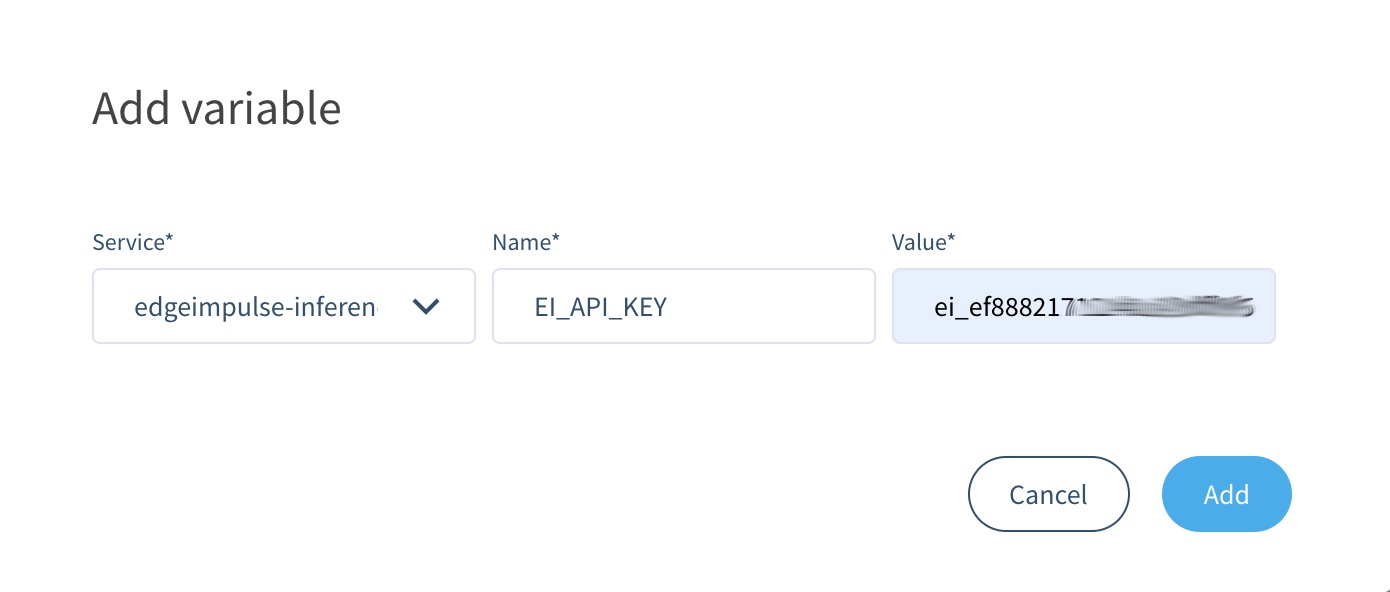

Service: edgeimpulse-inference

Name: EI_API_KEY

Value: [API key found from your Edge Impulse Dashboard].

To get your API key, go to your Edge Impulse Dashboard, select "Keys" and copy your API key.

Go back to Balena Cloud and paste your API key in the value field of your service variable.

Click “Add”.

Variable 2:

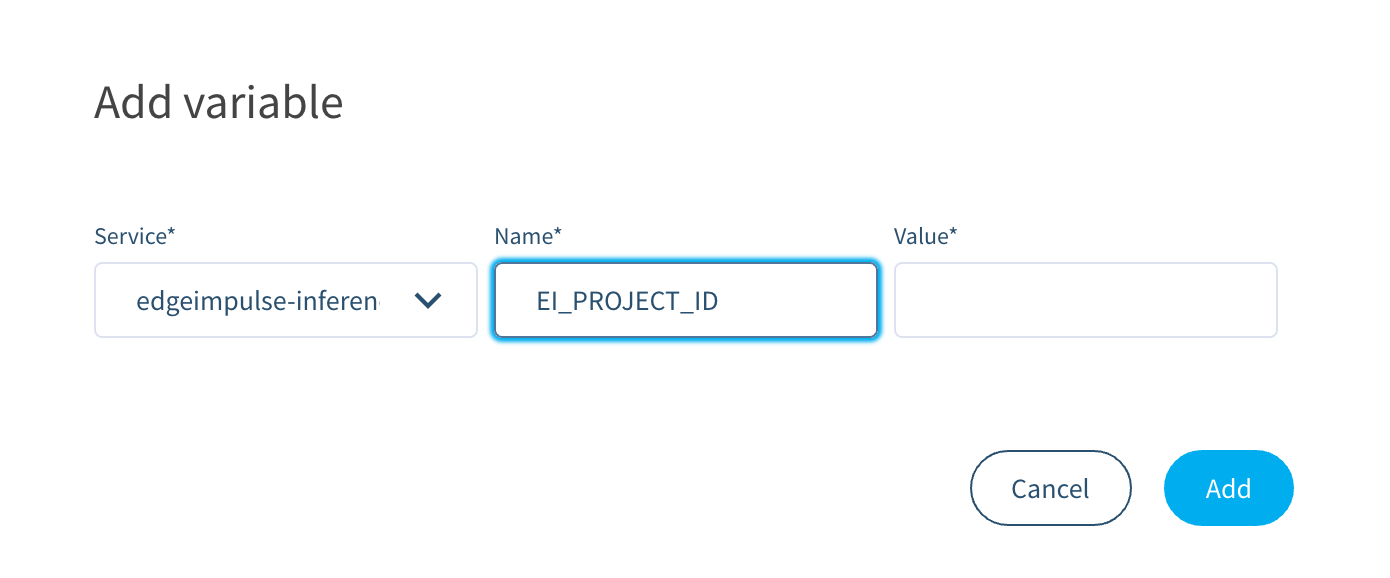

Service: edgeimpulse-inference

Name: EI_PROJECT_ID

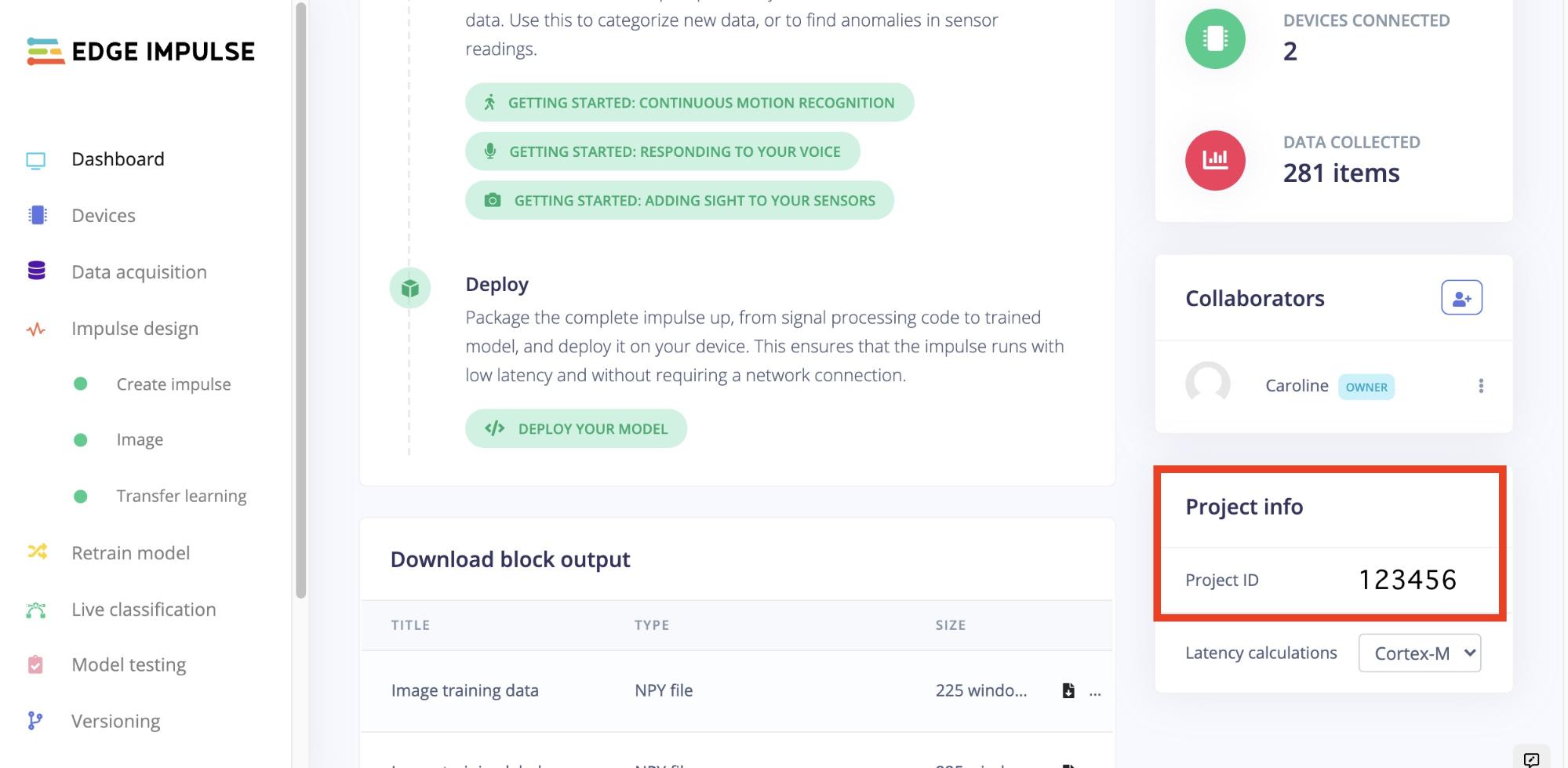

Value: [Project ID from your Edge Impulse Dashboard].

To get your Project ID, go to your Edge Impulse Dashboard, select "Project Info," scroll down, and copy your "Project ID."

Go back to Balena Cloud and paste your Project ID in the value field.

Click “Add”.
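balenaCloud exposes service variables to the named service's container as environment variables. The sketch below shows one way a service could read and sanity-check the two values. The `load_edge_impulse_config` helper and the placeholder values are hypothetical and are not taken from the balena-cam-tinyml source.

```python
import os


def load_edge_impulse_config(env=None):
    """Return (api_key, project_id) read from environment-style variables.

    Hypothetical helper for illustration; raises if either value is missing.
    """
    env = os.environ if env is None else env
    required = ("EI_API_KEY", "EI_PROJECT_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing service variable(s): " + ", ".join(missing))
    # Strip stray whitespace that can sneak in when pasting values.
    return env["EI_API_KEY"].strip(), env["EI_PROJECT_ID"].strip()


# Example with placeholder values (not real credentials).
api_key, project_id = load_edge_impulse_config(
    {"EI_API_KEY": "ei_placeholder_key ", "EI_PROJECT_ID": "12345"}
)
print(project_id)
```

If the object identification page later fails to load a model, a mistyped variable name or a pasted value with extra characters is a sensible first thing to check.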

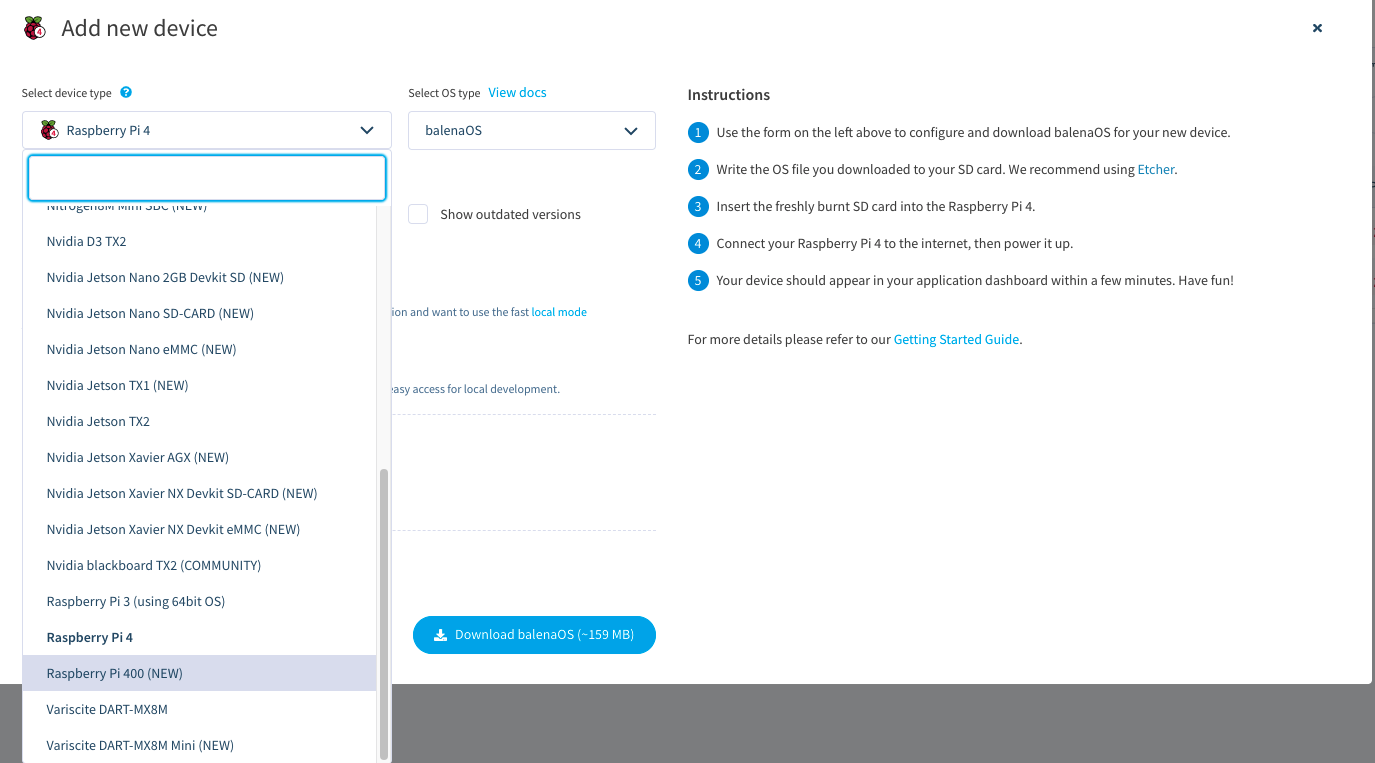

27. Select "Devices" from the left column menu in your BalenaCloud, and click "Add device."

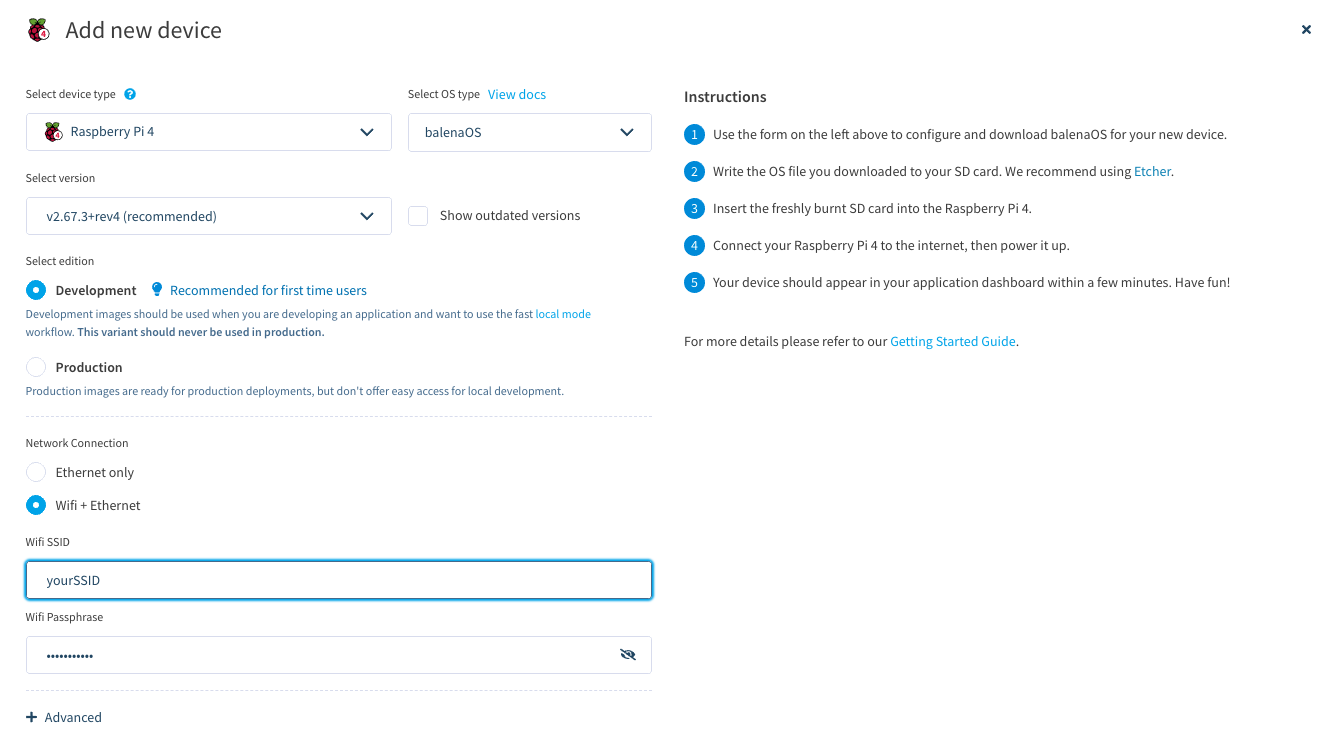

28. Select your Device type, (Raspberry Pi 4, Raspberry Pi 400, or Raspberry Pi 3).

29. Select the radio button for Development.

30. If using Wifi, select the radio button for "Wifi + Ethernet" and enter your Wifi credentials.

31. Click "Download balenaOS" and a zip file will start downloading.

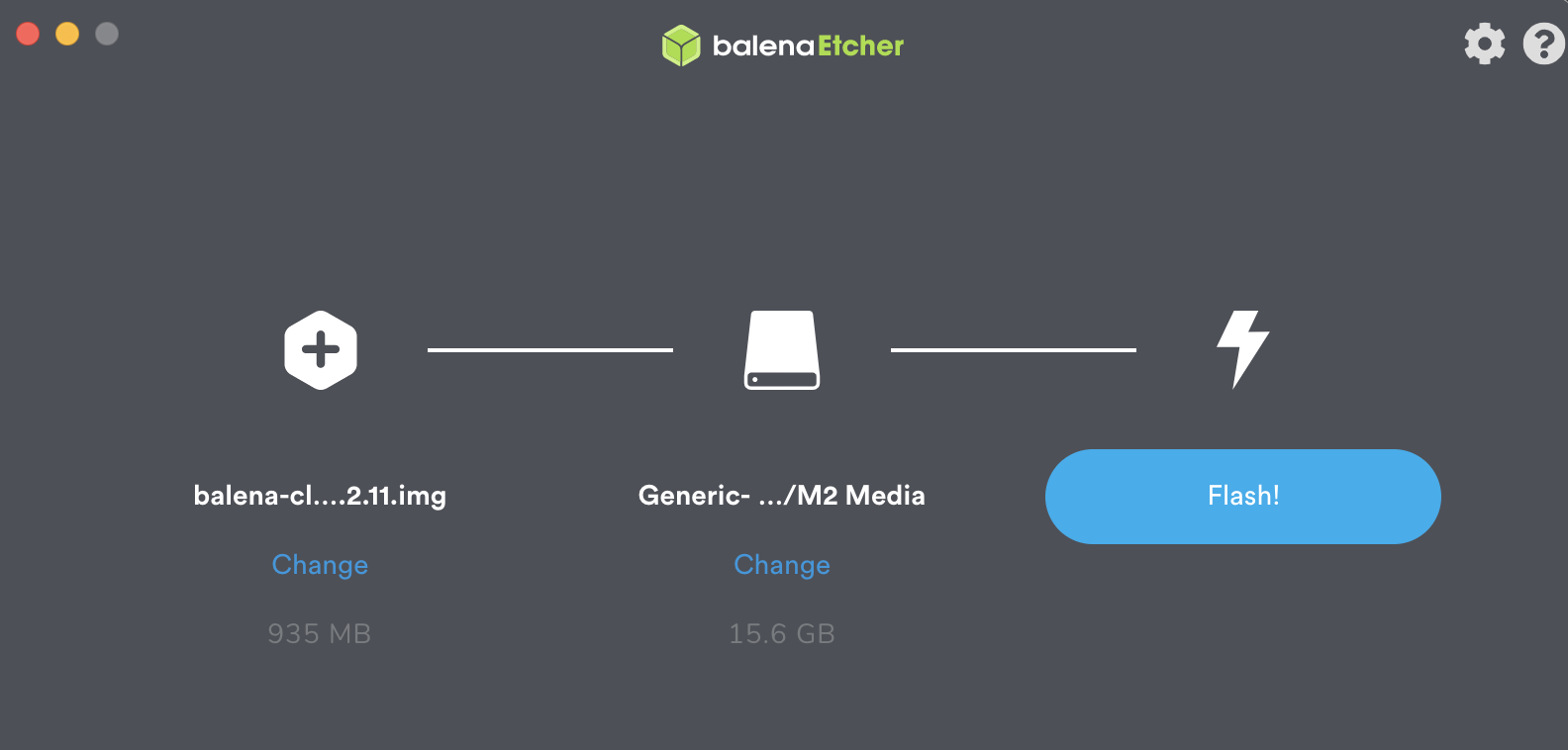

32. Download, install, and open the Balena Etcher app to your desktop (if you don’t already have it installed). Raspberry Pi Imager also works, but Balena Etcher is preferred since we are flashing the BalenaCloudOS.

33. Insert your microSD card into your computer.

34. Select your recently-downloaded BalenaCloudOS image and flash it to your microSD card. Please note that all data will be erased from your microSD card.

Connect the Hardware and Update BalenaCloud

35. Remove the microSD card from your computer and insert into your Raspberry Pi.

36. Attach your webcam or Pi Camera to your Raspberry Pi.

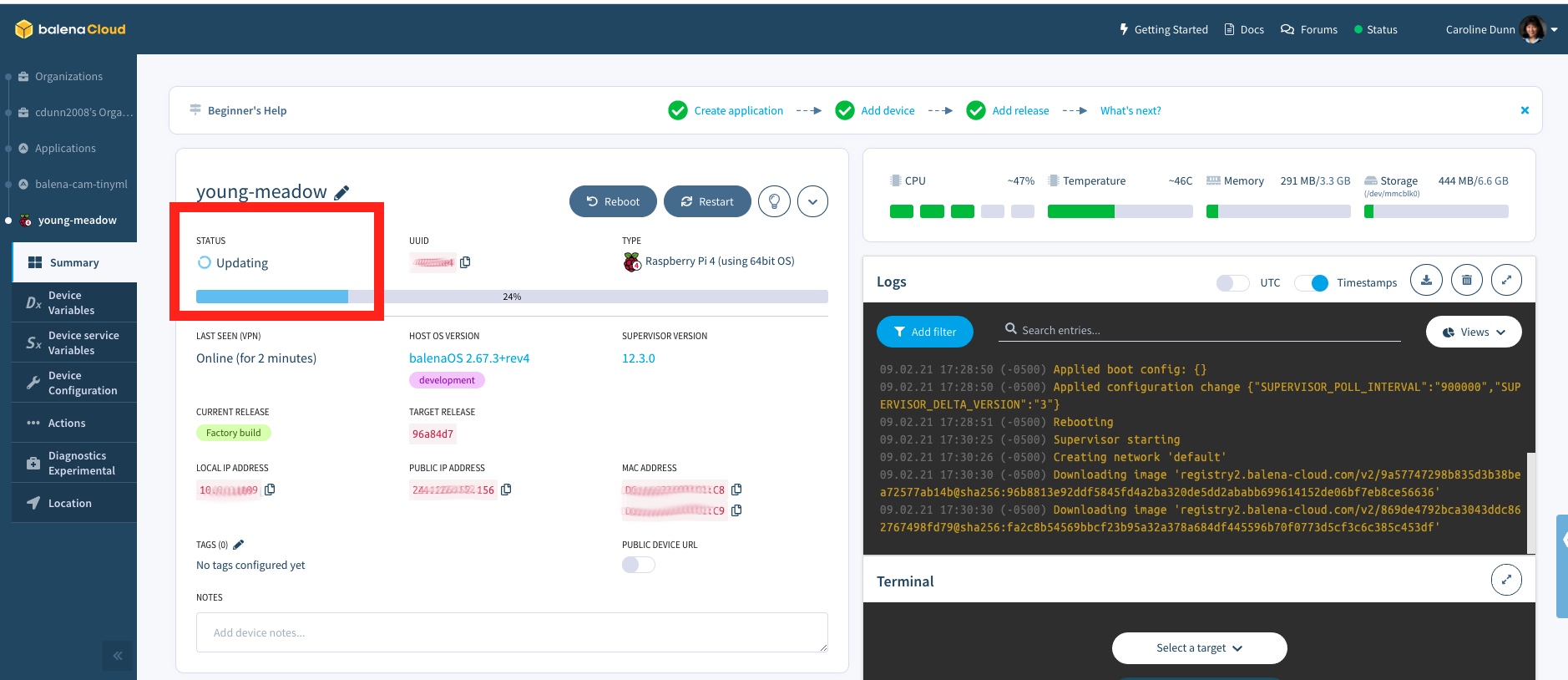

37. Power up your Pi. Allow 15 to 30 minutes for your Pi to boot up and BalenaOS to update. Only the initial boot requires the long update. You can check the status of your Pi Balena Cloud OS in the BalenaCloud dashboard.

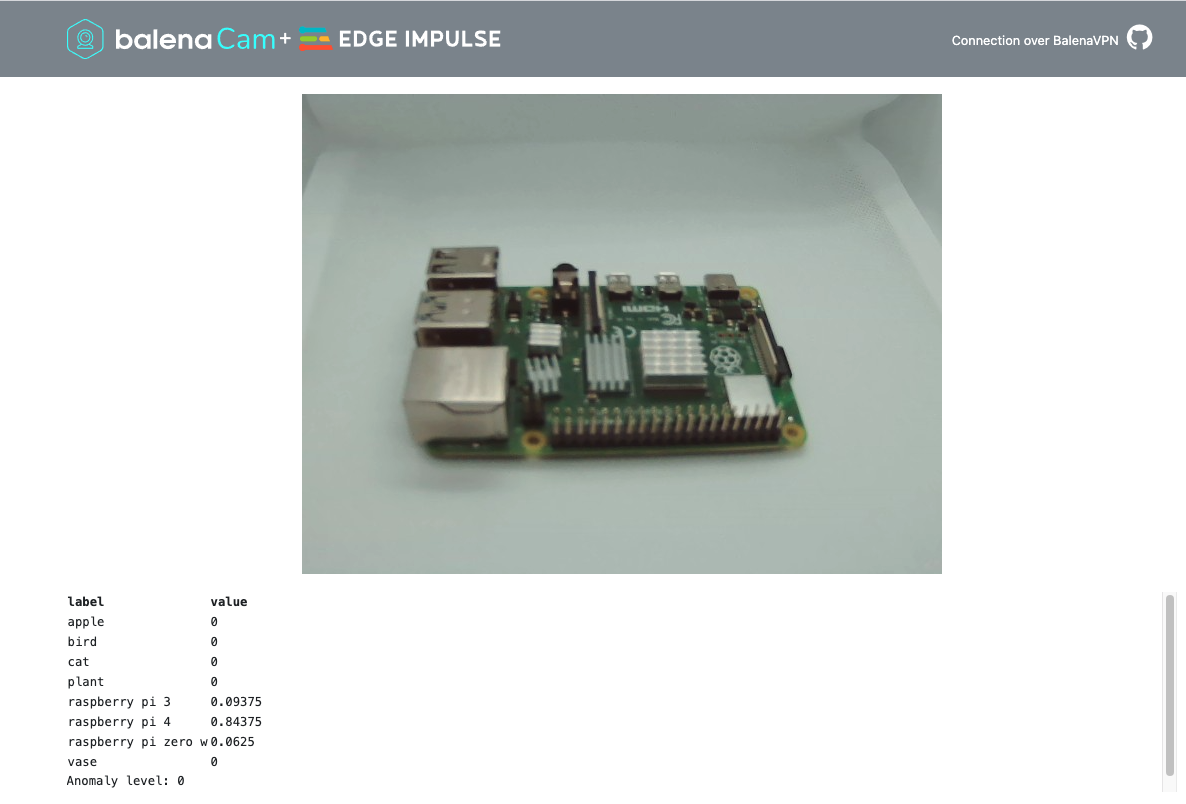

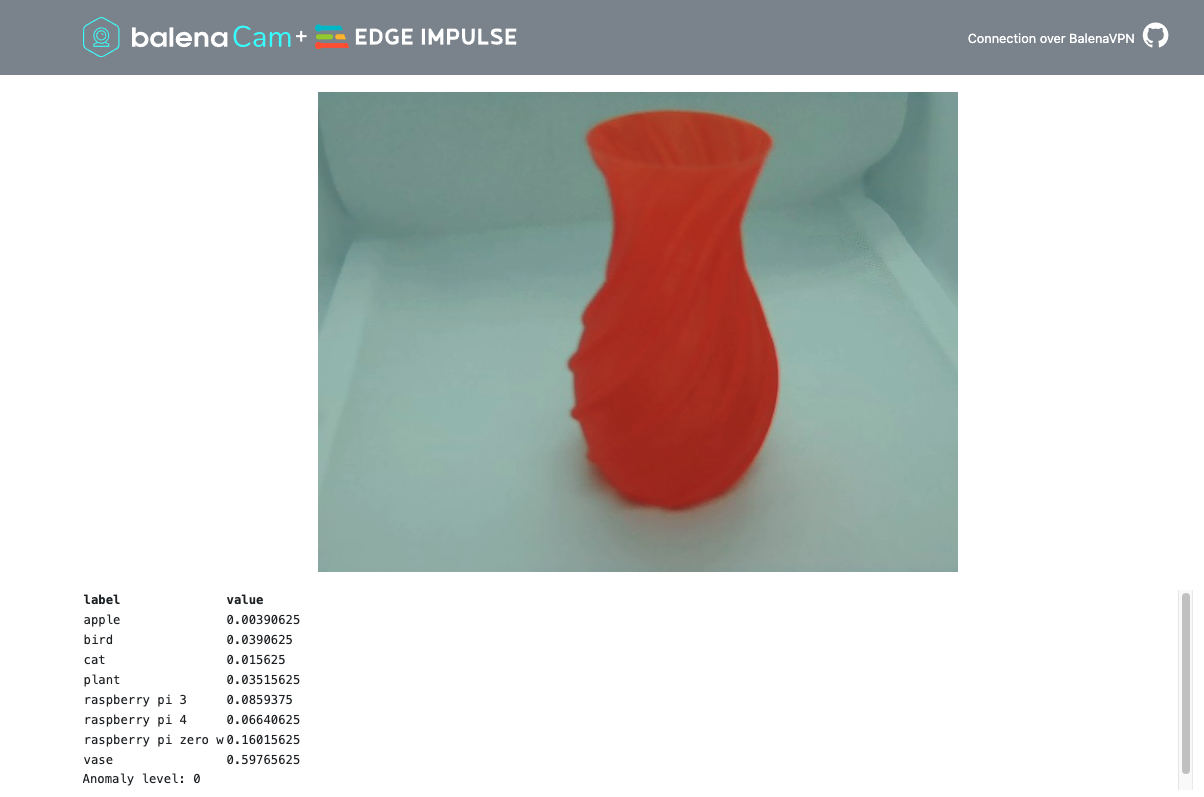

Object Identification

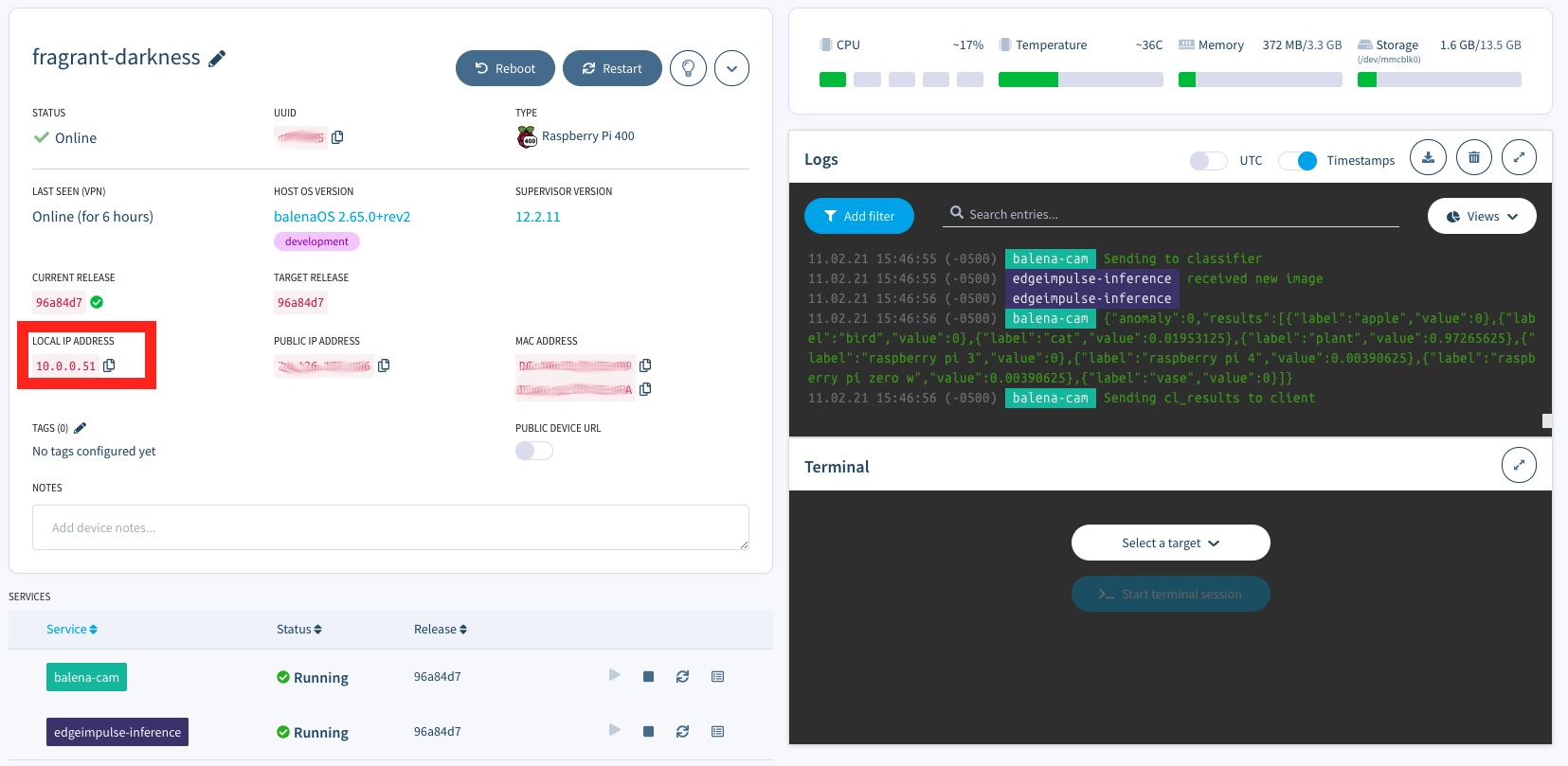

38. Identify your internal IP address from your BalenaCloud dashboard device.

39. Enter this IP address in a new browser Tab or Window. Works great in Safari, Chrome, and Firefox.

40. Place an object in front of the camera.

You should start seeing a probability rating for your object in your browser window (with your internal IP address).

41. Try various objects that you entered into the model and perhaps even objects you didn’t use to train the model.
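The browser page shows a probability for each label. Here is a small sketch of how such scores might be turned into a single answer, assuming the classifier returns a mapping of label to probability. The 0.6 threshold, the label names, the scores, and the `best_label` helper are assumptions for illustration, not part of the balena application.

```python
def best_label(probabilities, threshold=0.6):
    """Return the most likely label, or None if no score reaches threshold."""
    if not probabilities:
        return None
    label, score = max(probabilities.items(), key=lambda item: item[1])
    return label if score >= threshold else None


# Hypothetical scores for one camera frame showing a trained object.
frame_scores = {"Pi 3": 0.08, "Pi 4": 0.87, "Pi Zero": 0.05}
print(best_label(frame_scores))  # Pi 4

# Hypothetical scores for an object the model was never trained on.
print(best_label({"Pi 3": 0.40, "Pi Zero": 0.35, "Pi 4": 0.25}))  # None
```

A threshold like this is one way to handle objects that were not in the training set: when no label scores highly, it is more honest to report no match than to pick the least-bad guess.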

Refining the Model

- If you find that the identification is not very accurate, first check your model’s accuracy for that item in the Edge Impulse Model Testing tab.

- You can add more photos by following the Data Acquisition steps and then selecting "Retrain model" in Edge Impulse.

- You can also add more items by labeling and uploading in Data Acquisition and retraining the model.

- After each retraining of the model, check for accuracy and then redeploy by running the "WebAssembly" build within Deployment.

Caroline Dunn is a freelance writer for Tom's Hardware. Her expertise lies in covering Raspberry Pi projects, creating video tutorials, writing guides, and exploring other entertaining tech DIY initiatives.