AMD Advancing AI Event Live Blog: Instinct MI300 Launch, Ryzen 8000 "Hawk Point" Expected

AMD takes the Nvidia bull by the horns.

Also check out our extended deep-dive coverage:

AMD unveils Instinct MI300X GPU and MI300A APU, claims up to 1.6X lead over Nvidia’s competing GPUs

The refresh that wasn’t - AMD announces ‘Hawk Point’ Ryzen 8040 Series, teases Strix Point

AMD CEO Lisa Su will take to the stage here in San Jose, California, to share the company's latest progress on enabling AI from the cloud to the edge and PCs. The show begins today, December 6, at 10am PT, and we're here to provide live event coverage.

AMD says it will reveal its Instinct MI300 accelerators at the event. All signs point to these coming as both a GPU and a blended CPU+GPU product (APU), each designed to challenge Nvidia's dominance in the AI market.

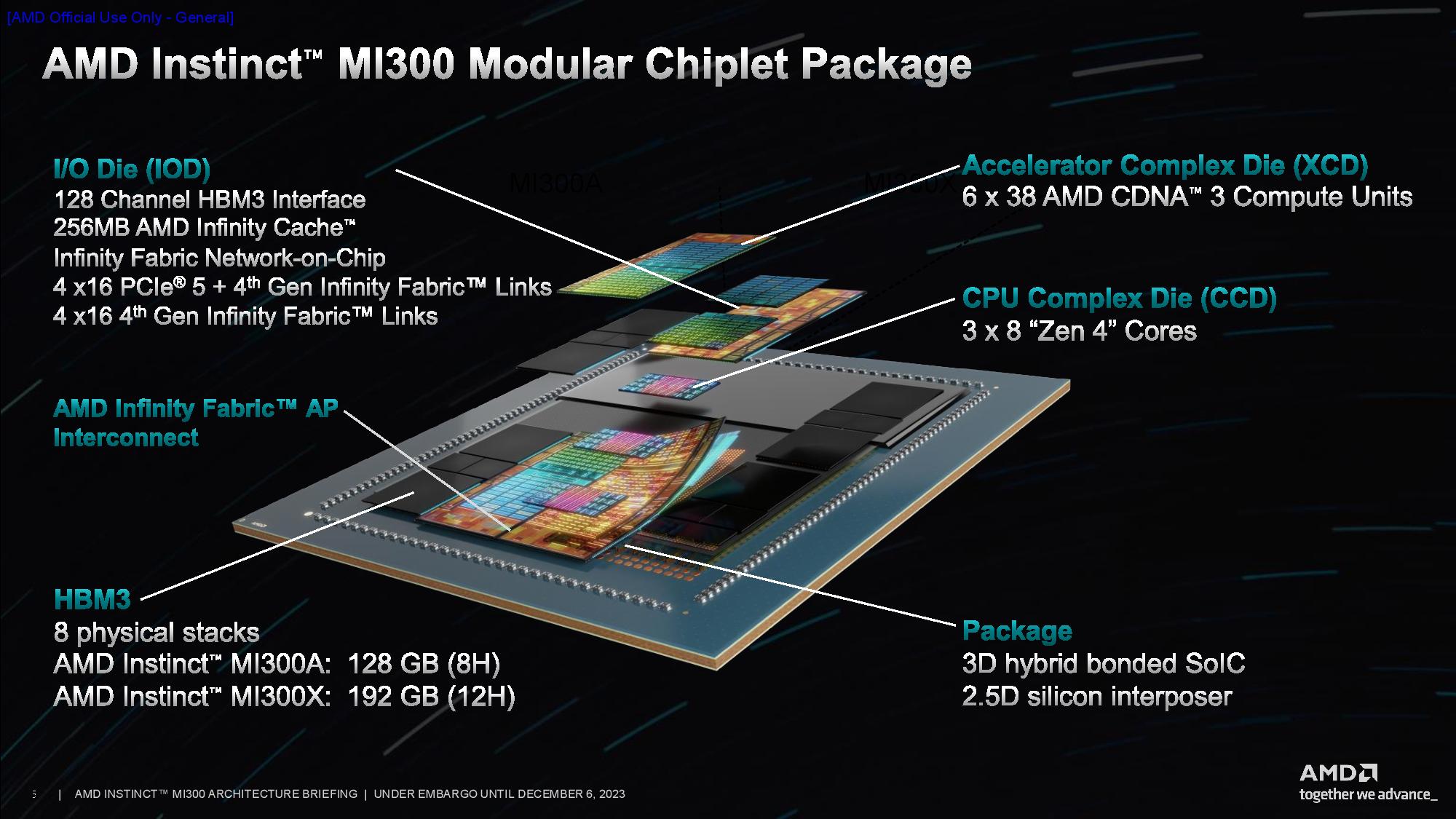

Make no mistake; the Instinct MI300 is a game-changing design - the data center APU blends a total of 13 chiplets, many of them 3D-stacked, to create a chip with twenty-four Zen 4 CPU cores fused with a CDNA 3 graphics engine and eight stacks of HBM3. Overall, the chip weighs in with 146 billion transistors, making it the largest chip AMD has pressed into production.

If you're more interested in the latest PC technology, AMD is also expected to unveil its "Hawk Point" Ryzen 8000 mobile series of chips. Rumors point to these chips sharing many of the same characteristics as their predecessors, with targeted enhancements that offer more performance. These are the follow-on to AMD's Ryzen 7040 series, the first PC chips to launch with a dedicated NPU for AI processing, so we think there's a chance these enhanced models will debut at the show.

Pull up a seat; the show starts shortly.

LIVE: Latest Updates

AMD has begun displaying its cautionary statements on the screen, so the show is about to start.

AMD CEO Lisa Su has come out on the stage. She opened the presentation by reminiscing about the launch of ChatGPT just one year ago, and the explosive impact it has had on the world.

Generative AI will require significant investments to meet the needs for training and inference workloads. One year ago, AMD predicted a $150 billion TAM for AI workloads by 2027. Now AMD has revised that estimate up to $400 billion in 2027.

AMD is currently focusing on tearing down the barriers to AI adoption and cooperating with its partners to develop new solutions.

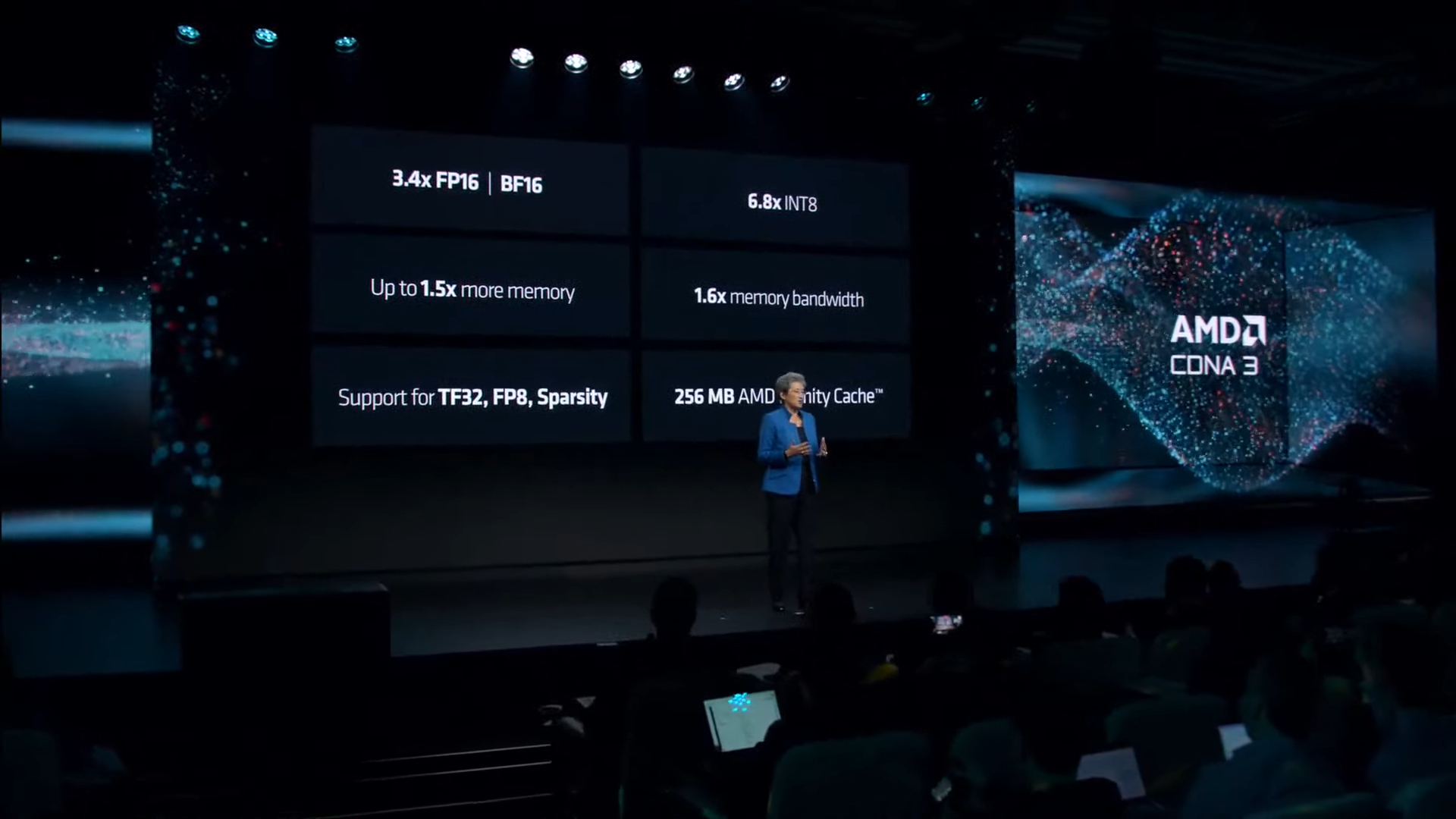

Lisa Su said that the availability of GPU hardware is the biggest barrier, and now the company is helping address that with the launch of its Instinct MI300 accelerators. The new CDNA 3 architecture delivers huge performance gains in multiple facets.

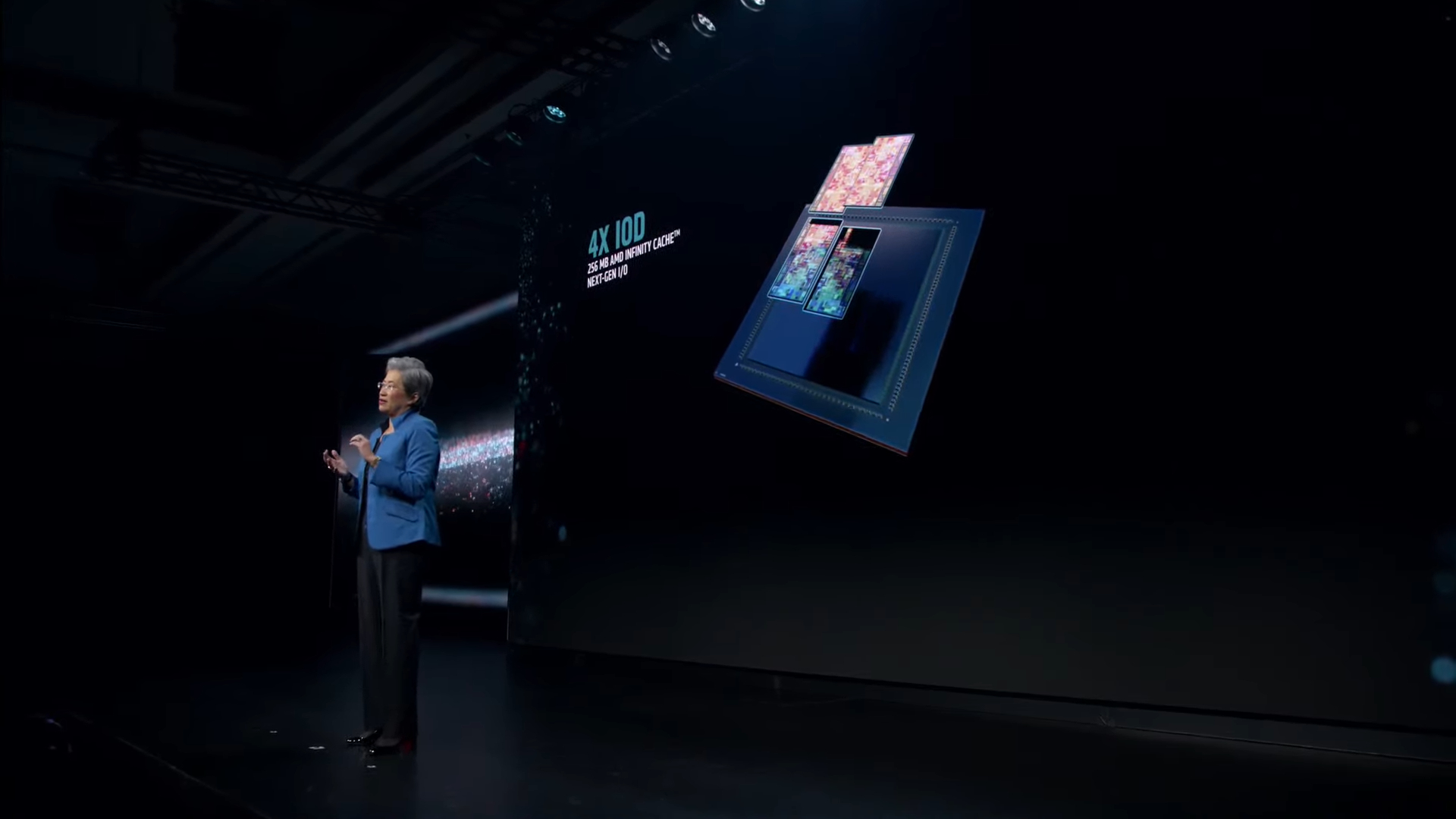

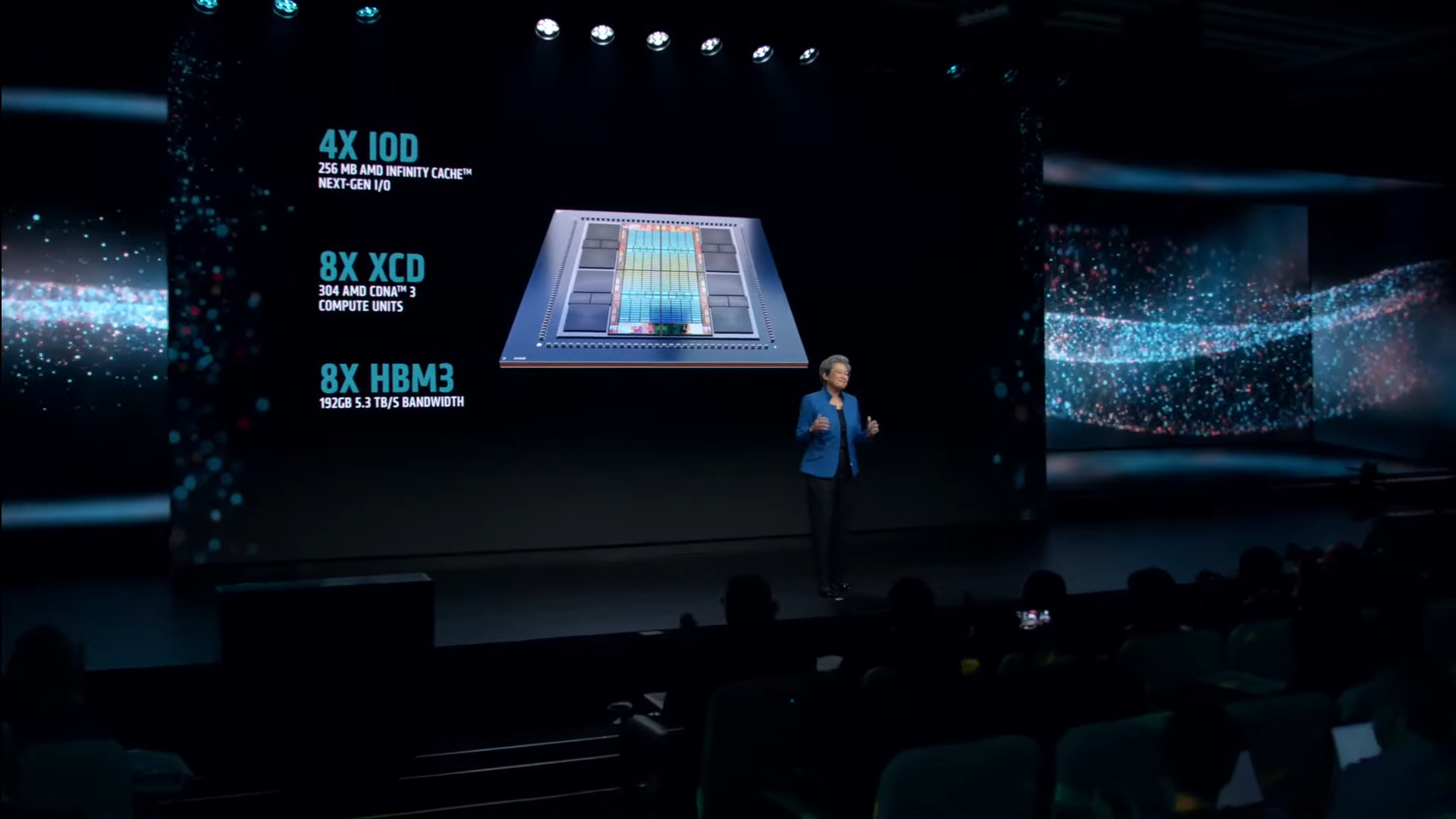

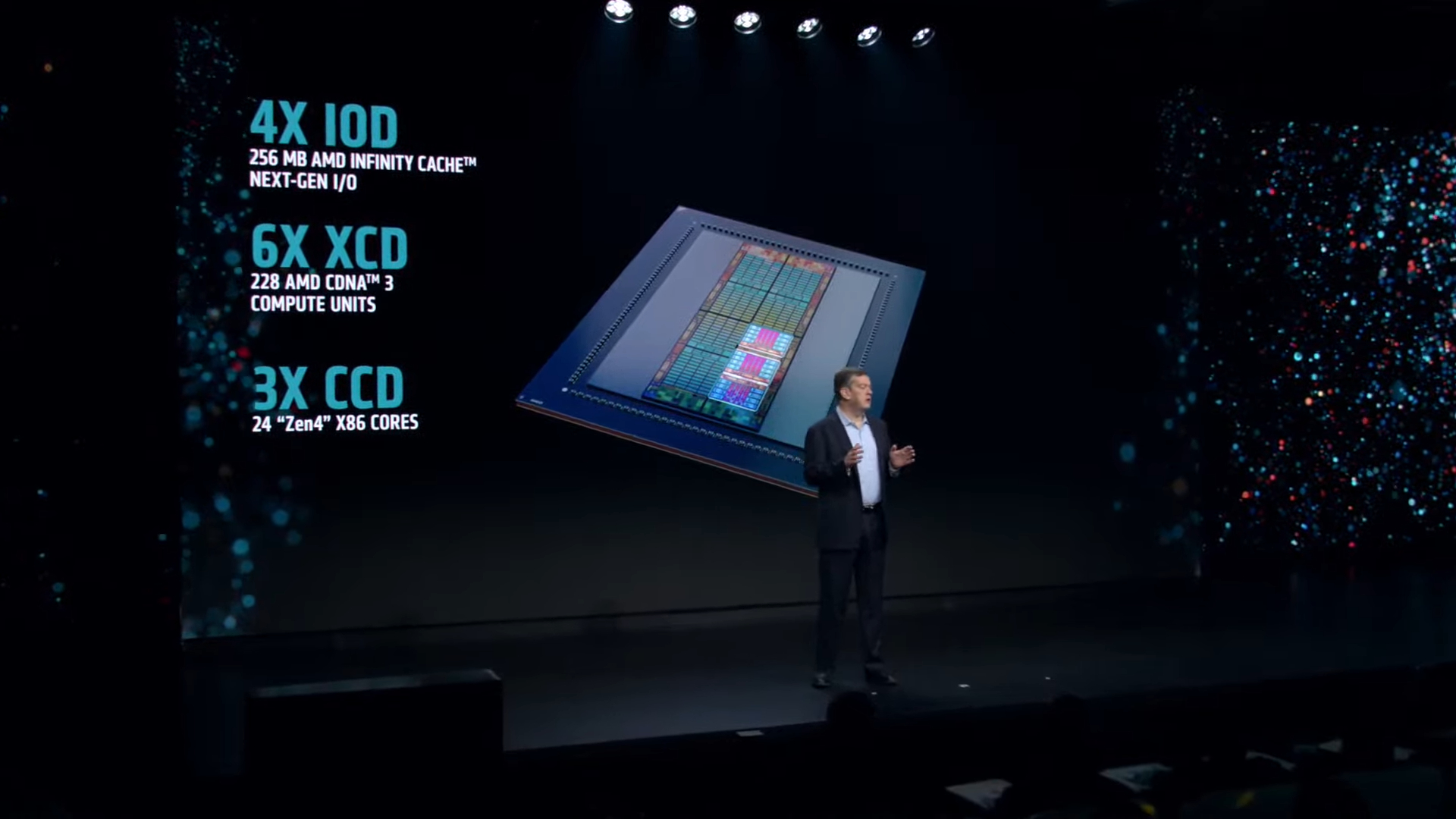

The MI300 has 150 billion transistors, 128 channels of HBM3, fourth-gen Infinity Fabric, and eight CDNA 3 GPU chiplets.

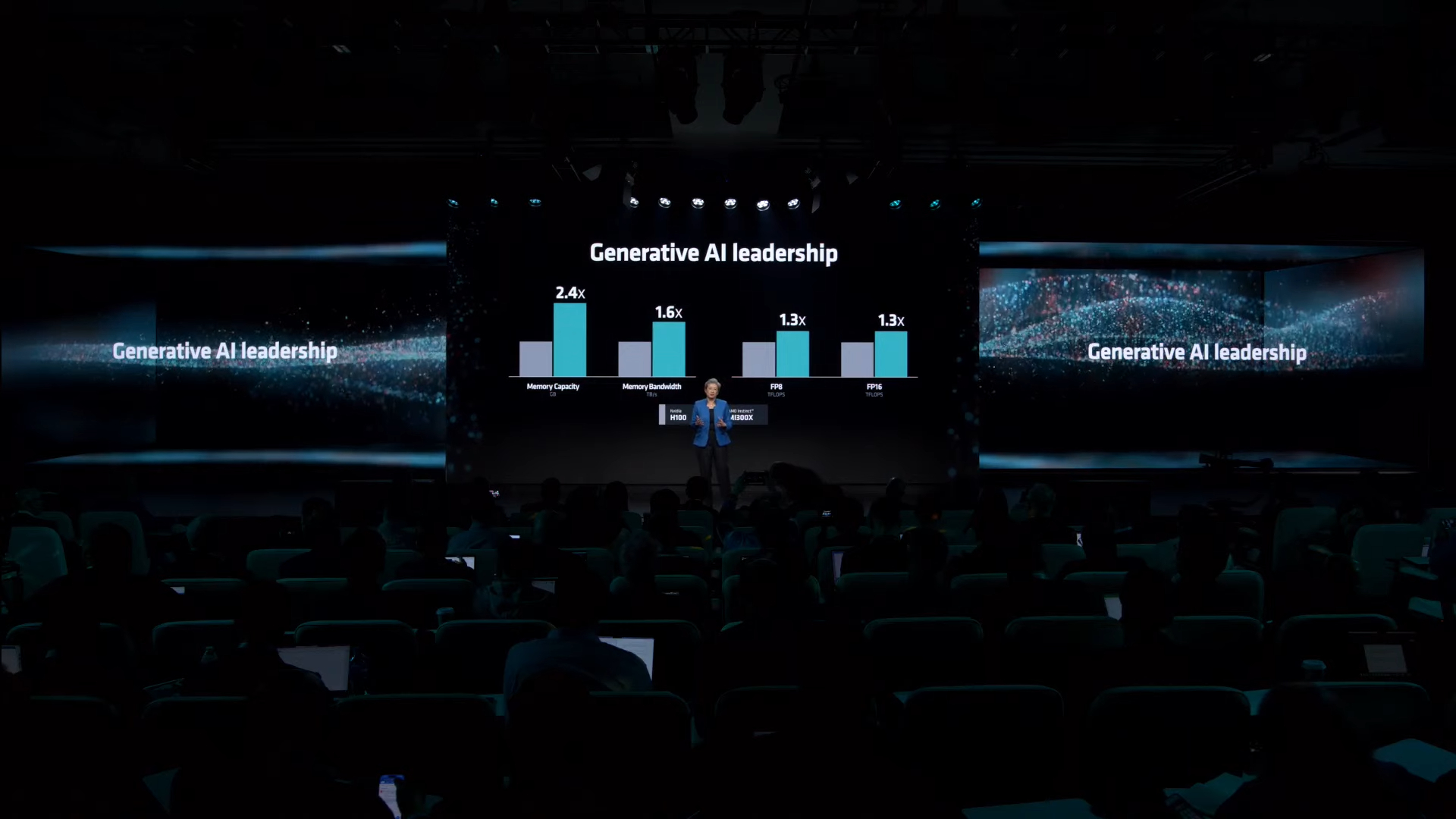

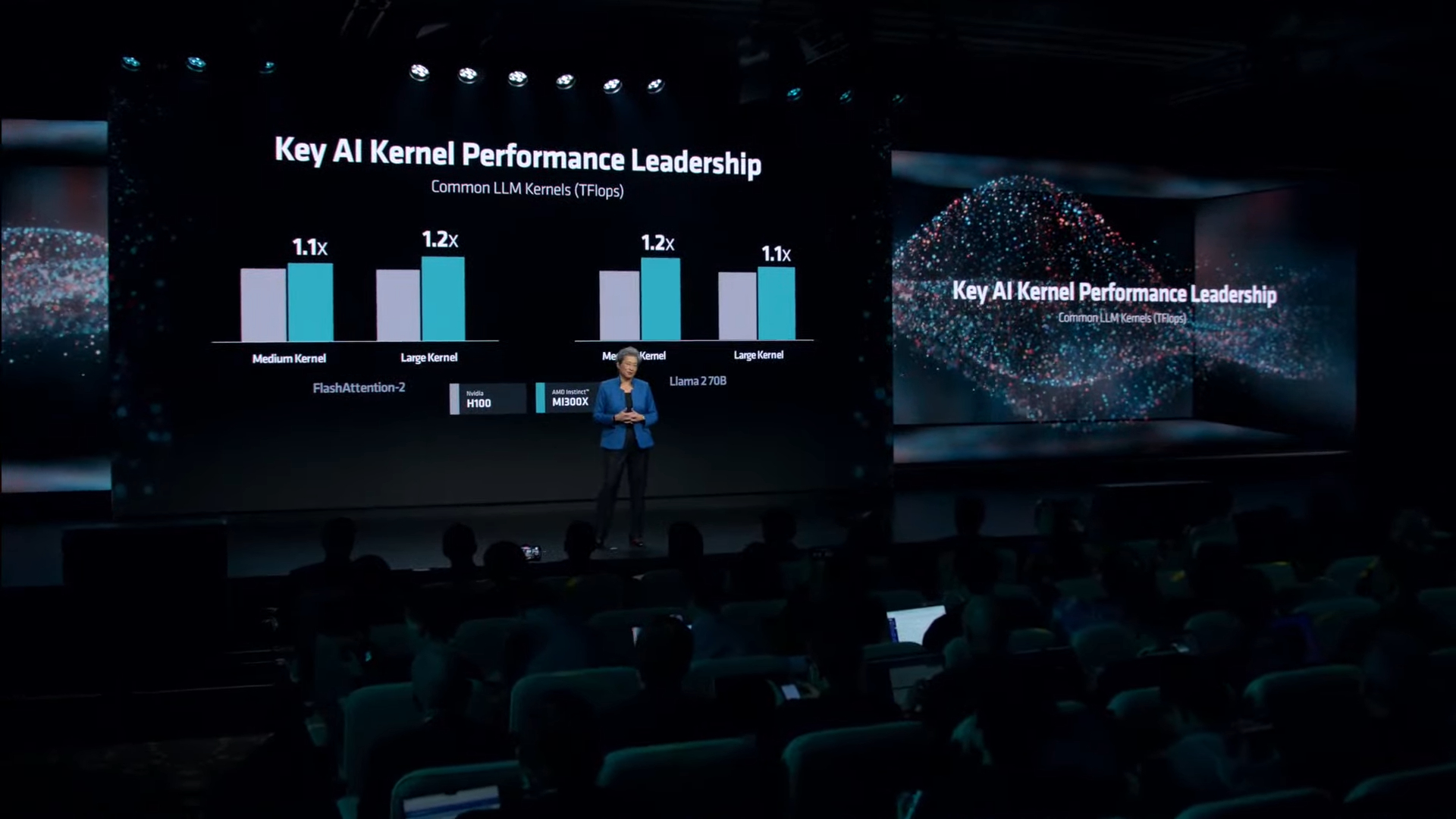

AMD claims up to 1.3X more performance than Nvidia's H100 GPUs in certain workloads. The slide above outlines the claimed performance advantages.

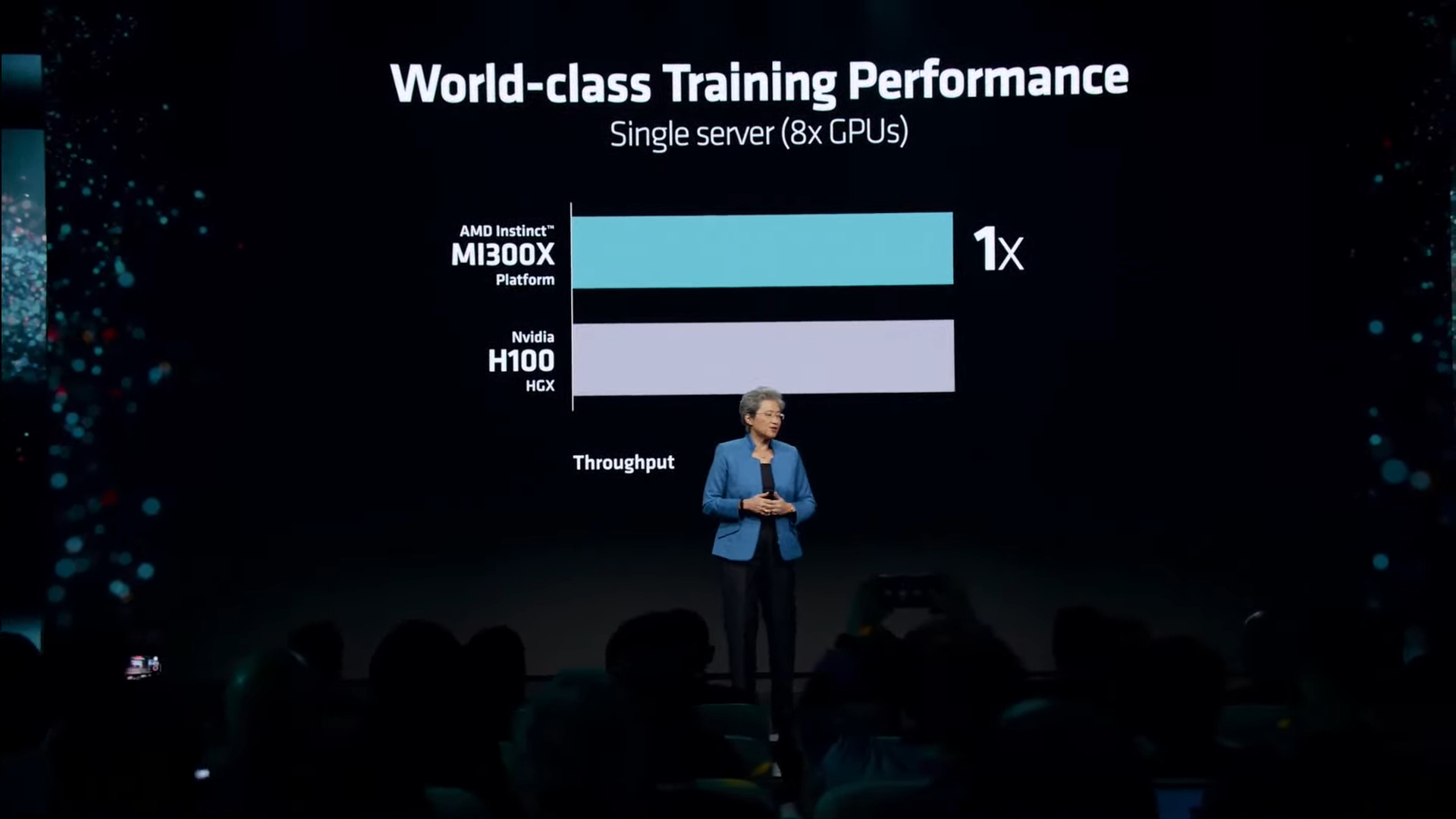

Scalability is incredibly important -- performance needs to increase linearly as more GPUs are employed. Here AMD shows that its eight-GPU platform matches Nvidia's eight-GPU H100 HGX system.

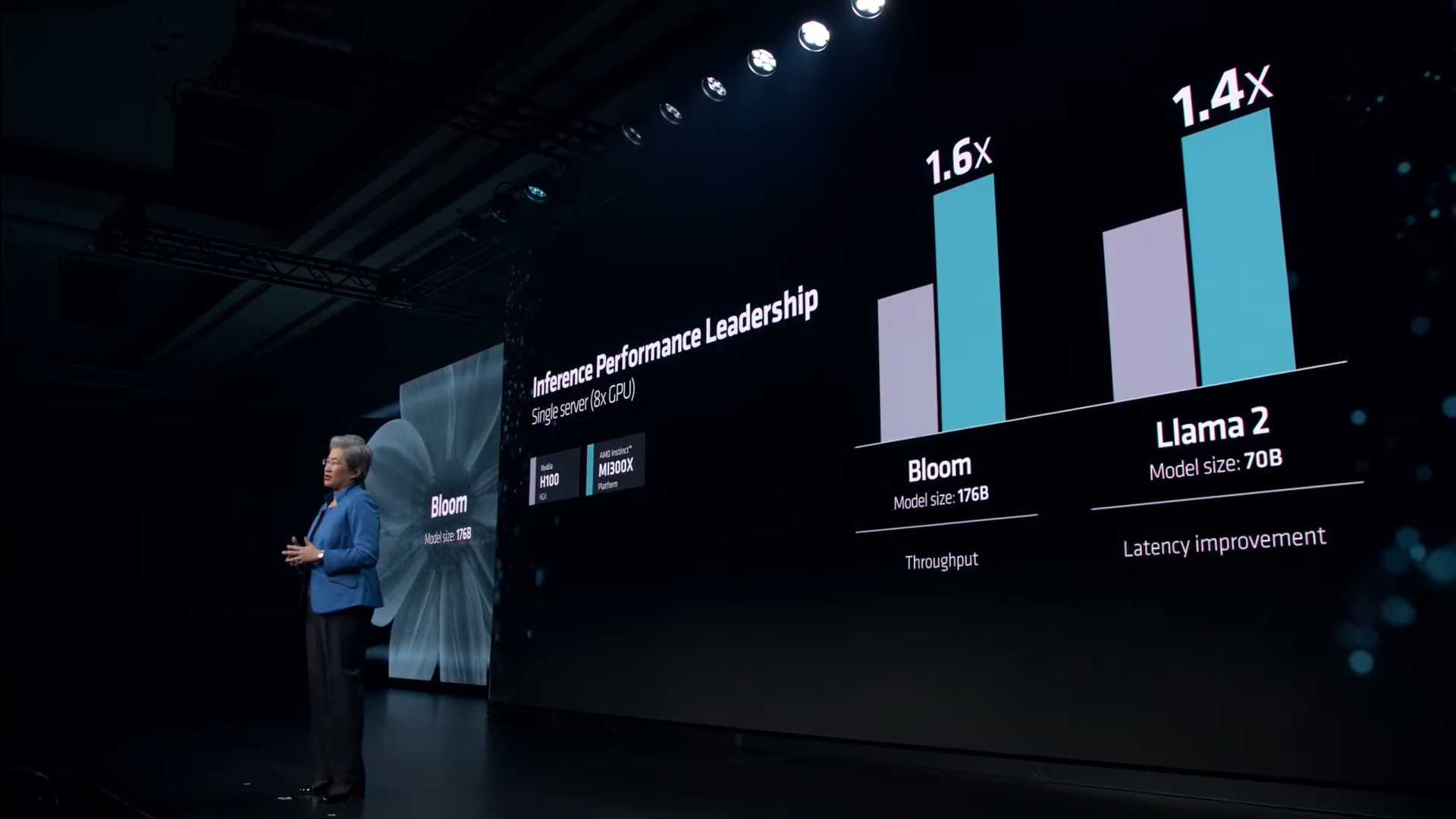

The MI300 delivers performance parity in training with Nvidia, but exhibits the strongest advantages in inference. AMD highlights a 1.6X advantage in inferencing.

Microsoft CTO Kevin Scott has come to the stage to talk with Lisa Su about the challenges of building out AI infrastructure.

While they discuss the details, here are some details about MI300.

Microsoft will have MI300X cloud instances available in preview today.

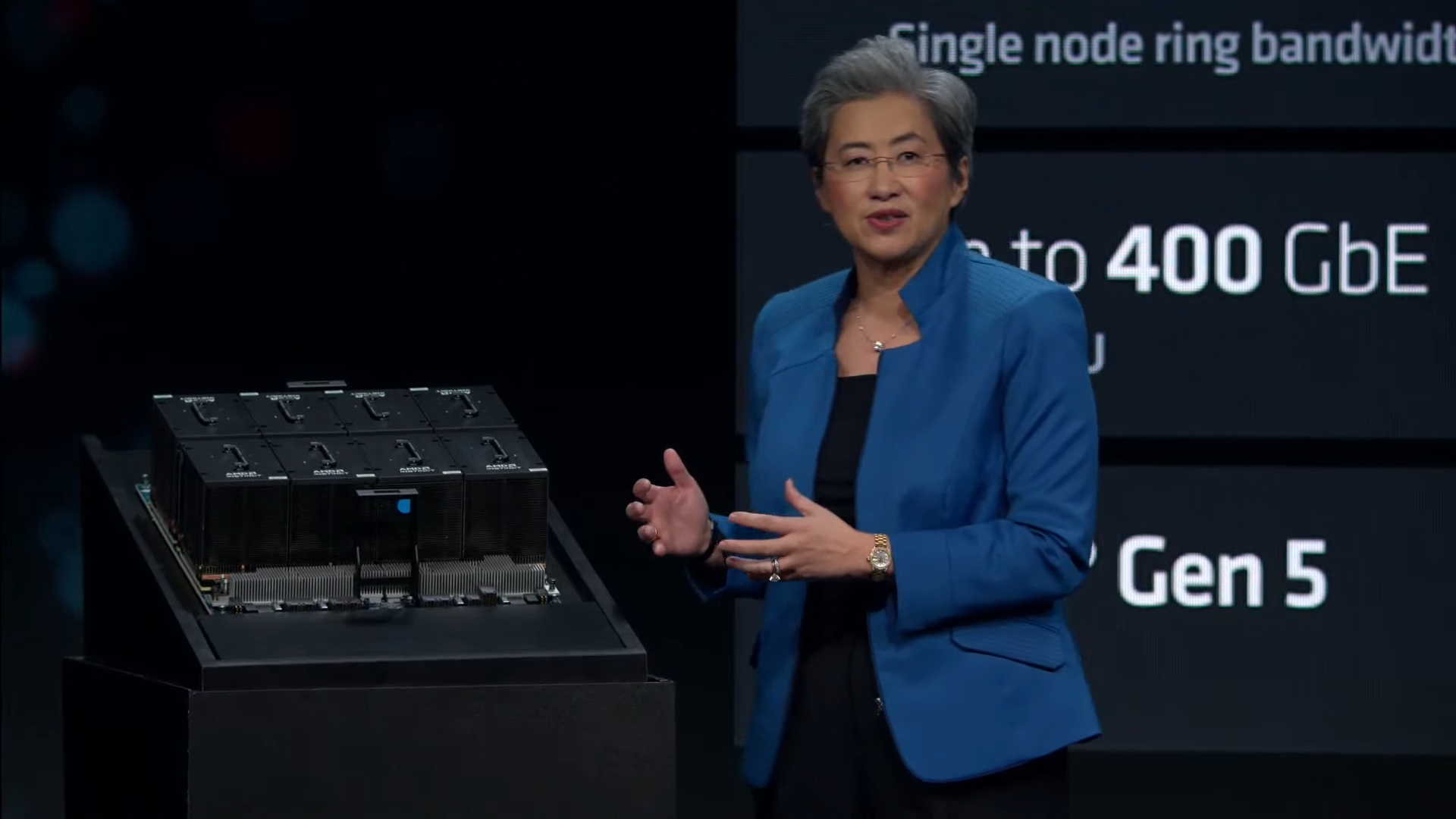

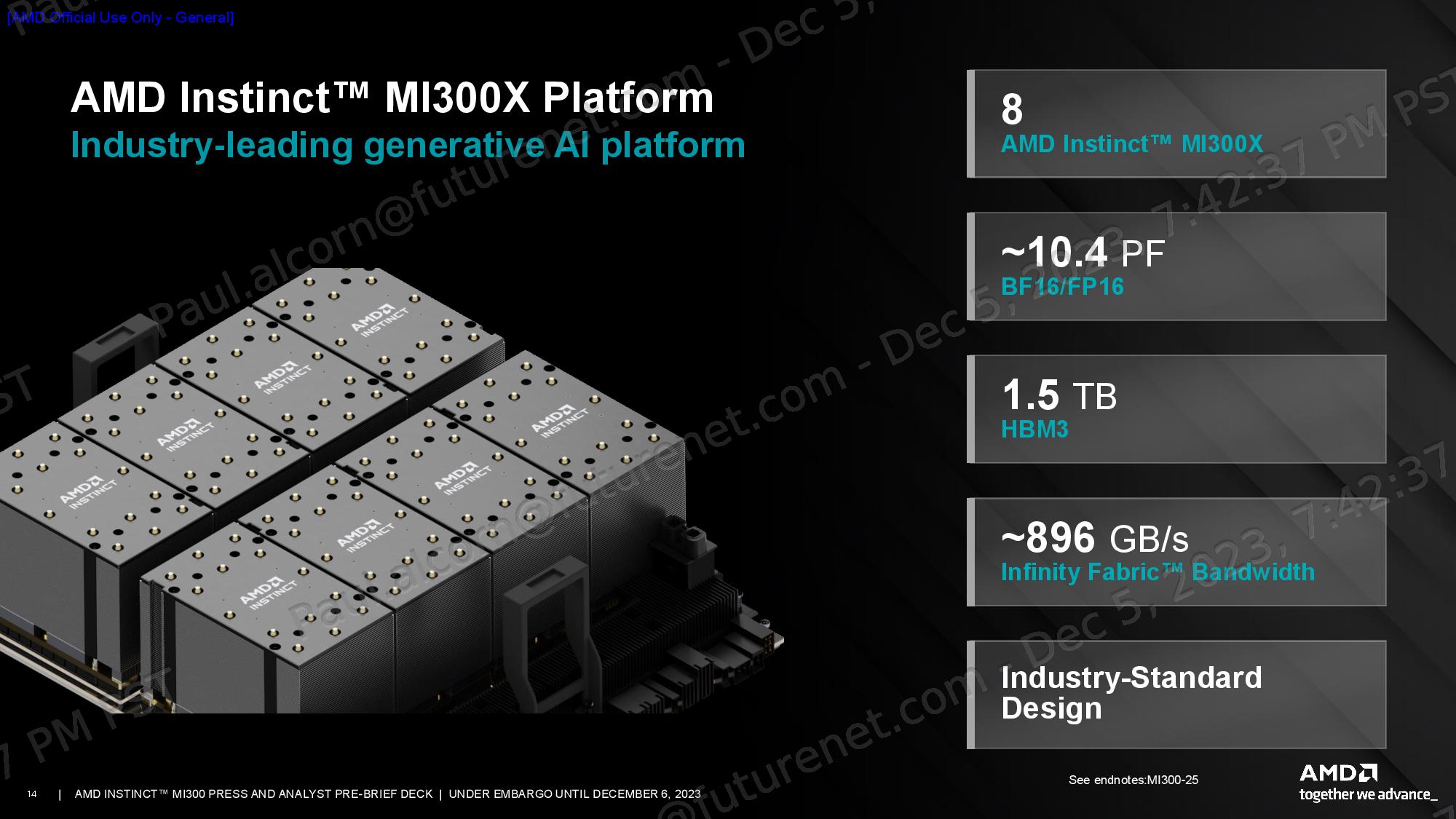

Lisa Su displayed the AMD Instinct MI300X platform.

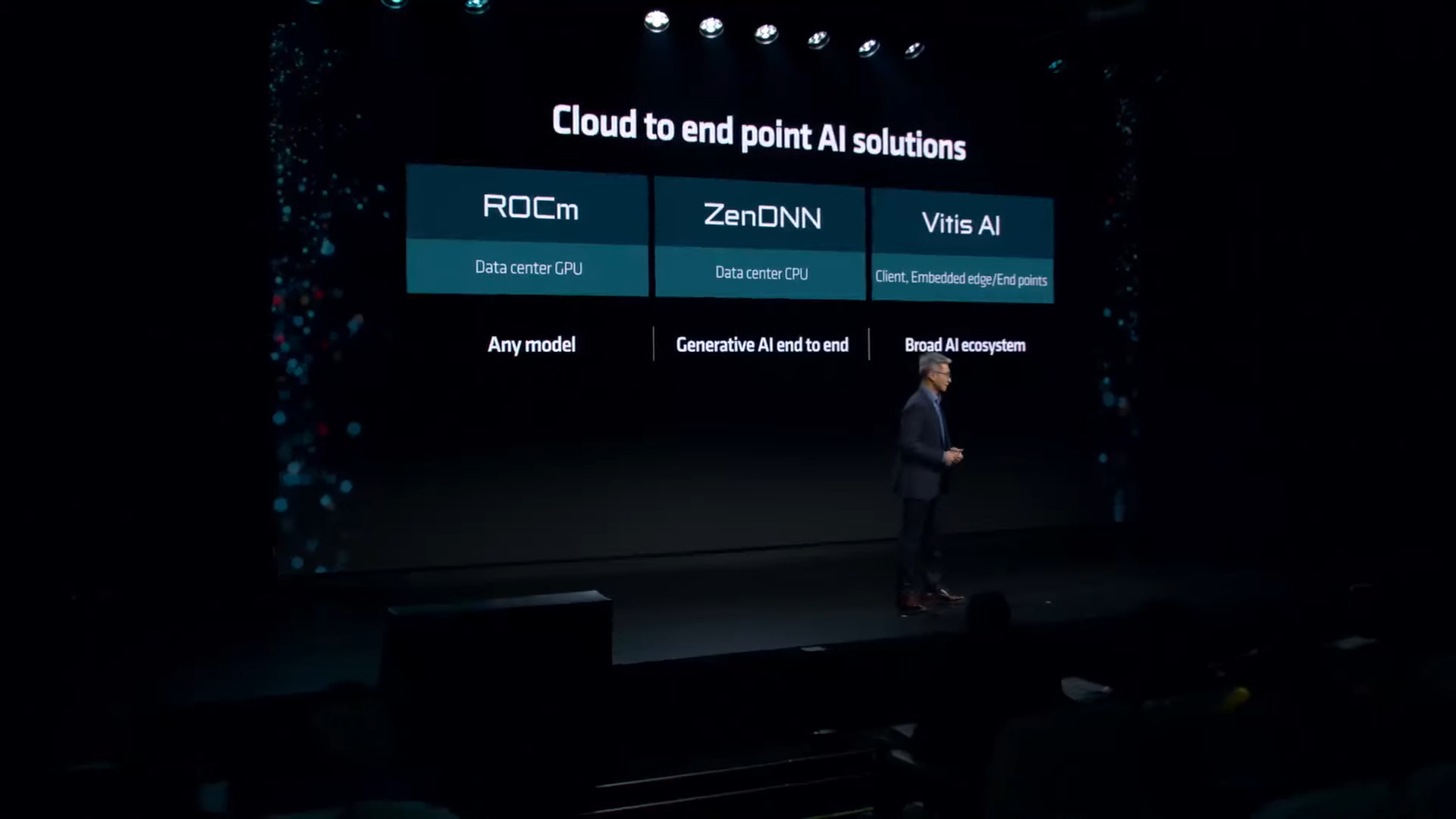

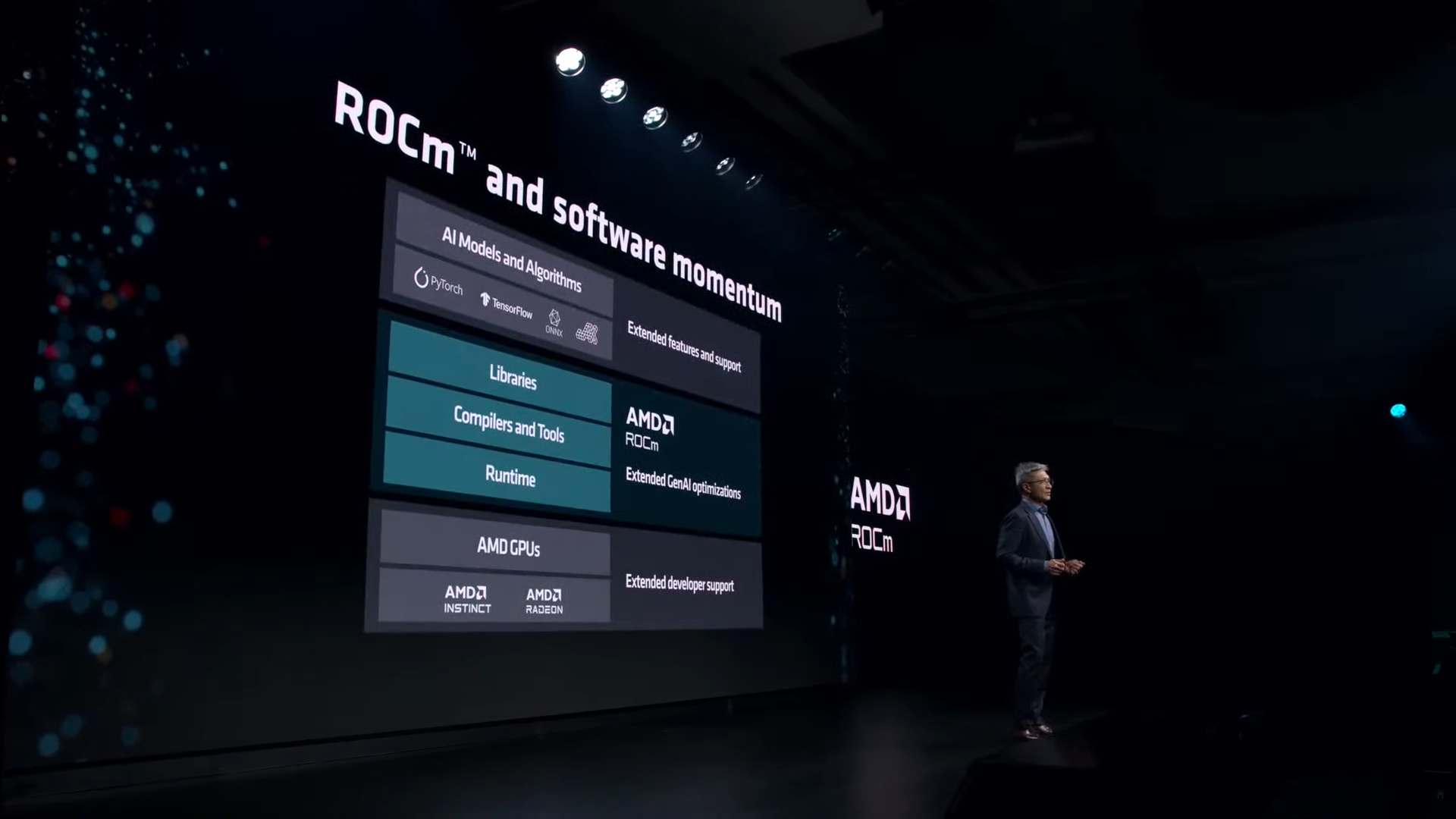

AMD CTO Victor Peng has come to the stage to talk about the latest advances in ROCm, AMD's open source competitor to Nvidia's CUDA.

Peng talked about the advantages of the open ROCm ecosystem, as opposed to Nvidia's proprietary approach.

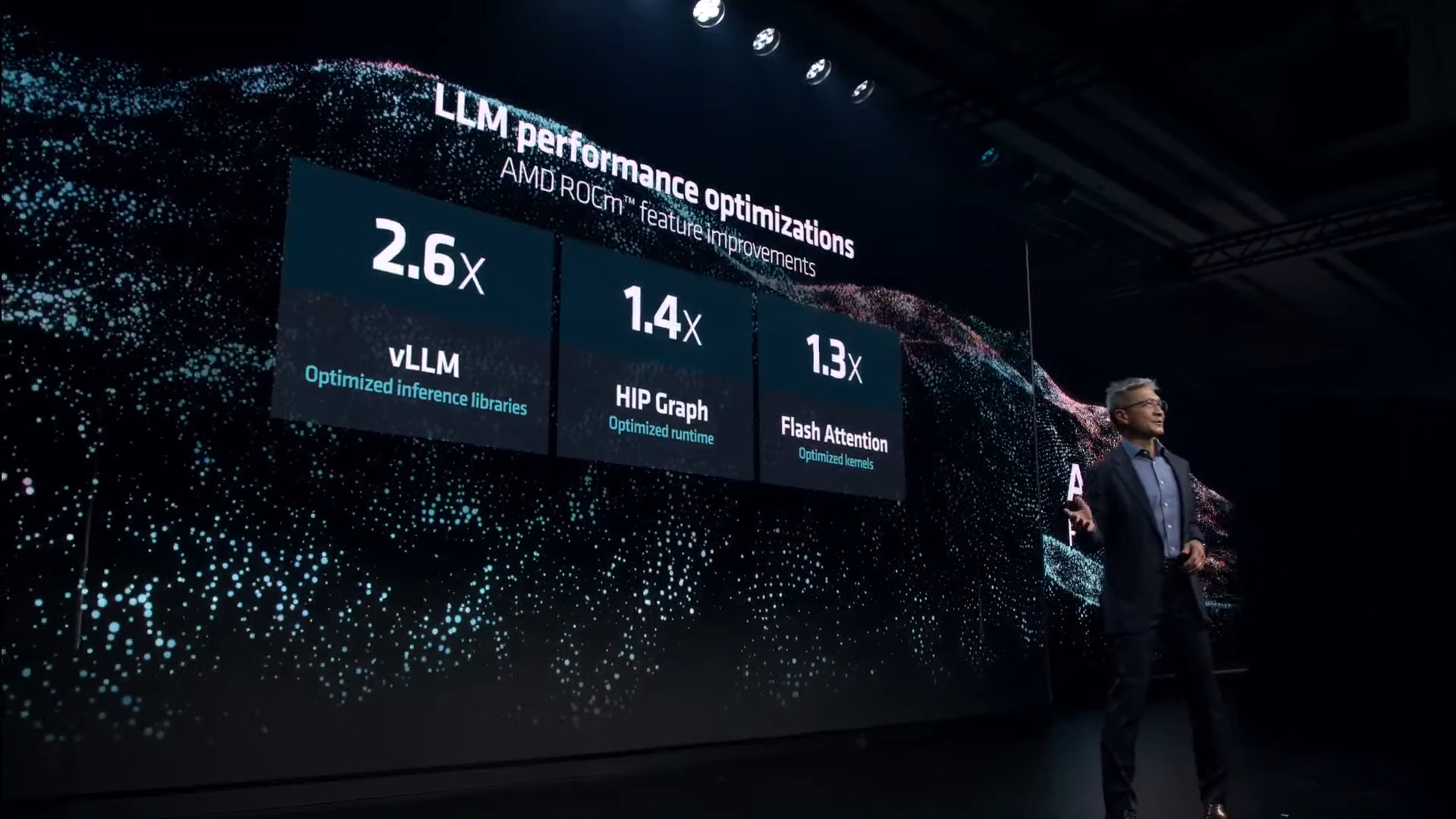

AMD's next-gen ROCm 6 is launching later this month. Support for Radeon GPUs continues, but it also has new optimizations for MI300.

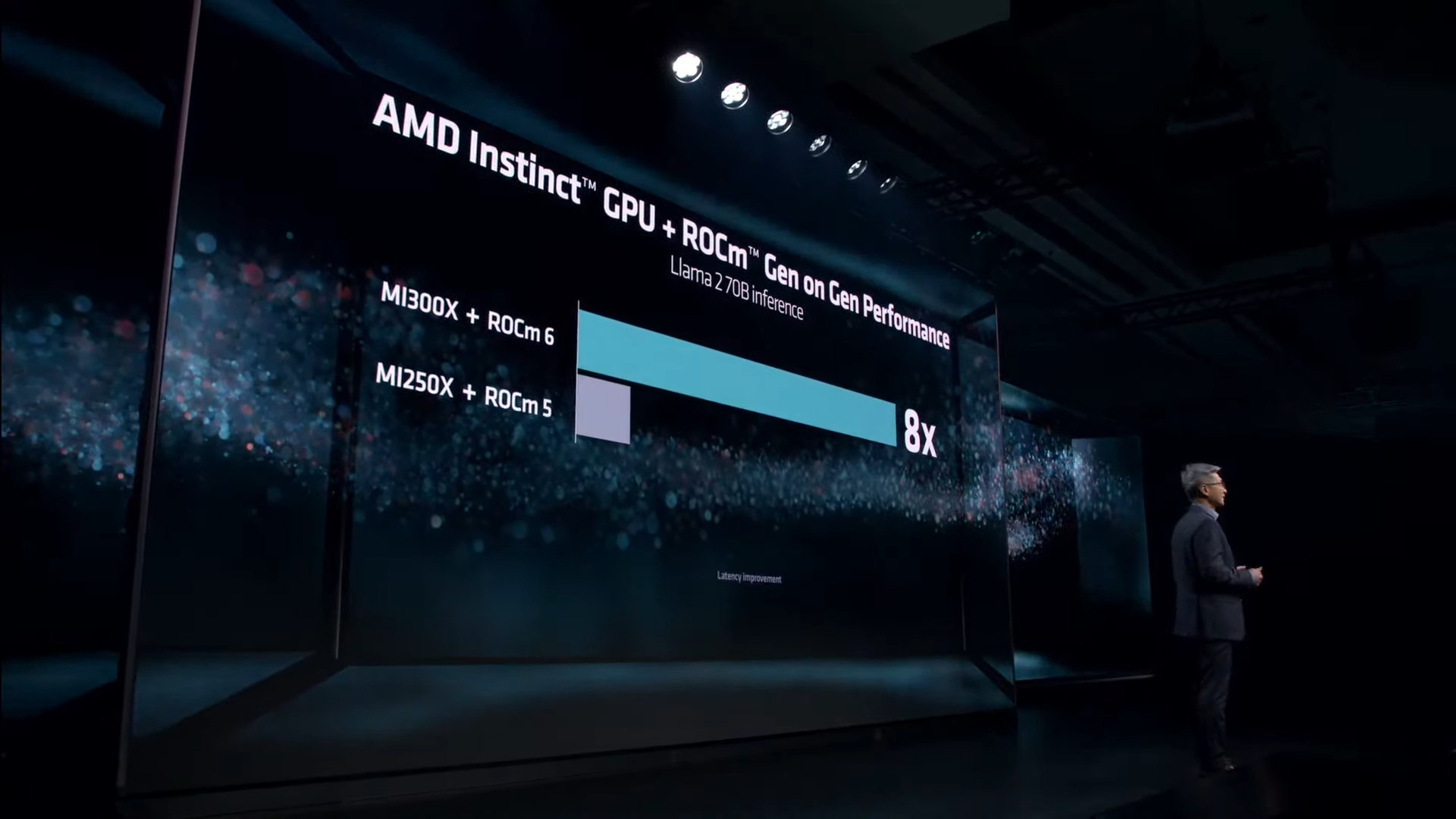

ROCm provides up to a 2.6X improvement in vLLM, among other optimizations that total an 8X improvement on MI300X compared to ROCm 5 on MI250X (this isn't a great comparison).

AMD continues to work with industry stalwarts like Hugging Face and PyTorch to expand the open source ecosystem.

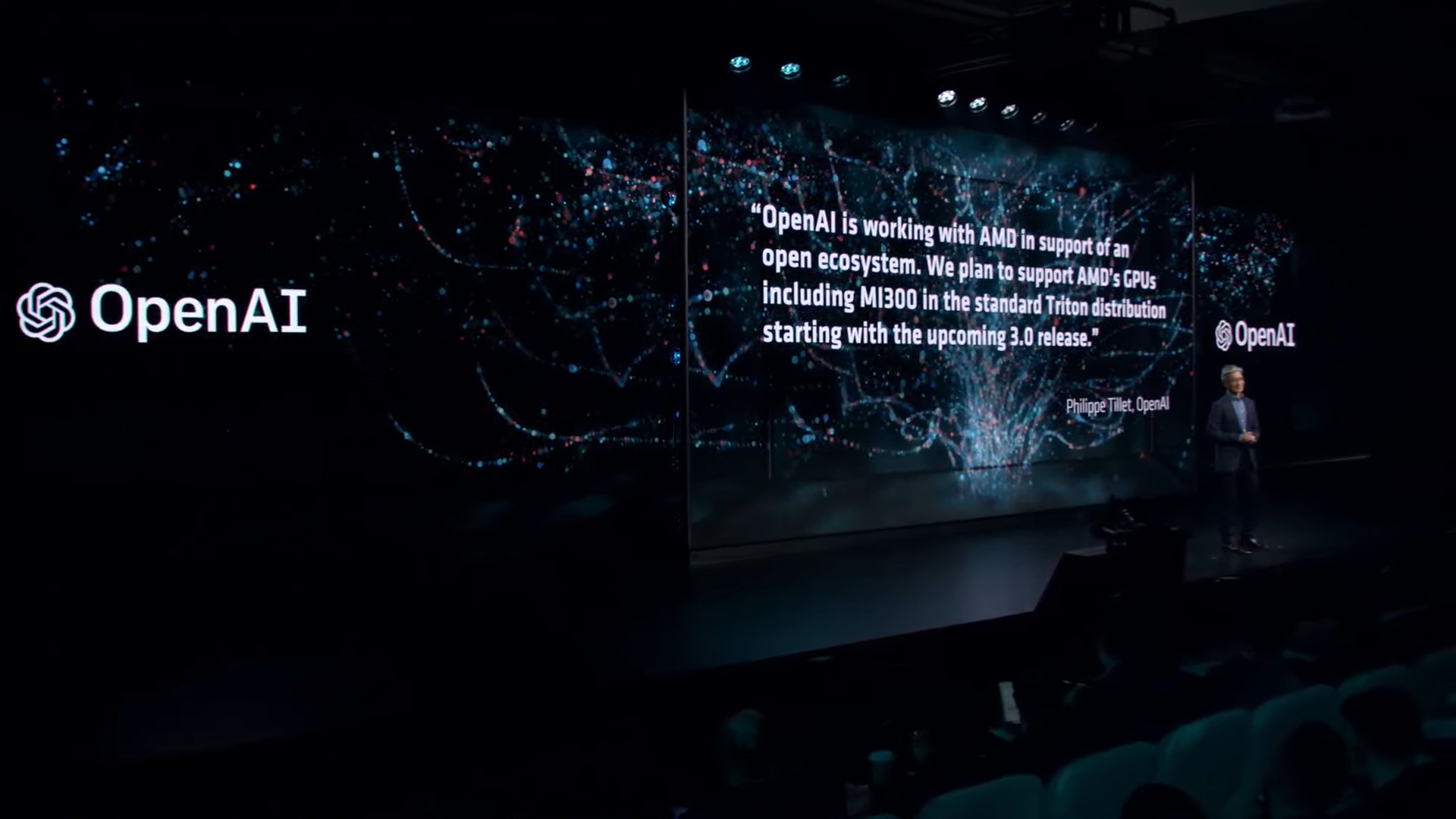

AMD GPUs, including the MI300, will be supported in the standard Triton distribution starting with version 3.0.

Peng is now talking with leaders from Databricks, Essential AI, and Lamini.

The talk has turned to different forms of AI, and possible evolutionary updates in the future.

Here are some of the specifications of AMD's new Instinct MI300X platform. The system consists of eight MI300X accelerators in one system. It supports 400 GbE networking and has a monstrous 1.5TB of total HBM3 capacity.

62,000 AI models run on the Instinct lineup today, and many more will run on the MI300X. Peng says the arrival of ROCm 6 heralds the inflection point for the broader adoption of AMD's software.

Lisa Su has returned to the stage and invited Ajit Mathews, the Senior Director of Engineering at Meta, to join her.

Meta feels that an open source approach to AI is the best path forward for the industry.

Meta has been benchmarking ROCm and working to build its support in PyTorch for several years. Meta will deploy Instinct MI300X GPUs in its data centers.

AMD is working to bring integrated AI solutions to market for enterprises, a lucrative portion of the market.

Arthur Lewis, the President of Dell's Core Business Operations, Global Infrastructure Solutions Group, has come to the stage to talk about the company's partnership with AMD.

Dell has added AMD's MI300X to its portfolio, offering PowerEdge servers with eight of the GPUs inside.

Supermicro founder and CEO Charles Liang has come to the stage to talk about how the company is embracing the generative AI wave with new systems.

Supermicro has MI300X systems in both air-cooled and water-cooled versions, thus allowing customers to build rack-scale solutions.

Kirk Skaugen, the EVP and President of the Lenovo Infrastructure Solutions Group, has come to the stage. Lenovo is focusing heavily on developing new AI ThinkEdge systems.

Lenovo has added the MI300X to the ThinkSystem platform.

AMD has engaged with an incredible number of OEM and ODM system vendors, and is now working with new cloud service providers, too.

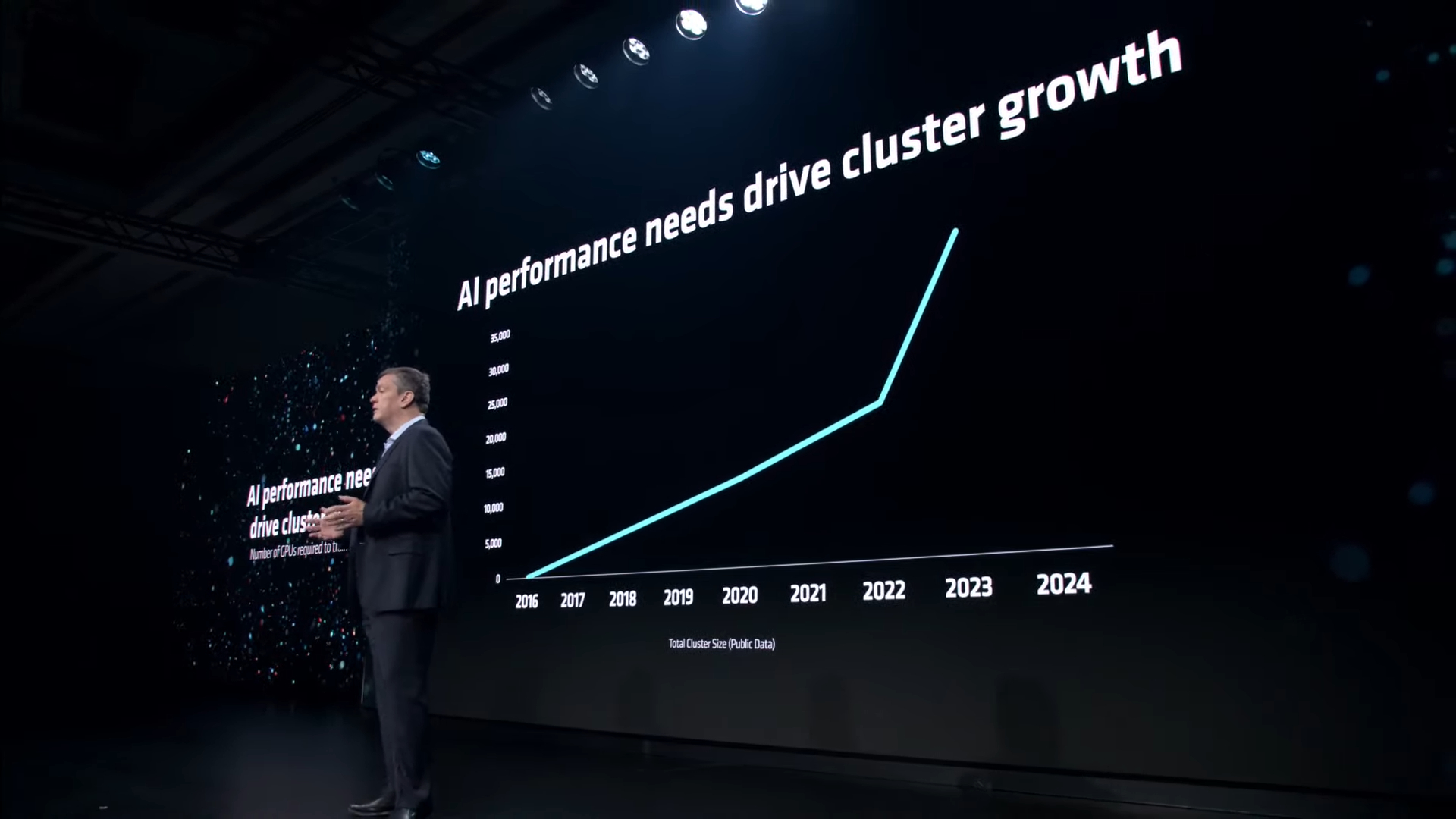

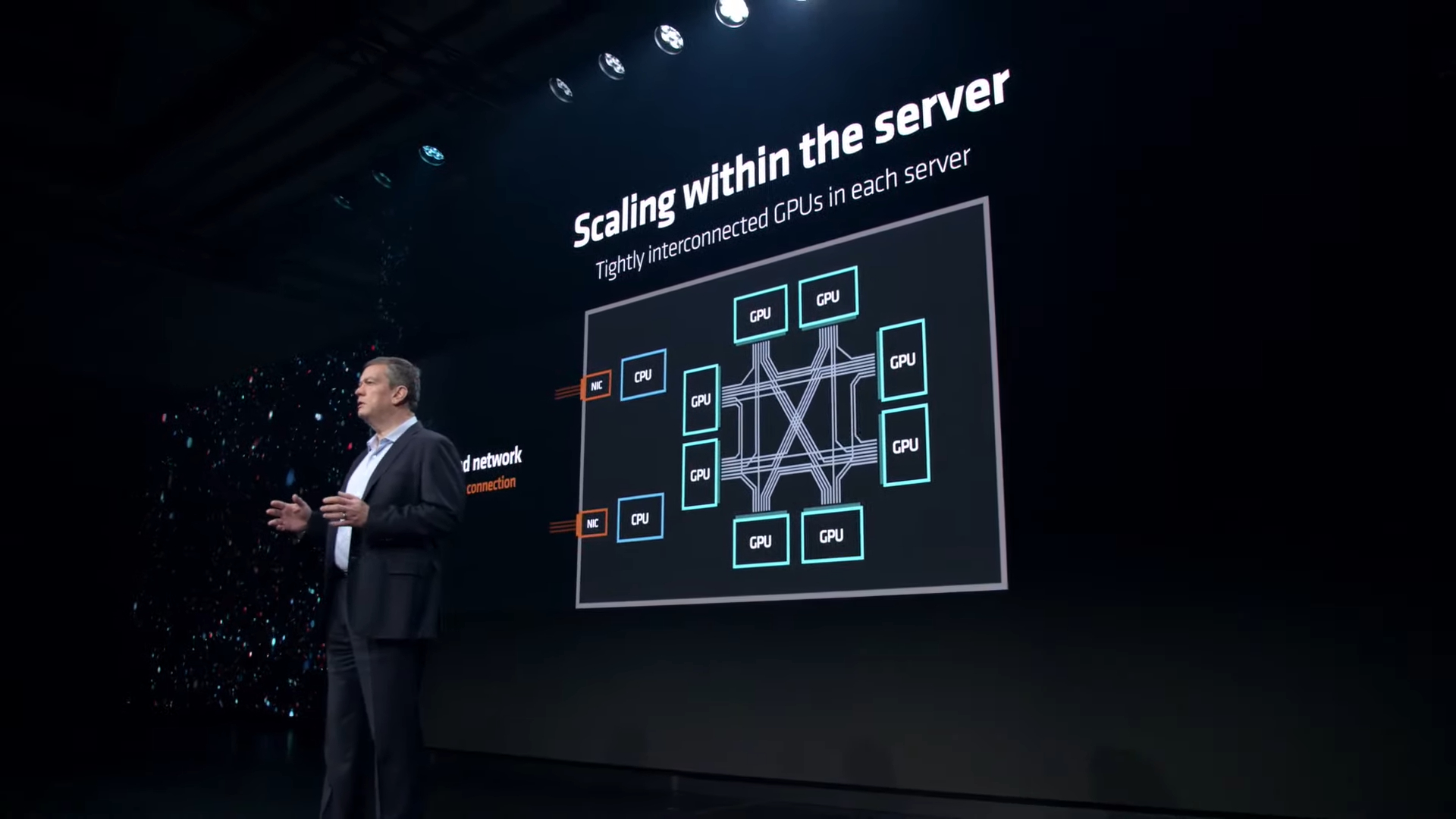

Forrest Norrod, AMD's EVP and GM of the data center group, has come to the stage.

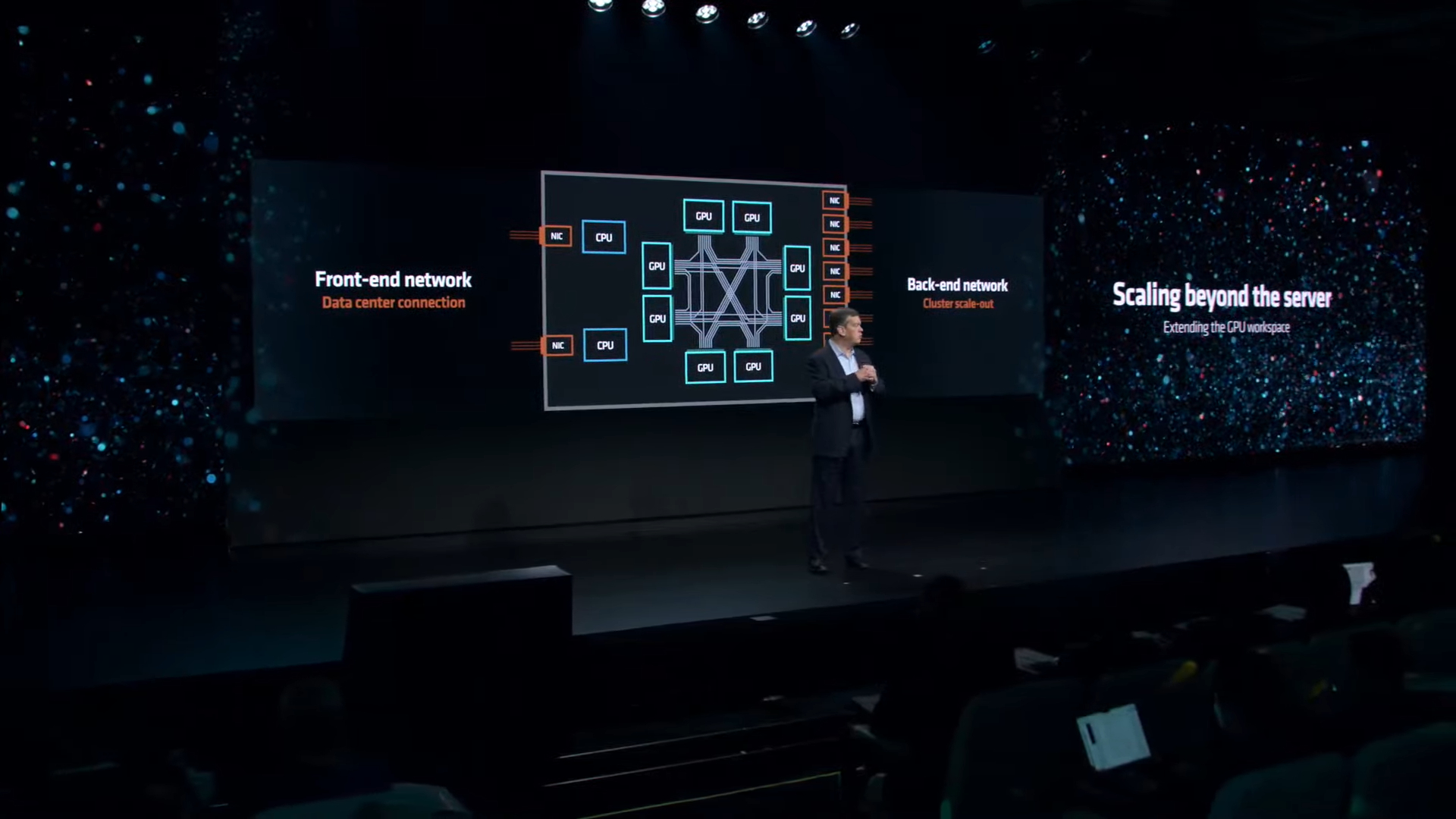

AI performance needs are driving the growth of clusters, thus requiring high-performance networking.

AMD uses its Infinity Fabric technology to provide near linear performance scaling, while Nvidia uses its NVLink.

AMD is now opening up its Infinity Fabric technology to outside firms, a huge announcement that will expand the number of companies that use its networking protocol. Meanwhile, Nvidia's NVLink remains proprietary.

AMD thinks Ethernet is a better solution than InfiniBand for data center networking. Ethernet does have a host of advantages, including scalability and an open design. AMD is part of the new Ultra Ethernet standard to further performance for AI and HPC workloads.

Norrod invited representatives from Arista, Broadcom, and Cisco to the stage to talk about the importance of continued adoption of the Ethernet standard for data centers.

If you're wondering why this is important -- Nvidia has acquired Mellanox and uses its InfiniBand networking gear heavily in its systems. Notably, Nvidia is not a member of the Ultra Ethernet consortium.

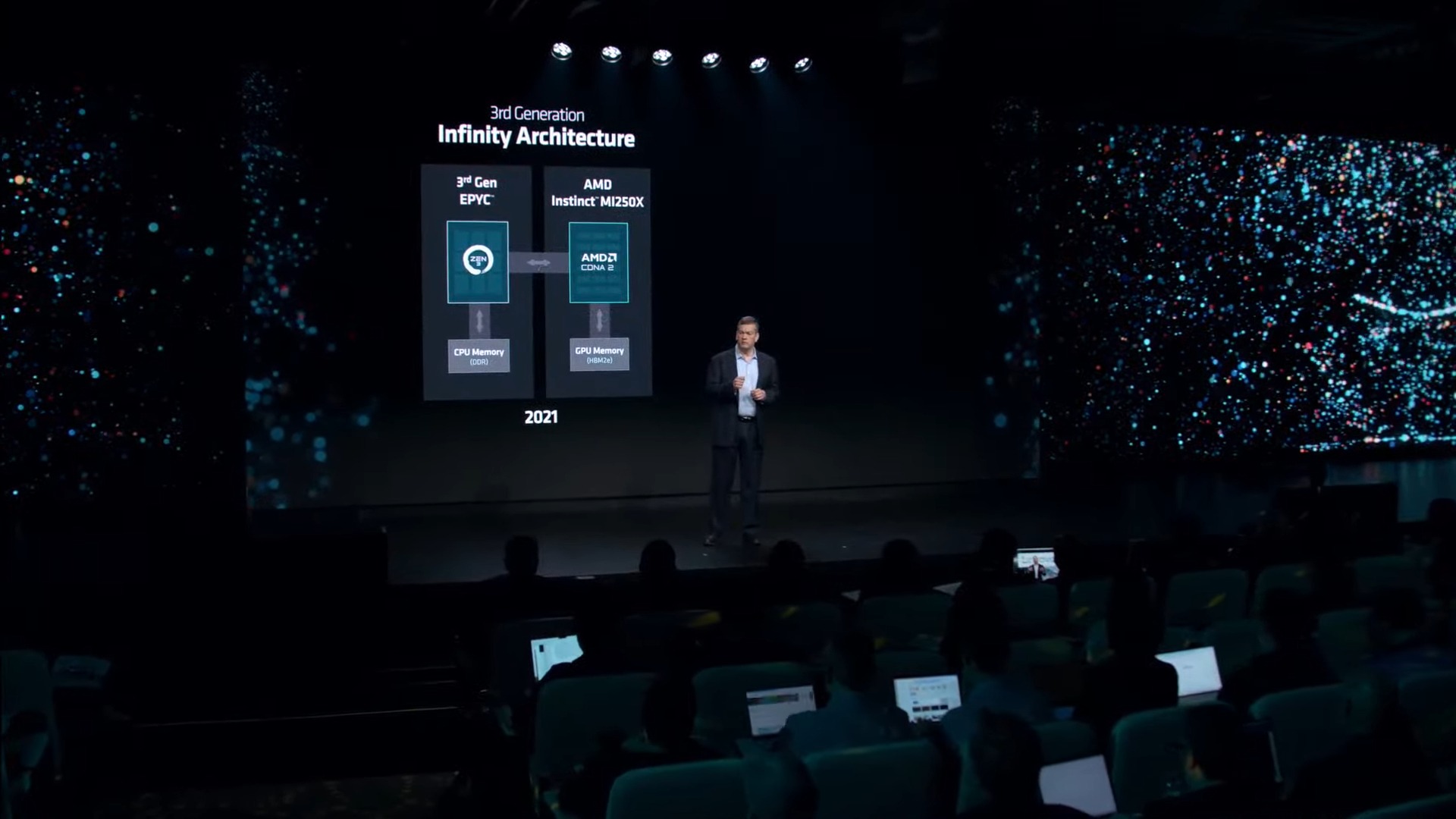

Here comes another hardware announcement! Norrod is talking about AMD's traditional approach to CPUs.

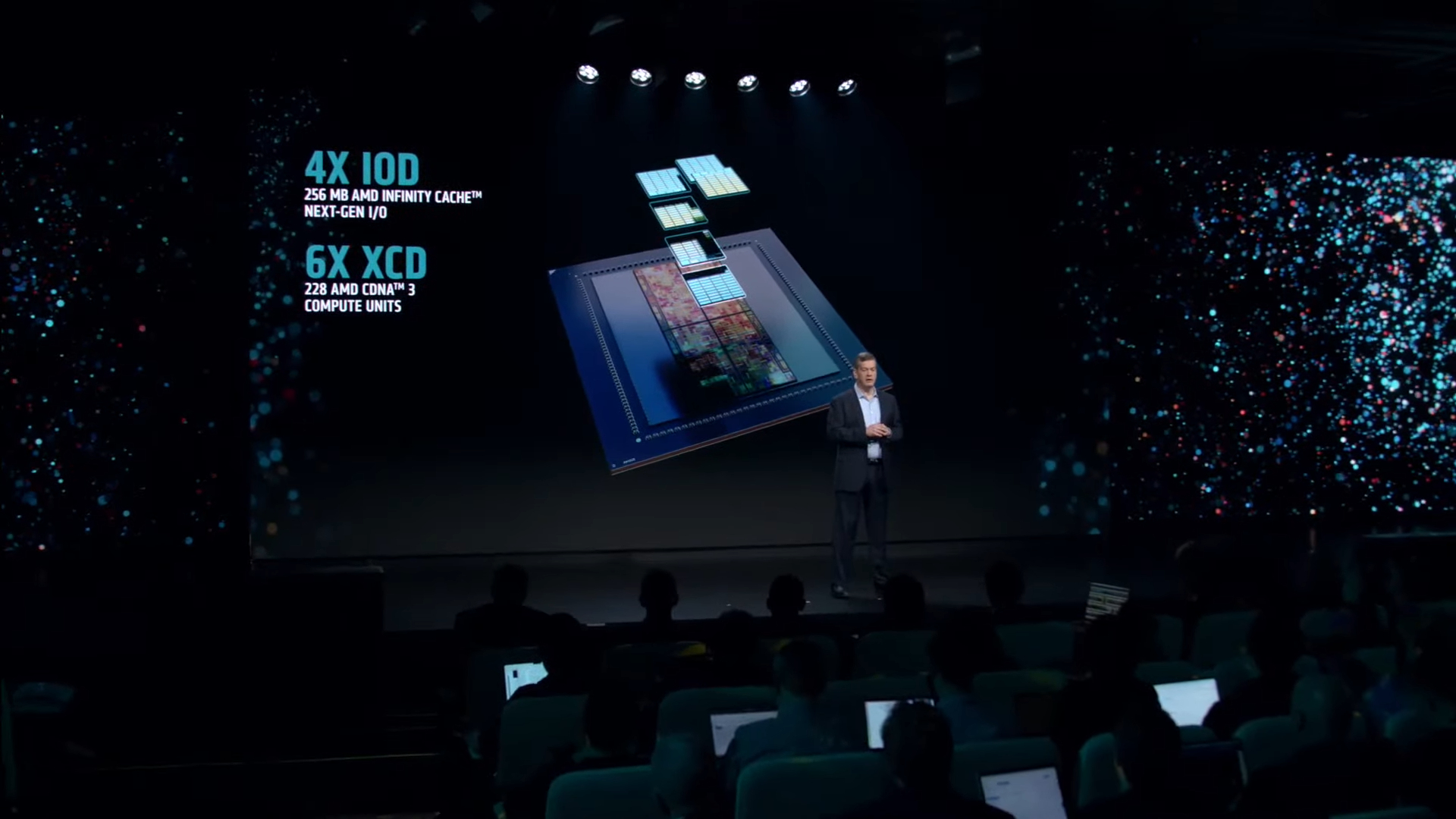

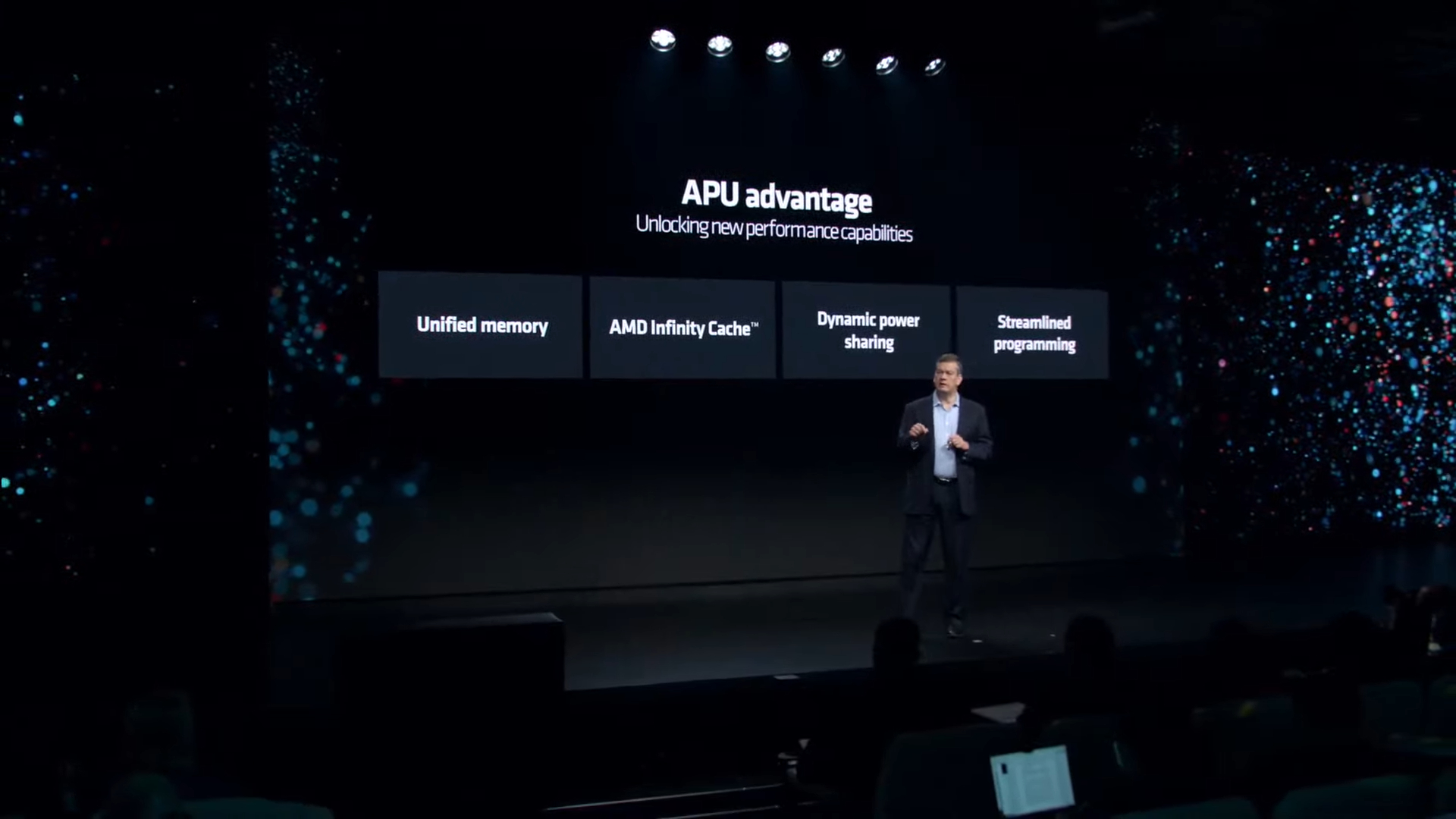

AMD has announced that the first data center APU, the MI300A, has entered volume production. This is the chip that powers El Capitan. The MI300A uses the same fundamental design and methodology as the MI300X but substitutes in three 5nm core compute dies (CCDs) with eight Zen 4 CPU cores apiece, the same as found on the EPYC and Ryzen processors, thus displacing two of the XCD GPU chiplets.

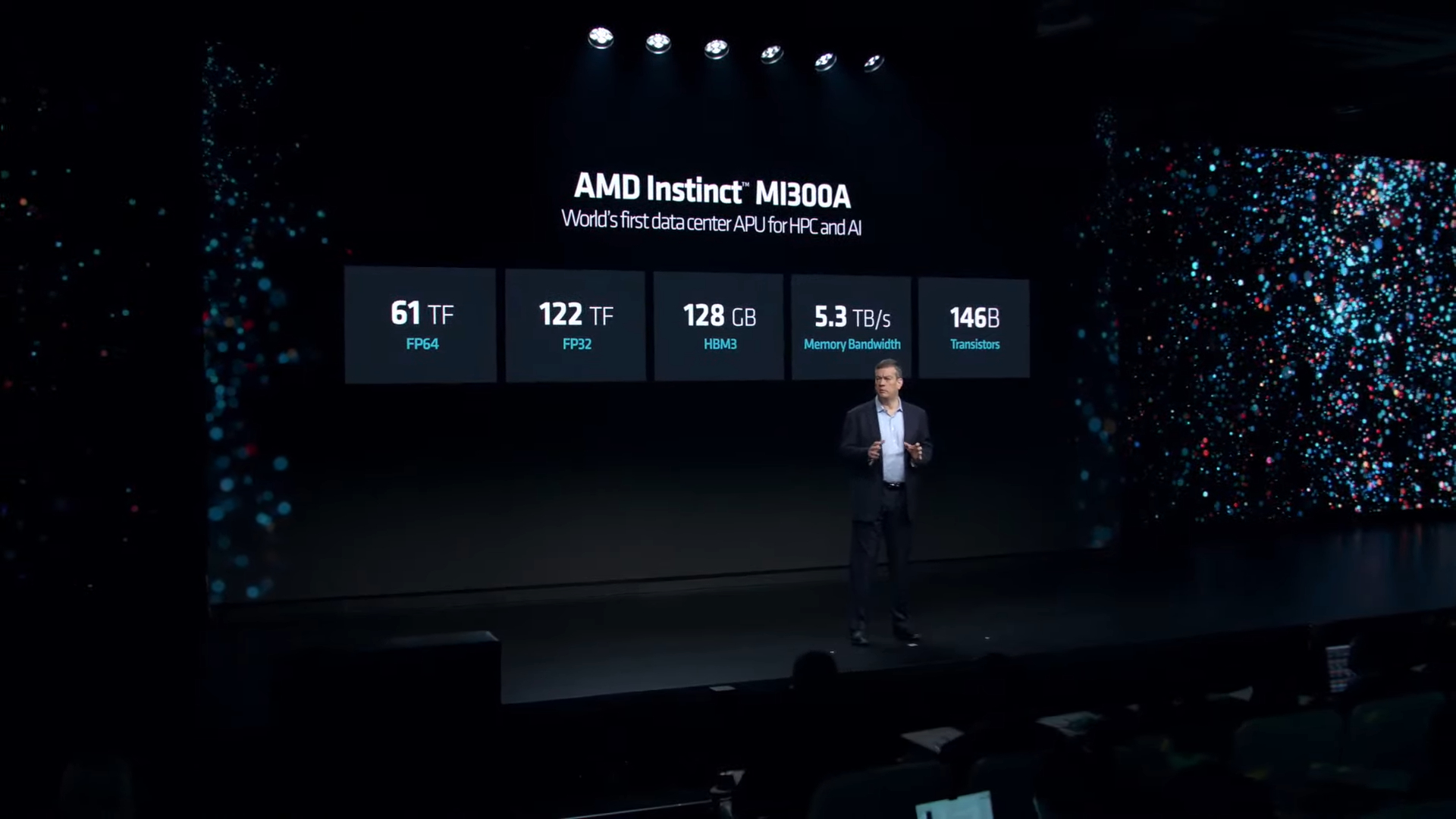

Here are the mind-bending stats behind the MI300A.

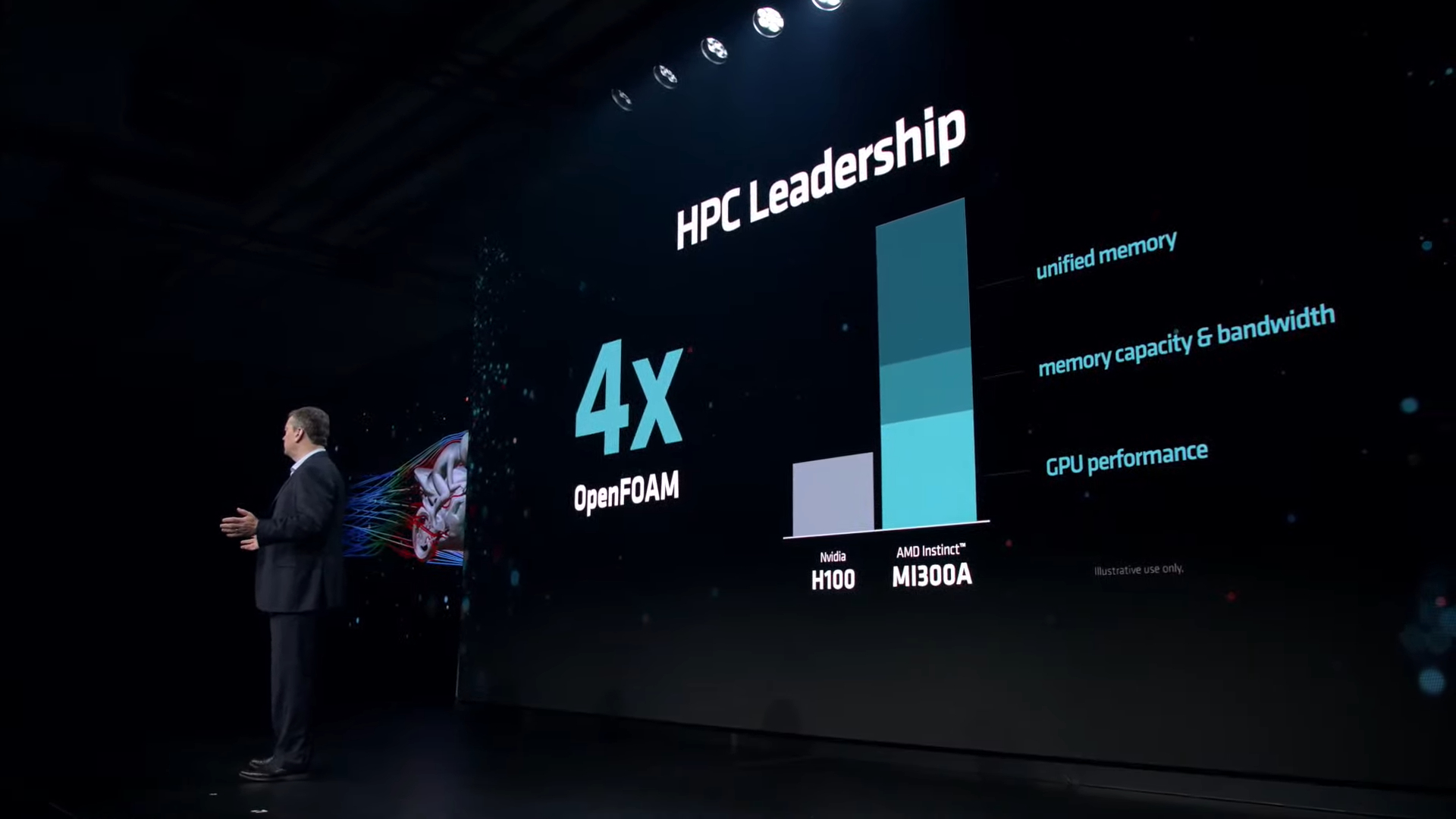

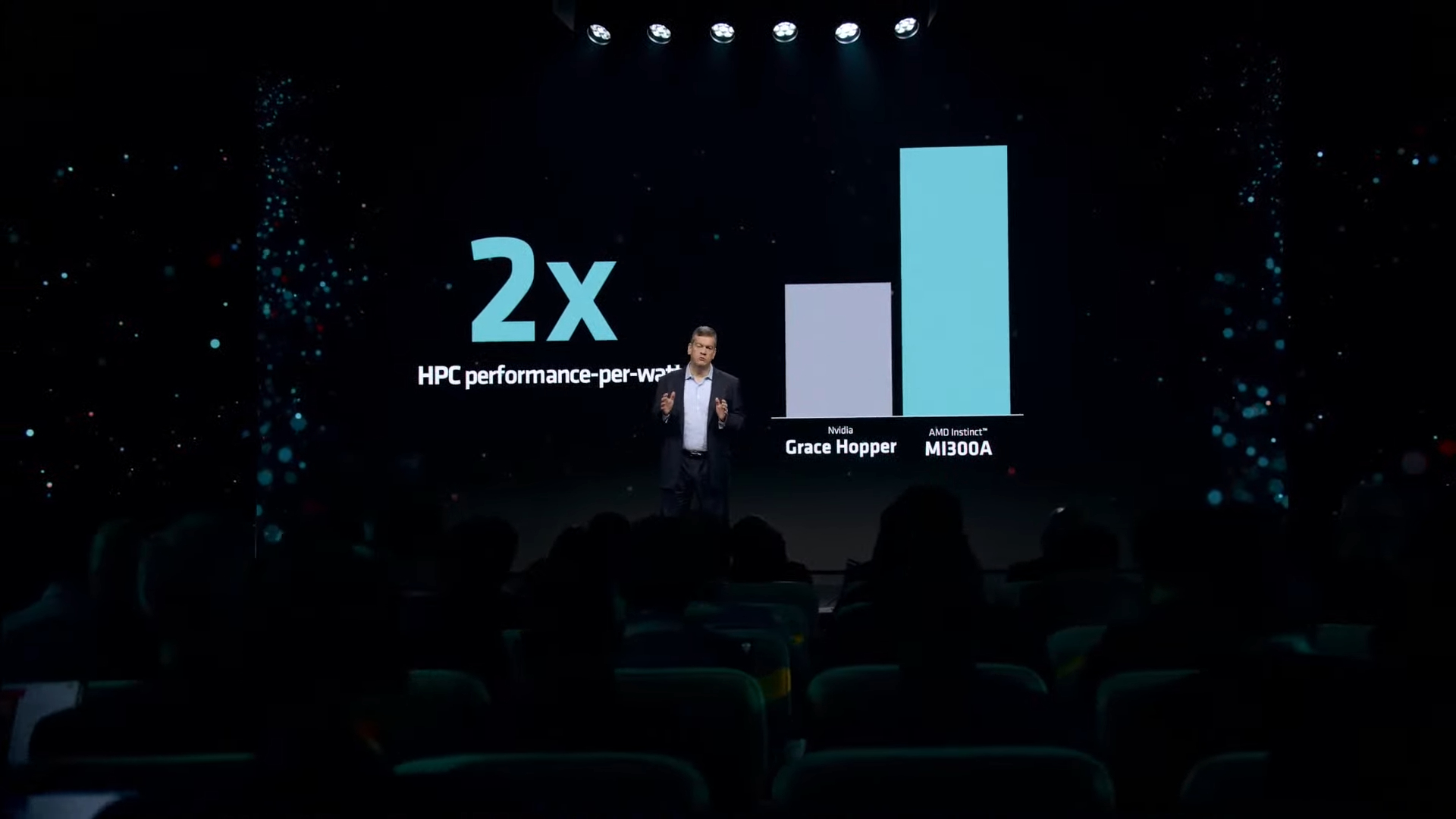

AMD claims that MI300A provides up to 4X the performance in the OpenFOAM motorbike test, but this comparison isn’t ideal: The H100 is a GPU, while the blended CPU and GPU compute in the MI300A provides an inherent advantage in this memory-intensive workload through its shared memory addressing space. Comparisons to the Nvidia Grace Hopper GH200 Superchip, which also brings a CPU and GPU together in a tightly coupled implementation, would be better here, but AMD says that it couldn’t find any publicly listed OpenFOAM results for Nvidia’s chip.

AMD says MI300A is twice as power efficient as the Nvidia Grace Hopper Superchip.

Here's a nice shot of the MI300A.

AMD broke the exascale barrier with the Frontier supercomputer, which uses its MI250X accelerators. Now the MI300A will be deployed into El Capitan, which is expected to pass two exaflops of performance.

HPE and AMD have developed the supercomputers for the Department of Energy.

The MI300A will be available soon from partners around the world.

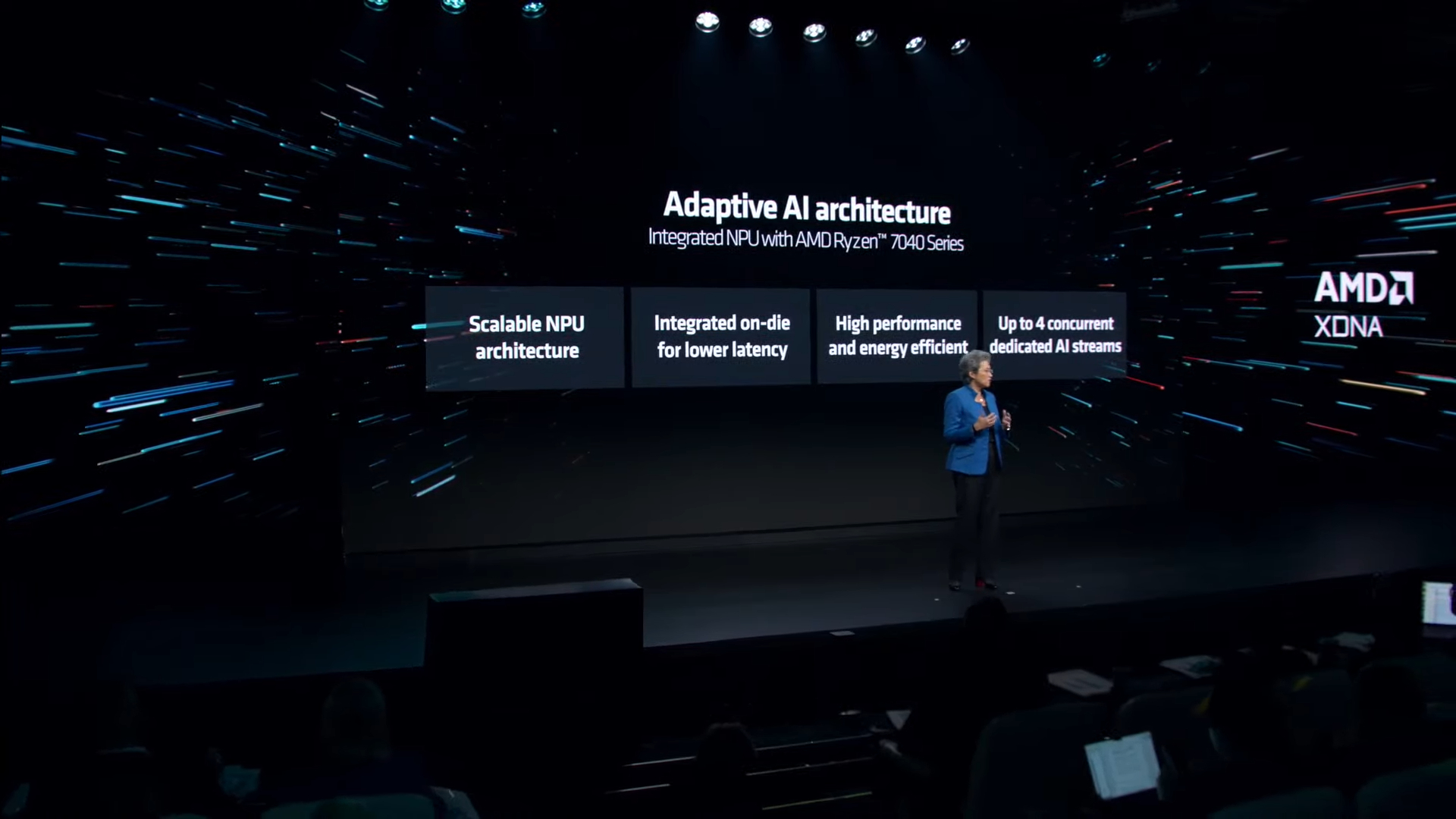

Lisa Su has come back to the stage and has moved into talking about consumer AI enablement. AMD integrated the XDNA architecture into its Ryzen 7040 chips, bringing the first dedicated on-die AI processor to market in PCs.

AMD has worked diligently to enable the AI-accelerated software ecosystem in Windows.

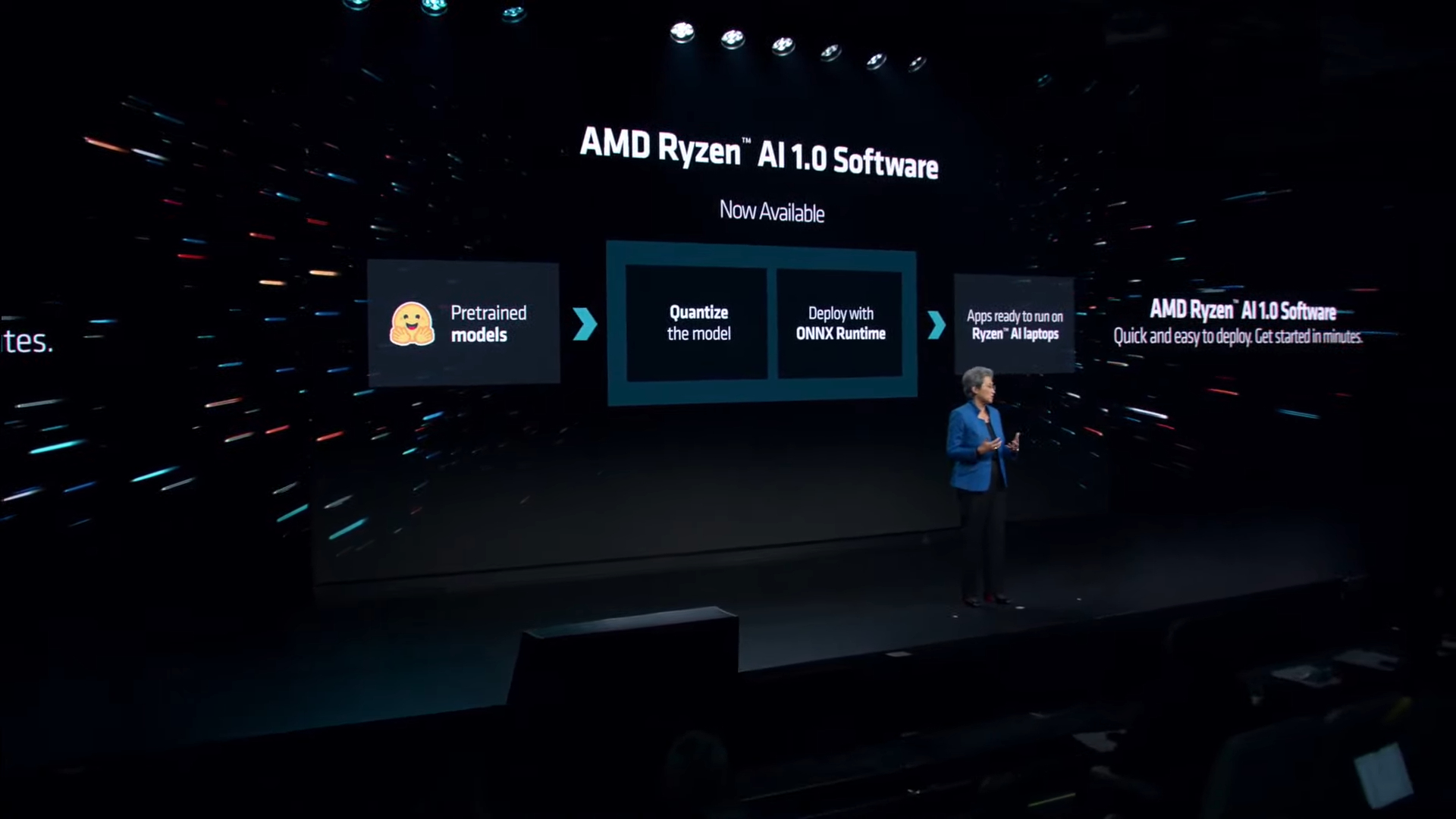

AMD has released its Ryzen AI 1.0 software today. This software will allow its customers to easily deploy AI models on NPU-equipped laptops.

Lisa Su announced the launch of the Ryzen 8040 series, codenamed Hawk Point. These chips are shipping to partners now. AMD claims up to 60% more performance in AI workloads.

AMD is working with Microsoft to broaden the AI ecosystem with AI processing power.

Lisa teased the next-gen "Strix Point" processors that will arrive next year. AMD also provided performance claims for XDNA 1, saying the NPU alone delivers 10 TOPS (teraops INT8) of performance in the Phoenix 7040 series, and that increases to 16 TOPS in the Hawk Point 8040 series.

Here's the wrap-up slide. MI300X and MI300A are already shipping and are in production with a wide range of OEM partners. ROCm 6 is coming, and AMD launched the Ryzen 8040 series.

And with that, Lisa closed the show.