AMD unveils Instinct MI300X GPU and MI300A APU, claims up to 1.6X lead over Nvidia’s competing GPUs

AMD’s chiplet strategy comes to fore.

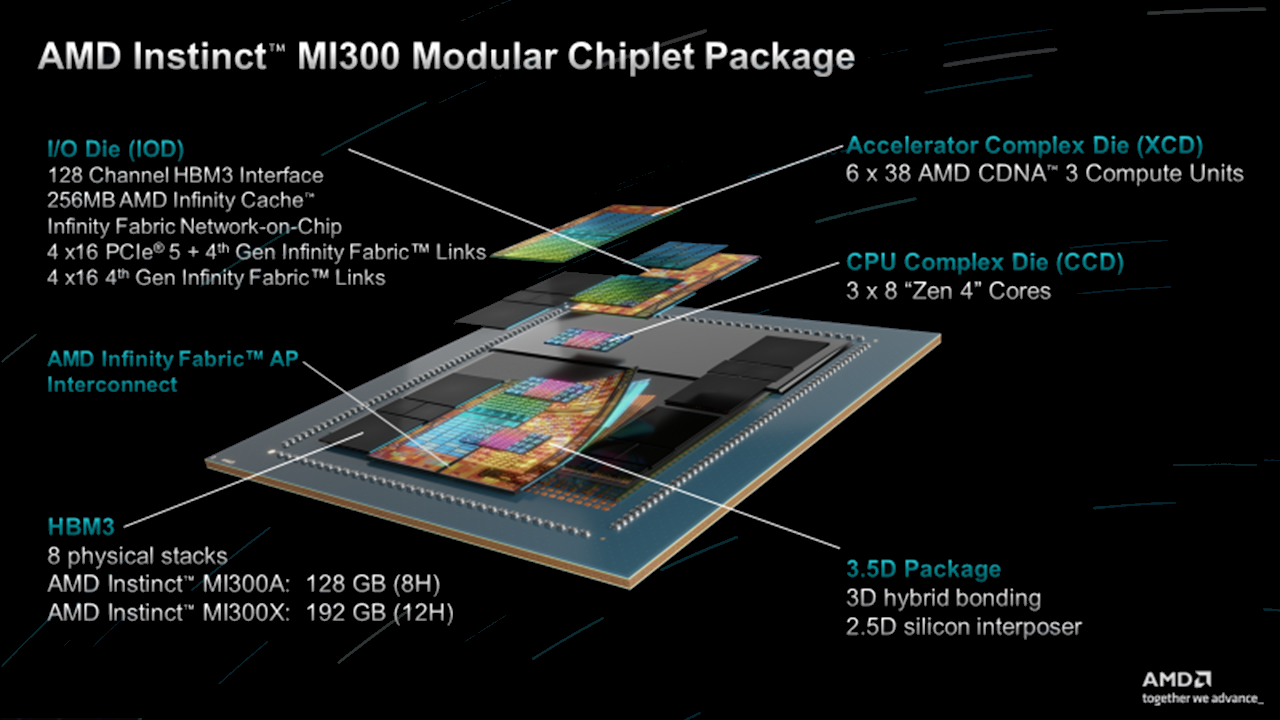

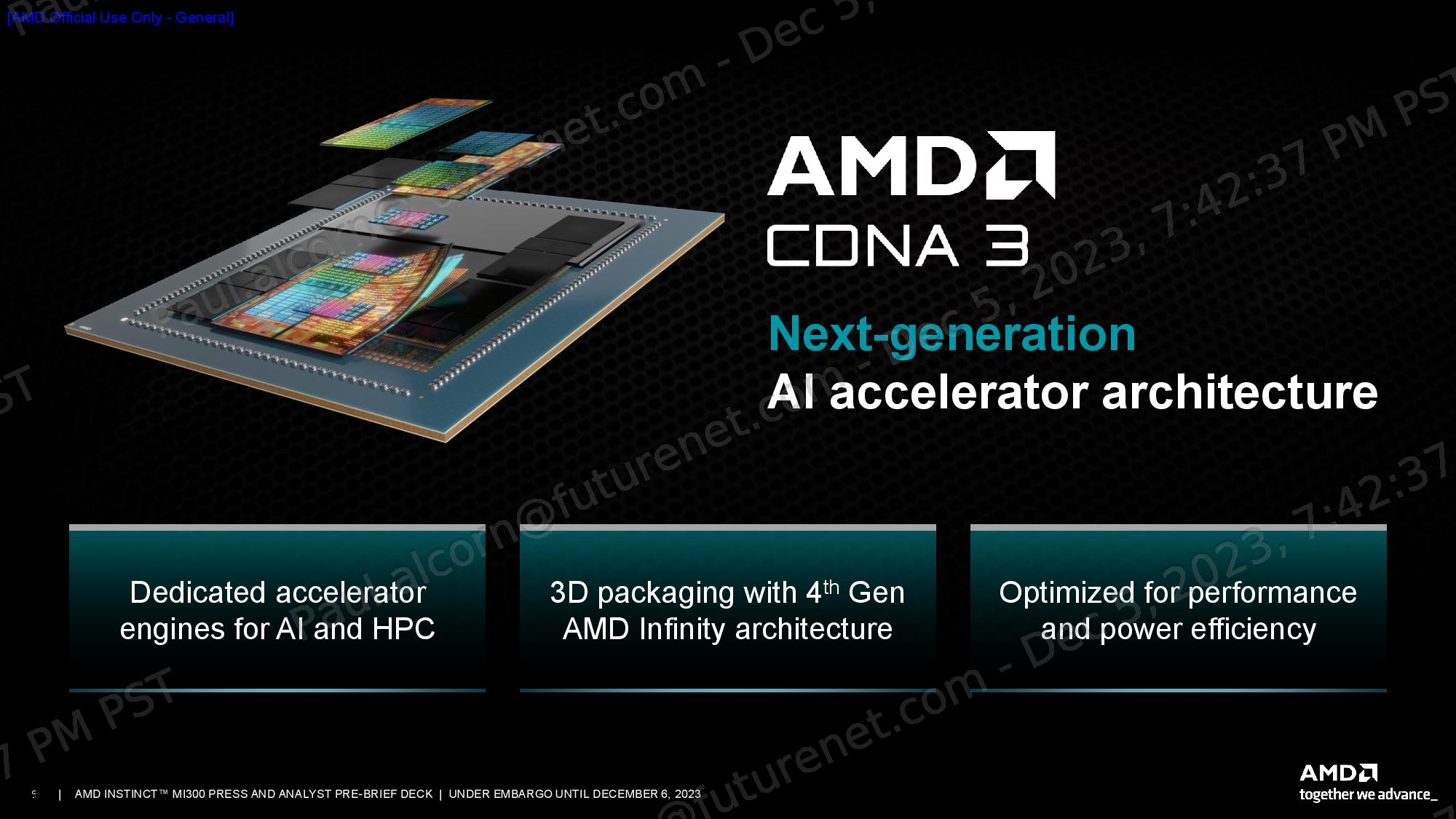

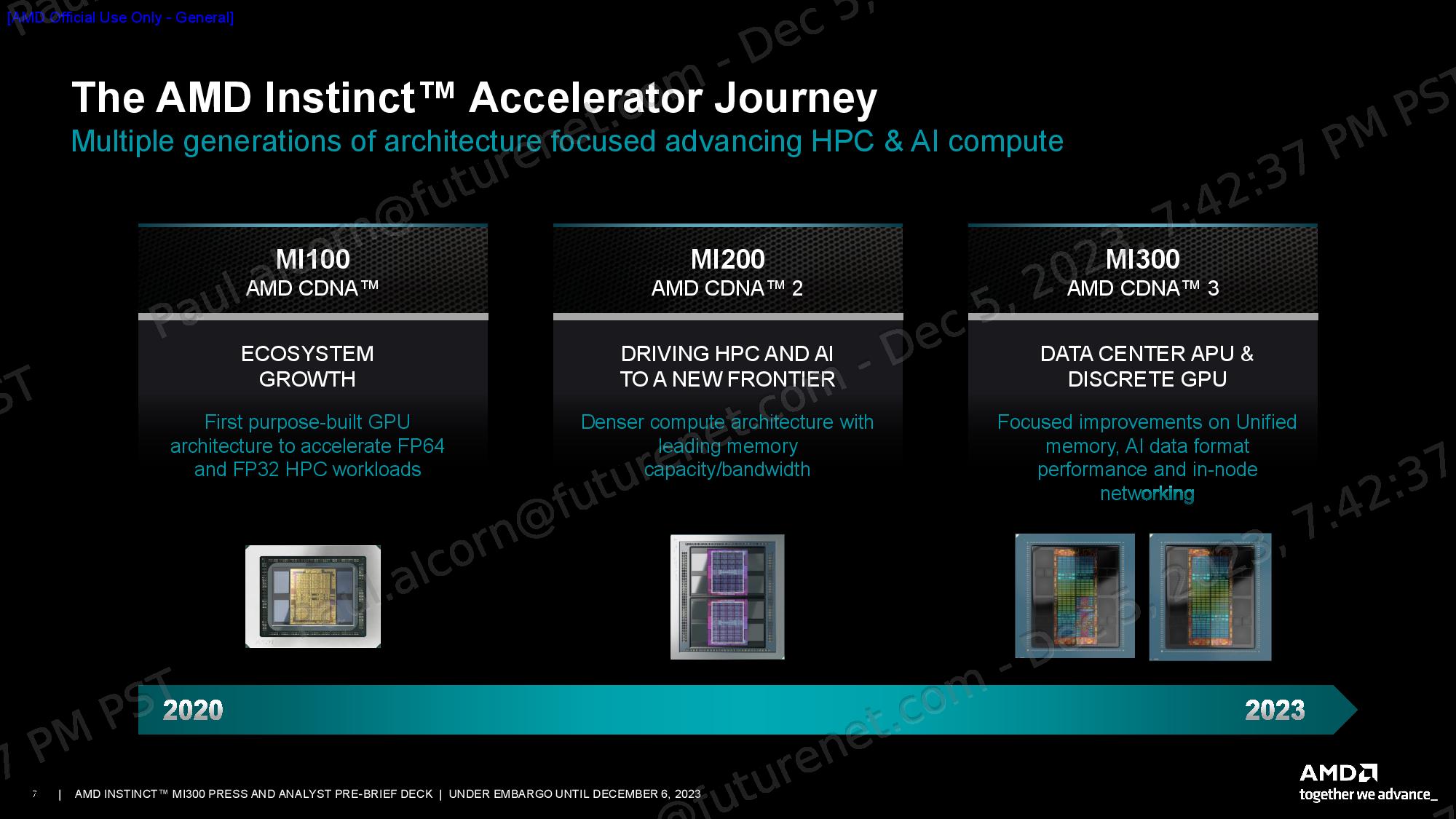

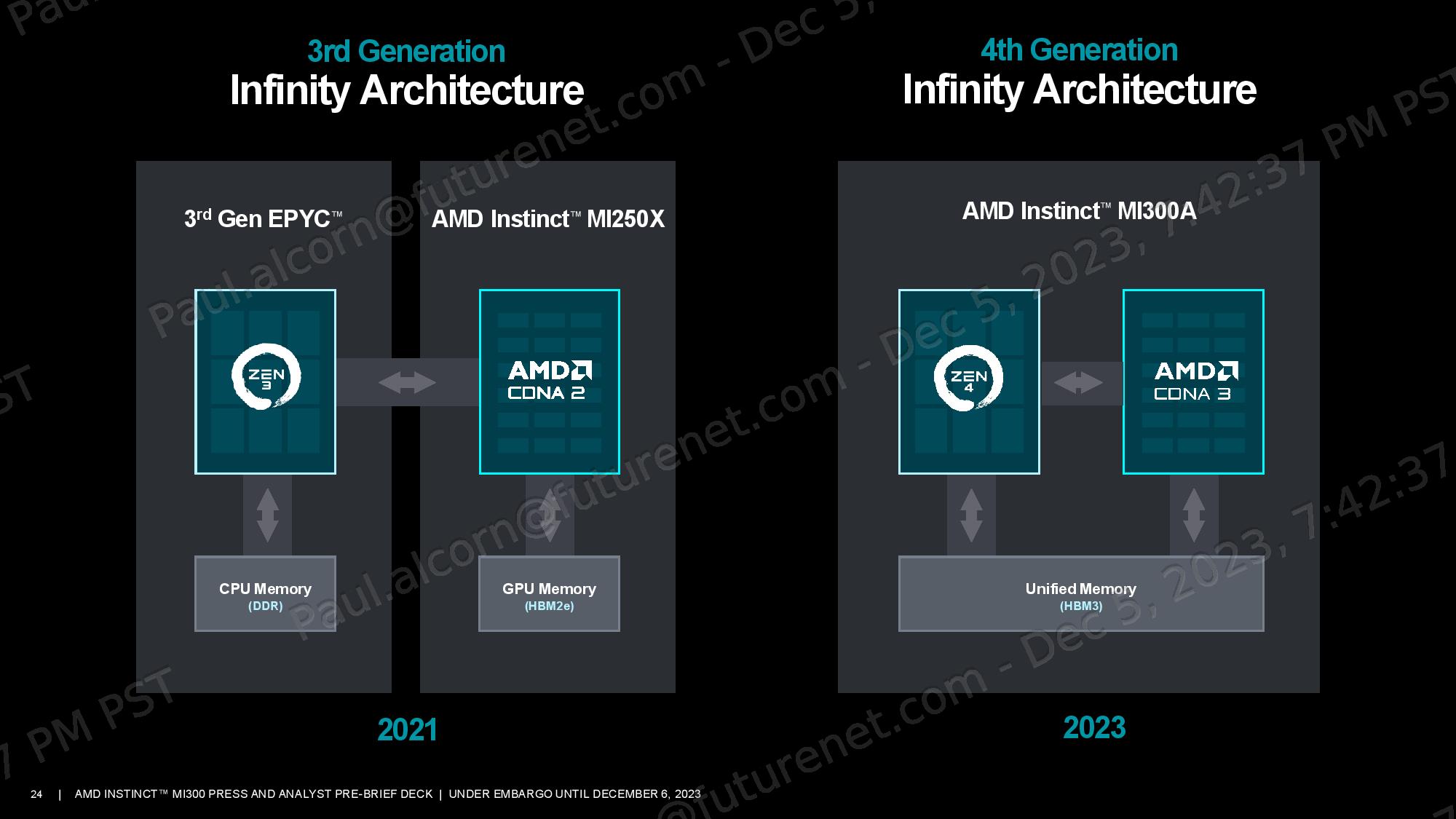

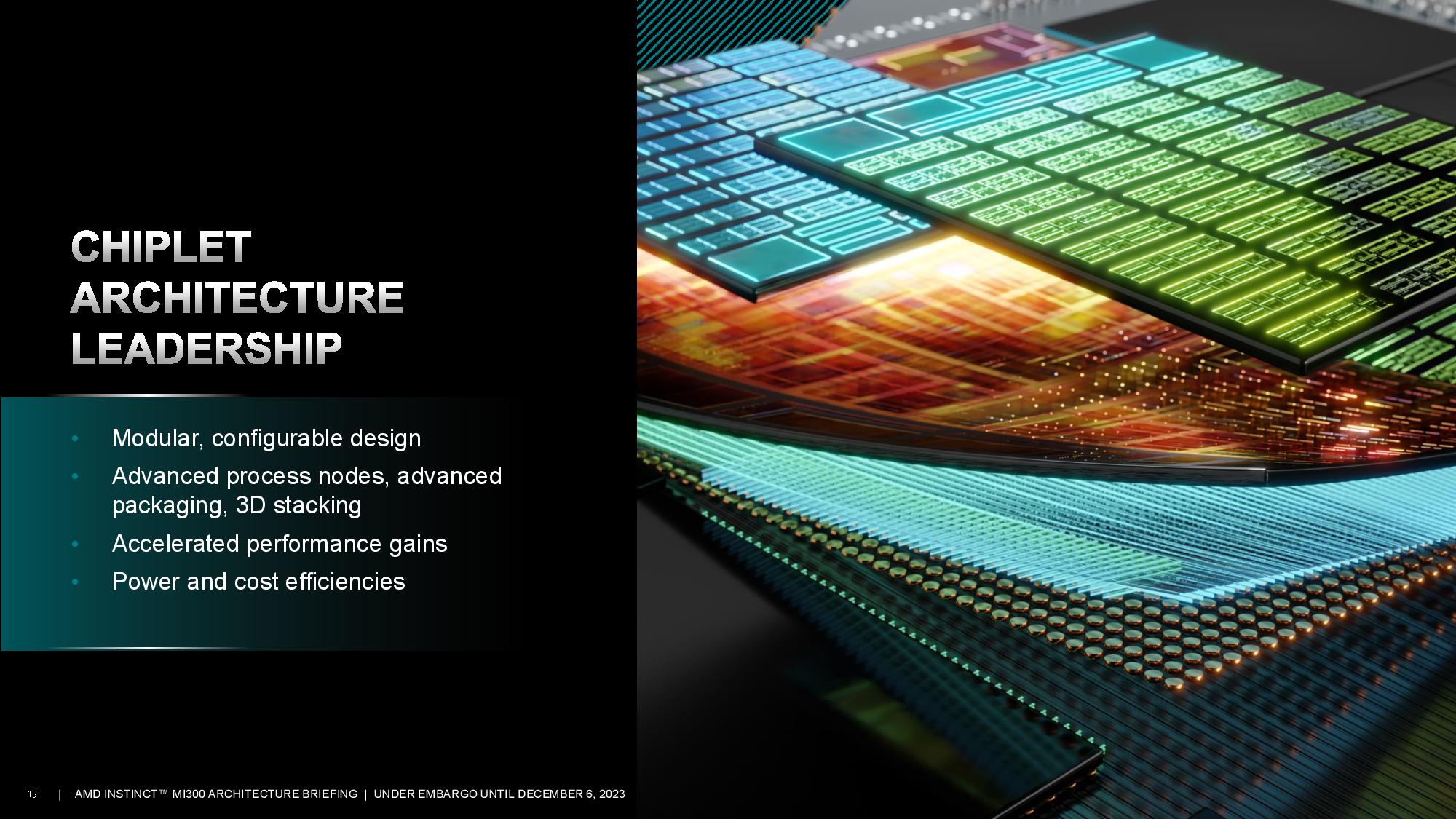

AMD launched its Instinct MI300X AI accelerator and the Instinct MI300A, the world’s first data center APU, during its Advancing AI event here in San Jose, California, as it looks to capitalize on the booming generative AI and HPC market. AMD forged its MI300 lineup using the most advanced production technologies ever pressed into mass production, employing new techniques like its ‘3.5D’ packaging to produce two multi-chip behemoths that it says provide Nvidia-beating performance in a wide range of AI workloads. AMD isn’t sharing pricing for its new exotic silicon, but the products are now shipping to a wide range of OEM partners.

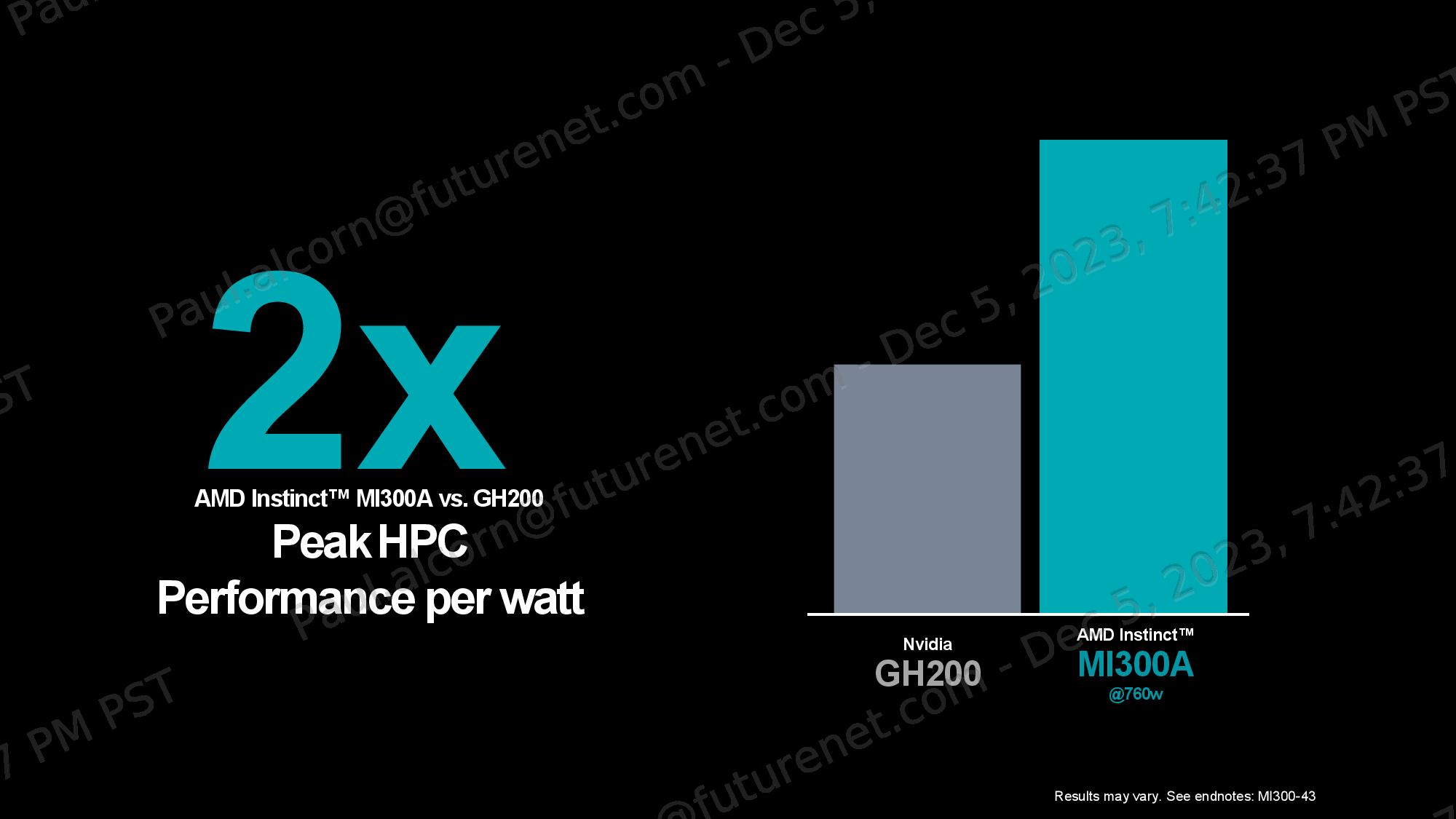

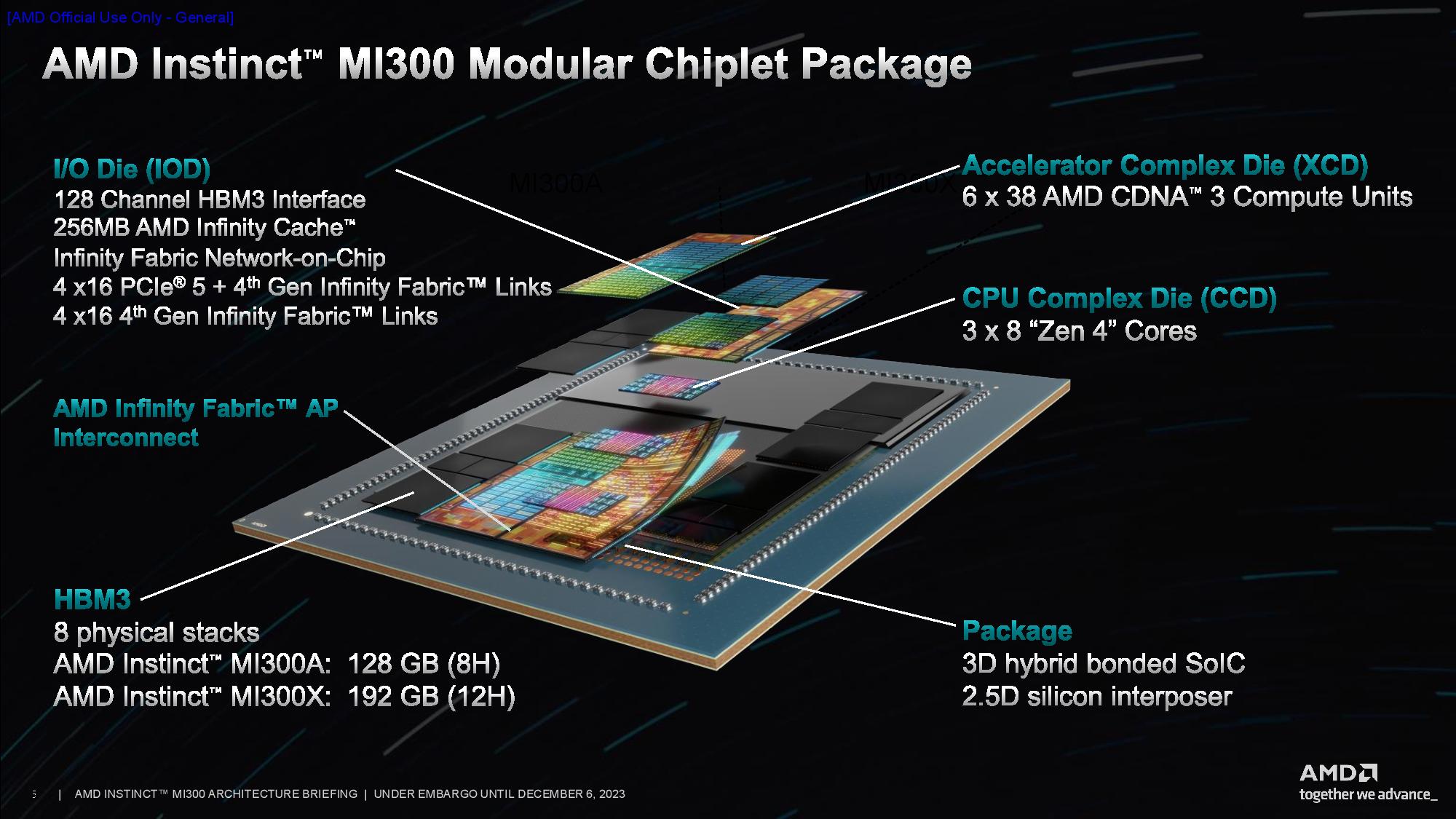

The Instinct MI300 is a game-changing design - the data center APU blends a total of 13 chiplets, many of them 3D-stacked, to create a chip with twenty-four Zen 4 CPU cores fused with a CDNA 3 graphics engine and eight stacks of HBM3. Overall, the chip weighs in with 153 billion transistors, making it the largest chip AMD has ever made. AMD claims this chip delivers up to 4X more performance than Nvidia’s H100 GPUs in some workloads and touts that it has twice the performance per watt.

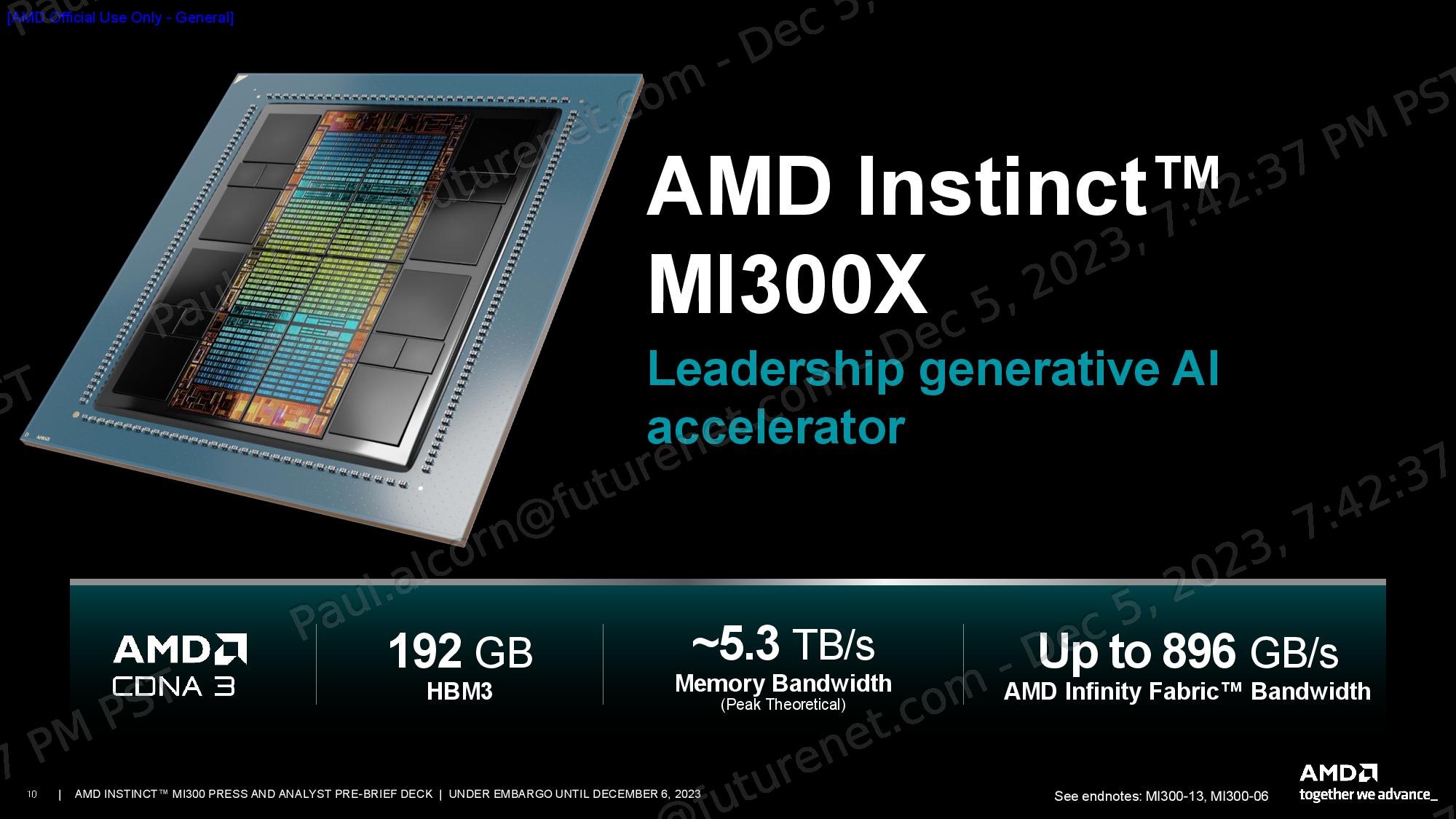

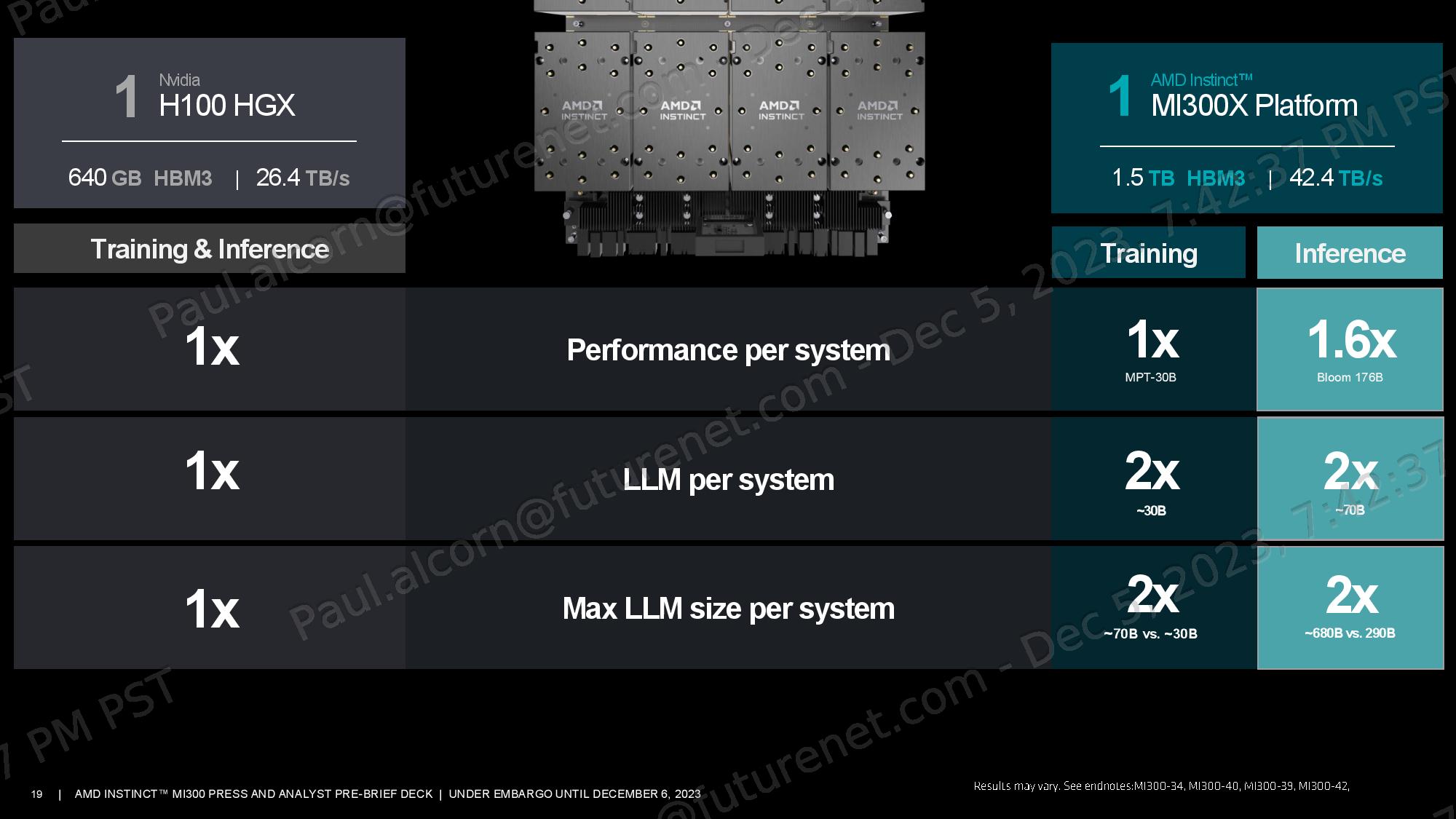

AMD says its Instinct MI300X GPU delivers up to 1.6X more performance than the Nidia H100 in AI inference workloads and offers similar performance in training work, thus providing the industry with a sorely needed high-performance alternative to Nvidia’s GPUs. Additionally, these accelerators come with more than twice the HBM3 memory capacity than Nvidia’s GPUs — an incredible 192 GB apiece — enabling its MI300X platforms to support more than twice the number of LLMs per system as well as running larger models than Nvidia’s H100 HGX.

AMD Instinct MI300X

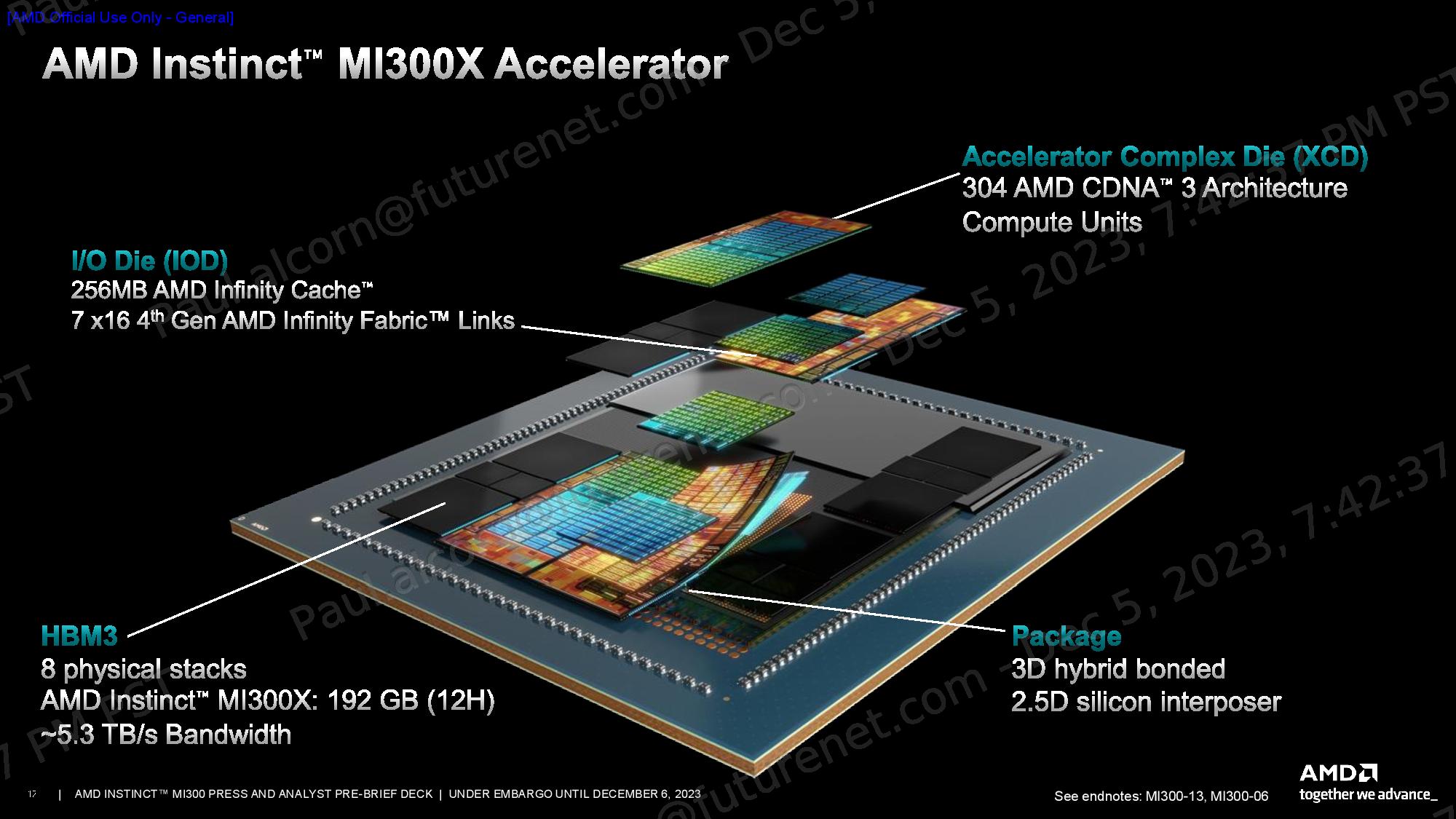

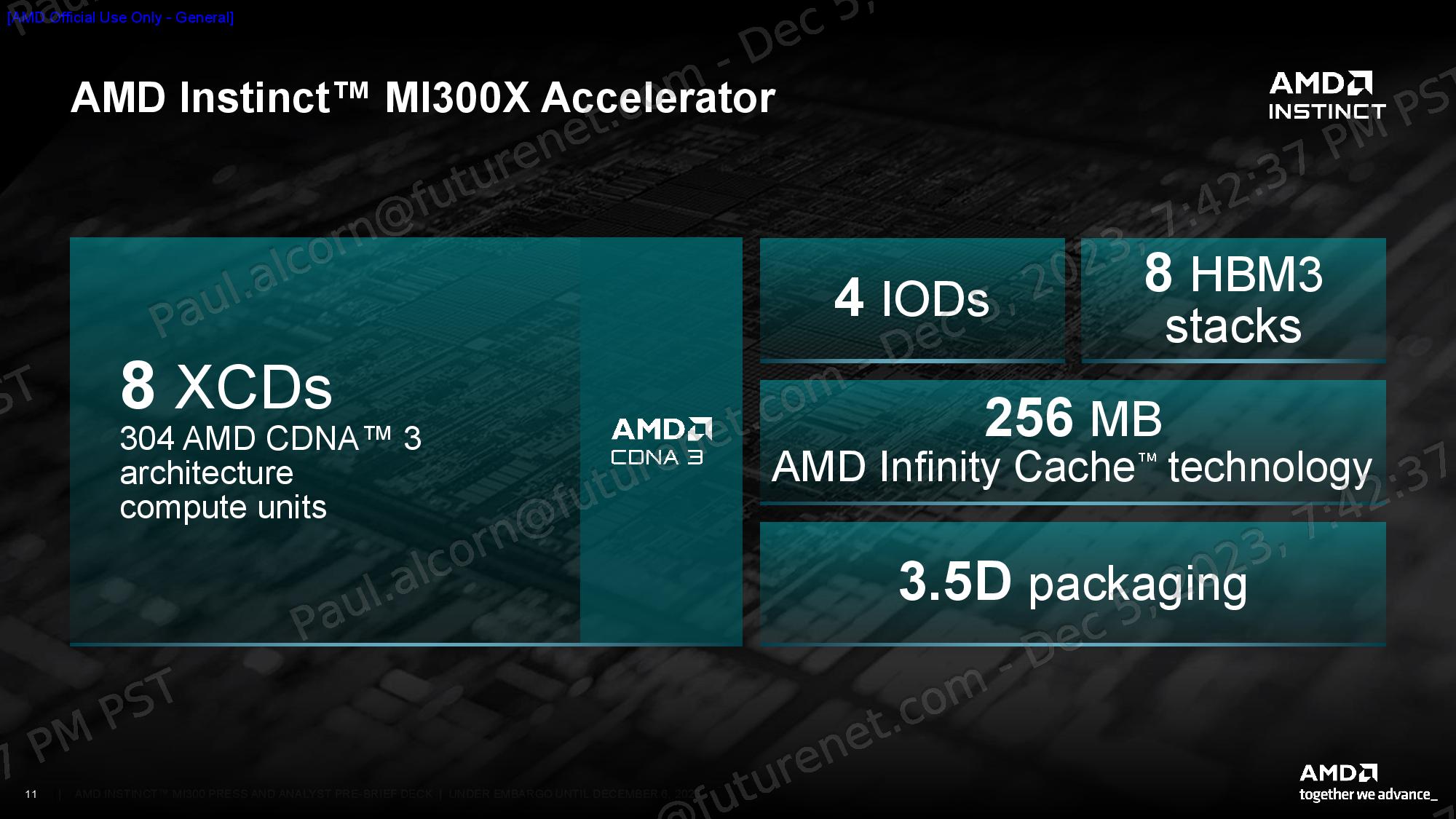

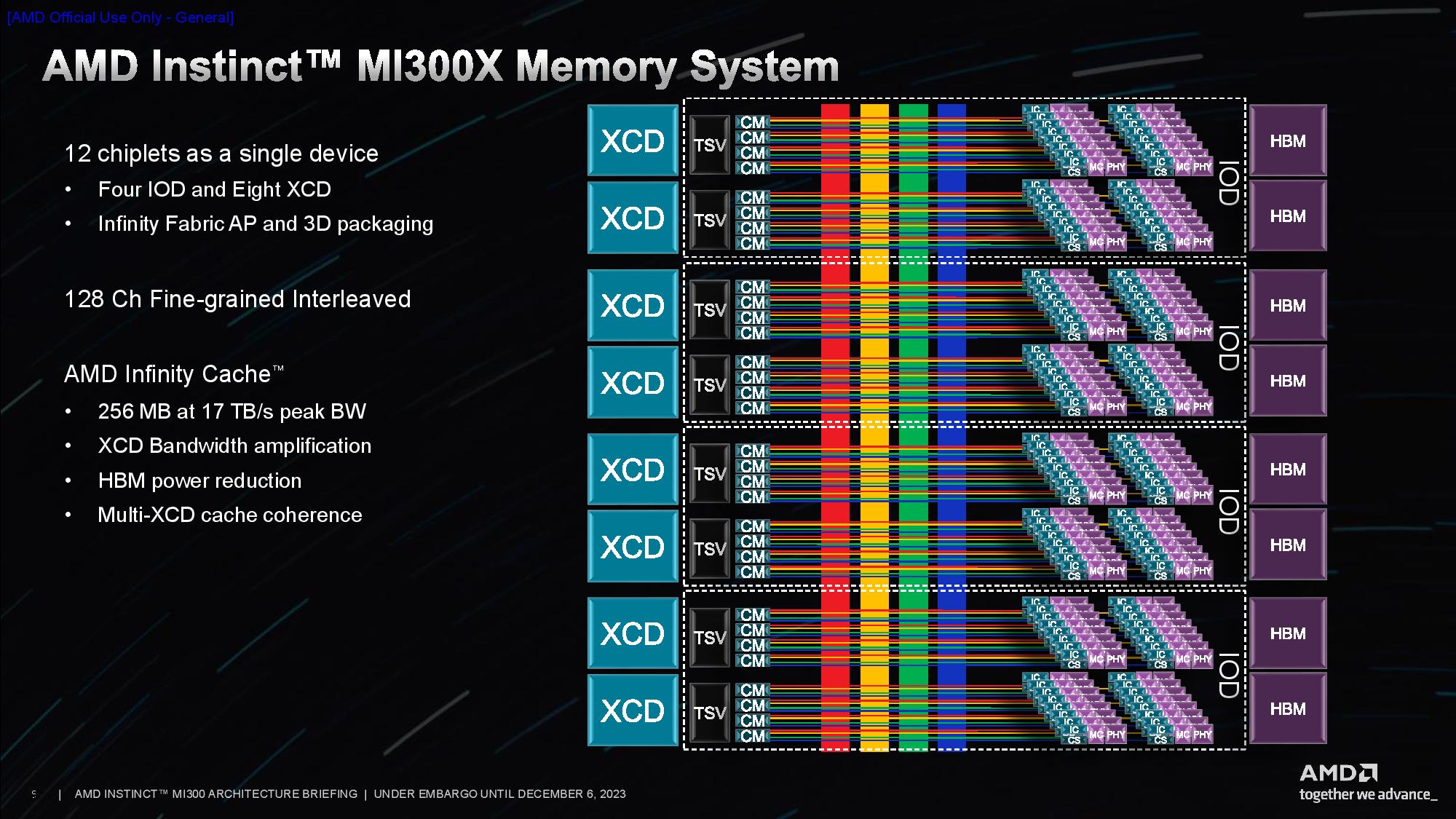

The MI300X represents the pinnacle of AMD’s chiplet-based design methodology, melding eight 12Hi stacks of HBM3 memory with eight 3D-stacked 5nm CDNA 3 GPU chiplets, dubbed XCD, on four underlying 6nm I/O dies that are connected using AMD’s now-mature hybrid bonding technique.

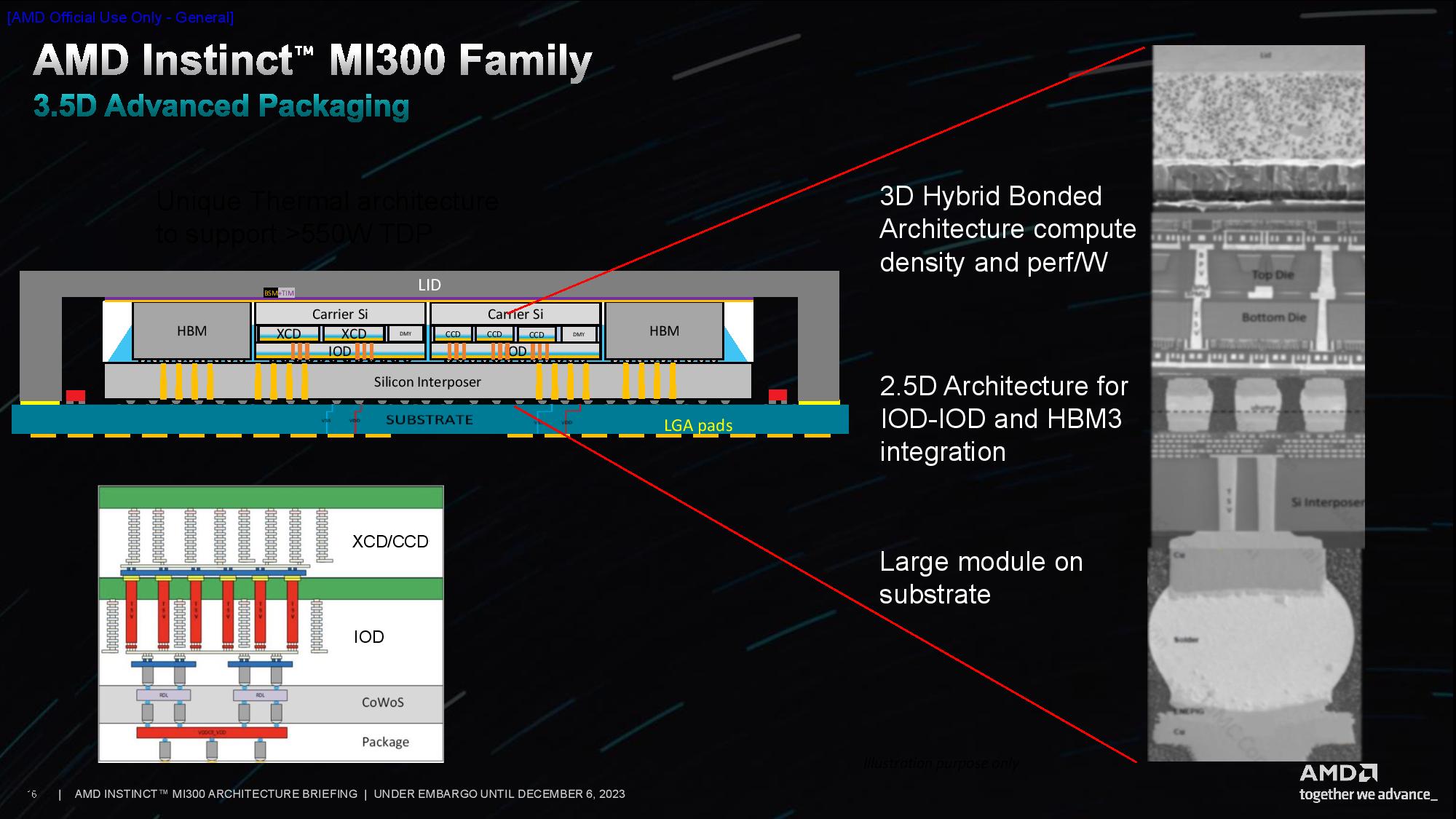

The result is a 750W accelerator that wields 304 compute units, 192GB of HBM3 capacity, and 5.3 TB/s of bandwidth. The accelerator also has 256MB of Infinity Cache that serves as a shared L3 cache layer to facilitate communication between the chiplets. AMD dubs its packaging tech that binds the chiplets together as ‘3.5D,’ denoting a combination of 3D-stacked GPU and I/O dies fused together with hybrid bonding paired with standard 2.5D packaging (horizontal connections) for the remainder of the module. We’ll dive much deeper into the architectural components below.

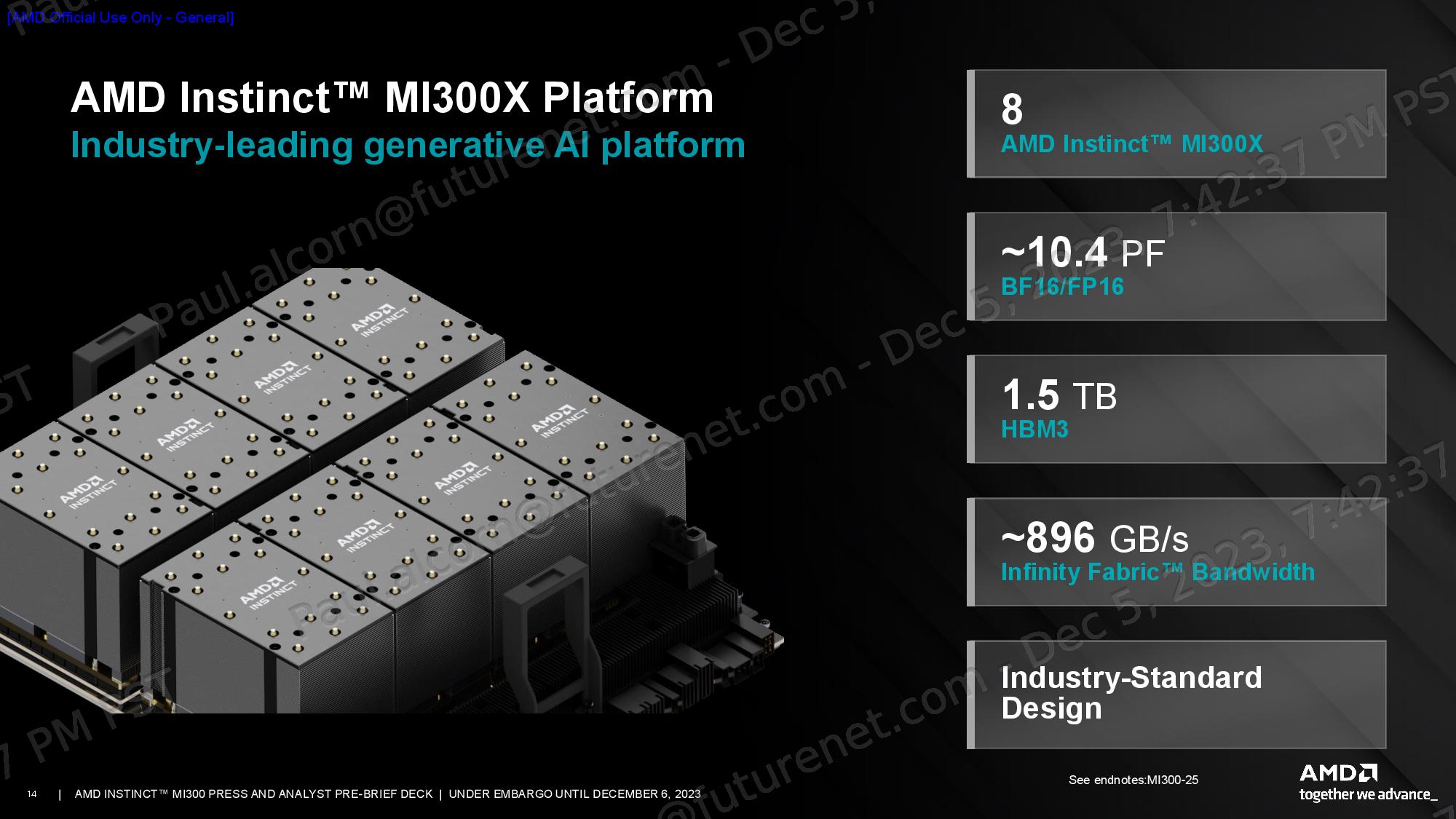

The MI300X accelerator is designed to work in groups of eight in AMD’s generative AI platform, facilitated by 896 GB/s of throughput between the GPUs across an Infinity Fabric interconnect. This system has 1.5TB of total HBM3 memory and delivers up to 10.4 Petaflops of performance (BF16/FP16). This system is built on the Open Compute Project (OCP) Universal Baseboard (UBB) Design standard, thus simplifying adoption - particularly for hyperscalers.

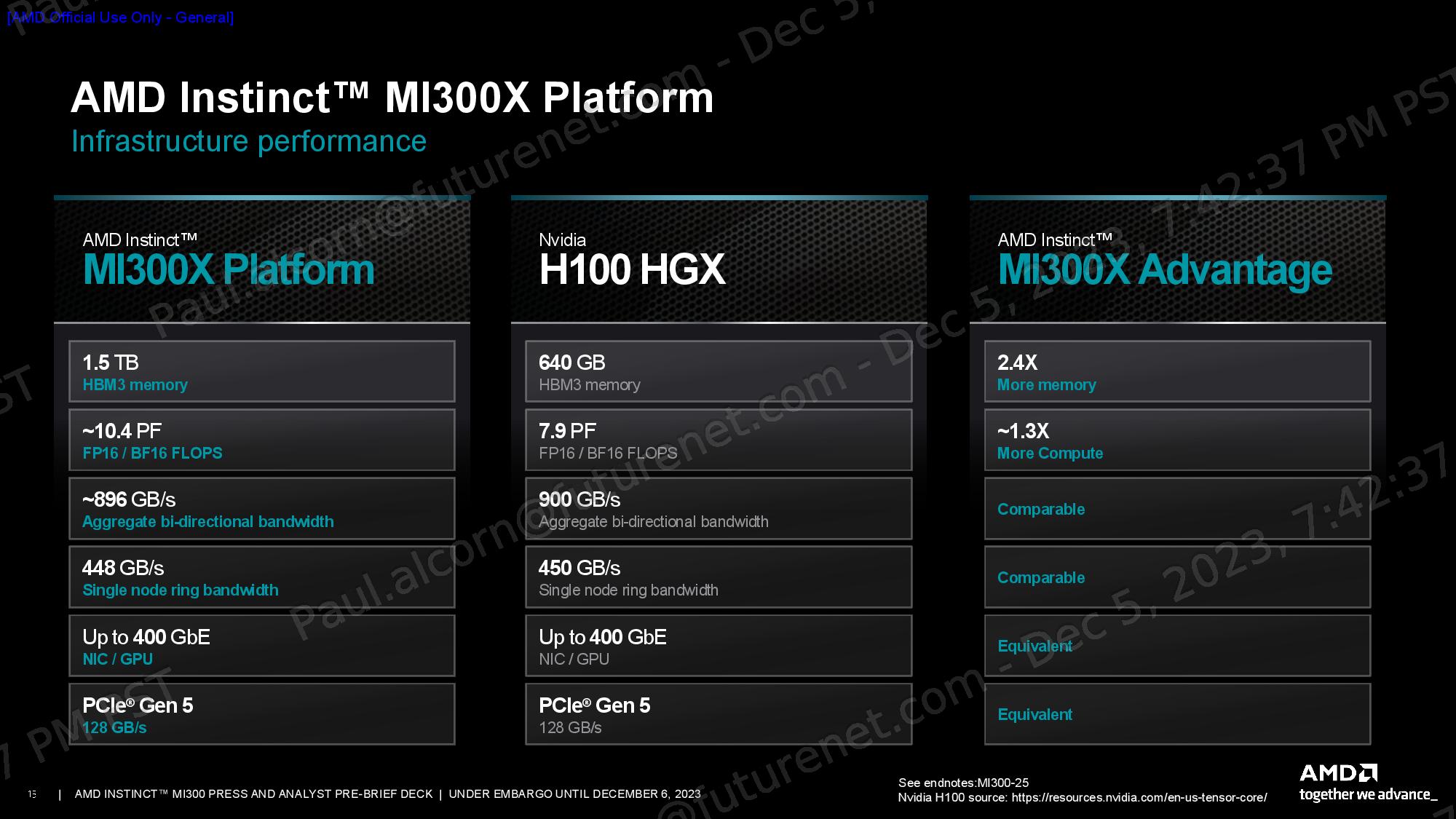

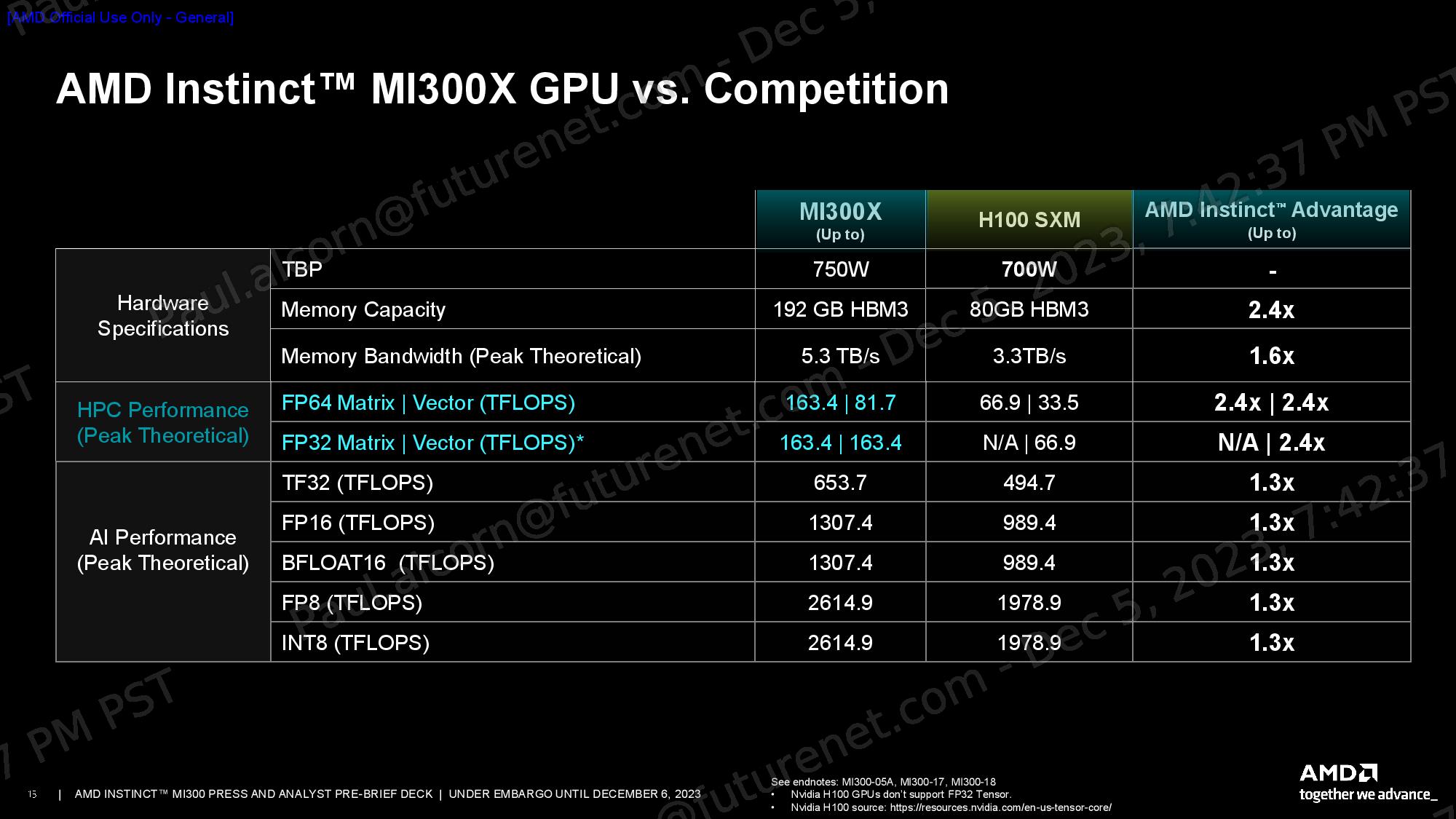

AMD’s MI300X platform has 2.4X more memory capacity and a claimed 1.3X advantage in computational horsepower over Nvidia’s H100 HGX platform (BF16/FP16), all while maintaining comparable aggregate bi-directional and single-node ring bandwidth. AMD outfits the MI300X platform with 400GbE networking and supports a wide variety of NICs, whereas Nvidia favors using its own networking products borne of its Mellanox acquisition.

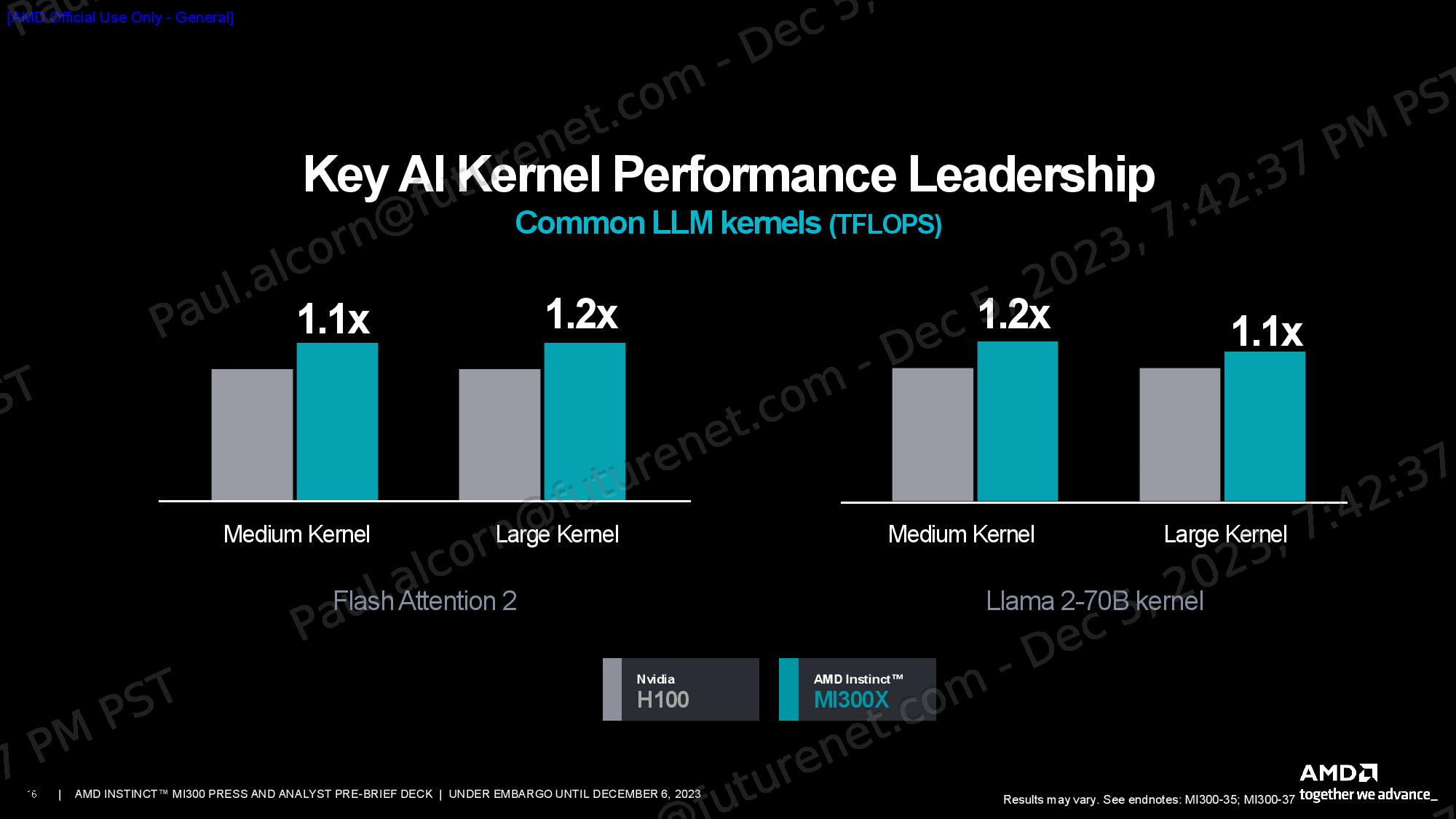

As always, we should approach vendor benchmarks with caution. AMD shared a host of performance metrics, showing that it has up to 2.4X the peak theoretical FP64 and FP32 vector matrix throughput of the H100 for HPC workloads and up to 1.3X higher peak theoretical TF32, FP16, BF16, FP8, and INT8 throughput for AI workloads, all projected without sparsity (the MI300X does support sparsity, though).

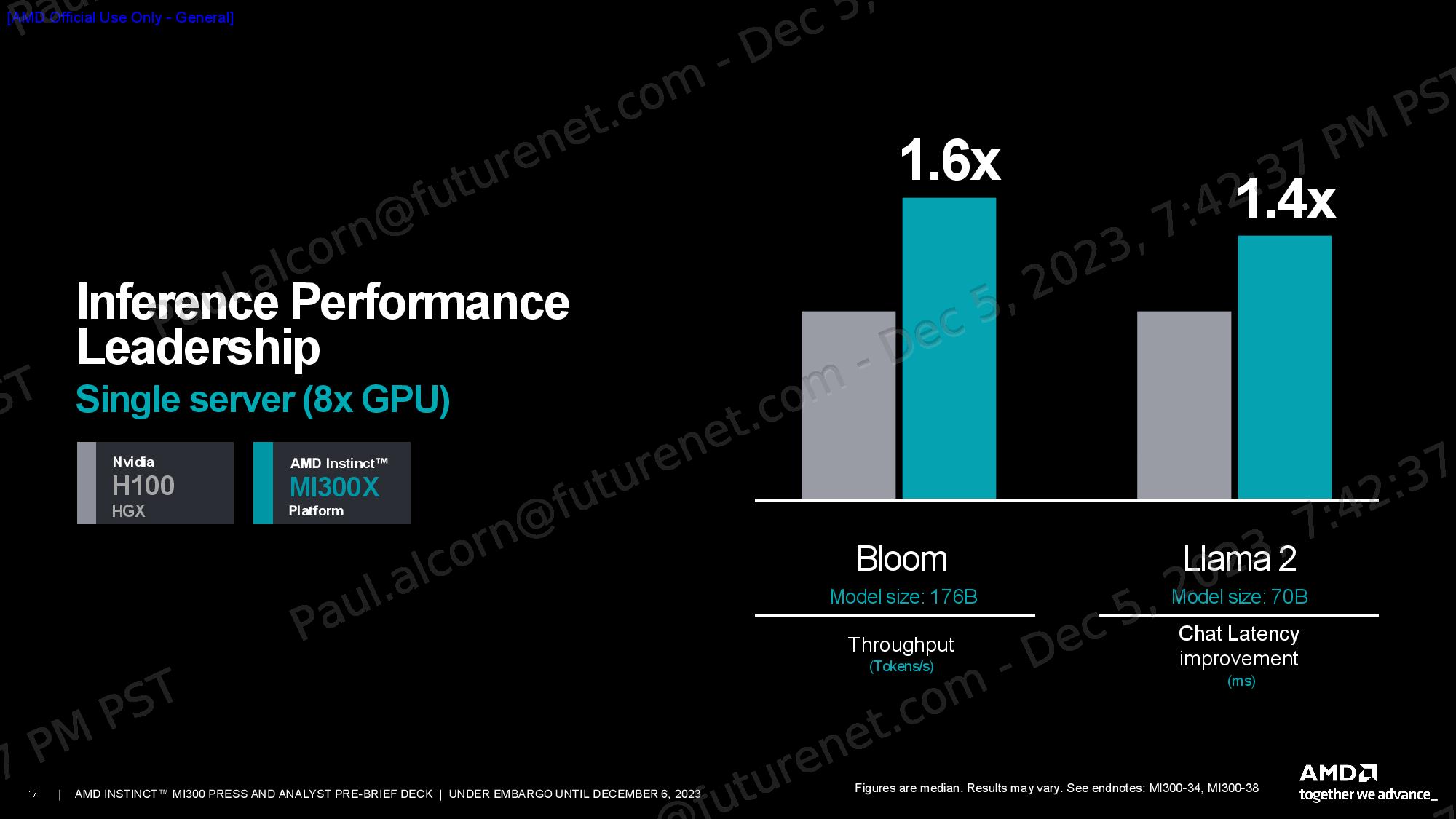

The MI300X’s hefty memory capacity and bandwidth are ideal for inferencing. AMD used a 176-billion-parameter Flash Attention 2 model to claim a 1.6X performance advantage over the Nvidia H100 in tokens/s throughput, and a 70-billion-parameter Llama 2 model to highlight a 1.4X chat latency advantage (measured from the start to the end of the 2K sequence length/128 tokens workload).

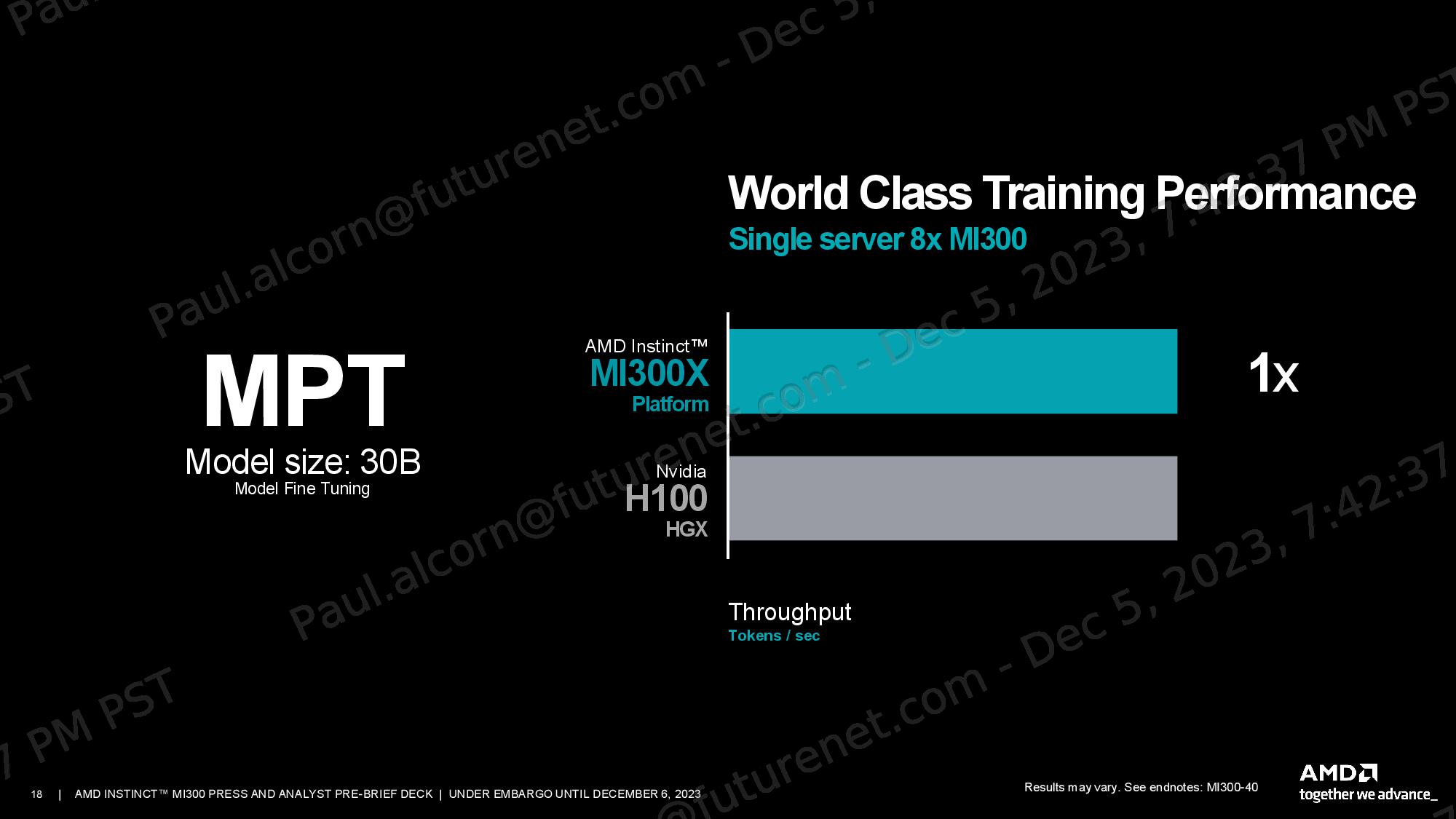

AMD’s MI300X platform delivered roughly the same amount of performance in a 30-billion-parameter MPT training workload as the H100 HGX system, but it is important to note that this test isn’t a one-to-one comparison of accelerators. Instead, this test pits groups of eight accelerators against one another, so platform-level capabilities become more of a limiting factor. In either case, this is the type of performance that will gain quick interest in an industry plagued by Nvidia's GPU shortages.

Speaking of platform capabilities, AMD also claims the MI300X platform’s memory capacity advantage allows hosting up to twice the number of 30B-parameter training models and 70B-parameter inference models than the H100 system. Additionally, the MI300X platform can support up to 70B training and 290B-parameter inference models, both of which are twice as large as the models supported by the H100 HGX.

Naturally, Nvidia’s forthcoming H200 GPUs will be more competitive on the memory capacity and bandwidth front, while compute performance will remain similar to the existing H100. Nvidia won’t start shipping the H200 until next year, so a competitive comparison with the MI300X is still on the horizon.

AMD Instinct MI300A

The AMD Instinct MI300A is the world’s first data center APU, meaning that it combines both a CPU and GPU in the same package. It will compete directly with Nvidia’s Grace Hopper Superchips, which have a CPU and GPU in separate chip packages that work in tandem. The MI300A has already scored a notable win in the El Capitan supercomputer, and AMD is already shipping this silicon to its partners.

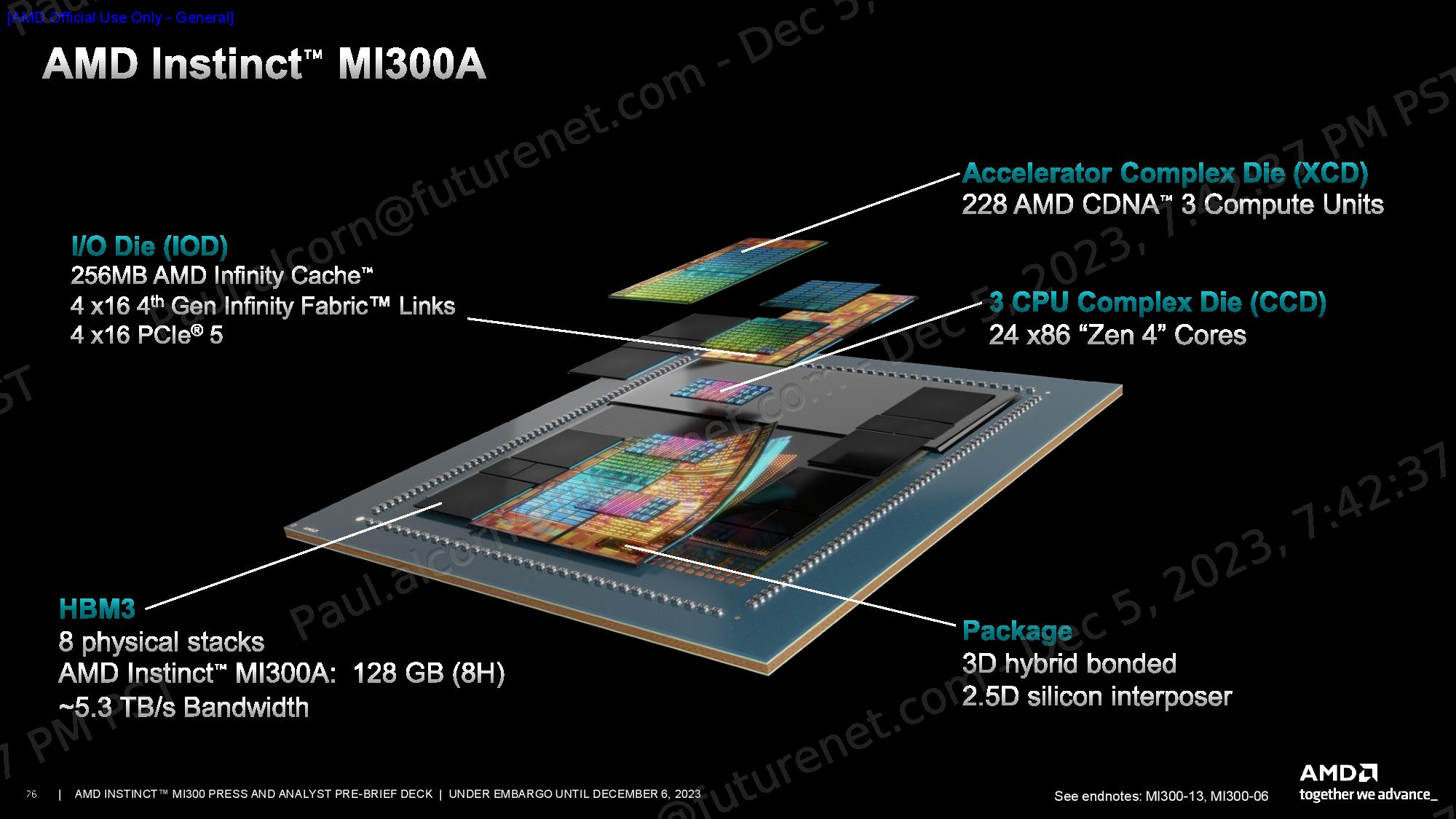

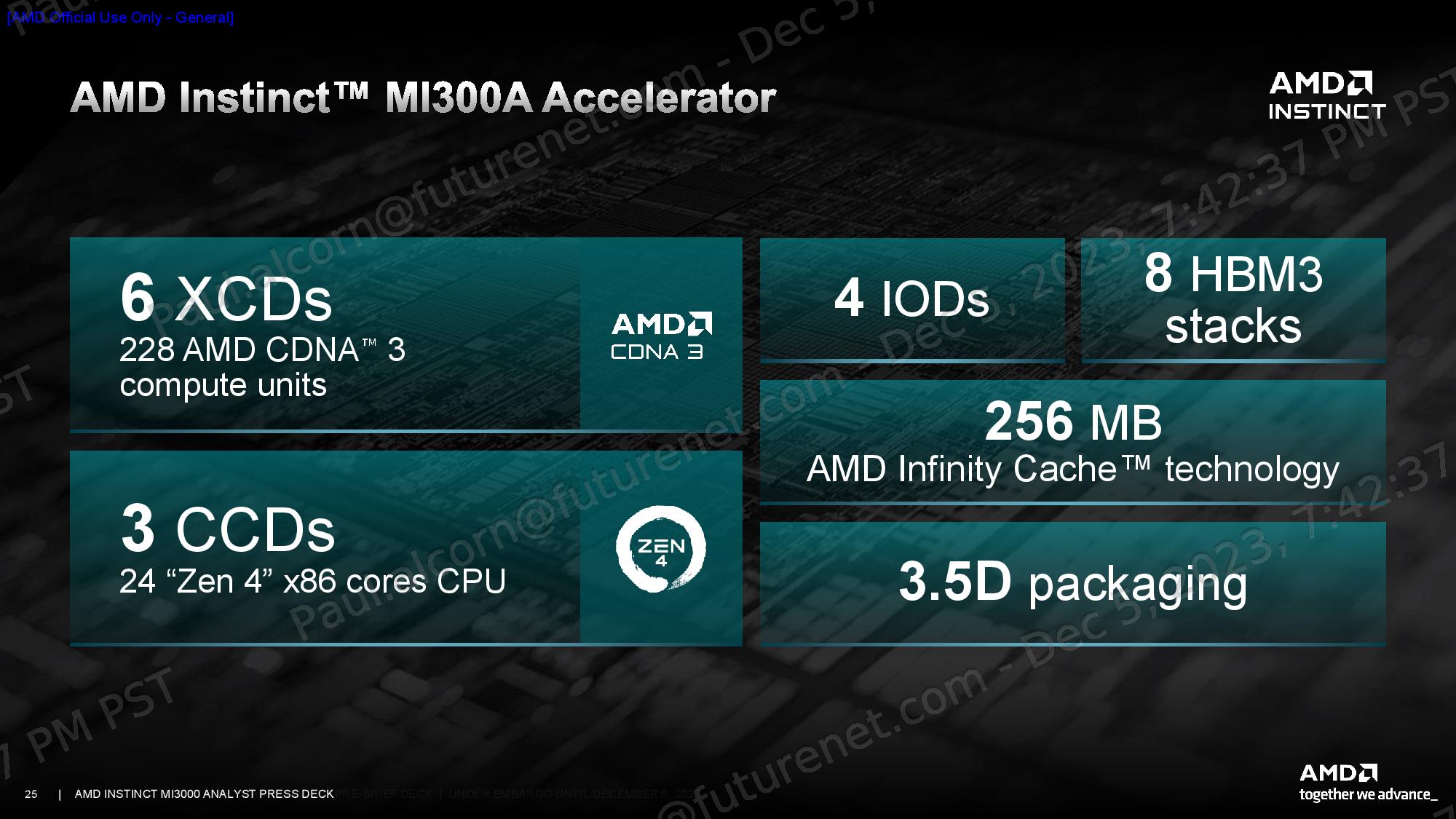

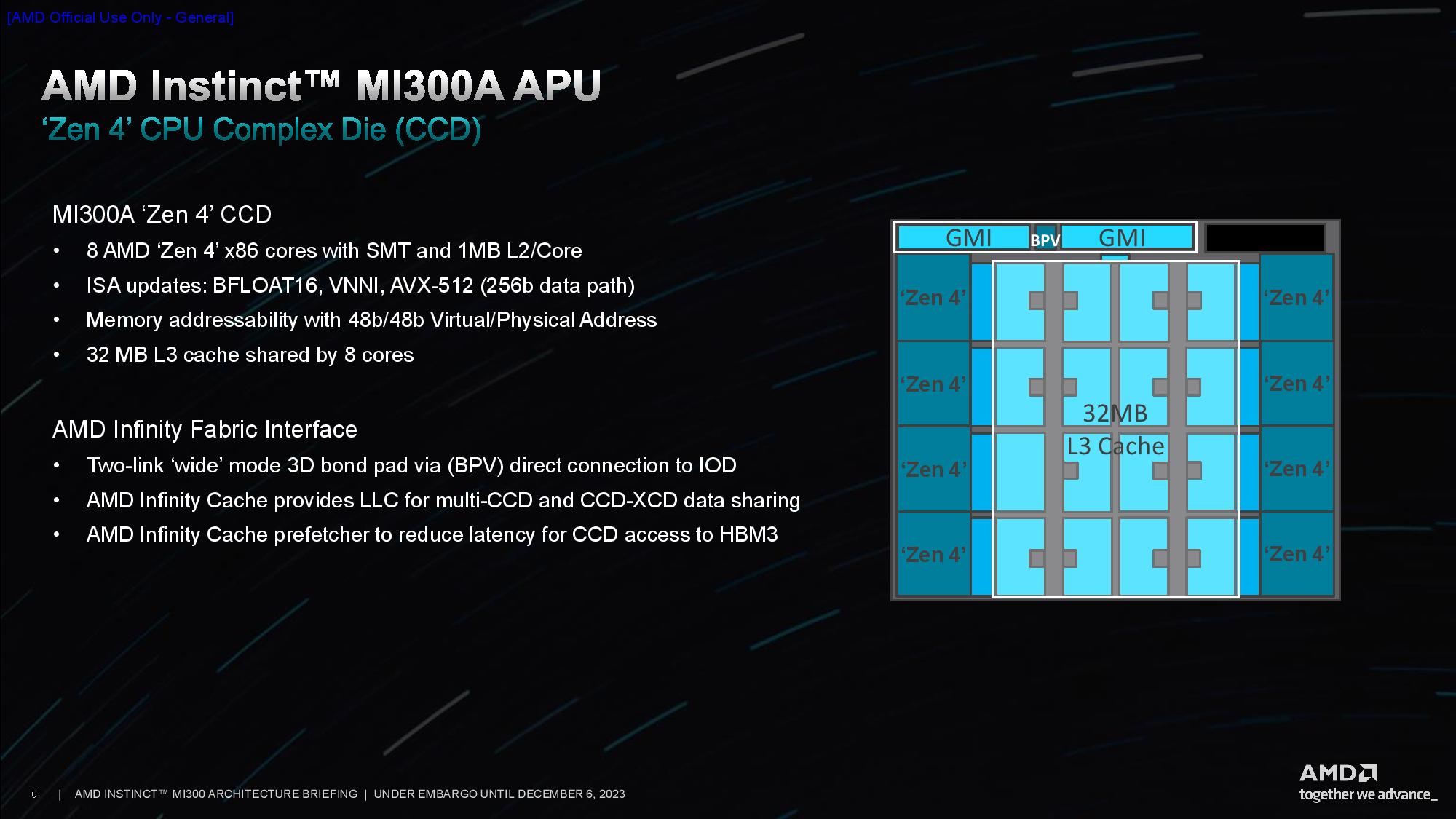

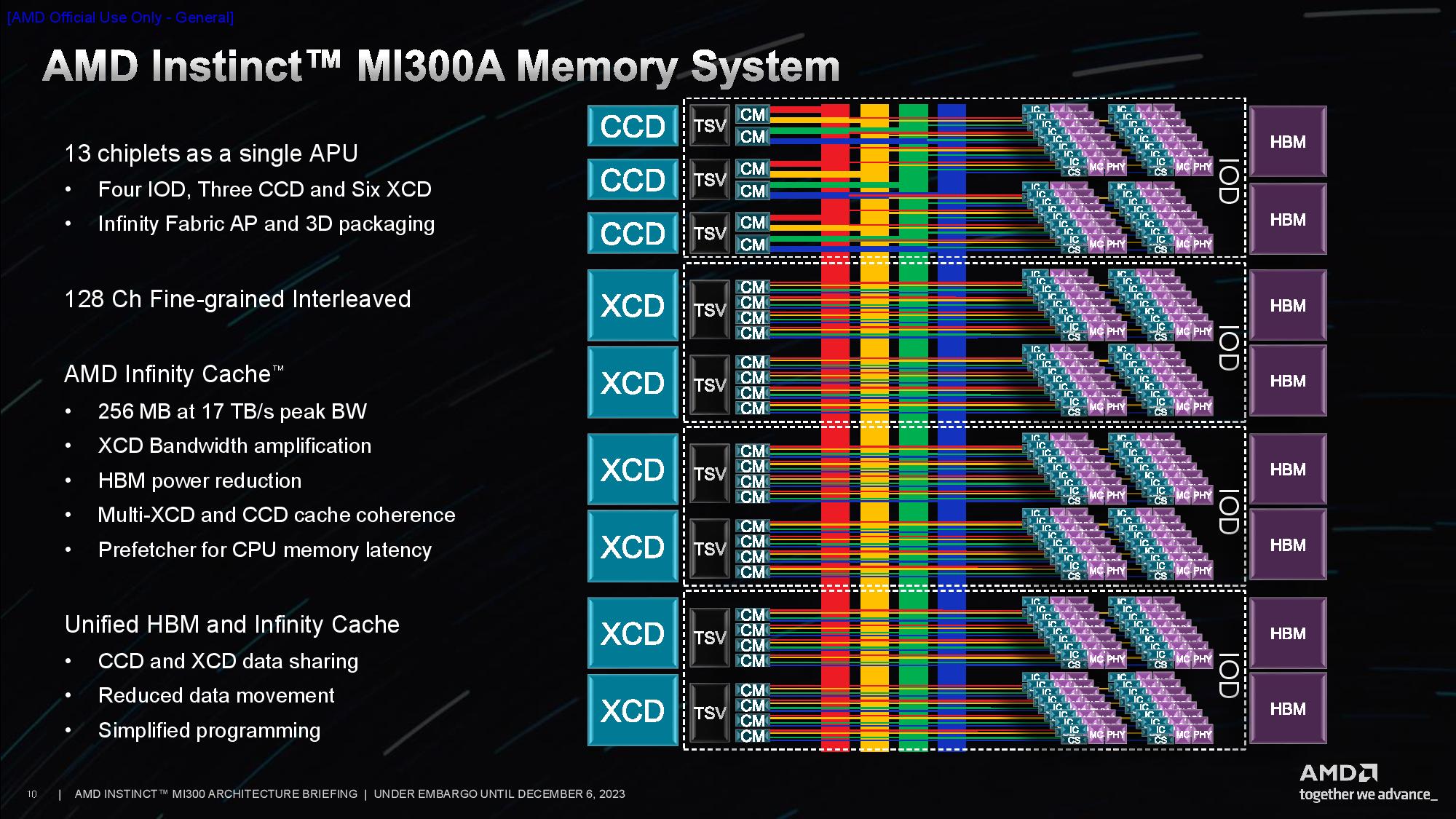

The MI300A uses the same fundamental design and methodology as the MI300X but substitutes in three 5nm core compute die (CCD) with eight Zen 4 CPU cores apiece, the same as found on the EPYC and Ryzen processors, thus displacing two of the XCD GPU chiplets.

That leaves the MI300A armed with 24 threaded CPU cores and 228 CDNA 3 compute units spread across six XCD GPU chiplets. As with the MI300X, all the compute chiplets are 3D-stacked using hybrid bonding to four underlying I/O Dies (IOD) to enable far superior bandwidth, latency, and energy efficiency than standard chip-packaging techniques can achieve.

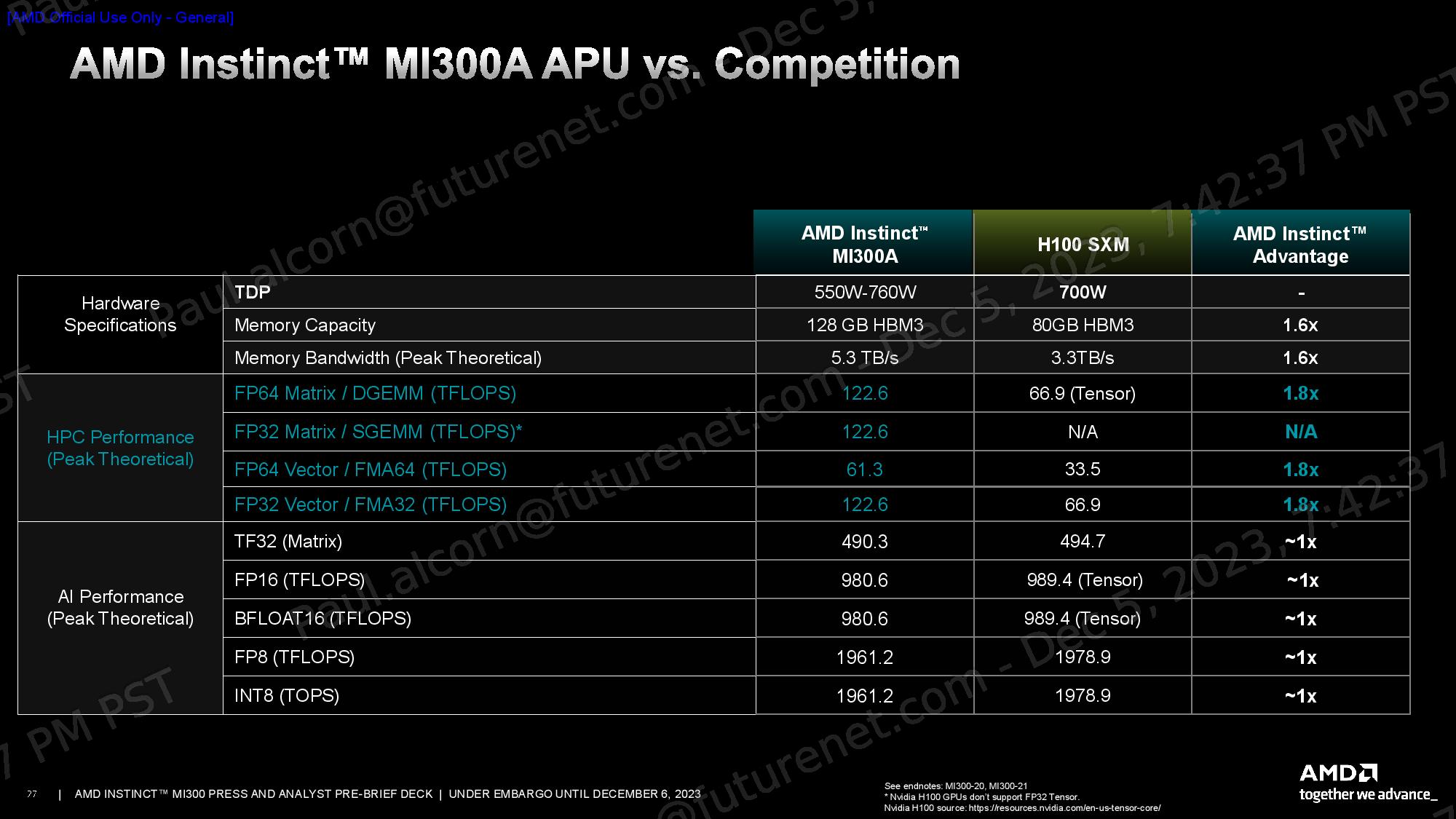

AMD culled the memory capacity by using eight 8Hi HBM3 stacks instead of the eight 12Hi stacks used for the MI300X, thus cutting capacity from 192GB to 128GB. However, memory bandwidth remains at 5.3 TB/s. AMD tells us the decision to reduce the memory capacity wasn’t due to power or thermal limitations; instead, it tailors the chip for the targeted HPC and AI workloads. Regardless, the 128GB of capacity and 5.3 TB/s of throughput are both still 1.6X more than provided by Nvidia’s H100 SXM GPU.

The MI300A has a default 350W TDP, but it is configurable up to 760W. AMD dynamically allocates power between the CPU and GPU portions of the chip based on usage, thus optimizing performance and efficiency. AMD employs clever reuse wherever it can: The MI300A drops into AMD's standard LGA6096 socket, just like the EPYC Genoa processors, but the SH5 version of this socket isn't electrically compatible with AMD's EPYC processors, which use SP5.

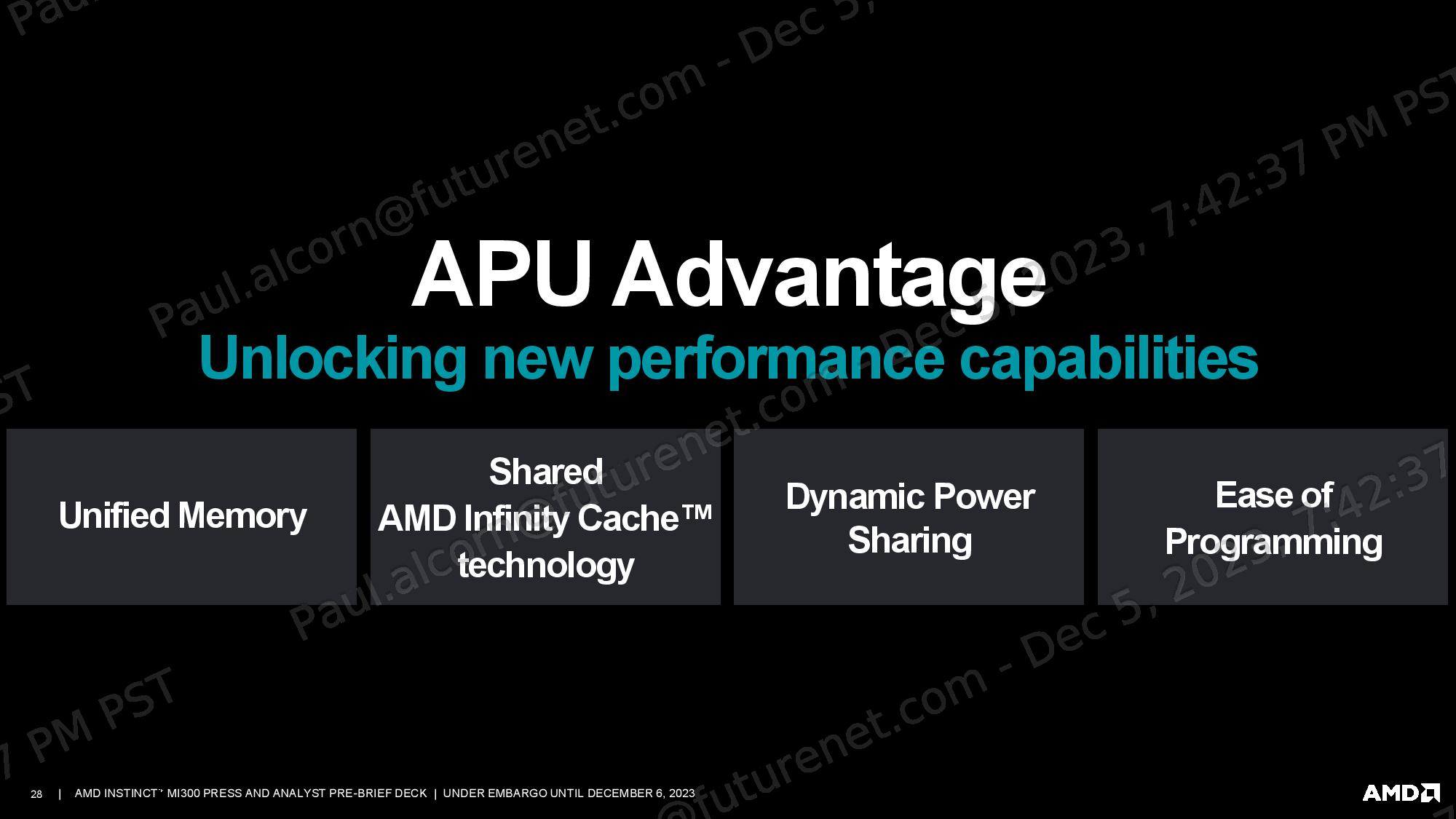

The memory space is shared between the CPU and GPU, thus eliminating data transfers. This technique boosts performance and energy efficiency by eliminating data transfers between the units, while also reducing the coding burden. As with the MI300X, the chip has a central 256MB Infinity Cache that helps ensure bandwidth and latency for data flowing through the chip.

AMD claims a 1.8X advantage over the H100 in FP64 Matrix/DGEMM and FP64/FP32 Vector TFLOPS. The company also claims parity with the H100 in TF32, FP16, BF16, FP8, and INT8.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

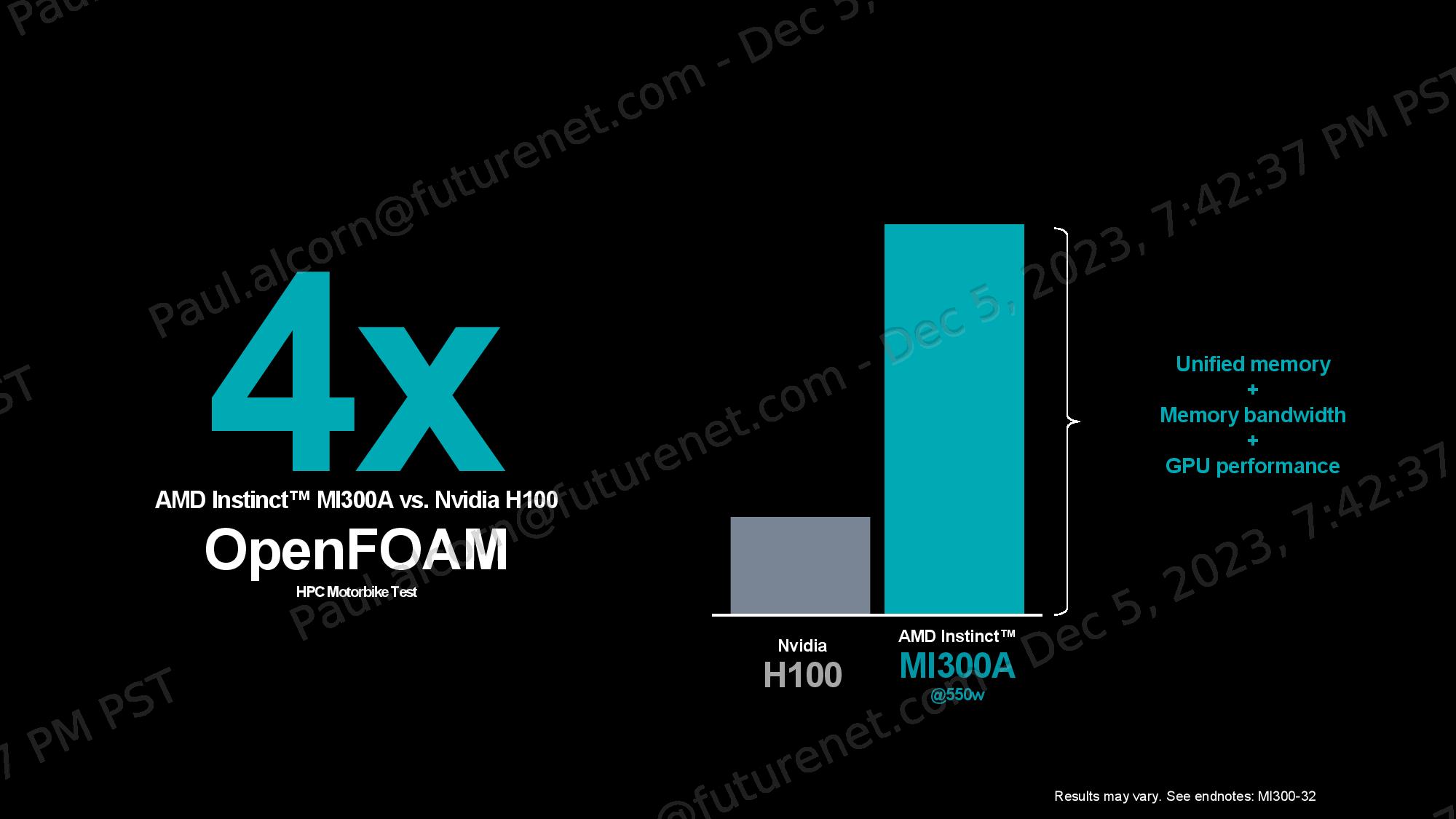

AMD claims its MI300A is 4X faster than Nvidia’s H100 in an OpenFOAM HPC motorbike test, but this comparison isn’t ideal: The H100 is a GPU, while the blended CPU and GPU compute in the MI300A provides an inherent advantage in this memory-intensive, and at times serial, workload through its shared memory addressing space. Comparisons to the Nvidia Grace Hopper GH200 Superchip, which also brings a CPU and GPU together in a tightly coupled implementation, would be better here, but AMD says that it couldn’t find any publicly listed OpenFOAM results for Nvidia’s GH200.

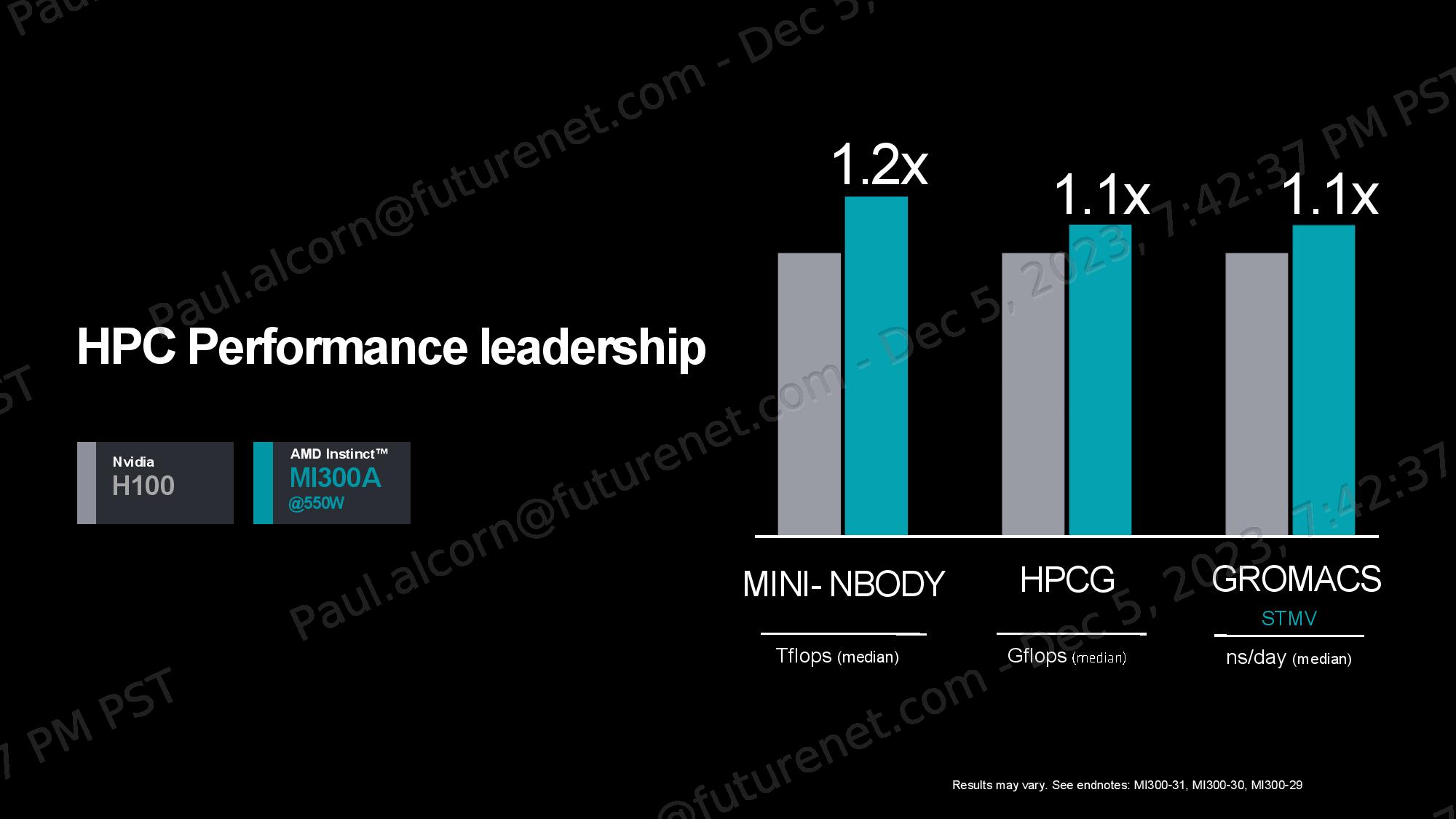

AMD did present comparative perf-per-watt data to Nvidia’s GH200 to highlight a 2X advantage, with these results based on publicly available information about the H200. AMD also highlighted comparisons to the H100 in the Mini-Nbody, HPCG, and Gromacs benchmarks, claiming leads of 1.2X, 1.1X, and 1.1X, respectively. Again, comparisons to the GH200 would be more ideal for this set of benchmarks.

AMD Instinct MI300X and MI300A Architecture

We covered the basics of the design above, and those details provide important perspective to understand the sections below.

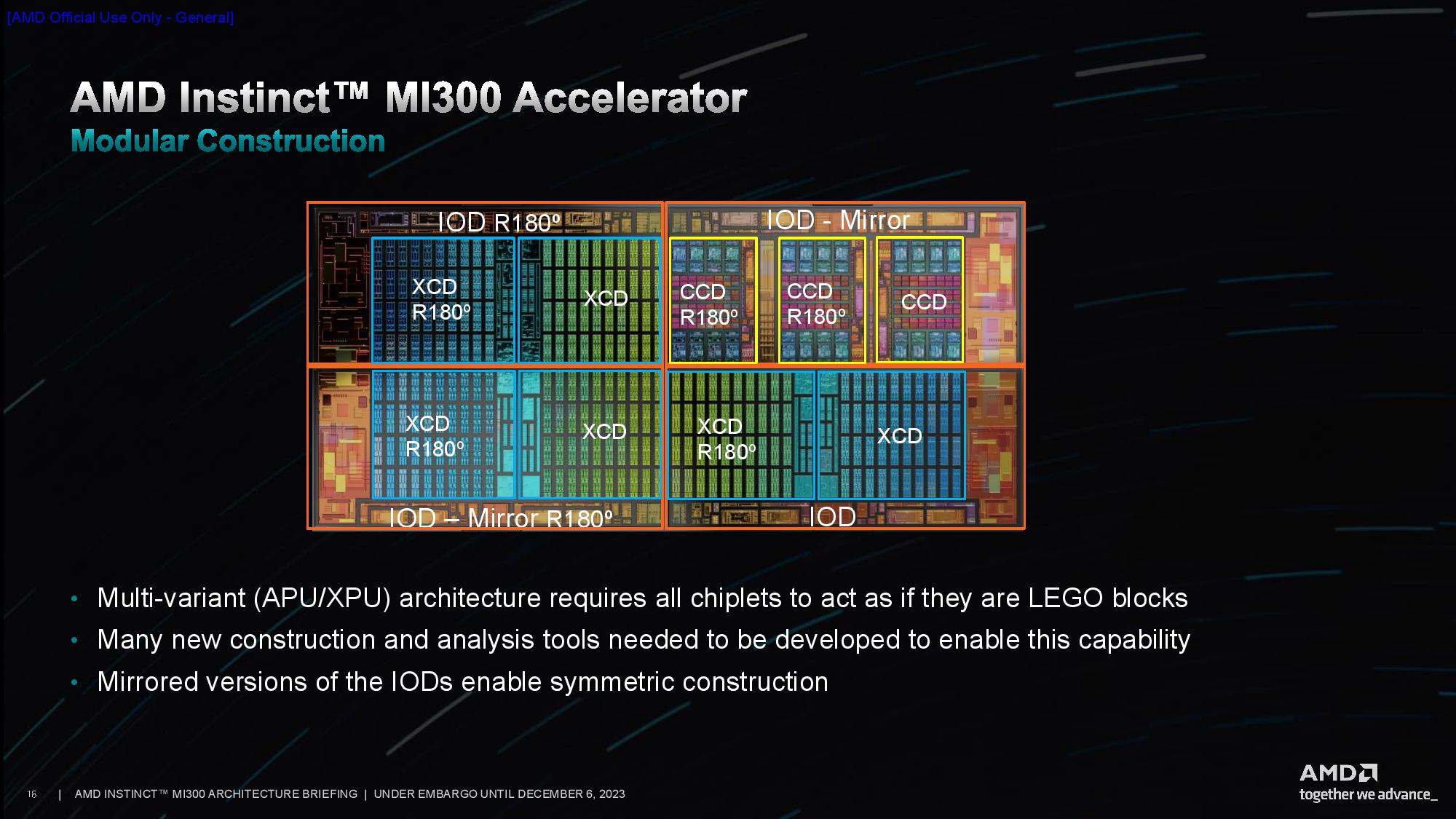

AMD leverages TSMC’s 3D hybrid bonding SoIC (silicon-on-integrated-circuit) tech to 3D-stack the various compute elements, be they the CPU CCDs (core compute die) or the GPU XCDs, atop the four underlying I/O Dies. Each I/O Die can house either two XCDs or three CCDs. Each CCD is identical to the CCDs used in existing EPYC chips, holding eight hyperthreaded Zen 4 cores apiece. Three of these CCDs and six XCDs are used to create the MI300A, while the MI300X uses eight XCDs.

The HBM stacks are connected using a standard interposer with 2.5D packaging technology. AMD’s combination of 2.5D and 3D packaging techniques led the company to coin the ‘3.5D’ packaging moniker. Each I/O die houses a 32-channel HBM3 memory controller to host two of the eight HBM stacks, resulting in 128 total 16-bit memory channels for the device. The MI300X leverages 12Hi HBM3 stacks for 192GB of capacity, while the MI300A uses 8Hi stacks for 128GB of capacity.

AMD has also added 256MB of total Infinity Cache capacity, spread across all four of the I/O Dies, to cache data traffic via a prefetcher, thus increasing hit rates and power efficiency while reducing bus contention and latency. This adds a new level of caching for the CPUs (conceptually a shared L4) while providing a shared L3 cache for the GPUs. An Infinity Fabric NoC (network on chip), dubbed the AMD Infinity Fabric AP (Advanced Package) Interconnect, connects the HBM, I/O subsystems, and compute.

In total, the chip has 128 lanes of PCIe 5.0 connectivity spread across the four I/O Dies. These are allocated into two groups: One is a combination of four x16 PCIe 5.0 + 4th-gen Infinity Fabric links, while the other has four x16 links dedicated solely to the Infinity Fabric. The latter is used solely to connect MI300s to each other (cross-socket traffic).

The MI300X serves purely as an endpoint device — it connects to an external CPU — so its PCIe root complex needs to function as an endpoint device. In contrast, the MI300A is self-hosted due to its native CPU cores, so the PCIe root complex must function as a host. To accommodate both scenarios, AMD’s custom-built MI300 I/O Die supports both modes from the same PCIe 5.0 root complex, a new addition to the company’s IP portfolio.

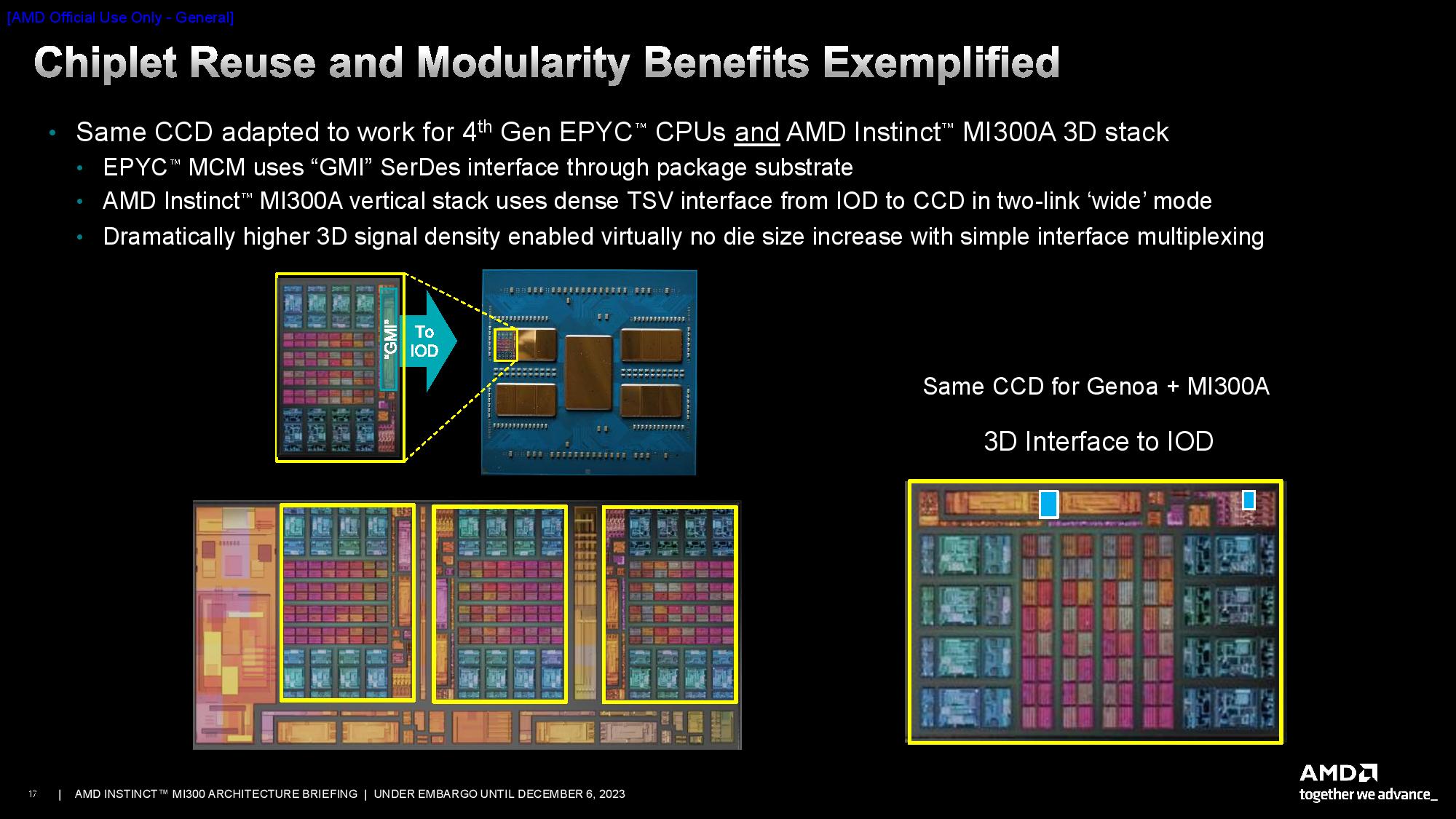

AMD’s CPU CCDs are 3D hybrid-bonded to the underlying I/O Dies, requiring a new interface. While this is the same CCD found in the EPYC server processors, those chips communicate over a GMI3 (Global Memory Interconnect 3) interface that leverages standard 2.5D packaging. For MI300, AMD added a new bond pad via interface that bypasses the GMI3 links, thus providing the TSVs (through silicon vias) needed for stacking the chips vertically. This interface operates in the two-link wide mode (see link above for more details).

The 5nm XCD GPU die marks the full chipletization of AMD’s GPU design. Unlike the chiplets used in MI200, which present as two separate devices, the MI300 chiplets present as one monolithic GPU.

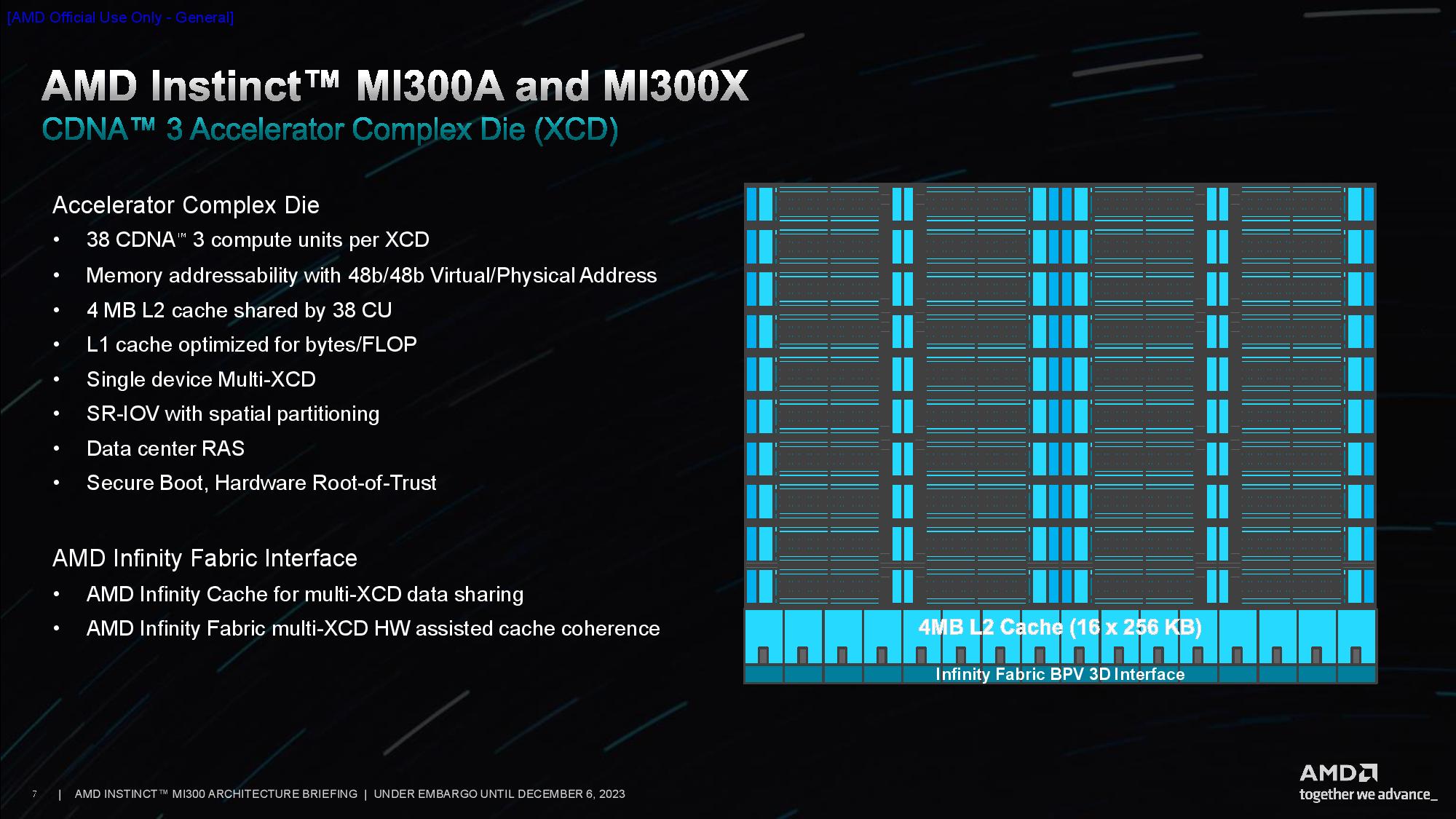

Each XCD has 40 physical CDNA3 compute units, but only 38 are activated (this helps defray yield issues). Each 38-CU chiplet has 4MB of shared L2 (16x 256KB). The XCD and IOD have hardware-assisted mechanisms to break jobs into smaller parts, dispatch them, and keep them synchronized, thus reducing host system overhead. The units also have hardware-assisted cache coherence.

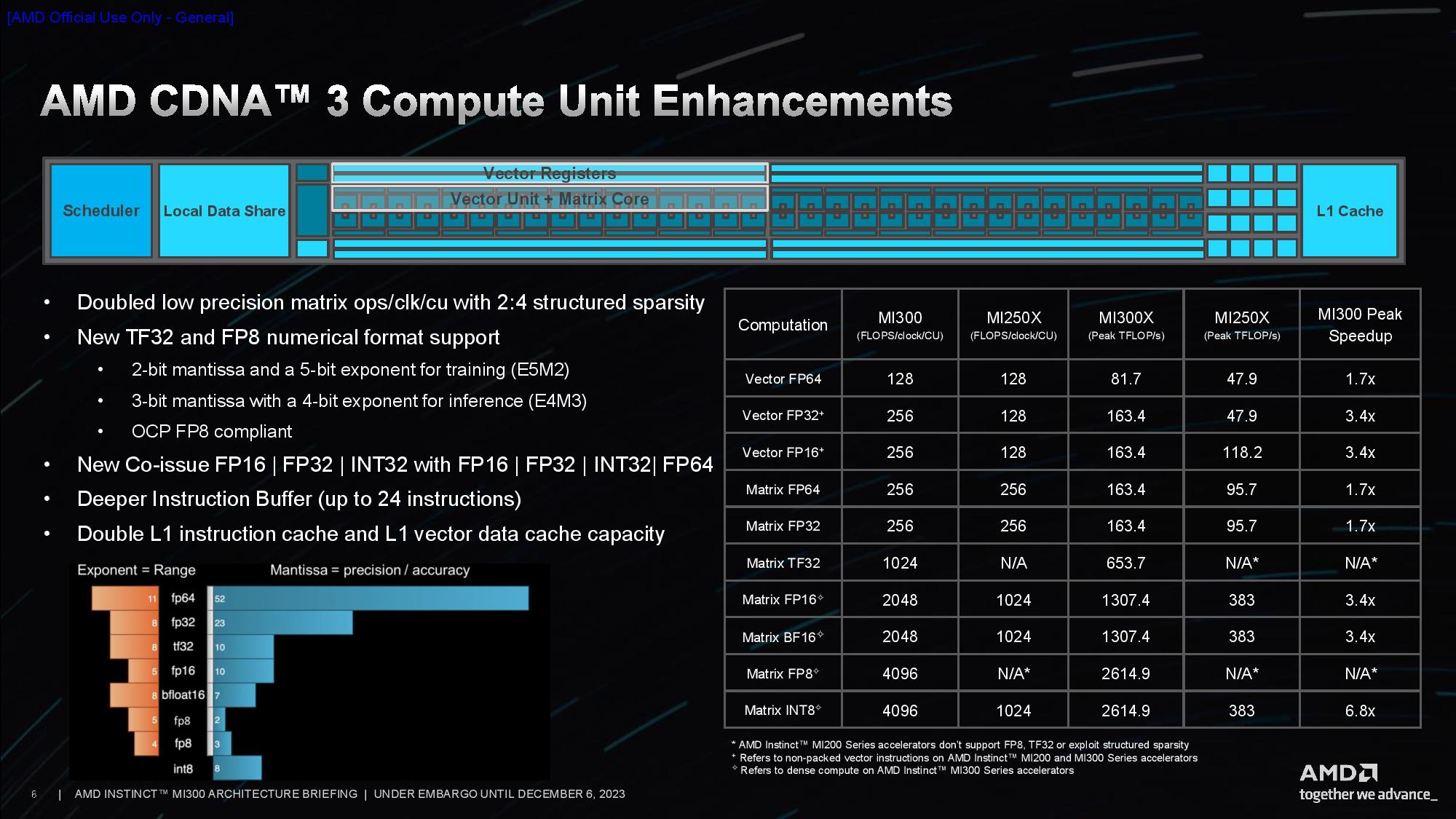

The final slide in the above deck shows the enhancements and performance of the CDNA 3 compute unit.

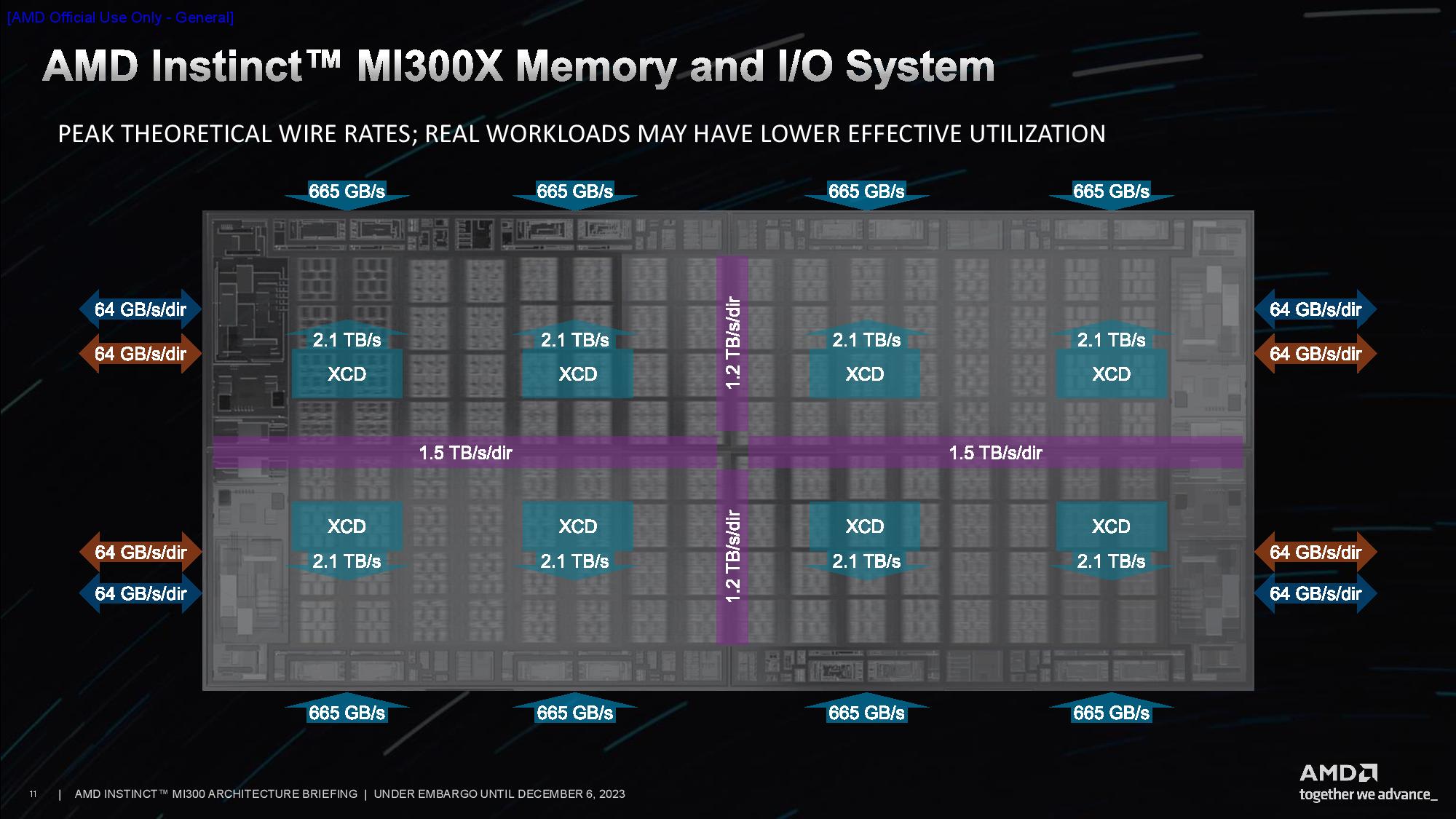

Two XCDs are attached to each I/O Die, which then connects to two of the HBM3 stacks. This allows the GPU to communicate privately with the two connected stacks to alleviate bandwidth, latency, and consistency issues. However, every XCD can communicate with any memory stack (the Infinity Fabric connections between the I/O Dies are visually shown as colored bars in the first two images in the album).

Naturally, addressing the remote stacks will incur latency penalties. Memory transactions incur more latency the further they travel. AMD noted that HBM connected directly to the IOD and XCD is a zero-hop transaction while accessing a different memory stack on the IOD is a two-hop jump. Finally, accessing a memory stack on an adjacent IOD is a three-hop jump. The penalty for a two-hop jump is roughly a 30% increase in latency, while a three-hop jump adds 60% more latency.

The third slide shows the bandwidths available from the NoC, with 1.2 TB/s/dir of bandwidth between the I/O Dies across the vertical section of the package, while a horizontal data path provides slightly more, 1.5 TB/s/dir of bandwidth, to help accommodate additional traffic from the I/O devices, thus allowing I/O traffic to be handled separately from the memory traffic. The PCIe complexes on the right and left of the package provide 64 GB/s/dir of throughput from each I/O die. At the top and bottom of the package, you can see that each HBM stack provides 665 GB/s of throughput.

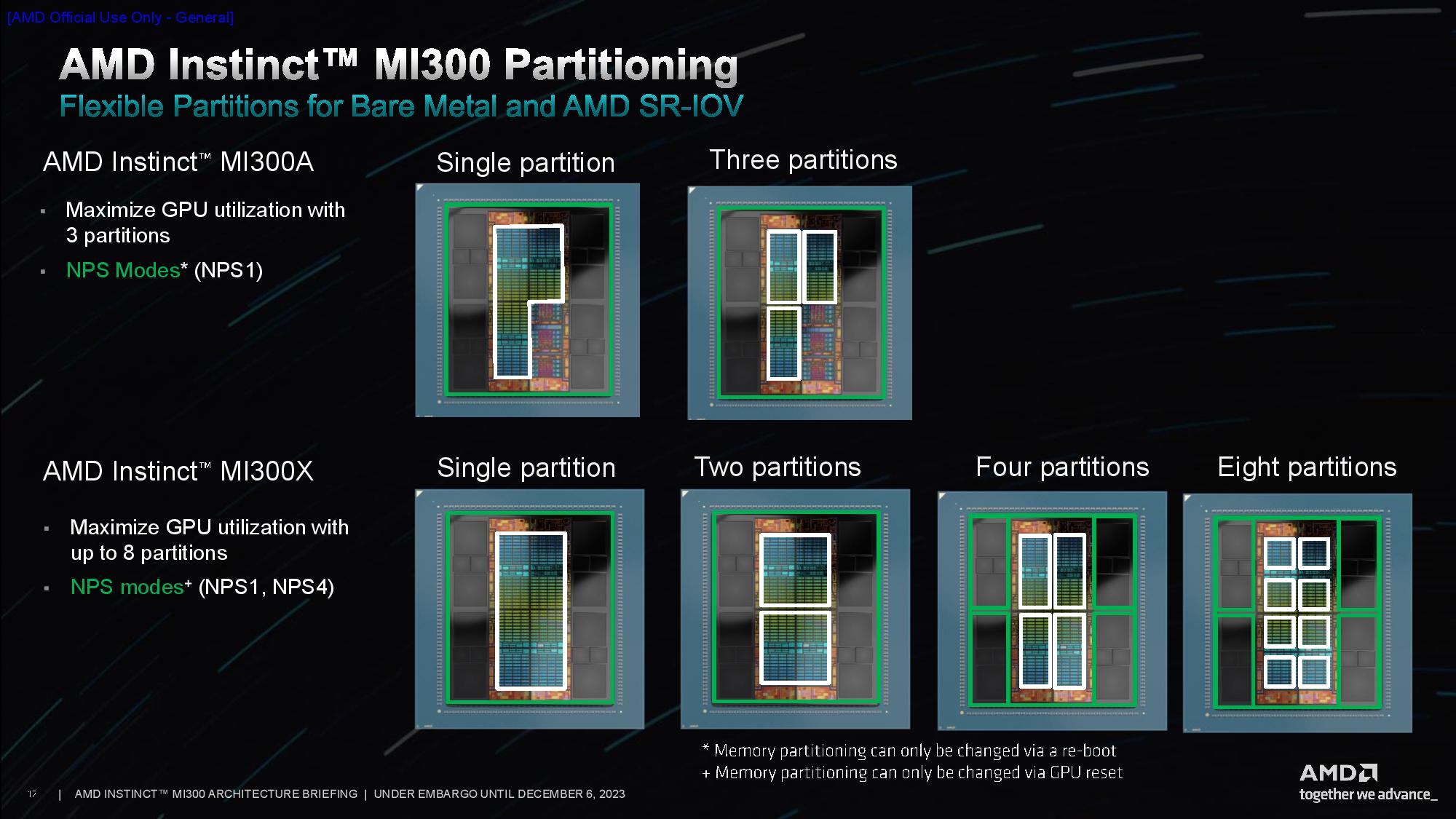

AMD has multiple partitioning schemes that split the compute units into various logical domains, much like the NPS settings for the EPYC processors. This allows breaking up the different XCDs into various groups to optimize bandwidth, thus maximizing performance and limiting the impact of ‘NUMAness.’ The multiple configurations range from addressing the units as one logical device to addressing them as eight different devices, with a multitude of in-between options that provide plenty of flexibility for a wide range of workloads.

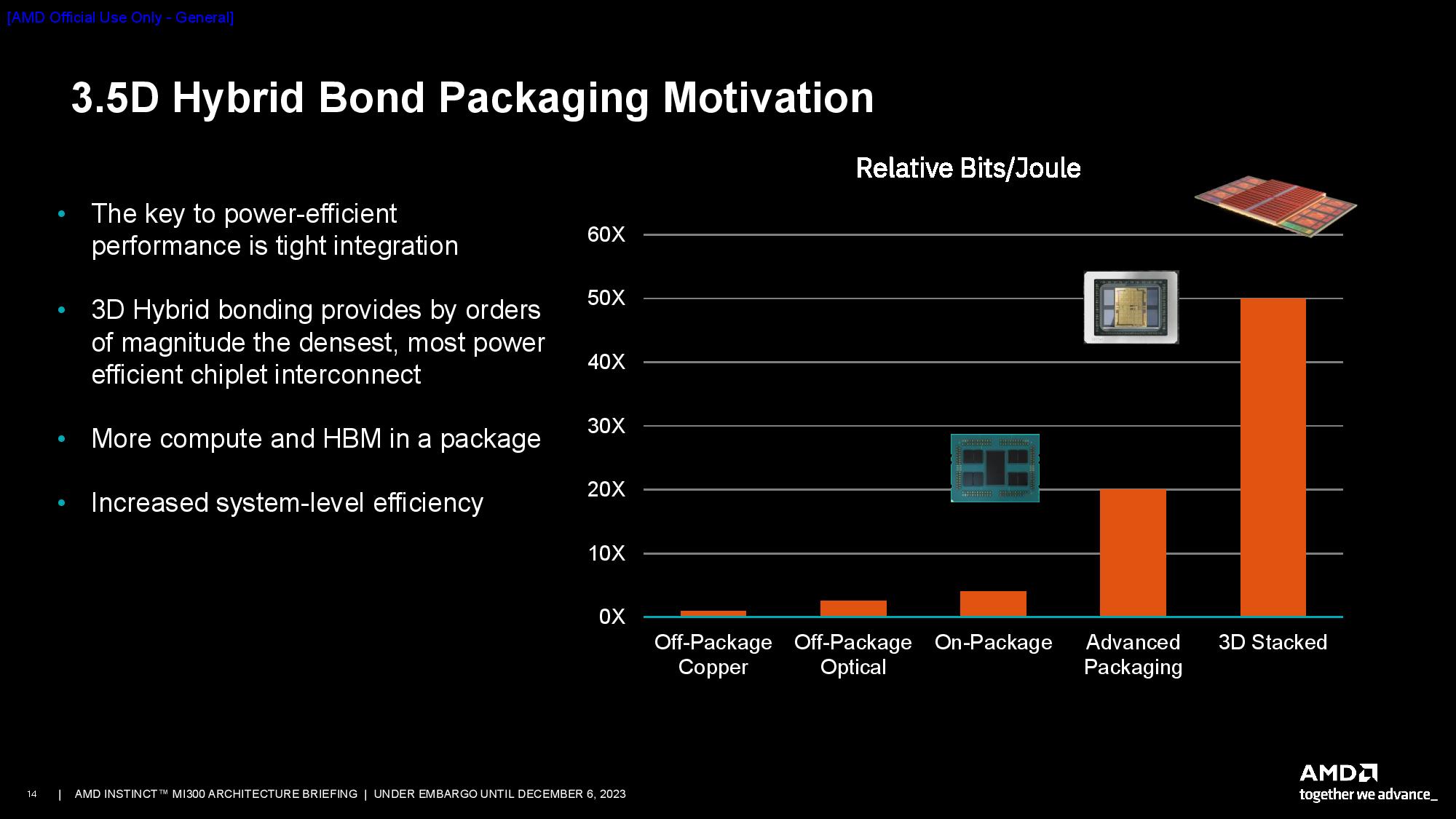

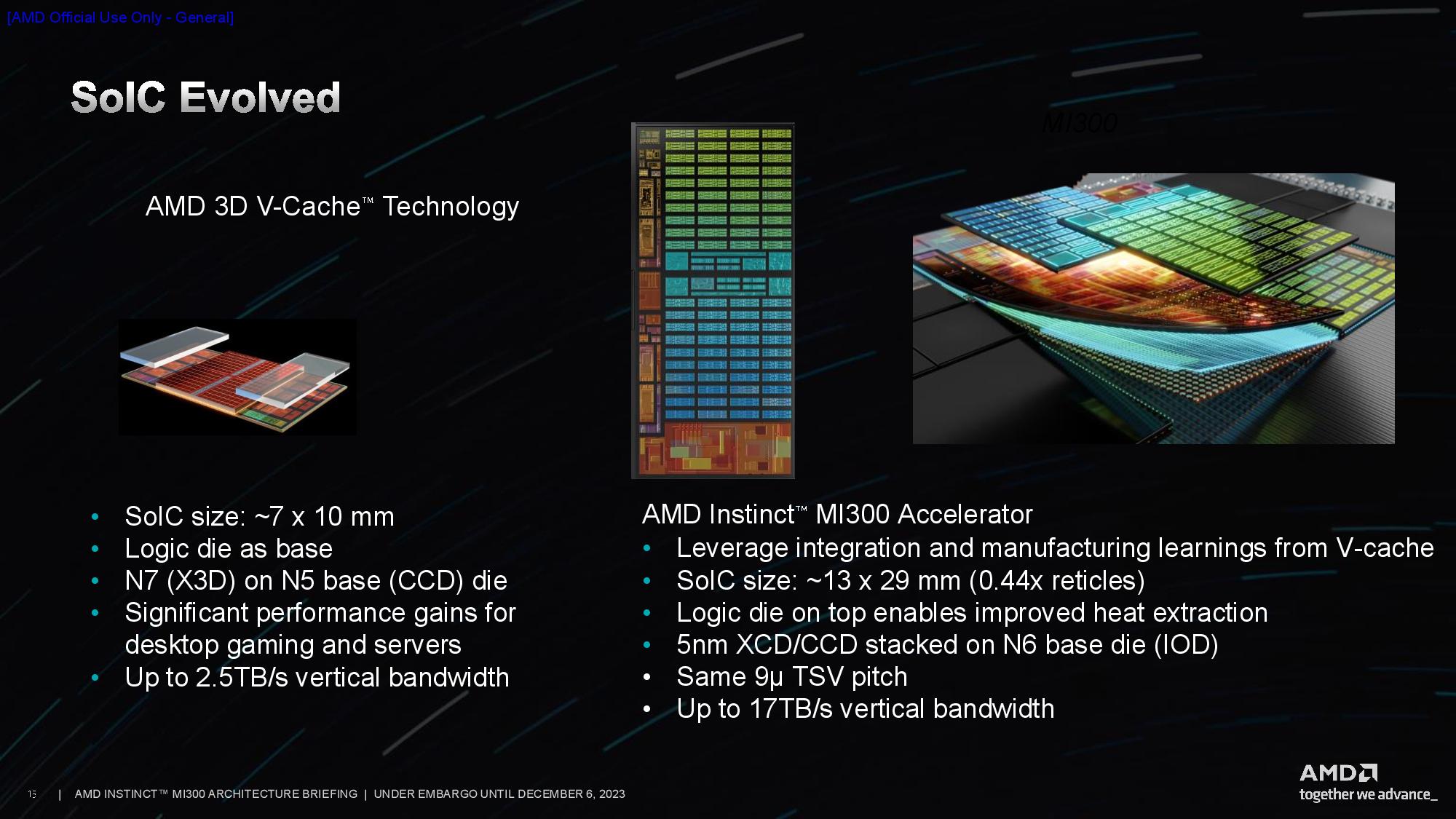

AMD determined that 3D hybrid bonding (SoIC) was the only realistic path to providing enough bandwidth between the compute units and the I/O Die. The company has plenty of experience with this technology; it's already shipped in millions of 3D V-Cache-equipped PC processors.

The company's long experience with this now-mature hybrid bonding technology gave it the confidence to move forward with it in the MI300 processors. In contrast to AMD's consumer PC line of chips, which is comprised of both 3D V-Cache and standard models, the MI300 processors represent the first time the company has been completely reliant upon the technology for an entire product stack.

In aggregate, the SoIC connections provide up to 17 TB/s of vertical bandwidth across the various 3D-stacked units. The SoIC size measures 13x29mm.

The cutaway in the final slide in the album shows the complexity and beauty of the 3.5D packaging approach. It illustrates how the package is built up from the bottom with an organic substrate, the CoWos passive silicon interposer above it with metal routing and TSVs, and then the 3D-stacked IOD and XCD with hybrid bonding (9-micron pitch). The massive copper bump that mates the organic substrate to the silicon interposer (bottom) serves to highlight just how incredibly small and dense the barely-visible hybrid bonded connections are between the top and bottom die of the 3D-stacked portion (near the top of the chip).

The hybrid bonding technique requires thinning the die to expose the TSVs so they can be mated. As a result, AMD has to employ silicon shims at the top of the package to maintain structural integrity, just like its other 3D V-Cache-equipped processors.

AMD's Senior Vice President, AMD Corporate Fellow, and Product Technology Architect Sam Naffziger walked us through some of the challenges the team encountered during the design phases.

Clever IP reuse has been a cornerstone of AMD's chiplet strategy, and the MI300 was no exception to this policy. The MI300 team didn't have the resources to build an entirely new CPU CCD for MI300, so they asked the company's CCD team to add the 3D interface (TSVs) to the EPYC CCD early in the design phase. Amazingly, the engineering teams found a way to cram the connections in between the existing GMI interfaces.

In the first slide, you can see the two small blue dots that represent the total area of the TSVs, while the large orange rectangular blocks flanking the dots are the standard GMI3 interface for 2.5D packaging. This illustrates just how incredibly dense and area-efficient the SoIC tech is. AMD also added some gates, switches, and multiplexors to allow signals to be rerouted from the GMI3 interface to the 3D interface.

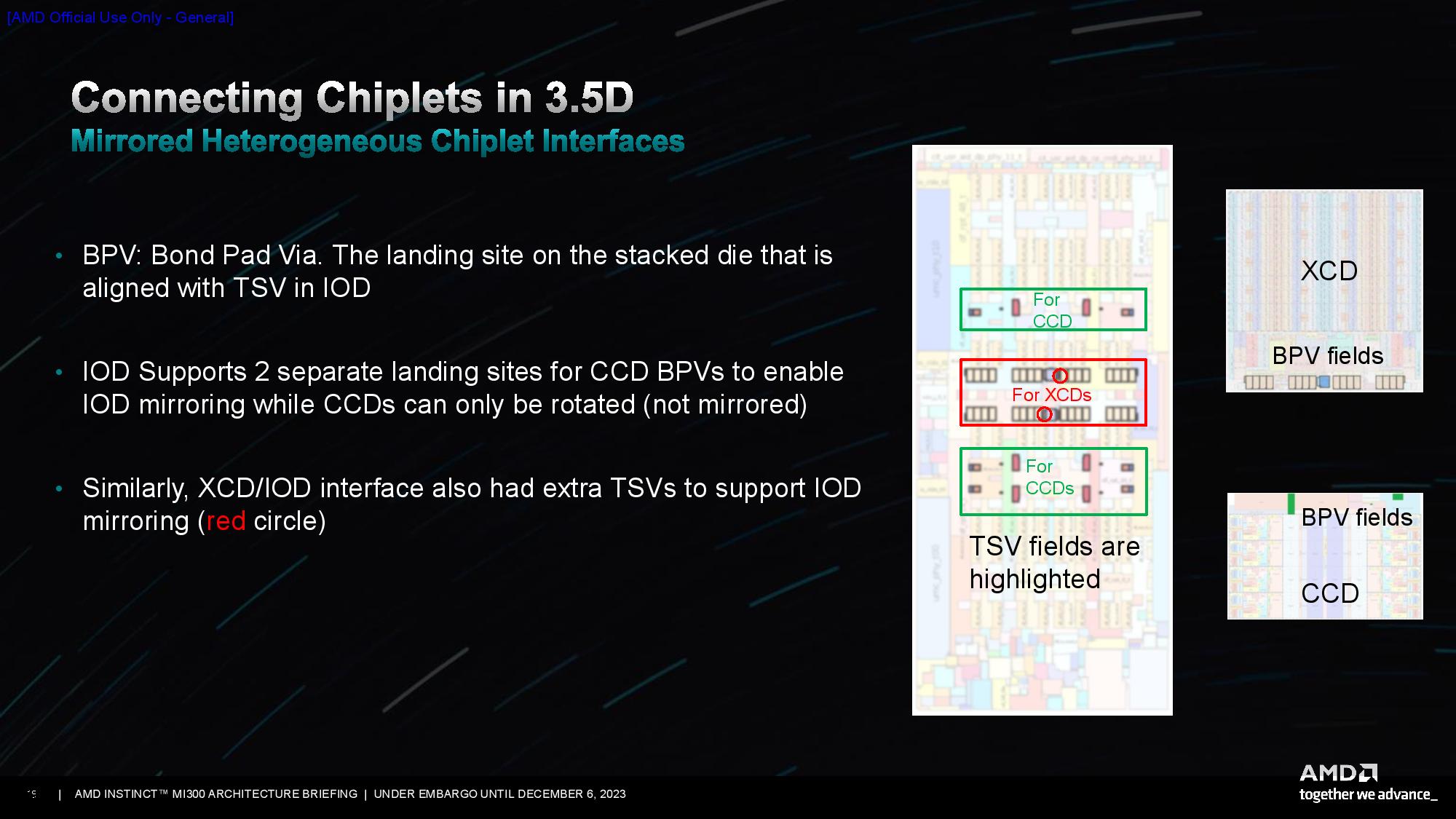

AMD created mirrored versions of the I/O Die to ensure they could be rotated into the correct positions while ensuring the memory controllers and other interfaces in the XCD were still aligned correctly. The engineering teams designed the interface/signals and power connections symmetrically, thus allowing the chiplets to be rotated, as seen in the second image.

However, while AMD designed the I/O Die from the ground up specifically for MI300, the team had to employ the existing CCD design from EPYC. They didn't want to create a mirrored mask set for the CCDs, typically required in these types of designs to ensure proper interface alignments, because it adds cost and complexity to the design. However, two of the CCDs needed to be rotated 180 degrees to ensure proper alignment. However, the CCDs' external interfaces were designed asymmetrically, thus presenting a challenge.

As seen in the third slide, AMD overcame this challenge by adding some redundancy to the bond pad via (BPV) connection points on the I/O Die, allowing the CCDs to be merely rotated instead of mirrored.

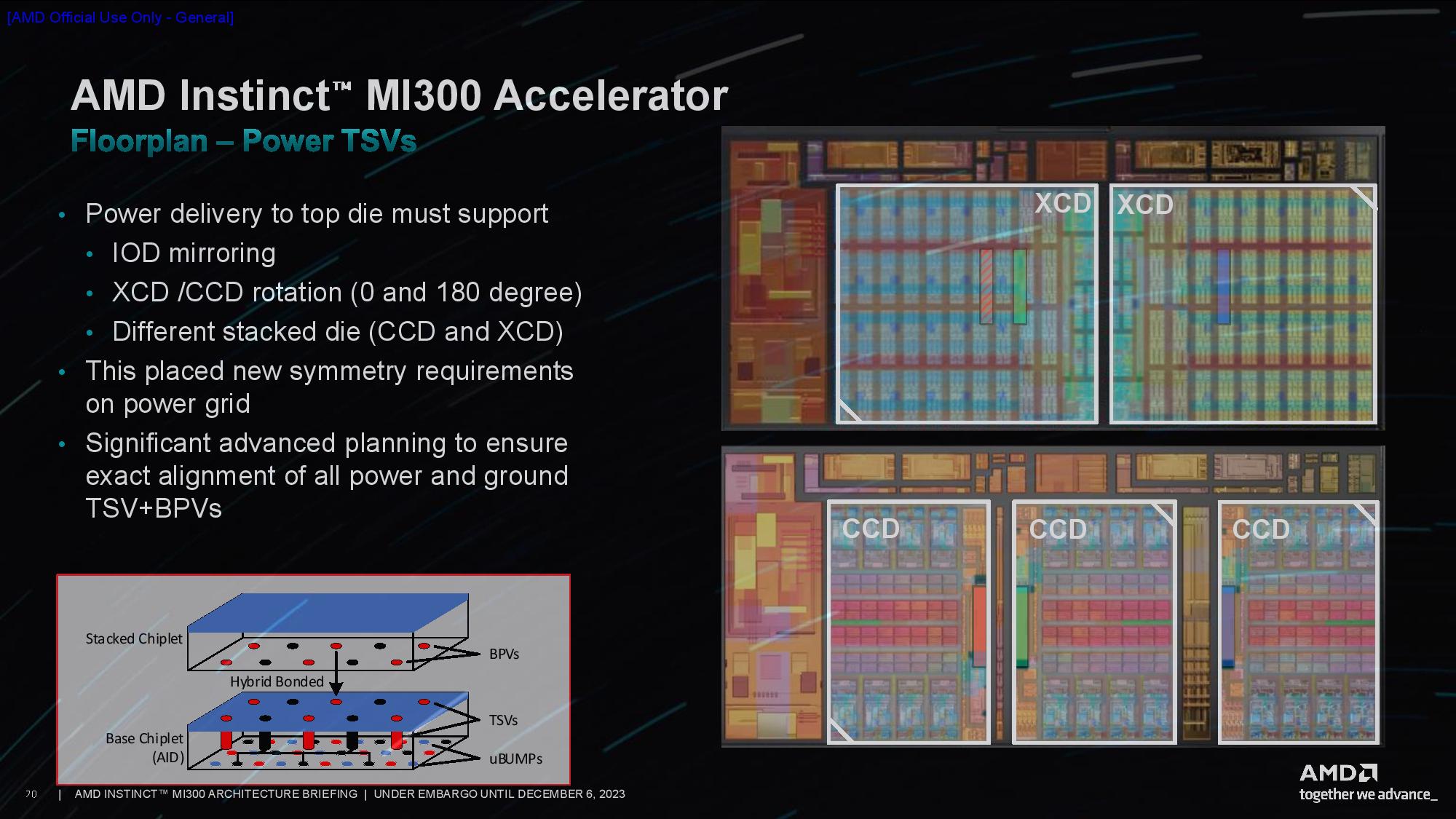

Power delivery remained a challenge, though. TSVs are incredibly small copper plugs, and AMD needed to plumb in hundreds of amps of power to the compute die that sits atop the I/O Die. The skinny TSVs aren't well-suited for that endeavor, so they require a tremendous number of connections to feed the power. AMD designed a new power grid to overcome this issue, and it met the IR drop targets and didn't exceed current density limits.

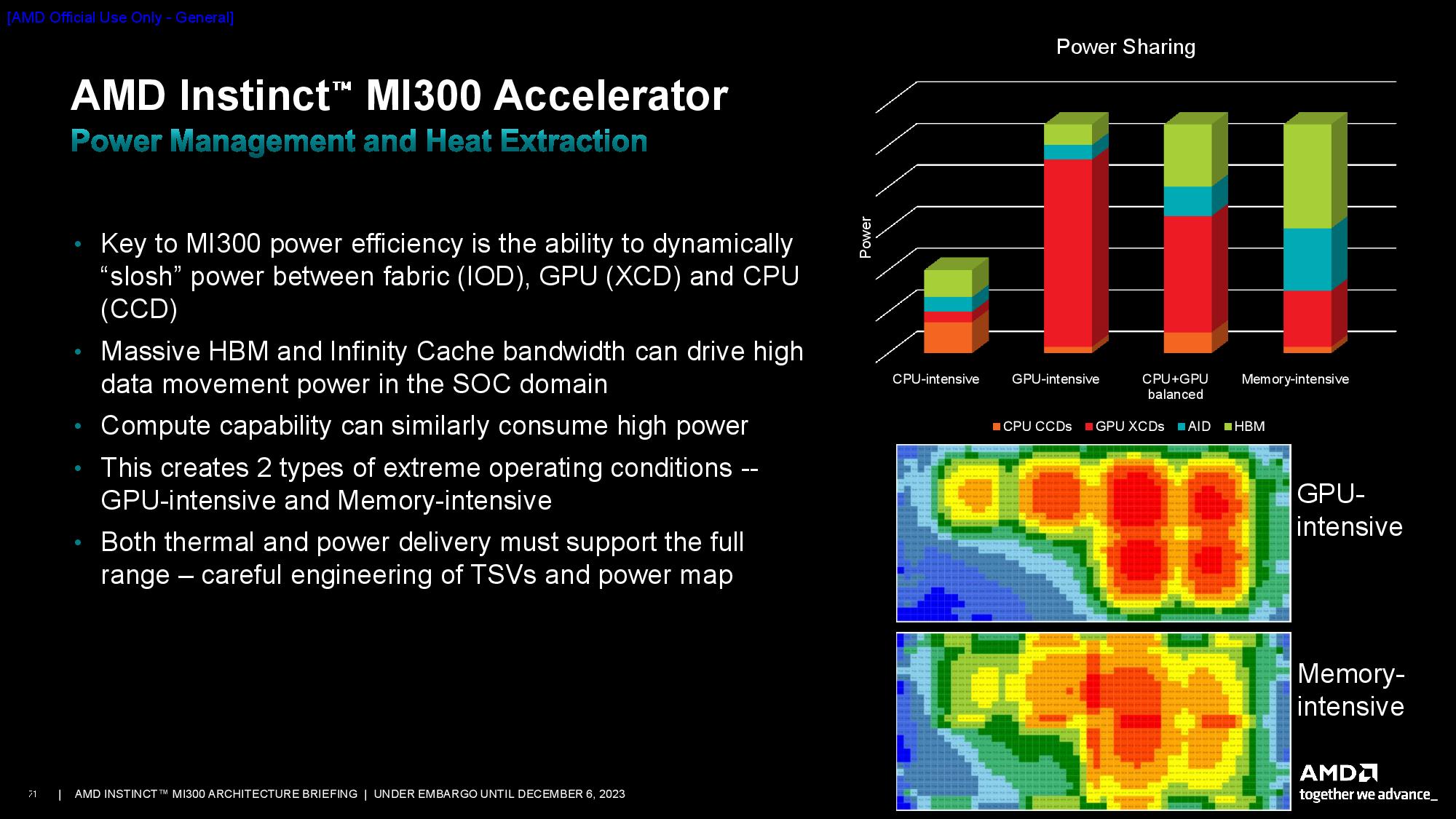

Finally, the design presented several new thermal challenges due to the 3D-stacked design. Using thermal modeling, AMD identified two types of extreme operating conditions - memory intensive and GPU intensive - and then leveraged its tried-and-true tactic of dynamically shifting power between units based on usage patterns to help alleviate those issues.

And thus, an incredible processor was born. AMD's MI300 presents the first real challenge to Nvidia's performance dominance in AI and HPC workloads in quite some time, and AMD insists that it has the production capacity to meet demand. That's a competitive advantage in these times of GPU shortages and will surely spur rapid industry uptake. AMD is shipping the MI300 processors to its partners now.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

bit_user Reply

No. CDNA has no rendering hardware and this SoC will have no display engine for driving a monitor.elforeign said:Yes, I have to. But can it play Crysis?

I mean, you could add a dGPU and just use the CPU cores like a normal CPU, but I doubt that's what you meant. -

bit_user This is literally "all the things" AMD has been working on & talking about for more than a decade.Reply

Heterogeneous processing (i.e. CPU + GPU)

HBM

Chiplets

Infinity Link

3D V-Cache

It's taken a while to come together, but it'll be interesting to see how it competes against Nvidia's Grace+Hopper superchips.

On that front, I can already point out one advantage in Nvidia's column. Their H200 supports almost as much HBM, while Grace supports 512 GB. So, they have a net win on memory capacity, even if the bulk of it is just LPDDR5X @ 480 GB/s. Perhaps the MI300 has a DDR5 controller, but I don't see it called out in the slides.

I guess an elephant in the room is pricing - both short-term and long-term. I can easily believe in AMD selling this at or below the current street price of Nvidia's solution, but with what margins? If Nvidia ever catches up to demand or perhaps loses its "preferred" status, how do the price floors compare.

Looking in Intel's direction, I see a lot here reminding me of Ponte Vecchio. While the latter is even more technically impressive (i.e. from a fabrication standpoint), AMD took the more sane approach of gradually building up to this point over generations of products introducing each of these technologies. Hopefully, that means they have better yields and fewer issues with these products. -

Blessedman The market has only one destination, and this is it. Fully integrated SoC, zero support chips, just a board with a chip and connectors, and at this point it will probably be nothing but usb-c ports (or whatever may be the best solution at the time). Idiot proof! Just in the nick of time!Reply -

bit_user Reply

Well, this is probably meant to sit on an OCP OAM daughter card, similar to Nvidia's SXM boards.Blessedman said:The market has only one destination, and this is it. Fully integrated SoC, zero support chips, just a board with a chip and connectors, and at this point it will probably be nothing but usb-c ports (or whatever may be the best solution at the time). Idiot proof! Just in the nick of time!

(H100 on SXM5)Note the relative size of the VRM to the H100 and its HBM!

: D

Here's an AMD MI250X on an OAM card that seems to be resting in place (screws missing), in a host system.

In both cases, you'd obviously run them with heatsinks installed.

As for I/O, note the PCIe and Infinity Fabric links, in this diagram:

Both are key for scaling up to handle large training workloads. I expect PCIe/CXL will be used to access storage and additional memory pools, at least the latter of which will need to reside in the same box. -

Roland Of Gilead Reply

They were making a joke!bit_user said:No. CDNA has no rendering hardware and this SoC will have no display engine for driving a monitor.

I mean, you could add a dGPU and just use the CPU cores like a normal CPU, but I doubt that's what you meant.

Edit: Probably meant something like this! - https://bit-tech.net/news/tech/cpus/the-threadripper-3990x-can-run-crysis-without-a-gpu/1/#:~:text=Remember%20back%20in%20the%20day,the%20AMD%20Ryzen%20Threadripper%203990X. -

bit_user Reply

I get the joke, but the product was described by the article as an APU and not everyone is probably aware that CDNA has no rendering hardware. So, I was both playing along and also trying to spread some useful information.Roland Of Gilead said:They were making a joke! -

Rob1C Meaning: https://knowyourmeme.com/memes/but-can-it-run-crysis, answer: https://www.systemrequirementslab.com/cyri/requirements/crysis/10652 - so, in a properly confirmed system, yesReply

The real (newest) question is: Can it run Alan Wake 2.