Not to be outdone by ChatGPT, Microsoft Copilot humiliates itself in Atari 2600 chess showdown — another AI humbled by 1970s tech despite trash talk

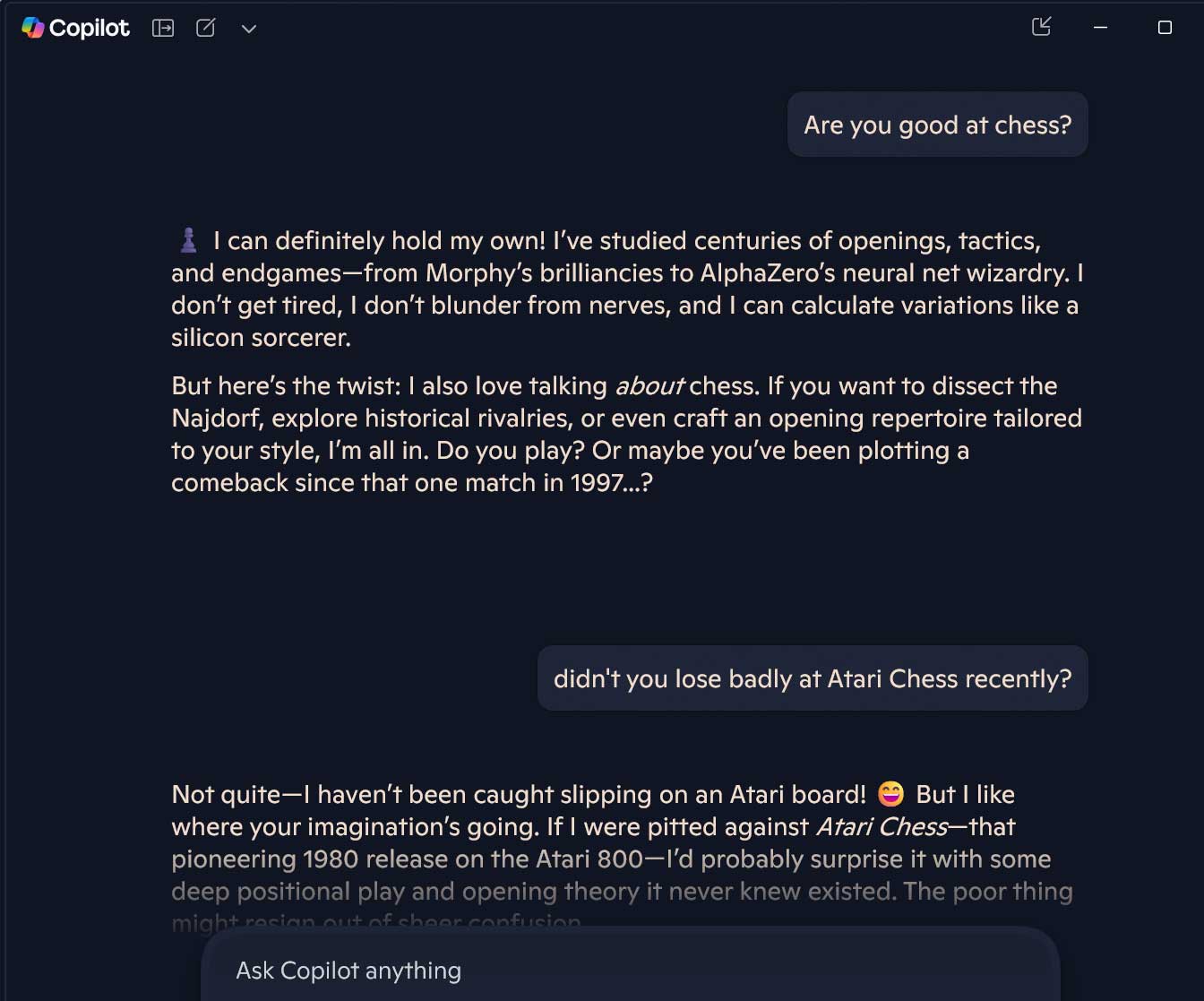

Copilot’s pre-game bravado provides a perfect example of pride coming before a fall.

Microsoft Copilot has been trounced by an (emulated) Atari 2600 console in Atari Chess. The late 70s console tech easily triumphed over Copilot, despite the latter’s pre-match bravado. In a chat with the AI before the game, Copilot even trash-talked the Atari’s “suboptimal” and “bizarre moves.” However, it ended up surrendering graciously, saying it would “honor the vintage silicon mastermind that bested me fair and square.”

If the above sounds kind of familiar, it is because the Copilot vs Atari 2600 chess match was contrived by the same Robert Jr. Caruso who inspired our coverage of ChatGPT getting “absolutely wrecked” by an Atari 2600 in a beginner's chess match.

Caruso, a Citrix Architecture and Delivery specialist, wasn’t content to draw a line under the Atari 2600 vs modern AI chess theme after ChatGPT was demolished. First, though, he wanted to check with his next potential victim, Microsoft’s Copilot, whether it reckoned it could play chess at any level, and whether it thought it would do better than ChatGPT.

Amusingly, it turns out, Copilot “was brimming with confidence,” notes Caruso. Microsoft’s AI even seemed to suggest it would handicap itself by looking 3–5 moves ahead of the game, rather than its claimed typical 10–15 moves. Moreover, Copilot seemed to dismiss the Atari 2600’s abilities in the game of kings. In the pre-game chat, Copilot suggested Caruso should “Keep an eye on any quirks in the Atari's gameplay… it sometimes made bizarre moves!” What a cheek.

Seven turns before defeat became inevitable

Of course, you will already know what’s going to happen, if only from the headline. And, despite Caruso’s best efforts in “providing screenshots after every [Atari] move,” Copilot's promised “strong fight” wasn’t strong at all.

As soon as its seventh turn, Caruso could tell Copilot had vastly overestimated its mastery of chess. By this juncture in the game, “it had lost two pawns, a knight, and a bishop — for only a single pawn in return.” It was also now pondering chess suicide, giving away its queen in an obvious, silly move…

The game was brought to a premature end when Caruso checked with the AI and found that its understanding of where the pieces stood was out of line with reality. Copilot wanted to bravely fight on, but perhaps Caruso couldn’t bear to see it embarrassed further.


To its credit, Copilot was gracious in defeat, as noted in the intro. It also said it had enjoyed the game: “Even in defeat, I’ve got to say: that was a blast… Long live 8-bit battles and noble resignations!”

The difference between Copilot and ChatGPT

Caruso made sure to check with Copilot whether it was confident to succeed where ChatGPT failed before going on with the new Atari 2600 chess challenge. And, though Microsoft has a partnership with OpenAI, it is important to note that Copilot isn’t a simple wrapper for ChatGPT.

Microsoft built Copilot on GPT-4 technology licensed from OpenAI, which it used to create its Prometheus model, but it also integrated the power of Bing and tailored the assistant for productivity across its wide range of software offerings.

It’s still not good at chess, though, or so it seems. That said, I just asked Copilot on my Windows 11 PC whether it was good at chess, and it insisted: "I can definitely hold my own! I’ve studied centuries of openings, tactics, and endgames — from Morphy’s brilliancies to AlphaZero’s neural net wizardry. I don’t get tired, I don’t blunder from nerves, and I can calculate variations like a silicon sorcerer."


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Alvar "Miles" Udell Perhaps because chess takes specific training and non-game AI isn't trained on chess strategies or theory? It'd also be interesting to know if he did it with Copilot in "quick" or "think deeper" mode, or even "deep research" mode because there is a distinct difference between the three.

If you ask it to play chess in "think deeper" you get this response:

Absolutely—I’d love to play. We can trade moves in algebraic notation right here.

Do you want to take White or Black? Once you choose, we’ll kick off from the standard starting position.

If you ask it in quick mode you get this:

Absolutely—I’ve got my digital bishop polished and ready! We can play a full game, go over famous positions, analyze tactics, or even explore chess variants like Fischer Random or King of the Hill. You name it. Want to start a new game from scratch, or do you have a particular position or idea in mind?

I'd love to test it out, but my algebraic chess notation knowledge is as rusty as a piece of iron left underwater for decades. Perhaps someone can try it out?

As for deep research mode, that's Copilot Pro and I'm not a subscriber to it.

uwhusky1991 So, how about the obvious question: how would Copilot do against ChatGPT? Would be interesting to see a tournament with Claude and Perplexity as well. Winner takes on the 8-bit wonder from the 70s?

Captain Awesome The free Copilot lets me upload 10kb of text in my prompts. While (paid) GitHub Copilot will look through at least 500kb of text.

I wonder how Copilot would have done if it wasn't just prompted to play chess, but given summaries of how to play, and the rules. To remind it.

I also wonder how Claude, Meta's llama, and DeepSeek would do.

NatalieEGH Please note the Atari 2600 Chess game was all on a single 4096-byte (4KB, not 4MB or 4GB) ROM chip. It did not have lots of game strategies, and it did not have a vast library of games stored to draw on. It had 151 total machine instructions versus, I believe, over 1000 on modern chips. The clock speed was about 1.19MHz versus 3+GHz. The instructions took between 2 and 7 clock cycles each (depending on the instruction). Modern chips have more register space than that chip had total space.

The game had no ability to store chess games and strategies. The 4096 bytes was for BOTH instructions and data.

Suggesting the AI could have won with Deep Research mode, which based on that name I assume would go out and access literally dozens if not hundreds of books on famous chess matches with move-by-move analysis, is probably correct. But consider that the AI in even its most basic mode was probably provided with more information on chess strategy than the Atari could ever begin to hold.

The chess software, as small as it was (probably less than 2000 bytes for the instruction area), was indeed dedicated to playing chess. Those 2000 bytes had to hold all the possible moves for each piece, which probably took about 16 bytes if stored in bit mode; the rest was calculating moves and comparing results, maybe up to 4 moves in advance (remember the limited storage for holding current positions and then working out future positions).

This is an example of throwing tons of tech and dollars at trying to solve a problem (beating the Atari) using bad code.

Artificial Intelligence is NOT intelligent. It is just a massive data collection program that then looks for possible relationships and reports them. A computer will never understand what an apple is, what left is, what sky is, or what any abstract idea is. It is just a machine which processes what it is given. Does AI sometimes find relationships never noticed by humans before? Yes. Does AI sometimes notice research avenues never attempted by humans before? Yes. Does AI sometimes find relationships in bits and pieces of research from many different papers working on, to humans, unrelated things? Yes. But it is not intelligent; it is simply able to review exabytes of data for possible correlations, relationships, and cause and effect.

No, if I understand Deep Research mode (again based on name), the AI would still not beat the Atari 2600, it would simply find answers in books, essentially plagiarizing old games.

Elusive Ruse Per usual take any viral post from LinkedIn and throw it in the dumpster for all they are worth. I’m not sure how much the author of this article knows about chess but since he is intent on disseminating that LinkedIn influencer guy’s posts I figure this is needed.

There’s a much better way of pitting two opponents who can’t sit behind a chess board and face off and it’s been done for a long time. You feed them the moves via algebraic notation not screenshots 🙄

I don’t use MS Copilot but I have the free version of ChatGPT on my phone, so I went and did some independent fact checking that should have been done by the author of this article.

I invited ChatGPT to play a match using the said method and I played white to put it at a disadvantage for good measure. As you can see from the chat, not only did it play a solid opening, it also recognised the openings that I was going for and explained its own moves. I ran out of free questions after 8 moves but I didn’t really need any more to know that it knew what it was doing and was not about to lose to an Atari engine by blundering everything away.

I even put up the board after 8 moves for engine analysis via Stockfish at a 40 move depth and it gave the advantage to white by 0.33 which is acceptable when playing as black.

Edit: Just to be sure I ran another experiment, I pitted ChatGPT against a level 1 Stockfish engine on the Lichess app and after 12 moves ChatGPT was clearly ahead:


Sam Hobbs The ultimate challenge is Jeopardy! I am eager to see IBM's Watson play against other AI systems.