VIDEO: Skinput Uses Your Body as a Touchscreen

Microsoft recently showed off a muscle-controlled interface that it was developing with the help of engineers at the University of Washington and the University of Toronto. Now the company has teamed up with a Carnegie Mellon student to develop Skinput, a technology that turns your skin into an input device.

Skinput is described as a bio-acoustic sensing technique that allows the body to be used as an input surface. Redmond and Carnegie Mellon developed a special armband that is worn on the user's bicep and senses impacts or pressure on the skin, as well as the acoustic signals those impacts create. Variations in bone density, size, and mass, along with the different acoustics of soft tissues and joints, make different locations on the body acoustically distinct. Software listens for impacts on the skin and classifies each one by where it landed.
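To make the "listen, then classify" idea concrete, here is a toy Python sketch. It is not the Skinput team's method: the sensor layout, the amplitude threshold, the per-sensor RMS features, and the nearest-centroid classifier are all illustrative assumptions, and every function name is hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence

# Hypothetical input: a window of frames, each frame holding one reading per armband sensor.
Window = Sequence[Sequence[float]]

def detect_impact(window: Window, threshold: float) -> bool:
    """Assume a tap occurred if any sensor's reading magnitude exceeds the threshold."""
    return any(abs(v) > threshold for frame in window for v in frame)

def features(window: Window) -> List[float]:
    """Per-sensor RMS energy, a crude acoustic 'fingerprint' of where the tap landed."""
    n_sensors = len(window[0])
    return [
        math.sqrt(sum(frame[s] ** 2 for frame in window) / len(window))
        for s in range(n_sensors)
    ]

def train(examples: Dict[str, List[Window]]) -> Dict[str, List[float]]:
    """Average the feature vectors recorded for each labeled tap location."""
    centroids = {}
    for label, windows in examples.items():
        vecs = [features(w) for w in windows]
        centroids[label] = [sum(col) / len(vecs) for col in zip(*vecs)]
    return centroids

def classify(window: Window, centroids: Dict[str, List[float]],
             threshold: float = 0.5) -> Optional[str]:
    """Return the closest known location, or None if no impact was detected."""
    if not detect_impact(window, threshold):
        return None
    f = features(window)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))

# Made-up two-sensor calibration data for two tap locations.
examples = {
    "wrist": [[[0.9, 0.1], [0.8, 0.2]]],
    "palm": [[[0.1, 0.9], [0.2, 0.8]]],
}
centroids = train(examples)
print(classify([[0.85, 0.15], [0.9, 0.1]], centroids))  # wrist
print(classify([[0.1, 0.1], [0.05, 0.0]], centroids))   # None (too quiet to count as a tap)
```

The point of the sketch is only that distinct locations produce distinct signal patterns across sensors, so a classifier trained on a few labeled taps can tell them apart; a real system would use richer features and a stronger classifier.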

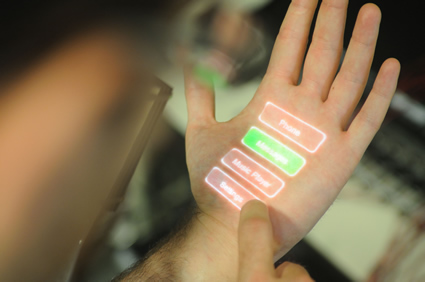

The video below demonstrates several ways to use your skin as a controller: playing Tetris with your hand as a control pad, or controlling an iPod by tapping your fingers. The engineers also built a pico projector into the armband, so virtual 'buttons' can be beamed onto the user's forearm or hands.

Check out the video to see the technology in action.

[Update] Thanks to Carnegie Mellon's Chris Harrison for pointing us to a clearer video. A third-year Ph.D. student in the Human-Computer Interaction Institute at CMU, Chris worked with Desney Tan and Dan Morris (both from Microsoft Research) on Skinput. His research focuses on new or clever interaction techniques and input technologies that allow for better control of smaller devices. For more on Skinput and Harrison's other projects, check out his website.

*Image via Chris Harrison @ chrisharrison.net


Jane McEntegart is a writer, editor, and marketing communications professional with 17 years of experience in the technology industry. She has written about a wide range of technology topics, including smartphones, tablets, and game consoles. Her articles have been published in Tom's Guide, Tom's Hardware, MobileSyrup, and Edge Up.


victomofreality YouTube's fault, not Tom's. Just checked, and even the home page isn't loading on any of my computers.

sciggy It's up now. Pretty damn cool. There are certainly some interesting input devices coming out in the near future.

dman3k First reaction: How can this be used in Porn?

Even worse...

Think Torture!

Imagine the things you could do with a device like this, such as recording a woman giving birth, then having a guy wear a device that lets him experience what that woman went through. Pure torture for the guy, especially if you could amplify the experience. Or how about someone getting skinned alive? You name the torture; it could be recorded and used to torture victims without doing anything to them, other than the original torture, of course. The CIA would love such a device.

N.Broekhuijsen (replying to Renegade_Warrior)

Did you even read the article or see the video???

I think it's awesome, even though it could easily be replaced with a webcam or infrared beam attached to the arm for tracking the finger. That said, this controller could be placed inside the human body, while a camera cannot.


Judguh The first thing I thought of when I saw that device strapped to that guy's arm was a primitive form of the biotic moves in Mass Effect :)